簡單來說GPDB是一個分散式資料庫軟體,其可以管理和處理分佈在多個不同主機上的海量數據。對於GPDB來說,一個DB實例實際上是由多個獨立的PostgreSQL實例組成的,它們分佈在不同的物理主機上,協同工作,呈現給用戶的是一個DB的效果。Master是GPDB系統的訪問入口,其負責處理客戶端的連接及 ...

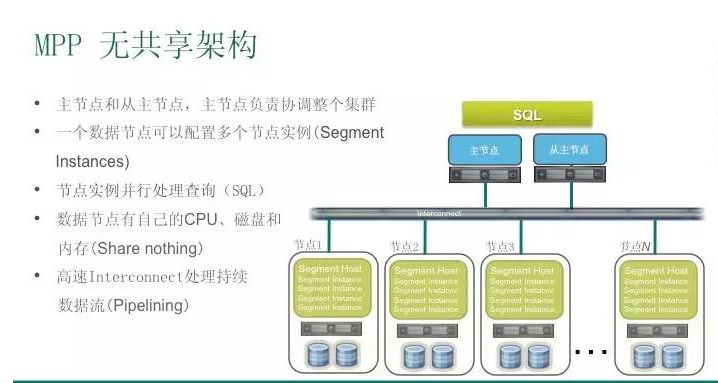

簡單來說GPDB是一個分散式資料庫軟體,其可以管理和處理分佈在多個不同主機上的海量數據。對於GPDB來說,一個DB實例實際上是由多個獨立的PostgreSQL實例組成的,它們分佈在不同的物理主機上,協同工作,呈現給用戶的是一個DB的效果。Master是GPDB系統的訪問入口,其負責處理客戶端的連接及SQL 命令、協調系統中的其他Instance(Segment)工作,Segment負責管理和處理用戶數據。

環境準備:

操作系統:CentOS Linux release 7.6.1810 (Core) 64位

master 1台(架構圖中的主節點),Standby 1台(架構圖中的從節點),Segment 2台。共4台伺服器。

一、Master主機 Root 用戶上操作

1. 修改/etc/hosts文件,添加下麵內容(註:4台伺服器相同的配置)

vim /etc/hosts

192.168.18.130 gp-master

192.168.18.131 gp-standby

192.168.18.132 gp-node1

192.168.18.133 gp-node2

2. 伺服器關閉selinux,防火牆4台伺服器相互開放,測試環境可以直接先關閉防火牆。(註:4台伺服器相同的配置)

關閉Firewalld

systemctl stop firewalld

systemctl disable firewalld

永久關閉Selinux

vim /etc/selinux/conf

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

註:查看Selinux運行狀態:getenforce,CLI界面非永久生效設置SeLinux:setenforce 0(0-1對應關閉和開啟)

3. 操作系統參數設置

vim /etc/sysctl.conf (註:4台伺服器相同的配置)

kernel.shmmax = 500000000

kernel.shmmni = 4096

kernel.shmall = 4000000000

kernel.sem = 250 512000 100 2048

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

net.ipv4.tcp_syncookies = 1

net.ipv4.ip_forward = 0

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.ipv4.conf.default.arp_filter = 1

net.core.netdev_max_backlog = 10000

vm.overcommit_memory = 2

kernel.msgmni = 2048

net.ipv4.ip_local_port_range = 1025 65535

vim /etc/security/limits.conf (註:4台伺服器相同的配置)

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

磁碟預讀參數及 deadline演算法修改 (註:4台伺服器相同的配置)

blockdev --setra 65536 /dev/sda

echo deadline > /sys/block/sda/queue/scheduler

註:磁碟盤符sda需根據自己的實際情況進行配置

軟體下載地址:https://network.pivotal.io/products/pivotal-gpdb,下載:greenplum-db-5.21.1-rhel7-x86_64.rpm

在Master主機上安裝GP二進位文件,也就是主機名是mdw的伺服器。(註:在master上安裝即可,後面通過批量的方法安裝剩下的伺服器)

rpm -ivh greenplum-db-5.21.1-rhel7-x86_64.rpm

註:預設安裝目錄:/usr/local

在Master上添加gpadmin用戶

adduser gpadmin

echo gpadmin | passwd --stdin gpadmin

註:設置密碼為了後面gpssh-exkeys -f hostfile_allhosts 使用

在Master上給gpadmin用戶提權

[root@gp-master ~]# visudo

gpadmin ALL=(ALL) ALL

gpadmin ALL=(ALL) NOPASSWD:ALL

在Master主機上賦予gpadmin用戶Greenplum文件夾的的許可權

chown -R gpadmin.gpadmin /usr/local/greenplum-db*

二、Master 主機 Gpadmin用戶上操作

準備用於批量安裝軟體以及後續集群的初始化文件,hostfile_allhosts,hostfile_segments,hostfile_mshosts,存放到/home/gpadmin

su - gpadmin

vim hostfile_allhosts

gp-master

gp-standby

gp-node1

gp-node2

vim hostfile_segments

gp-node1

gp-node2

vim hostfile_mshosts

gp-master

gp-standby

設置各主機之間免密登錄

gpssh-exkeys -f hostfile_allhosts

註:需輸入gpadmin用戶的密碼,此處為:gpadmin

設置用於安裝Greenplum的文件夾許可權

gpssh -f hostfile_allhosts

=> sudo chown gpadmin.gpadmin /usr/local

=> exit

創建及賦權master/standby主機元數據存儲目錄

gpssh -f hostfile_mshosts =>sudo mkdir /data/greenplum_data/gpmaster =>sudo chown -R gpadmin.gpadmin /data

=>exit

創建及賦權Segments主機數據存儲目錄

gpssh -f hostfile_segments =>sudo mkdir /data/greenplum_data/{primary,mirror}

=>sudo chown -R gpadmin.gpadmin /data

=>exit

批量安裝軟體(GP)

cd /home/gpadmin/

source /usr/local/greenplum-db/greenplum_path.sh

gpseginstall -f hostfile_allhosts -u gpadmin -p gpadmin

設置NTP同步

Yum下載安裝NTP伺服器,已安裝的可以略過

sudo yum install ntp -y

若出現如下報錯,可看下一步解決方法

There was a problem importing one of the Python modules

required to run yum. The error leading to this problem was:

No module named yum

Please install a package which provides this module, or

verify that the module is installed correctly.

It's possible that the above module doesn't match the

current version of Python, which is:

2.7.13 (r266:84292, Jan 22 2014, 09:37:14)

[GCC 4.4.7 20120313 (Red Hat 4.4.7-4)]

If you cannot solve this problem yourself, please go to

the yum faq at:

http://yum.baseurl.org/wiki/Faq

View Code

解決方法:

unset PYTHONHOME

unset PYTHONPATH

unset LD_LIBRARY_PATH

再進行yum安裝之後,再修改回來,使得GP能正常使用

source /usr/local/greenplum-db/greenplum_path.sh

註:報錯原因:在安裝GP集群之後,會在master節點中的環境變數中會增加 PYTHONHOME,PYTHONPATH,LD_LIBRARY_PATH幾項,並且會修改原本的path。

補充:LD_LIBRARY_PATH 該環境變數主要用於指定查找共用庫(動態鏈接庫)時除了預設路徑之外的其他路徑。

在每個Segment主機,編輯/etc/ntp.conf文件。設置第一個server參數指向Master主機,第二個server參數指向Standby主機。如下麵:

sudo vim /etc/ntp.conf

server gp-master prefer

server gp-standby

在Standby主機,編輯/etc/ntp.conf文件。設置第一個server參數指向Master主機,第二個參數指向數據中心的時間伺服器。

sudo vim /etc/ntp.conf

server gp-master prefer

在Master主機,使用NTP守護進程同步所有Segment主機的系統時鐘。例如,使用gpssh來完成:

gpssh -f hostfile_allhosts -v -e 'ntpd'

輸出如下代表成功:

[root@gp-master gpadmin]# gpssh -f all_hosts -v -e 'ntpd'

[WARN] Reference default values as $MASTER_DATA_DIRECTORY/gpssh.conf could not be found

Using delaybeforesend 0.05 and prompt_validation_timeout 1.0

[Reset ...]

[INFO] login mdw

[INFO] login smdw

[INFO] login sdw1

[INFO] login sdw2

[ mdw] ntpd

[smdw] ntpd

[sdw1] ntpd

[sdw2] ntpd

[INFO] completed successfully

[Cleanup...]

配置Greenplum初始化文件

cp $GPHOME/docs/cli_help/gpconfigs/gpinitsystem_config /home/gpadmin/gpinitsystem_config

chmod 775 gpinitsystem_config

相關配置如下:

[gpadmin@gp-master ~]$ cat gpinitsystem_config

# FILE NAME: gpinitsystem_config

# Configuration file needed by the gpinitsystem

################################################

#### REQUIRED PARAMETERS

################################################

#### Name of this Greenplum system enclosed in quotes.

ARRAY_NAME="Greenplum Data Platform"

#### Naming convention for utility-generated data directories.

SEG_PREFIX=gpseg

#### Base number by which primary segment port numbers

#### are calculated.

PORT_BASE=40000

#### File system location(s) where primary segment data directories

#### will be created. The number of locations in the list dictate

#### the number of primary segments that will get created per

#### physical host (if multiple addresses for a host are listed in

#### the hostfile, the number of segments will be spread evenly across

#### the specified interface addresses).

declare -a DATA_DIRECTORY=(/data/greenplum_data/primary)

#### OS-configured hostname or IP address of the master host.

MASTER_HOSTNAME=k8s-master

#### File system location where the master data directory

#### will be created.

MASTER_DIRECTORY=/data/greenplum_data/gpmaster

#### Port number for the master instance.

MASTER_PORT=5432

#### Shell utility used to connect to remote hosts.

TRUSTED_SHELL=ssh

#### Maximum log file segments between automatic WAL checkpoints.

CHECK_POINT_SEGMENTS=8

#### Default server-side character set encoding.

ENCODING=UTF-8

################################################

#### OPTIONAL MIRROR PARAMETERS

################################################

#### Base number by which mirror segment port numbers

#### are calculated.

MIRROR_PORT_BASE=43000

#### Base number by which primary file replication port

#### numbers are calculated.

REPLICATION_PORT_BASE=34000

#### Base number by which mirror file replication port

#### numbers are calculated.

MIRROR_REPLICATION_PORT_BASE=44000

#### File system location(s) where mirror segment data directories

#### will be created. The number of mirror locations must equal the

#### number of primary locations as specified in the

#### DATA_DIRECTORY parameter.

declare -a MIRROR_DATA_DIRECTORY=(/data/greenplum_data/mirror)

################################################

#### OTHER OPTIONAL PARAMETERS

################################################

#### Create a database of this name after initialization.

DATABASE_NAME=testDB

#### Specify the location of the host address file here instead of

#### with the the -h option of gpinitsystem.

MACHINE_LIST_FILE=/home/gpadmin/hostfile_segments

View Code

運行初始化工具初始化資料庫

source /usr/local/greenplum-db/greenplum_path.sh

gpinitsystem -c gpinitsystem_config

初始化日誌:

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-Review options for gpinitstandby

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-The Master /data/master/gpseg-1/pg_hba.conf post gpinitsystem

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-has been configured to allow all hosts within this new

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-array to intercommunicate. Any hosts external to this

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-new array must be explicitly added to this file

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-Refer to the Greenplum Admin support guide which is

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-located in the /usr/local/greenplum-db/./docs directory

20160827:16:23:11:002458 gpinitsystem:mdw:gpadmin-[INFO]:-------------------------------------------------------

現在只有1個master,2個segment,沒有standby,那麼接下來把standby加入集群。

在Master伺服器上執行

gpinitstandby -s gp-standby

輸出如下:

[gpadmin@mdw ~]$ gpinitstandby -s smdw

20160827:16:59:24:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Validating environment and parameters for standby initialization...

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Checking for filespace directory /data/master/gpseg-1 on smdw

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master initialization parameters

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master hostname = mdw

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master data directory = /data/master/gpseg-1

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum master port = 5432

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master hostname = smdw

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master port = 5432

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum standby master data directory = /data/master/gpseg-1

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Greenplum update system catalog = On

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:- Filespace locations

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:------------------------------------------------------

20160827:16:59:25:023346 gpinitstandby:mdw:gpadmin-[INFO]:-pg_system -> /data/master/gpseg-1

Do you want to continue with standby master initialization? Yy|Nn (default=N):

> y

20160827:16:59:31:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Syncing Greenplum Database extensions to standby

20160827:16:59:31:023346 gpinitstandby:mdw:gpadmin-[INFO]:-The packages on smdw are consistent.

20160827:16:59:31:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Adding standby master to catalog...

20160827:16:59:31:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Database catalog updated successfully.

20160827:16:59:31:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Updating pg_hba.conf file...

20160827:16:59:37:023346 gpinitstandby:mdw:gpadmin-[INFO]:-pg_hba.conf files updated successfully.

20160827:16:59:39:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Updating filespace flat files...

20160827:16:59:39:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Filespace flat file updated successfully.

20160827:16:59:39:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Starting standby master

20160827:16:59:39:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Checking if standby master is running on host: smdw in directory: /data/master/gpseg-1

20160827:16:59:40:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Cleaning up pg_hba.conf backup files...

20160827:16:59:46:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Backup files of pg_hba.conf cleaned up successfully.

20160827:16:59:46:023346 gpinitstandby:mdw:gpadmin-[INFO]:-Successfully created standby master on gp-standby

View Code

查看啟動進程:

[gpadmin@gp-master ~]$ ps -ef | grep postgres

gpadmin 10975 1 0 00:57 ? 00:00:00 /usr/local/greenplum-db-5.21.1/bin/postgres -D /data/greenplum_data/gpmaster/gpseg-1 -p 5432 --gp_dbid=1 --gp_num_contents_in_cluster=2 --silent-mode=true -i -M master --gp_contentid=-1 -x 0 -E

gpadmin 10976 10975 0 00:57 ? 00:00:00 postgres: 5432, master logger process

gpadmin 10979 10975 0 00:57 ? 00:00:00 postgres: 5432, stats collector process

gpadmin 10980 10975 0 00:57 ? 00:00:01 postgres: 5432, writer process

gpadmin 10981 10975 0 00:57 ? 00:00:00 postgres: 5432, checkpointer process

gpadmin 10982 10975 0 00:57 ? 00:00:00 postgres: 5432, seqserver process

gpadmin 10983 10975 0 00:57 ? 00:00:00 postgres: 5432, ftsprobe process

gpadmin 10984 10975 0 00:57 ? 00:00:00 postgres: 5432, sweeper process

gpadmin 10985 10975 0 00:57 ? 00:00:05 postgres: 5432, stats sender process

gpadmin 10986 10975 0 00:57 ? 00:00:01 postgres: 5432, wal writer process

gpadmin 11279 10975 0 00:59 ? 00:00:00 postgres: 5432, wal sender process gpadmin 192.168.18.131(53573) streaming 0/C05A028

gpadmin 16800 16608 0 04:15 pts/0 00:00:00 grep --color=auto postgres

設置gpadmin用戶環境變數,Master,Standby都需設置。

vim /home/gpadmin/.bashrc

[gpadmin@gp-master ~]$ cat .bashrc

# .bashrc

# Source global definitions

if [ -f /etc/bashrc ]; then

. /etc/bashrc

fi

# Uncomment the following line if you don't like systemctl's auto-paging feature:

# export SYSTEMD_PAGER=

# User specific aliases and functions

source /usr/local/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/data/greenplum_data/gpmaster/gpseg-1

export PGPRORT=5432

export PGDATABASE=testDB

[gpadmin@gp-master ~]$ scp .bashrc gp-standby:`pwd`

啟動和停止資料庫測試是否能正常啟動和關閉,命令如下

gpstart

gpstop

到此 Greenplum 就部署完成了。下麵進行一些簡單的測試。

登錄資料庫:psql -d postgres

建表,插入,查詢

postgres=# create table student ( no int primary key,student_name varchar(40),age int);

NOTICE: CREATE TABLE / PRIMARY KEY will create implicit index "student_pkey" for table "student"

CREATE TABLE

postgres=# insert into student values(1,'yayun',18);

INSERT 0 1

postgres=# select * from student;

no | student_name | age

----+--------------+-----

1 | yayun | 18

(1 row)