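Resource pools are an important part of database resource management. This article explains where resource pools come from, how to use them, and how to analyze problems with resource pool monitoring.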

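This article is shared from the Huawei Cloud community post "GaussDB(DWS) Monitoring Tool Guide (3): Resource-Pool-Level Monitoring [Bloom! GaussDB(DWS) Cloud-Native Data Warehouse]", by 幕後小黑爪.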

I. Resource Pools

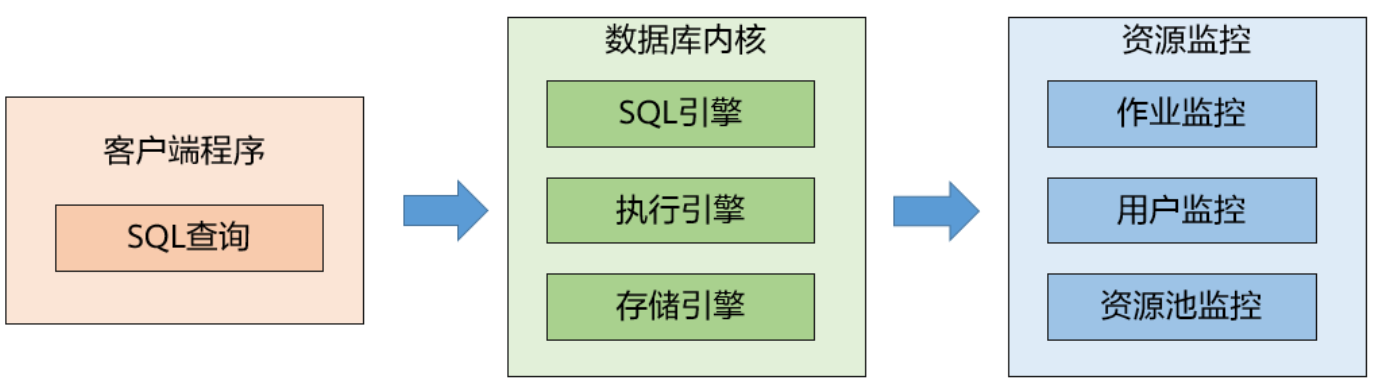

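In the earliest days of databases there was no notion of resources: you gave the database an SQL statement and it returned a result. In a simple scenario where one user has the database to itself, there is no contention for resources. As workloads grow and the number of users increases, SQL from different users competes for operating system resources (CPU, memory, I/O, network, and so on). Left unchecked, this contention can affect every user of the cluster and may make the cluster unavailable. To prevent this, SQL from different users has to be told apart, and resources have to be allocated and controlled per user. Resource pools were created for this purpose: a resource pool is a logical intermediary between users and system resources that governs each user's resource usage and keeps the cluster available.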

II. Resource Pools in GaussDB(DWS)

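When an administrator creates a user, the user is automatically bound to the default resource pool, default_pool. Additional resource pools can be created from the web console, and users can then be bound to them. From then on, the statements a user submits are governed by the configuration parameters of that user's resource pool. Resource pools partition resource usage among users and simplify cluster management, because all of the system's compute resources can be managed in one place: the administrator manages resource pools rather than the resources on each node.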
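The article creates pools and binds users from the web console. As a rough SQL sketch of the same two steps, assuming the CREATE RESOURCE POOL and ALTER USER ... RESOURCE POOL syntax of the openGauss family applies to your GaussDB(DWS) version, something like the following could be used. The names respool_demo and user_demo are hypothetical, and in a cluster with logical clusters additional options may be required, so check the syntax reference for your version first.

```sql
-- Hypothetical names: respool_demo (pool) and user_demo (an existing user).
-- Assumed syntax; verify against the documentation for your version.

-- Create a pool whose active_statements setting is 10.
CREATE RESOURCE POOL respool_demo WITH (active_statements = 10);

-- Bind the existing user to the new pool.
ALTER USER user_demo RESOURCE POOL 'respool_demo';

-- Confirm the pool's settings in the catalog queried in this article.
SELECT respool_name, active_statements, max_dop
FROM pg_resource_pool
WHERE respool_name = 'respool_demo';
```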

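The resource pool (tenant) feature of GaussDB(DWS) controls the statements users submit along several dimensions, including CPU, concurrency, memory, and network. Concretely, resource pool parameters set limits on concurrency, memory, CPU utilization, and so on. For new statements whose behavior is not yet known, exception rules can be used, for example to kill bad SQL that exceeds a limit. On top of that, a blacklist can be configured to strictly control which statements users may submit. These features will be covered in later articles; this one focuses on resource pools.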

Resource pool information can be queried with the following statement:

    postgres=# select * from pg_resource_pool;
    respool_name | mem_percent | cpu_affinity | control_group | active_statements | max_dop | memory_limit | parentid | io_limits | io_priority | nodegroup | is_foreign | short_acc | except_rule | weight
    ----------------------+-------------+--------------+---------------------+-------------------+---------+--------------+------------+-----------+-------------+------------------+------------+-----------+-------------+--------
    default_pool | 0 | -1 | DefaultClass:Medium | -1 | -1 | default | 0 | 0 | None | installation | f | t | None | -1
    respool_1 | 0 | -1 | ClassN1:wn1 | 10 | -1 | default | 0 | 0 | None | logical_cluster1 | f | t | None | -1
    respool_grp_1 | 20 | -1 | ClassG1 | 10 | -1 | default | 0 | 0 | None | logical_cluster1 | f | t | None | -1
    respool_g1_job_1 | 20 | -1 | ClassG1:wg1_1 | 10 | -1 | default | 2147484586 | 0 | None | logical_cluster1 | f | t | None | -1
    respool_g1_job_2 | 20 | -1 | ClassG1:wg1_2 | 10 | -1 | default | 2147484586 | 0 | None | logical_cluster1 | f | t | None | -1
    respool_0_mempercent | 0 | -1 | DefaultClass:Medium | 10 | -1 | default | 0 | 0 | None | logical_cluster1 | f | t | None | -1
    (6 rows)

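Resource pool parameters can be configured from the management console, and administrators can also change them with SQL. The statement below, for example, changes the fast-lane concurrency limit of the default resource pool; other parameters are changed the same way. The operation carries risk, so it is best run only after confirming with GaussDB(DWS) operations staff.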

alter resource pool default_pool with (max_dop=1);
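Because the change takes effect on a shared pool, it can help to record the current value before altering it and to check the result afterwards. The sketch below uses only the catalog and columns shown in this article; restoring -1 assumes that the value shown for default_pool in the sample output above is what your cluster had before the change.

```sql
-- Inspect the current limits of the default pool (before and after the change).
SELECT respool_name, active_statements, max_dop
FROM pg_resource_pool
WHERE respool_name = 'default_pool';

-- Restore the previous value if the change needs to be rolled back.
-- -1 is the value in the sample output above; use whatever you recorded.
ALTER RESOURCE POOL default_pool WITH (max_dop = -1);
```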

III. Resource Pool Monitoring

GaussDB(DWS) provides multi-dimensional resource monitoring views, so cluster state can be queried from different angles.

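GaussDB(DWS) provides resource-pool-level monitoring of running SQL statements, in both real-time and historical form. Like user monitoring, resource pool monitoring shows, for each pool, the number of running jobs, the number of queued jobs, memory usage, the memory limit, CPU usage, read and write I/O, and so on. It can be queried with the following statement: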

    postgres=# select * from gs_respool_resource_info;
    nodegroup | rpname | cgroup | ref_count | fast_run | fast_wait | fast_limit | slow_run | slow_wait | slow_limit | used_cpu | cpu_limit | used_mem | estimate_mem | mem_limit | read_kbytes | write_kbytes | read_counts | write_counts | read_speed | write_speed
    -----------+--------------+---------------------+-----------+----------+-----------+------------+----------+-----------+------------+----------+-----------+----------+--------------+-----------+-------------+--------------+-------------+--------------+------------+-------------
    lc1 | pool_group | ClassN | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 116844 | 0 | 0 | 0 | 0 | 0 | 0
    lc1 | pool_work | ClassN:wg1 | 0 | 0 | 0 | 10 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 23364 | 0 | 0 | 0 | 0 | 0 | 0
    lc2 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 208 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    lc1 | resp_other | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | 100 | 0 | 312 | 0 | 0 | 175260 | 0 | 0 | 0 | 0 | 0 | 0
    lc1 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 312 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    (5 rows)

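Here, nodegroup is the logical cluster the resource pool belongs to. fast_run and slow_run are the numbers of jobs running in the pool's fast lane and slow lane, and fast_wait and slow_wait are the numbers of jobs queued in each lane; slow_wait also includes jobs queued on the CCN. fast_limit and slow_limit are the concurrency limits of the fast and slow lanes. cpu_limit is the CPU quota configured for the pool, and used_cpu shows each pool's CPU usage. estimate_mem is the sum of the estimated memory of the jobs submitted by users in the pool, used_mem is the memory the pool is actually using, and mem_limit is the pool's configured memory limit.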
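Given those field meanings, a narrower query is usually easier to read than select *. The following is a minimal sketch, using only the view and columns shown above, that lists the pools that currently have jobs queued in either lane, worst first.

```sql
-- Pools with queued jobs, ordered by total queue length.
SELECT nodegroup,
       rpname,
       fast_run, fast_wait, fast_limit,   -- fast lane: running, queued, limit
       slow_run, slow_wait, slow_limit,   -- slow lane: running, queued, limit
       used_mem, estimate_mem, mem_limit  -- memory: actual, estimated, limit
FROM gs_respool_resource_info
WHERE fast_wait > 0
   OR slow_wait > 0
ORDER BY (fast_wait + slow_wait) DESC;
```

An empty result means no pool has jobs waiting at the moment the view was read.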

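Historical resource monitoring likewise supports locating past problems. The view is sampled every 30 seconds, and timestamp is the time of the sample.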

    postgres=# select * from gs_respool_resource_history;
    timestamp | nodegroup | rpname | cgroup | ref_count | fast_run | fast_wait | fast_limit | slow_run | slow_wait | slow_limit | used_cpu | cpu_limit | used_mem | estimate_mem | mem_limit | read_kbytes | write_kbytes | read_counts | write_counts | read_speed | write_speed
    -------------------------------+-----------+--------------+---------------------+-----------+----------+-----------+------------+----------+-----------+------------+----------+-----------+----------+--------------+-----------+-------------+--------------+-------------+--------------+------------+-------------
    2023-10-20 20:24:14.715107+08 | lc1 | pool_group | ClassN | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 116844 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:14.715107+08 | lc1 | pool_work | ClassN:wg1 | 0 | 0 | 0 | 10 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 23364 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:14.715107+08 | lc2 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 208 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:14.715107+08 | lc1 | resp_other | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | 100 | 0 | 312 | 0 | 0 | 175260 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:14.715107+08 | lc1 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 312 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:44.791512+08 | lc1 | pool_group | ClassN | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 116844 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:44.791512+08 | lc1 | pool_work | ClassN:wg1 | 0 | 0 | 0 | 10 | 0 | 0 | 10 | 0 | 312 | 0 | 0 | 23364 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:44.791512+08 | lc2 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 208 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:44.791512+08 | lc1 | resp_other | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | 100 | 0 | 312 | 0 | 0 | 175260 | 0 | 0 | 0 | 0 | 0 | 0
    2023-10-20 20:24:44.791512+08 | lc1 | default_pool | DefaultClass:Medium | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 | 312 | 0 | 0 | 584220 | 0 | 0 | 0 | 0 | 0 | 0
    ...
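Since the history view accumulates one row per pool per sample, it is normally queried with a time window and a pool filter. The sketch below assumes the timestamp column is a timestamp with time zone, as the sample output suggests; the window and the pool name are illustrative values taken from the sample rows. The column name is double-quoted only to avoid any clash with the type keyword of the same name.

```sql
-- One pool's samples over a one-minute window, oldest first.
SELECT "timestamp",
       nodegroup,
       rpname,
       fast_run, fast_wait,
       slow_run, slow_wait,
       used_cpu,
       used_mem
FROM gs_respool_resource_history
WHERE "timestamp" >= '2023-10-20 20:24:00+08'
  AND "timestamp" <  '2023-10-20 20:25:00+08'
  AND rpname = 'pool_work'
ORDER BY "timestamp";
```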

IV. Locating Problems with Resource Pool Monitoring (to be expanded)

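1. When the business reports that statements are badly blocked and jobs are not executing, query real-time or historical resource pool monitoring to see whether jobs are piling up in the queue. If fast_limit is 10, fast_run is also 10, and fast_wait is high, try raising the resource pool parameter max_dop to increase the concurrency limit appropriately.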

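2. When the business reports that batch jobs that normally run fine have become slower across the board, compare historical resource pool monitoring before and after the degradation: gather the memory usage of the same batch of jobs over a period of time on each side. Job queueing in the resource pool at that time can also help locate the problem.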
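The two checks above can be sketched as queries against the views shown earlier. The first looks for pools whose fast lane is full and has a backlog; the second summarizes one pool's memory usage and queueing on either side of a suspected degradation point. The pool name, the time window, and the cut-over time are hypothetical values to be replaced with your own, and the timestamp column is assumed to be a timestamp with time zone.

```sql
-- Case 1: fast lane saturated (running jobs at the limit, jobs still waiting).
-- fast_limit > 0 skips pools whose limit is shown as -1.
SELECT nodegroup, rpname, fast_run, fast_limit, fast_wait
FROM gs_respool_resource_info
WHERE fast_limit > 0
  AND fast_run >= fast_limit
  AND fast_wait > 0;

-- Case 2: compare a pool before and after a suspected degradation point.
SELECT period,
       rpname,
       avg(used_mem)  AS avg_used_mem,
       max(used_mem)  AS max_used_mem,
       max(fast_wait) AS max_fast_wait,
       max(slow_wait) AS max_slow_wait
FROM (
    SELECT CASE WHEN "timestamp" < '2023-10-20 20:00:00+08'  -- hypothetical cut-over
                THEN 'before' ELSE 'after' END AS period,
           rpname, used_mem, fast_wait, slow_wait
    FROM gs_respool_resource_history
    WHERE "timestamp" >= '2023-10-20 19:00:00+08'            -- hypothetical window
      AND "timestamp" <  '2023-10-20 21:00:00+08'
      AND rpname = 'pool_work'                               -- hypothetical pool
) s
GROUP BY period, rpname
ORDER BY period DESC;  -- 'before' sorts ahead of 'after' in descending order
```

If the first query returns rows, the pool in question matches the fast_limit, fast_run, fast_wait pattern described in item 1. For the second, a clear rise in max_slow_wait or max_fast_wait after the cut-over points to queueing rather than memory as the first thing to investigate.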

V. Easier-to-Use Monitoring Views

To improve usability, GaussDB(DWS) also provides more convenient, easier-to-use views for observing system state and locating problems.

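In kernel version 821, the gs_query_monitor, gs_user_monitor, and gs_respool_monitor views provide resource monitoring at the statement, user, and resource pool level. They build on the views covered in the GaussDB(DWS) Monitoring Tool Guide series, select the fields most often used for troubleshooting, and give production users an easier-to-use set of real-time monitoring scripts.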

The results look like this:

1. Job monitoring

    postgres=# select * from gs_query_monitor;
    usename | nodename | nodegroup | rpname | priority | xact_start | query_start | block_time | duration | query_band | attribute | lane | status | queue | used_mem | estimate_mem | used_cpu | read_speed | write_speed | send_speed | recv_speed | dn_count | stream_count | pid | lwtid | query_id | unique_sql_id | query
    --------------+----------+------------------+--------------+----------+-------------------------------+-------------------------------+------------+----------+------------+-------------+------+---------+-------+----------+--------------+----------+------------+-------------+------------+------------+----------+--------------+-----------------+--------+-------------------+---------------+--------------------------------------------------
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.754207+08 | 2023-10-30 16:39:28.748855+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878865264 | 982280 | 72902018968076864 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.760305+08 | 2023-10-30 16:39:28.754861+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878866632 | 982283 | 72902018968076871 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.761491+08 | 2023-10-30 16:39:28.756124+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878865720 | 982281 | 72902018968076872 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.768333+08 | 2023-10-30 16:39:28.762653+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878867544 | 982285 | 72902018968076877 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.772288+08 | 2023-10-30 16:39:28.766933+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878868912 | 982288 | 72902018968076881 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.772304+08 | 2023-10-30 16:39:28.766966+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878867088 | 982284 | 72902018968076882 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.777958+08 | 2023-10-30 16:39:28.772572+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878868000 | 982286 | 72902018968076888 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.779373+08 | 2023-10-30 16:39:28.773997+08 | 59 | 0 | | Complicated | slow | pending | CCN | 0 | 4360 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878868456 | 982287 | 72902018968076889 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.753845+08 | 2023-10-30 16:39:28.748498+08 | 0 | 59 | | Complicated | slow | running | None | 4 | 4360 | .289 | 0 | 0 | 0 | 0 | 0 | 0 | 139906878864808 | 982279 | 72902018968076862 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    user_default | cn_5001 | logical_cluster1 | default_pool | Medium | 2023-10-30 16:39:28.753957+08 | 2023-10-30 16:39:28.748609+08 | 0 | 59 | | Complicated | slow | running | None | 4 | 4360 | .288 | 0 | 0 | 17 | 23 | 0 | 0 | 139906878866176 | 982282 | 72902018968076863 | 2372000271 | INSERT INTO t1 SELECT generate_series(1,100000);
    (10 rows)
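With this many columns, it is often more practical to select a few fields and filter on status. The sketch below uses only column names and values visible in the sample output above (status = 'pending', and the lane, queue, and block_time columns); the first query lists queued statements with the longest-blocked first, and the second summarizes where they are queued.

```sql
-- Queued statements, longest-blocked first.
SELECT usename, rpname, lane, queue, block_time, estimate_mem, query
FROM gs_query_monitor
WHERE status = 'pending'
ORDER BY block_time DESC;

-- Summary of the backlog per pool, lane, and queue.
SELECT rpname,
       lane,
       queue,
       count(*)        AS pending_jobs,
       max(block_time) AS longest_block_time
FROM gs_query_monitor
WHERE status = 'pending'
GROUP BY rpname, lane, queue
ORDER BY pending_jobs DESC;
```

Applied to the ten sample rows above, the summary would collapse the eight pending statements into a single group: default_pool, slow lane, CCN queue.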

2. User monitoring

    postgres=# select * from gs_user_monitor;
    usename | rpname | nodegroup | session_count | active_count | global_wait | fast_run | fast_wait | slow_run | slow_wait | used_mem | estimate_mem | used_cpu | read_speed | write_speed | send_speed | recv_speed | used_space | space_limit | used_temp_space | temp_space_limit | used_spill_space | spill_space_limit
    ------------------+---------------+------------------+---------------+--------------+-------------+----------+-----------+----------+-----------+----------+--------------+----------+------------+-------------+------------+------------+------------+-------------+-----------------+------------------+------------------+-------------------
    logical_cluster2 | default_pool | logical_cluster2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | -1 | 0 | -1 | 0 | -1
    user_grp_1 | respool_grp_1 | logical_cluster1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | -1 | 0 | -1 | 0 | -1
    logical_cluster1 | default_pool | logical_cluster1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1834424 | -1 | 0 | -1 | 0 | -1
    user_normal | respool_1 | logical_cluster1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | -1 | 0 | -1 | 0 | -1
    user_default | default_pool | logical_cluster1 | 10 | 10 | 0 | 0 | 0 | 2 | 8 | 8 | 8720 | .563 | 0 | 15 | 0 | 0 | 640080 | -1 | 0 | -1 | 0 | -1
    (5 rows)
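To see at a glance which users are being held back, the same view can be filtered on its wait counters. This is a minimal sketch using only the columns shown above; it treats fast_wait, slow_wait, and global_wait together as the user's total backlog, which is a simplification for illustration.

```sql
-- Users with at least one waiting job, largest backlog first.
SELECT usename,
       rpname,
       nodegroup,
       active_count,
       fast_run, fast_wait,
       slow_run, slow_wait,
       global_wait,
       used_mem, estimate_mem
FROM gs_user_monitor
WHERE fast_wait + slow_wait + global_wait > 0
ORDER BY fast_wait + slow_wait + global_wait DESC;
```

In the sample output above, only user_default (slow_wait = 8) would be returned.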

3. Resource pool monitoring

    postgres=# select * from gs_respool_monitor;
    rpname | nodegroup | cn_count | short_acc | session_count | active_count | global_wait | fast_run | fast_wait | fast_limit | slow_run | slow_wait | slow_limit | used_mem | estimate_mem | mem_limit | query_mem_limit | used_cpu | cpu_limit | read_speed | write_speed | send_speed | recv_speed
    ----------------------+------------------+----------+-----------+---------------+--------------+-------------+----------+-----------+------------+----------+-----------+------------+----------+--------------+-----------+-----------------+----------+-----------+------------+-------------+------------+------------
    default_pool | logical_cluster2 | 3 | t | 0 | 0 | 0 | 0 | 0 | -1 | 0 | 0 | -1 | 0 bytes | 0 bytes | 11 GB | 4376 MB | 0 | 8 | 0 bytes/s | 0 bytes/s | 0 bytes/s | 0 bytes/s
    respool_g1_job_1 | logical_cluster1 | 3 | t | 0 | 0 | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 bytes | 0 bytes | 437 MB | 175 MB | 0 | 8 | 0 bytes/s | 0 bytes/s | 0 bytes/s | 0 bytes/s
    respool_1 | logical_cluster1 | 3 | t | 0 | 0 | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 bytes | 0 bytes | 11 GB | 4376 MB | 0 | 8 | 0 bytes/s | 0 bytes/s | 0 bytes/s | 0 bytes/s
    respool_0_mempercent | logical_cluster1 | 3 | t | 0 | 0 | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 bytes | 0 bytes | 11 GB | 4376 MB | 0 | 8 | 0 bytes/s | 0 bytes/s | 0 bytes/s | 0 bytes/s
    respool_g1_job_2 | logical_cluster1 | 3 | t | 0 | 0 | 0 | 0 | 0 | -1 | 0 | 0 | 10 | 0 bytes | 0 bytes | 437 MB | 175 MB | 0 | 8 | 0 bytes/s | 0 bytes/s | 0 bytes/s | 0 bytes/s
    default_pool | logical_cluster1 | 3 | t | 10 | 10 | 0 | 0 | 0 | -1 | 2 | 8 | -1 | 8192 KB | 8720 MB | 11 GB | 4376 MB