
Introduction to Ingress controllers

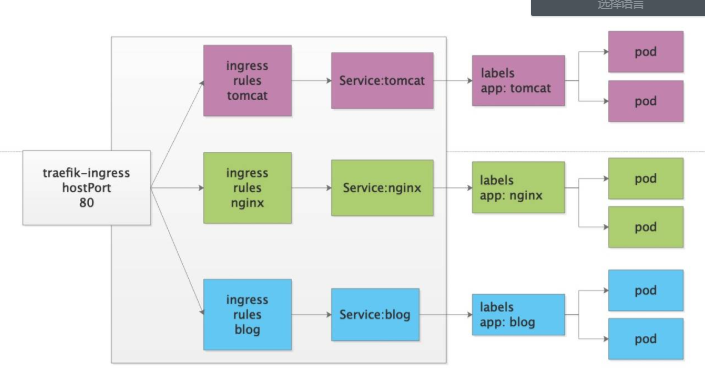

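1. Before Ingress, a Pod could only expose a service externally as NodeIP:NodePort. This has a drawback: a port on a node cannot be reused. For example, once one service occupies port 80, no other service can use that port.

2. NodePort is a Layer-4 proxy. It cannot parse Layer-7 HTTP, so it cannot tell traffic apart by domain name.

3. To solve this we use a resource called Ingress, which provides a single unified entry point and works at Layer 7. An Ingress controller watches the Ingress resources and does the actual proxying.

4. nginx/haproxy could achieve a similar effect. However, a traditional deployment cannot dynamically discover newly created resources, so the config file must be edited and the service restarted by hand.

5. The mainstream Ingress controllers for k8s are ingress-nginx and traefik.

6. ingress-nginx == nginx + go --> deployed as a Deployment

7. traefik has a web UI.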
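The Layer-7 decision described above is essentially a lookup keyed on the HTTP Host header. A NodePort only sees IP:port and cannot make this choice.

The following is a minimal Python illustration, not traefik's code. The `ROUTES` table and the `route()` helper are hypothetical. The hostnames and backends are borrowed from the Ingress examples later in these notes.

```python
# Minimal sketch of Layer-7, host-based routing: one listening port (80)
# fans out to different backends by the HTTP Host header.
# ROUTES and route() are hypothetical illustrations, not a traefik API.

ROUTES = {
    "traefik.ui.com": "traefik-ingress-service:8080",
    "traefik.nginx.com": "nginx-service:80",
    "traefik.tomcat.com": "myweb:8080",
}


def route(host_header: str) -> str:
    """Return the backend for a Host header such as 'traefik.nginx.com:80'."""
    host = host_header.split(":", 1)[0].strip().lower()  # drop any :port suffix
    if host not in ROUTES:
        # A real controller would typically answer 404 here.
        raise LookupError(f"no Ingress rule for host {host!r}")
    return ROUTES[host]


if __name__ == "__main__":
    for h in ("traefik.nginx.com", "traefik.tomcat.com:80"):
        print(h, "->", route(h))
```

All hosts share port 80. Only the Host header differs, which is exactly what a Layer-4 NodePort cannot inspect.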

Installing and deploying traefik

1.traefik_dp.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress-lb
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: traefik-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress-lb
        name: traefik-ingress-lb
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      tolerations:
      - operator: "Exists"
      nodeSelector:
        kubernetes.io/hostname: node1
      containers:
      - image: traefik:v1.7.17
        name: traefik-ingress-lb
        ports:
        - name: http
          containerPort: 80
          hostPort: 80
        - name: admin
          containerPort: 8080
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO

2.traefik_rbac.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

3.traefik_svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress-lb
  ports:
    - protocol: TCP
      port: 80
      name: web
    - protocol: TCP
      port: 8080
      name: admin
  type: NodePort

4. Apply the resource manifests

kubectl create -f ./

5. Check the Service and access it

kubectl -n kube-system get svc
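The Service above does not set `nodePort`, so k8s picks one for each port from kube-apiserver's default `--service-node-port-range` of 30000-32767. You read the assigned ports from the PORT(S) column of `kubectl get svc`.

Below is a small Python sketch that parses that column. The helper name and the sample value `80:31234/TCP,8080:30567/TCP` are hypothetical, since real values are random per cluster.

```python
# Sketch: read the nodePorts k8s assigned to a NodePort Service from the
# PORT(S) column of `kubectl get svc`, e.g. "80:31234/TCP,8080:30567/TCP".
# parse_ports_column() and the sample values are hypothetical.
from typing import Dict

# Default --service-node-port-range of kube-apiserver is 30000-32767.
NODE_PORT_RANGE = range(30000, 32768)


def parse_ports_column(column: str) -> Dict[int, int]:
    """Map each Service port to its nodePort."""
    mapping: Dict[int, int] = {}
    for entry in column.split(","):
        ports = entry.split("/", 1)[0]  # strip the "/TCP" protocol suffix
        if ":" not in ports:
            continue  # no nodePort (e.g. a ClusterIP Service)
        port, node_port = (int(p) for p in ports.split(":", 1))
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} outside the default range")
        mapping[port] = node_port
    return mapping


if __name__ == "__main__":
    ports = parse_ports_column("80:31234/TCP,8080:30567/TCP")
    print(f"web:   http://<node-ip>:{ports[80]}")
    print(f"admin: http://<node-ip>:{ports[8080]}")
```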

Creating an Ingress rule for the traefik web UI

1. The equivalent in nginx terms:

upstream traefik-ui {
    server traefik-ingress-service:8080;
}

server {
    location / {
        proxy_pass http://traefik-ui;
        include proxy_params;
    }
}

2. Written as an Ingress:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-ui
  namespace: kube-system
spec:
  rules:
  - host: traefik.ui.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080
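To make the nginx analogy concrete, the mapping can be written out. The Ingress `host` becomes `server_name`, each `path` becomes a `location`, and each backend becomes an `upstream`.

This is a hypothetical Python helper that only illustrates the correspondence. traefik does not generate nginx configuration. The input dict mirrors the traefik-ui Ingress above.

```python
# Sketch: render a v1beta1-style Ingress (as a Python dict) into the nginx
# configuration it is analogous to: host -> server_name, path -> location,
# backend -> upstream. ingress_to_nginx() is a hypothetical helper.


def ingress_to_nginx(ingress: dict) -> str:
    upstreams, servers = [], []
    for rule in ingress["spec"]["rules"]:
        locations = []
        for p in rule["http"]["paths"]:
            svc = p["backend"]["serviceName"]
            port = p["backend"]["servicePort"]
            name = f"{svc}-{port}"
            upstreams.append(f"upstream {name} {{\n    server {svc}:{port};\n}}\n")
            locations.append(
                f"    location {p.get('path', '/')} {{\n"
                f"        proxy_pass http://{name};\n"
                "    }\n"
            )
        servers.append(
            "server {\n"
            "    listen 80;\n"
            f"    server_name {rule.get('host', '_')};\n"
            + "".join(locations)
            + "}\n"
        )
    return "\n".join(upstreams + servers)


TRAEFIK_UI = {
    "apiVersion": "extensions/v1beta1",
    "kind": "Ingress",
    "metadata": {"name": "traefik-ui", "namespace": "kube-system"},
    "spec": {"rules": [{
        "host": "traefik.ui.com",
        "http": {"paths": [{
            "path": "/",
            "backend": {"serviceName": "traefik-ingress-service",
                        "servicePort": 8080},
        }]},
    }]},
}

if __name__ == "__main__":
    print(ingress_to_nginx(TRAEFIK_UI))
```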

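3. Access test. First resolve traefik.ui.com to the IP of node1, where traefik listens on hostPort 80 (e.g. via the hosts file):

traefik.ui.com

Ingress experiment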

1. Goal

Without Ingress, the services are reachable only via IP + port:

tomcat 8080

nginx 8090

With Ingress, they can be reached directly by domain name:

traefik.nginx.com:80 --> nginx 8090

traefik.tomcat.com:80 --> tomcat 8080

2. Create the Deployments and Services:

mysql-dp.yaml

mysql-svc.yaml

tomcat-dp.yaml

tomcat-svc.yaml

nginx-dp.yaml

nginx-svc-clusterip.yaml

3. Write the Ingress resource manifests and apply them

cat >nginx-ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-nginx
  namespace: default
spec:
  rules:
  - host: traefik.nginx.com
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-service
          servicePort: 80
EOF

cat >tomcat-ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-tomcat
  namespace: default
spec:
  rules:
  - host: traefik.tomcat.com
    http:
      paths:
      - path: /
        backend:
          serviceName: myweb
          servicePort: 8080
EOF

kubectl apply -f nginx-ingress.yaml

kubectl apply -f tomcat-ingress.yaml
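The two Ingress manifests above differ only in name, host, Service and port, so they can be generated rather than hand-copied. The following Python sketch is hypothetical: it assumes the script is saved as `gen_ingress.py`. It emits the same extensions/v1beta1 schema used in these notes, wrapped in a v1 `List`, so that the output can be piped into `kubectl apply -f -` (kubectl accepts JSON).

Note that on k8s 1.22 and later, extensions/v1beta1 Ingress is no longer served. networking.k8s.io/v1 uses a different backend layout (`service.name` / `service.port.number`).

```python
# Sketch: generate the Ingress manifests from a small table.
# make_ingress() and SITES are hypothetical; usage (assumed file name):
#   python3 gen_ingress.py | kubectl apply -f -
import json


def make_ingress(name, host, service, port, namespace="default"):
    return {
        "apiVersion": "extensions/v1beta1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"rules": [{
            "host": host,
            "http": {"paths": [{
                "path": "/",
                "backend": {"serviceName": service, "servicePort": port},
            }]},
        }]},
    }


SITES = [
    ("traefik-nginx", "traefik.nginx.com", "nginx-service", 80),
    ("traefik-tomcat", "traefik.tomcat.com", "myweb", 8080),
]

if __name__ == "__main__":
    # Wrap both objects in a v1 List so a single JSON document is printed.
    doc = {"apiVersion": "v1", "kind": "List",
           "items": [make_ingress(*site) for site in SITES]}
    print(json.dumps(doc, indent=2))
```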

4. Inspect the created resources

kubectl get svc

kubectl get ingresses

kubectl describe ingresses traefik-nginx

kubectl describe ingresses traefik-tomcat

5. Access test

traefik.nginx.com

traefik.tomcat.com

Data persistence

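Introduction to Volumes

A Volume is a shared directory in a Pod that can be accessed by multiple containers.

A Kubernetes Volume has the same lifecycle as its Pod, but is independent of the lifecycle of the individual containers.

Kubernetes supports many Volume types, and a Pod can use any number of Volumes at the same time.

Volume types include:

- EmptyDir: created and allocated automatically by k8s when the Pod is assigned to a node; its data is erased when the Pod is removed. Used for scratch space and similar.

- hostPath: mounts a directory of the host into the Pod. Used to persist data.

- nfs: mounts the corresponding (NFS) disk resource.

EmptyDir experiment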

# Quote 'EOF' so the shell does not expand $(date) while writing the file
cat >emptyDir.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: busybox-empty
spec:
  containers:
  - name: busybox-pod
    image: busybox
    volumeMounts:
    - mountPath: /data/busybox/
      name: cache-volume
    command: ["/bin/sh","-c","while true;do echo $(date) >> /data/busybox/index.html;sleep 3;done"]
  volumes:
  - name: cache-volume
    emptyDir: {}
EOF
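The emptyDir lifecycle described above has three properties:

- it is created empty with the Pod,
- it survives container restarts,
- it is deleted together with the Pod.

These can be modelled in a few lines. This is a toy Python sketch, not kubelet code. `FakePod` is a hypothetical class, and a temporary directory stands in for the node's disk.

```python
# Toy model of emptyDir semantics (not kubelet code): the directory is created
# empty with the Pod, survives container restarts, and is deleted with the Pod.
# FakePod is a hypothetical class; a temp dir stands in for the node's disk.
import os
import shutil
import tempfile


class FakePod:
    def __init__(self):
        # Created empty when the Pod is assigned to a node.
        self.volume = tempfile.mkdtemp(prefix="emptydir-")

    def container_write(self, line):
        # Like the busybox loop: append a line to index.html on the volume.
        with open(os.path.join(self.volume, "index.html"), "a") as f:
            f.write(line + "\n")

    def restart_container(self):
        # Container restarts leave the emptyDir untouched.
        pass

    def read(self):
        with open(os.path.join(self.volume, "index.html")) as f:
            return f.read().splitlines()

    def delete(self):
        # Removing the Pod removes the emptyDir and everything in it.
        shutil.rmtree(self.volume)


if __name__ == "__main__":
    pod = FakePod()
    pod.container_write("first")
    pod.restart_container()
    pod.container_write("second")
    print(pod.read())  # ['first', 'second']
    pod.delete()
    print(os.path.exists(pod.volume))  # False
```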

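hostPath experiment

1. Problems observed:

- The directory must already exist before the Pod can be created.

- A Pod is not pinned to a particular Node, so its data ends up inconsistent across Nodes.

2. The type field

https://kubernetes.io/docs/concepts/storage/volumes/#hostpath

DirectoryOrCreate: create the directory if it does not exist

Directory: the directory must already exist

FileOrCreate: create the file if it does not exist

File: the file must already exist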
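The `type` values above translate into simple pre-mount checks. Per the Kubernetes docs:

- DirectoryOrCreate creates the directory with mode 0755.
- FileOrCreate creates an empty file with mode 0644, but does not create its parent directory.

The following Python sketch is simplified and hypothetical (`prepare_hostpath()` is not a real API). It covers only the types listed above; the real kubelet also knows Socket, CharDevice and BlockDevice.

```python
# Sketch of the pre-mount checks implied by hostPath `type` (simplified).
# prepare_hostpath() is a hypothetical helper; a temp dir stands in for the node.
import os
import tempfile

VALID_TYPES = {"", "DirectoryOrCreate", "Directory", "FileOrCreate", "File"}


def prepare_hostpath(path: str, type_: str = "") -> None:
    if type_ not in VALID_TYPES:
        raise ValueError(f"unsupported hostPath type {type_!r}")
    if type_ == "":
        return  # default: no check before mounting
    if type_ == "DirectoryOrCreate" and not os.path.exists(path):
        os.makedirs(path, mode=0o755)  # k8s creates it with 0755
    if type_ == "FileOrCreate" and not os.path.exists(path):
        # The parent directory is NOT created: a missing parent is an error.
        os.close(os.open(path, os.O_CREAT | os.O_WRONLY, 0o644))
    if type_ in ("DirectoryOrCreate", "Directory") and not os.path.isdir(path):
        raise FileNotFoundError(f"{path}: directory does not exist")
    if type_ in ("FileOrCreate", "File") and not os.path.isfile(path):
        raise FileNotFoundError(f"{path}: file does not exist")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as node_root:
        data = os.path.join(node_root, "data", "node")
        prepare_hostpath(data, "DirectoryOrCreate")
        print(os.path.isdir(data))  # True
```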

3. Pinning a Pod to a specific Node

Method 1: specify the Node name directly

apiVersion: v1
kind: Pod
metadata:
  name: busybox-nodename
spec:
  nodeName: node2
  containers:
  - name: busybox-pod
    image: busybox
    volumeMounts:
    - mountPath: /data/pod/
      name: hostpath-volume
    command: ["/bin/sh","-c","while true;do echo $(date) >> /data/pod/index.html;sleep 3;done"]
  volumes:
  - name: hostpath-volume
    hostPath:
      path: /data/node/
      type: DirectoryOrCreate

Method 2: select the Node by label

kubectl label nodes node3 disktype=SSD

apiVersion: v1
kind: Pod
metadata:
  name: busybox-nodename
spec:
  nodeSelector:
    disktype: SSD
  containers:
  - name: busybox-pod
    image: busybox
    volumeMounts:
    - mountPath: /data/pod/
      name: hostpath-volume
    command: ["/bin/sh","-c","while true;do echo $(date) >> /data/pod/index.html;sleep 3;done"]
  volumes:
  - name: hostpath-volume
    hostPath:
      path: /data/node/
      type: DirectoryOrCreate
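The two methods work differently:

- `nodeName` (method 1) bypasses the scheduler entirely.
- `nodeSelector` (method 2) is a filter the scheduler applies: a node qualifies only if its labels contain every key/value pair, matched exactly and case-sensitively.

Below is a Python sketch of that filter. The `NODES` label sets are hypothetical, modelled on this cluster after `kubectl label nodes node3 disktype=SSD`.

```python
# Sketch of how nodeSelector filters nodes: a node qualifies only if its
# labels contain every key/value pair of the selector (exact match).
# NODES is a hypothetical label table for node1..node3.
from typing import Dict, List

NODES: Dict[str, Dict[str, str]] = {
    "node1": {"kubernetes.io/hostname": "node1"},
    "node2": {"kubernetes.io/hostname": "node2"},
    "node3": {"kubernetes.io/hostname": "node3", "disktype": "SSD"},
}


def matching_nodes(node_selector: Dict[str, str],
                   nodes: Dict[str, Dict[str, str]]) -> List[str]:
    return sorted(
        name for name, labels in nodes.items()
        if all(labels.get(k) == v for k, v in node_selector.items())
    )


if __name__ == "__main__":
    print(matching_nodes({"disktype": "SSD"}, NODES))  # ['node3']
    print(matching_nodes({"disktype": "ssd"}, NODES))  # [] (values are case-sensitive)
```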

4. Experiment: writing a persistent MySQL Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-dp
  namespace: default
spec:
  selector:
    matchLabels:
      app: mysql
  replicas: 1
  template:
    metadata:
      name: mysql-pod
      namespace: default
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql-pod
        image: mysql:5.7
        ports:
        - name: mysql-port
          containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        volumeMounts:
        - mountPath: /var/lib/mysql
          name: mysql-volume
      volumes:
      - name: mysql-volume
        hostPath:
          path: /data/mysql
          type: DirectoryOrCreate
      nodeSelector:
        disktype: SSD

PV and PVC

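1. Install NFS on the master node

yum install nfs-utils -y

mkdir -p /data/nfs-volume/mysql   # the PV below mounts this subdirectory, so it must exist

vim /etc/exports   # add the following line:

/data/nfs-volume 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)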

systemctl start rpcbind

systemctl start nfs

showmount -e 127.0.0.1
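The export line grants the 10.0.0.0/24 network these options:

- `rw`: read-write access.
- `async`: the server may reply before data is committed to disk.
- `no_root_squash`: client root stays root.
- `no_all_squash`: other users are not mapped to the anonymous user.

A node can mount the share only if its IP falls inside that network. The Python sketch below parses such a line and checks a client IP. It is hypothetical and handles only the simple `path network(opts)` form used here (no quoted paths, hostnames or wildcards). The node IPs are made up.

```python
# Sketch: parse a simple /etc/exports line and check whether a client IP
# falls inside an exported network. Hypothetical helpers; node IPs are made up.
import ipaddress
from typing import List, Tuple


def parse_exports_line(line: str) -> Tuple[str, List[Tuple[str, List[str]]]]:
    path, *clients = line.split()
    parsed = []
    for client in clients:
        host, _, opts = client.partition("(")
        parsed.append((host, opts.rstrip(")").split(",") if opts else []))
    return path, parsed


def client_allowed(ip: str, clients: List[Tuple[str, List[str]]]) -> bool:
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(host, strict=False)
               for host, _ in clients)


if __name__ == "__main__":
    path, clients = parse_exports_line(
        "/data/nfs-volume 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)")
    print(path, clients)
    print(client_allowed("10.0.0.12", clients))  # True
```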

2. Install NFS on all worker nodes

yum install nfs-utils.x86_64 -y

showmount -e 10.0.0.11

3. Write and create the NFS PV

cat >nfs-pv.yaml <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /data/nfs-volume/mysql
    server: 10.0.0.11
EOF

kubectl create -f nfs-pv.yaml

kubectl get persistentvolume

3. Create mysql-pvc

cat >mysql-pvc.yaml <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs
EOF

kubectl create -f mysql-pvc.yaml

kubectl get pvc
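How does mysql-pvc end up bound to pv01? A PV is a candidate for a claim when it meets all of these conditions:

- it is Available,
- it has the same storageClassName,
- it offers the requested access modes,
- its capacity is at least the request.

Among the candidates, the smallest PV is chosen.

The following Python sketch is simplified. The field names are flattened, and `pv02` in the usage is a hypothetical extra PV.

```python
# Simplified sketch of matching a PVC to a statically created PV.
# find_pv()/to_bytes() are hypothetical helpers; fields are flattened.
from typing import List, Optional

UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse binary-suffixed quantities like '5Gi' (subset of k8s syntax)."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def find_pv(pvc: dict, pvs: List[dict]) -> Optional[dict]:
    want = to_bytes(pvc["storage"])
    candidates = [
        pv for pv in pvs
        if pv["phase"] == "Available"
        and pv["storageClassName"] == pvc["storageClassName"]
        and set(pvc["accessModes"]) <= set(pv["accessModes"])
        and to_bytes(pv["capacity"]) >= want
    ]
    # Smallest PV that satisfies the claim wins; None if nothing fits.
    return min(candidates, key=lambda pv: to_bytes(pv["capacity"]), default=None)


PV01 = {"name": "pv01", "capacity": "5Gi", "accessModes": ["ReadWriteOnce"],
        "storageClassName": "nfs", "phase": "Available"}
PVC = {"name": "mysql-pvc", "storage": "1Gi", "accessModes": ["ReadWriteOnce"],
       "storageClassName": "nfs"}

if __name__ == "__main__":
    print(find_pv(PVC, [PV01])["name"])  # pv01
```

Because the whole PV is bound, `kubectl get pvc` reports pv01's 5Gi capacity even though the claim requested only 1Gi.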

4. Create the MySQL Deployment

cat >mysql-dp.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
        volumeMounts:
        - name: mysql-pvc
          mountPath: /var/lib/mysql
        - name: mysql-log
          mountPath: /var/log/mysql
      volumes:
      - name: mysql-pvc
        persistentVolumeClaim:
          claimName: mysql-pvc
      - name: mysql-log
        hostPath:
          path: /var/log/mysql
      nodeSelector:
        disktype: SSD
EOF

kubectl create -f mysql-dp.yaml

kubectl get pod -o wide

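5. How to test

1. Create nfs-pv

2. Create mysql-pvc

3. Create the MySQL Deployment, mounting mysql-pvc

4. Log in to the MySQL Pod and create a database

5. Delete that Pod; because the Deployment sets a replica count, a new Pod is created automatically

6. Log in to the new Pod and check whether the database created earlier is still visible

7. If it is, the data is persisted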

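6. The accessModes field

ReadWriteOnce: read-write, mounted by a single node

ReadOnlyMany: read-only, mounted by many nodes

ReadWriteMany: read-write, mounted by many nodes

resources: the storage request, e.g. at least 5G

7. volumeName: bind to one specific PV by exact name

#capacity: limits the storage size

#reclaim policy: what happens to the PV after its claim is released

#retain: data on the PV is kept after it is unbound

#recycle: data on the PV is wiped so the PV can be reused

#delete: the PV is deleted once the PVC and PV are unbound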
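The three reclaim policies differ in what happens to a bound PV when its PVC is deleted:

- Retain keeps the data and leaves the PV Released for an admin to clean up.
- Recycle scrubs the volume and makes the PV Available again.
- Delete removes the PV.

Recycle is deprecated upstream in favour of dynamic provisioning. The Python sketch below models this. It is simplified and hypothetical: `on_claim_deleted()` is not a real API, and `files` stands in for the data on the volume.

```python
# Simplified sketch of what happens to a bound PV when its PVC is deleted,
# per persistentVolumeReclaimPolicy. on_claim_deleted() is hypothetical;
# "files" stands in for the data stored on the volume.
from typing import Optional


def on_claim_deleted(pv: dict) -> Optional[dict]:
    policy = pv["persistentVolumeReclaimPolicy"]
    if policy == "Retain":
        # Data kept; PV becomes Released and must be cleaned up by an admin.
        return {**pv, "phase": "Released"}
    if policy == "Recycle":
        # Basic scrub of the volume's contents; PV is Available again.
        return {**pv, "phase": "Available", "files": []}
    if policy == "Delete":
        # The PV object (and, for supporting backends, its storage) is removed.
        return None
    raise ValueError(f"unknown reclaim policy {policy!r}")


if __name__ == "__main__":
    pv = {"name": "pv01", "phase": "Bound", "files": ["ibdata1"],
          "persistentVolumeReclaimPolicy": "Recycle"}
    print(on_claim_deleted(pv))
```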

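Note: before a user can create a Pod that needs storage, a PV must already exist, so the user's needs cannot be met automatically. Moreover, in older k8s versions such as 1.9, a PV could still be deleted while in use, which made the data unsafe.

configMap resources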

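1. Why use a configMap?

To decouple configuration files from Pods.

2. How are configuration files stored in a configMap?

As key-value pairs:

key:value

file name:file contents

3. Configuration types a configMap supports

Key-value pairs defined directly

Key-value pairs created from files

4. Ways to create a configMap

Command line

Resource manifest

5. How configMap contents are passed into a Pod

As environment variables

As a mounted volume

6. Creating a configMap from the command line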

kubectl create configmap --help

kubectl create configmap nginx-config --from-literal=nginx_port=80 --from-literal=server_name=nginx.cookzhang.com

kubectl get cm

kubectl describe cm nginx-config
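Each `--from-literal=key=value` becomes one entry under the ConfigMap's `data`, and every value is stored as a string (so `nginx_port` is `"80"`, not a number).

The following Python sketch is hypothetical: it shows roughly what the command builds, not kubectl's code. It splits each literal at the first `=` and, as a design choice of this sketch, rejects duplicate keys.

```python
# Sketch of the object `kubectl create configmap NAME --from-literal=k=v ...`
# builds. configmap_from_literals() is hypothetical.
import json
from typing import Dict, List


def configmap_from_literals(name: str, literals: List[str]) -> Dict:
    data: Dict[str, str] = {}
    for literal in literals:
        key, sep, value = literal.partition("=")  # split at the first "="
        if not sep or not key:
            raise ValueError(f"expected key=value, got {literal!r}")
        if key in data:
            raise ValueError(f"duplicate key {key!r}")
        data[key] = value  # values are always strings
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": data}


if __name__ == "__main__":
    cm = configmap_from_literals(
        "nginx-config",
        ["nginx_port=80", "server_name=nginx.cookzhang.com"])
    print(json.dumps(cm, indent=2))
```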

7. Referencing a configMap through Pod environment variables

kubectl explain pod.spec.containers.env.valueFrom.configMapKeyRef

cat >nginx-cm.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx-cm
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.14.0
    ports:
    - name: http
      containerPort: 80
    env:
    - name: NGINX_PORT
      valueFrom:
        configMapKeyRef:
          name: nginx-config
          key: nginx_port
    - name: SERVER_NAME
      valueFrom:
        configMapKeyRef:
          name: nginx-config
          key: server_name
EOF

kubectl create -f nginx-cm.yaml

8. Check whether the Pod picked up the variables

[root@node1 ~/confimap]# kubectl exec -it nginx-cm -- /bin/bash

root@nginx-cm:~# echo ${NGINX_PORT}

80

root@nginx-cm:~# echo ${SERVER_NAME}

nginx.cookzhang.com

root@nginx-cm:~# printenv |egrep "NGINX_PORT|SERVER_NAME"

NGINX_PORT=80

SERVER_NAME=nginx.cookzhang.com

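Note:

With environment-variable injection, changes to the configMap do not take effect inside the Pod,

because the variables are only resolved when the Pod is created; once the Pod is running, its environment variables do not change.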
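Why environment variables never see later edits: the values are copied into the container's environment when it starts, and nothing updates them afterwards. Re-creating the Pod is what picks up the new value.

Here is a toy Python model of that behaviour. It is not kubelet code, and `start_container()` is a hypothetical helper.

```python
# Toy model (not kubelet code): configMapKeyRef values are copied into the
# container's environment at start, so later configMap edits do not reach
# the running process. start_container() is hypothetical.
from typing import Dict

ENV_REFS = {"NGINX_PORT": "nginx_port", "SERVER_NAME": "server_name"}


def start_container(configmap: Dict[str, str]) -> Dict[str, str]:
    # Resolve each env var once, at start time; the result is a copy.
    return {var: configmap[key] for var, key in ENV_REFS.items()}


if __name__ == "__main__":
    cm = {"nginx_port": "80", "server_name": "nginx.cookzhang.com"}
    env = start_container(cm)
    cm["nginx_port"] = "8080"                  # like `kubectl edit cm nginx-config`
    print(env["NGINX_PORT"])                   # 80: running Pod unchanged
    print(start_container(cm)["NGINX_PORT"])   # 8080: only a new Pod sees it
```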

9. Creating a configMap from a file

Create the config file:

cat >www.conf <<EOF
server {
    listen 80;
    server_name www.cookzy.com;
    location / {
        root /usr/share/nginx/html/www;
        index index.html index.htm;
    }
}
EOF

Create the configMap resource:

kubectl create configmap nginx-www --from-file=www.conf=./www.conf

Inspect the configMap:

kubectl get cm

kubectl describe cm nginx-www
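With `--from-file=[key=]path`, each file becomes one `data` entry. The key defaults to the file name, and the value is the whole file content. That is how `www.conf` ends up as a key in nginx-www.

Below is a hypothetical Python sketch of that mapping. It handles single files only, whereas kubectl also accepts directories.

```python
# Sketch of `kubectl create configmap NAME --from-file=[key=]path`: each file
# becomes one data entry (key defaults to the file name, value = file content).
# configmap_from_files() is hypothetical and handles single files only.
import os
import tempfile
from typing import Dict, List


def configmap_from_files(name: str, sources: List[str]) -> Dict:
    data: Dict[str, str] = {}
    for src in sources:
        key, sep, path = src.partition("=")
        if not sep:
            key, path = os.path.basename(src), src  # key defaults to file name
        with open(path, encoding="utf-8") as f:
            data[key] = f.read()
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": data}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        conf = os.path.join(d, "www.conf")
        with open(conf, "w", encoding="utf-8") as f:
            f.write("server {\n    listen 80;\n}\n")
        cm = configmap_from_files("nginx-www", [f"www.conf={conf}"])
        print(list(cm["data"]))  # ['www.conf']
```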

Write a Pod that consumes the configMap as a mounted volume:

cat >nginx-cm-volume.yaml <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx-cm
spec:
  containers:
  - name: nginx-pod
    image: nginx:1.14.0
    ports:
    - name: http
      containerPort: 80
    volumeMounts:
    - name: nginx-www
      mountPath: /etc/nginx/conf.d/
  volumes:
  - name: nginx-www
    configMap:
      name: nginx-www
      items:
      - key: www.conf
        path: www.conf
EOF

kubectl delete pod nginx-cm   # the env-variable Pod above uses the same name
kubectl create -f nginx-cm-volume.yaml
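A configMap volume turns keys into files under the mountPath:

- With `items`, only the listed keys are projected, each to its `path`.
- Without `items`, every key becomes a file named after the key.

Mounting over /etc/nginx/conf.d/ replaces that directory's contents, so the image's default.conf is no longer visible.

The Python sketch below is hypothetical. The real kubelet writes into a timestamped directory and swaps a `..data` symlink so updates appear atomically; the sketch skips that.

```python
# Sketch of how a configMap volume materialises keys as files under the
# mountPath. project_configmap() is hypothetical and skips the kubelet's
# atomic "..data" symlink swap.
import os
import tempfile
from typing import Dict, List, Optional


def project_configmap(data: Dict[str, str], mount_path: str,
                      items: Optional[List[Dict[str, str]]] = None) -> List[str]:
    # With items: only listed keys, each to its own path.
    # Without items: every key becomes a file named after the key.
    mapping = {i["key"]: i["path"] for i in items} if items else {k: k for k in data}
    for key, rel in mapping.items():
        target = os.path.join(mount_path, rel)
        os.makedirs(os.path.dirname(target), exist_ok=True)
        with open(target, "w", encoding="utf-8") as f:
            f.write(data[key])
    return sorted(mapping.values())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as conf_d:  # stands in for /etc/nginx/conf.d/
        files = project_configmap(
            {"www.conf": "server { listen 80; }\n"}, conf_d,
            items=[{"key": "www.conf", "path": "www.conf"}])
        print(files, sorted(os.listdir(conf_d)))
```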

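Test:

1. Enter the container and view the file

kubectl exec -it nginx-cm -- /bin/bash

cat /etc/nginx/conf.d/www.conf

2. Edit the configMap live

kubectl edit cm nginx-www

3. Enter the container again and check whether the config was updated automatically (the kubelet refreshes mounted configMaps periodically, so this can take a minute or so)

cat /etc/nginx/conf.d/www.conf

nginx -T   (tests and dumps the configuration files; the running nginx applies changes only after nginx -s reload)

Security authentication and RBAC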

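The API Server is the single entry point for access control.

Every operation on objects in the k8s platform goes through three security-related stages:

1. Authentication

Token authentication over HTTP: a bearer token

SSL/TLS authentication: kubectl uses two-way (mutual) certificate authentication

2. Authorization

RBAC: role-based access control

3. Admission control

Further supplements authorization; typically applied on create, delete, and proxy operations

Accounts for the k8s API fall into 2 categories:

1. Real human users: userAccount

2. Pod clients: serviceAccount; by default every Pod carries authentication credentials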

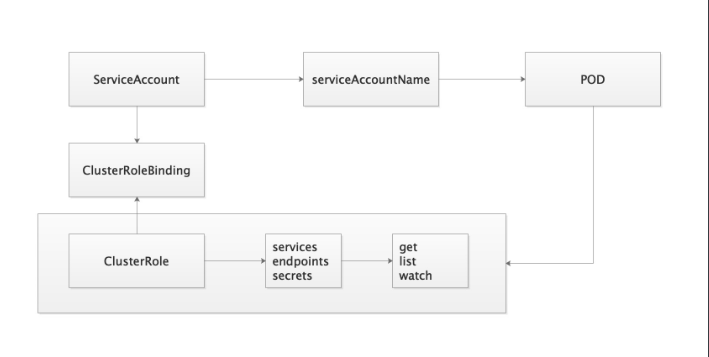

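RBAC is role-based access control:

it defines which permissions an account has.

Using traefik as an example:

1. Create the account, ServiceAccount: traefik-ingress-controller

2. Create the role, ClusterRole: traefik-ingress-controller

Role: permissions scoped to a single namespace

ClusterRole: permissions across the whole cluster (all namespaces and cluster-scoped resources)

3. Bind the account to the role: traefik-ingress-controller

RoleBinding: grants permissions within one namespace

ClusterRoleBinding: grants a ClusterRole cluster-wide

4. Reference the ServiceAccount when creating the Pod

serviceAccountName: traefik-ingress-controller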
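RBAC permissions are purely additive. A request is allowed if any rule bound to the account matches its API group, resource and verb, where `*` matches anything.

The following Python sketch is simplified and hypothetical: real RBAC also has `resourceNames` and `nonResourceURLs`. `TRAEFIK_RULES` copies the ClusterRole from traefik_rbac.yaml.

```python
# Simplified sketch of an RBAC check. is_allowed() is hypothetical;
# TRAEFIK_RULES copies the traefik ClusterRole rules.
from typing import Dict, List

TRAEFIK_RULES: List[Dict[str, List[str]]] = [
    {"apiGroups": [""], "resources": ["services", "endpoints", "secrets"],
     "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["extensions"], "resources": ["ingresses"],
     "verbs": ["get", "list", "watch"]},
]


def _matches(allowed: List[str], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: List[Dict[str, List[str]]], api_group: str,
               resource: str, verb: str) -> bool:
    # Allowed if any single rule matches group, resource and verb together.
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )


if __name__ == "__main__":
    print(is_allowed(TRAEFIK_RULES, "", "services", "watch"))    # True
    print(is_allowed(TRAEFIK_RULES, "", "services", "delete"))   # False
    print(is_allowed(TRAEFIK_RULES, "networking.k8s.io", "ingresses", "list"))  # False
```

The last case shows why a controller that reads Ingresses from the networking.k8s.io group would need an extra rule in its ClusterRole.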

Note!!!

Certificates of a kubeadm-installed k8s cluster are valid for only 1 year by default.

k8s dashboard

1. Official project page

https://github.com/kubernetes/dashboard

2. Download the manifest

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc5/aio/deploy/recommended.yaml

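3. Modify the manifest: in the kubernetes-dashboard Service (around line 39 of recommended.yaml), set the type to NodePort and fix the nodePort:

spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000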

4. Apply the manifest

kubectl create -f recommended.yaml

5. Create an admin account and apply it

cat > dashboard-admin.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

kubectl create -f dashboard-admin.yaml

6. Inspect the resources and get the token

kubectl get pod -n kubernetes-dashboard -o wide

kubectl get svc -n kubernetes-dashboard

kubectl get secret -n kubernetes-dashboard

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
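`kubectl describe secret` prints the token already decoded. With `kubectl get secret <name> -o json`, the Secret's `.data` values are base64-encoded and must be decoded first.

Be aware that from k8s 1.24 on, token Secrets are no longer created automatically for ServiceAccounts. There, `kubectl -n kubernetes-dashboard create token admin-user` issues a token instead.

The Python sketch below decodes the JSON form. The helper is hypothetical, and the sample token is fake.

```python
# Sketch: decode the ServiceAccount token from
#   kubectl -n kubernetes-dashboard get secret <name> -o json
# token_from_secret_json() is hypothetical; the demo token is fake.
import base64
import json


def token_from_secret_json(secret_json: str) -> str:
    secret = json.loads(secret_json)
    # Secret .data values are base64-encoded.
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")


if __name__ == "__main__":
    fake = json.dumps({"data": {"token": base64.b64encode(b"header.payload.sig").decode()}})
    print(token_from_secret_json(fake))  # header.payload.sig
```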

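7. Access it in a browser

https://10.0.0.11:30000

If Chrome will not open the page, switch to Firefox.

Trick: on Chrome's certificate-warning page, type thisisunsafe (there is no input box, just type it) to proceed.

Topics to study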

0.namespace

1.ServiceAccount

2.Service

3.Secret

4.configMap

5.RBAC

6.Deployment

Restarting k8s (binary install / kubeadm): components that need to be restarted

1.kube-apiserver

2.kube-proxy

3.kube-scheduler

4.kube-controller-manager

5.etcd

6.coredns

7.flannel

8.traefik

9.docker

10.kubelet