
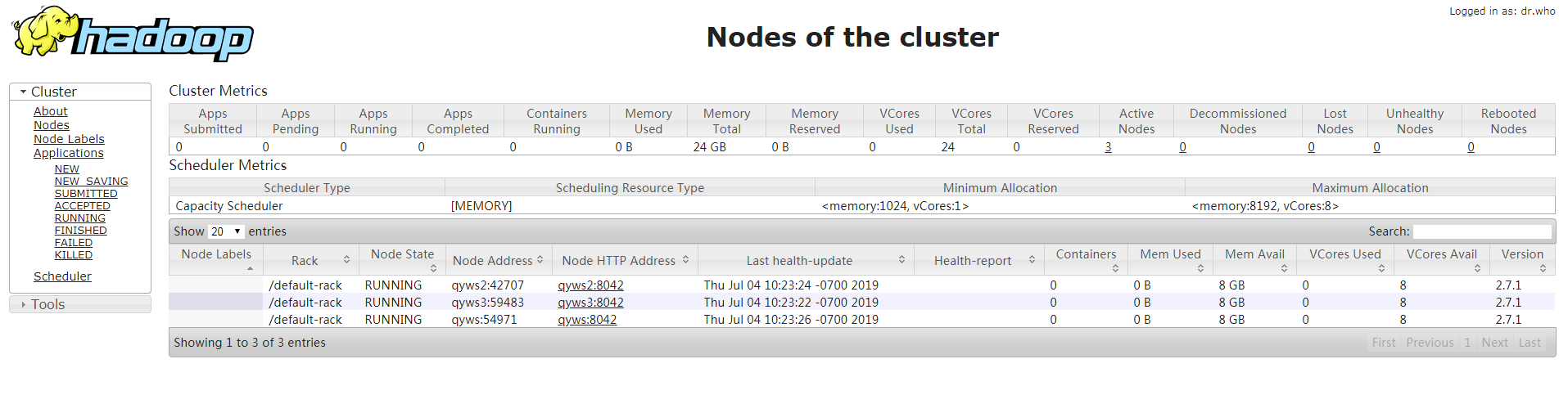

Building a fully distributed Hadoop cluster on three virtual machines

All software is installed under /home/software

VM operating system: CentOS 6.5

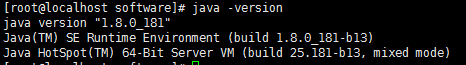

JDK version: 1.8.0_181

ZooKeeper version: 3.4.7

Hadoop version: 2.7.1

1. Install the JDK

Put the JDK tarball (no installer needed, it only has to be extracted) in /home/software:

cd /home/software

tar -xvf jdk-8u181-linux-x64.tar.gz

Configure the environment variables:

vim /etc/profile

Append the following to the end of the file:

export JAVA_HOME=/home/software/jdk1.8.0_181

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib/

export PATH=$PATH:$JAVA_HOME/bin

source /etc/profile
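Editing /etc/profile by hand on every run can leave duplicate exports. Below is a minimal sketch of an idempotent append, assuming a POSIX shell; `append_once` is a hypothetical helper name, not part of any tool.

```shell
# Hypothetical helper: append a line to a profile-style file only if that exact
# line is not already present, so re-running the setup does not duplicate exports.
append_once() {
    file=$1
    line=$2
    # -q: quiet, -x: whole-line match, -F: fixed string (so "$" is literal)
    # A missing file makes grep fail, so the line is appended and the file created.
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

For example, `append_once /etc/profile 'export JAVA_HOME=/home/software/jdk1.8.0_181'` followed by `source /etc/profile`. Single quotes keep `$PATH` and `$JAVA_HOME` unexpanded, so they are written to the file literally.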

Check that the setup worked by printing the JDK version:

java -version

2. Disable the firewall

service iptables stop

chkconfig iptables off

3. Set the hostname

vim /etc/sysconfig/network

HOSTNAME=qyws

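Set HOSTNAME to qyws, qyws2, and qyws3 on the three nodes respectively. The new hostname takes effect after the reboot in step 5.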

source /etc/sysconfig/network

4. Edit the hosts file

vim /etc/hosts

192.168.38.133 qyws

192.168.38.134 qyws2

192.168.38.135 qyws3
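Every node needs the same three entries, so they can be added by a small script instead of vim. A sketch assuming a POSIX shell and awk; `add_hosts` is a hypothetical helper, and it only checks whether a hostname is already mapped, not whether the mapped IP is correct.

```shell
# Hypothetical helper: append "IP hostname" pairs to a hosts file, skipping any
# hostname that already appears on a non-comment line. Arguments: FILE IP NAME [IP NAME ...]
add_hosts() {
    hosts_file=$1
    shift
    while [ "$#" -ge 2 ]; do
        ip=$1
        name=$2
        shift 2
        # awk exits 0 only if NAME matches one of fields 2..NF exactly
        if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}
```

Usage on each node: `add_hosts /etc/hosts 192.168.38.133 qyws 192.168.38.134 qyws2 192.168.38.135 qyws3`. Running it twice leaves the file unchanged.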

5. Reboot

reboot

6. Configure passwordless SSH login

ssh-keygen

ssh-copy-id root@qyws

ssh-copy-id root@qyws2

ssh-copy-id root@qyws3
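The same key setup can be scripted so that it runs without prompts. A minimal sketch; `run` and `setup_passwordless_ssh` are hypothetical helpers, and `KEY_FILE` and `DRY_RUN` are made-up variables for illustration. For root, the default key path `$HOME/.ssh/id_rsa` is `/root/.ssh/id_rsa`, the file that `dfs.ha.fencing.ssh.private-key-files` points to in hdfs-site.xml.

```shell
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create a key pair if none exists, then push the public key to every host given.
setup_passwordless_ssh() {
    key=${KEY_FILE:-$HOME/.ssh/id_rsa}
    if [ ! -f "$key" ]; then
        # -t rsa: key type, -N '': empty passphrase, -f: key path (no prompts)
        run ssh-keygen -t rsa -N '' -f "$key"
    fi
    for host in "$@"; do
        run ssh-copy-id "root@$host"
    done
}
```

Usage: `DRY_RUN=1 setup_passwordless_ssh qyws qyws2 qyws3` to review the commands, then run it again without `DRY_RUN` (each `ssh-copy-id` still asks for the root password once).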

7. Extract the ZooKeeper archive

tar -xf zookeeper-3.4.7.tar.gz

8. Set up the ZooKeeper ensemble

cd /home/software/zookeeper-3.4.7/conf

cp zoo_sample.cfg zoo.cfg

vim zoo.cfg

At line 14, set dataDir to:

dataDir=/home/software/zookeeper-3.4.7/tmp

Append to the end of the file:

server.1=192.168.38.133:2888:3888

server.2=192.168.38.134:2888:3888

server.3=192.168.38.135:2888:3888
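The `server.N` lines follow a fixed pattern: N is the node's myid, 2888 is the port followers use to connect to the leader, and 3888 is used for leader election. A sketch that generates them from an IP list, numbering from 1 in argument order; `zk_server_lines` is a hypothetical helper.

```shell
# Hypothetical helper: print one "server.N=IP:2888:3888" line per IP, N starting at 1.
zk_server_lines() {
    n=1
    for ip in "$@"; do
        printf 'server.%d=%s:2888:3888\n' "$n" "$ip"
        n=$((n + 1))
    done
}
```

Usage: `zk_server_lines 192.168.38.133 192.168.38.134 192.168.38.135 >> zoo.cfg`. The argument order then fixes which myid each node must get.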

9. Copy the configured ZooKeeper to the other two nodes

scp -r zookeeper-3.4.7 root@qyws2:/home/software/

scp -r zookeeper-3.4.7 root@qyws3:/home/software/

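10. On each node, create a tmp directory under the ZooKeeper directory and a myid file inside it

cd /home/software/zookeeper-3.4.7

mkdir tmp

cd tmp

vim myid

Set myid to 1, 2, and 3 on the three nodes respectively, matching the server.N entries in zoo.cfg.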
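Since scp copies an identical tree to every node, myid is the one file that must differ per node. A sketch that derives it from the hostname, assuming the qyws/qyws2/qyws3 naming from step 3 and the server.N order in zoo.cfg; `write_myid` is a hypothetical helper.

```shell
# Hypothetical helper: map this guide's hostnames to ZooKeeper ids and write
# the id into <data_dir>/myid. Unknown hostnames are rejected.
write_myid() {
    host=$1
    data_dir=$2
    case "$host" in
        qyws)  id=1 ;;
        qyws2) id=2 ;;
        qyws3) id=3 ;;
        *) echo "unknown host: $host" >&2; return 1 ;;
    esac
    mkdir -p "$data_dir"
    # myid contains only the number matching server.<id> in zoo.cfg
    echo "$id" > "$data_dir/myid"
}
```

Usage on each node: `write_myid "$(hostname)" /home/software/zookeeper-3.4.7/tmp`.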

11. Extract the Hadoop archive

tar -xvf hadoop-2.7.1_64bit.tar.gz

12. Edit hadoop-env.sh

cd /home/software/hadoop-2.7.1/etc/hadoop

vim hadoop-env.sh

At line 25:

export JAVA_HOME=/home/software/jdk1.8.0_181

At line 33:

export HADOOP_CONF_DIR=/home/software/hadoop-2.7.1/etc/hadoop

source hadoop-env.sh

13. Edit core-site.xml (the properties below go inside the file's configuration element)

<!-- Specify the HDFS nameservice: one logical name for the whole cluster -->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://ns</value>
</property>
<!-- Directory for Hadoop's temporary data -->
<property>
  <name>hadoop.tmp.dir</name>
  <value>/home/software/hadoop-2.7.1/tmp</value>
</property>
<!-- ZooKeeper quorum addresses -->
<property>
  <name>ha.zookeeper.quorum</name>
  <value>qyws:2181,qyws2:2181,qyws3:2181</value>
</property>
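Hand-editing long XML files is error-prone. A sketch of a generator that prints a complete Hadoop configuration file from name/value pairs; `hadoop_conf` is a hypothetical helper, and it writes values verbatim without XML escaping (none of the values in this guide need escaping).

```shell
# Hypothetical helper: print a Hadoop <configuration> document.
# Arguments are alternating property names and values: NAME VALUE [NAME VALUE ...]
hadoop_conf() {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
        printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
        shift 2
    done
    echo '</configuration>'
}
```

Usage: `hadoop_conf fs.defaultFS hdfs://ns hadoop.tmp.dir /home/software/hadoop-2.7.1/tmp ha.zookeeper.quorum qyws:2181,qyws2:2181,qyws3:2181 > core-site.xml`. Note that this overwrites the file, including its license header and comments.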

14. Edit hdfs-site.xml

<!-- Set the HDFS nameservice to ns; it must match the name used in core-site.xml -->
<property>
  <name>dfs.nameservices</name>
  <value>ns</value>
</property>
<!-- The ns nameservice has two NameNodes, nn1 and nn2 -->
<property>
  <name>dfs.ha.namenodes.ns</name>
  <value>nn1,nn2</value>
</property>
<!-- RPC address of nn1 -->
<property>
  <name>dfs.namenode.rpc-address.ns.nn1</name>
  <value>qyws:9000</value>
</property>
<!-- HTTP address of nn1 -->
<property>
  <name>dfs.namenode.http-address.ns.nn1</name>
  <value>qyws:50070</value>
</property>
<!-- RPC address of nn2 -->
<property>
  <name>dfs.namenode.rpc-address.ns.nn2</name>
  <value>qyws2:9000</value>
</property>
<!-- HTTP address of nn2 -->
<property>
  <name>dfs.namenode.http-address.ns.nn2</name>
  <value>qyws2:50070</value>
</property>
<!-- Where the NameNode edit log is shared on the JournalNodes, so the standby NameNode can read it from the JournalNode cluster and stay a hot standby -->
<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://qyws:8485;qyws2:8485;qyws3:8485/ns</value>
</property>
<!-- Local directory where each JournalNode stores its data -->
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/home/software/hadoop-2.7.1/tmp/journal</value>
</property>
<!-- Enable automatic failover when a NameNode fails -->
<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<!-- Failover proxy provider that clients use to find the active NameNode -->
<property>
  <name>dfs.client.failover.proxy.provider.ns</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<!-- Fencing method -->
<property>
  <name>dfs.ha.fencing.methods</name>
  <value>sshfence</value>
</property>
<!-- sshfence requires passwordless SSH; this is the private key it uses -->
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/root/.ssh/id_rsa</value>
</property>
<!-- NameNode metadata directory; optional, defaults to a path under hadoop.tmp.dir -->
<property>
  <name>dfs.namenode.name.dir</name>
  <value>file:///home/software/hadoop-2.7.1/tmp/hdfs/name</value>
</property>
<!-- DataNode block storage directory; optional, defaults to a path under hadoop.tmp.dir -->
<property>
  <name>dfs.datanode.data.dir</name>
  <value>file:///home/software/hadoop-2.7.1/tmp/hdfs/data</value>
</property>
<!-- Replication factor -->
<property>
  <name>dfs.replication</name>
  <value>3</value>
</property>
<!-- Permission checking; false disables it so any user can operate on HDFS -->
<property>
  <name>dfs.permissions</name>
  <value>false</value>
</property>
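With this many keys, a typo in a single property name can quietly break HA. A rough check that every required name appears in a file; `missing_props` is a hypothetical helper doing a plain text search, so it assumes the `<name>...</name>` formatting used here and would also count a commented-out property as present.

```shell
# Hypothetical check: print "missing: NAME" for each property name not found in
# FILE and return non-zero if any are missing. Text search only, not an XML parser.
missing_props() {
    file=$1
    shift
    status=0
    for prop in "$@"; do
        if ! grep -qF "<name>$prop</name>" "$file"; then
            echo "missing: $prop"
            status=1
        fi
    done
    return "$status"
}
```

Usage: `missing_props hdfs-site.xml dfs.nameservices dfs.ha.namenodes.ns dfs.namenode.shared.edits.dir dfs.ha.automatic-failover.enabled dfs.client.failover.proxy.provider.ns`.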

15. Edit mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

16. Edit yarn-site.xml

<!-- Enable ResourceManager high availability -->
<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>
<!-- IDs of the two ResourceManagers -->
<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>
<!-- Host of rm1 -->
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>qyws</value>
</property>
<!-- Host of rm2 -->
<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>qyws3</value>
</property>
<!-- Enable ResourceManager state recovery -->
<property>
  <name>yarn.resourcemanager.recovery.enabled</name>
  <value>true</value>
</property>
<!-- State store implementation used for recovery -->
<property>
  <name>yarn.resourcemanager.store.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
</property>
<!-- ZooKeeper addresses -->
<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>qyws:2181,qyws2:2181,qyws3:2181</value>
</property>
<!-- Cluster ID (alias) of the YARN cluster -->
<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>ns-yarn</value>
</property>
<!-- Auxiliary service each NodeManager loads at startup: the MapReduce shuffle service -->
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<!-- ResourceManager host -->
<property>
  <name>yarn.resourcemanager.hostname</name>
  <value>qyws3</value>
</property>

17. Edit the slaves file (one worker hostname per line)

vim /home/software/hadoop-2.7.1/etc/hadoop/slaves

qyws

qyws2

qyws3

18. Copy the configured Hadoop directory to the other nodes

scp -r hadoop-2.7.1 root@qyws2:/home/software/

scp -r hadoop-2.7.1 root@qyws3:/home/software/
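Steps 9 and 18 repeat the same copy once per node. A minimal sketch that copies a directory to the same parent path on every listed node; `sync_to_nodes` and `DRY_RUN` are hypothetical names, and it assumes the passwordless root SSH from step 6.

```shell
# Hypothetical helper: scp -r SRC to the same absolute parent directory on each host.
# DRY_RUN=1 prints the scp commands instead of running them.
sync_to_nodes() {
    src=$1
    shift
    # Absolute path of the directory containing SRC (computed in a subshell)
    parent=$(cd "$(dirname "$src")" && pwd)
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $src root@$host:$parent/"
        else
            scp -r "$src" "root@$host:$parent/"
        fi
    done
}
```

Usage: `cd /home/software && sync_to_nodes hadoop-2.7.1 qyws2 qyws3` (or `zookeeper-3.4.7` for step 9).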

19. Configure the environment variables (on all three nodes)

vim /etc/profile

Append to the end of the file:

export HADOOP_HOME=/home/software/hadoop-2.7.1

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

source /etc/profile

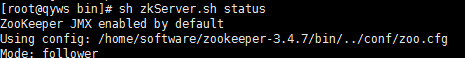

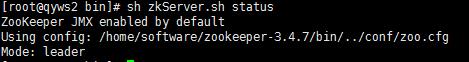

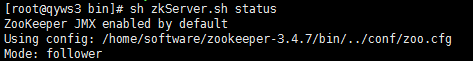

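20. Start ZooKeeper on all three nodes

cd /home/software/zookeeper-3.4.7/bin

sh zkServer.sh start

Check the ZooKeeper status (one node should report Mode: leader and the other two Mode: follower):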

sh zkServer.sh status
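In ZooKeeper 3.4, `zkServer.sh status` ends with a line such as `Mode: follower`. A sketch that extracts the role and checks that the ensemble has exactly one leader; `zk_mode` and `check_ensemble` are hypothetical helpers.

```shell
# Hypothetical helper: read zkServer.sh status output on stdin, print the mode.
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Hypothetical check: succeed only if exactly one of the given modes is "leader".
check_ensemble() {
    leaders=0
    for mode in "$@"; do
        if [ "$mode" = leader ]; then
            leaders=$((leaders + 1))
        fi
    done
    [ "$leaders" -eq 1 ]
}
```

Usage from one node (non-interactive SSH does not read /etc/profile, so it is sourced first): `check_ensemble $(for h in qyws qyws2 qyws3; do ssh root@$h '. /etc/profile; /home/software/zookeeper-3.4.7/bin/zkServer.sh status' 2>&1 | zk_mode; done) && echo "ensemble OK"`.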

21. Initialize the HA state in ZooKeeper (on the first node only):

hdfs zkfc -formatZK

22. Start a JournalNode on every node:

hadoop-daemon.sh start journalnode

23. Format the NameNode on the first node:

hdfs namenode -format

24. Start the NameNode on the first node:

hadoop-daemon.sh start namenode

25. On the second node, copy the formatted metadata from the first NameNode instead of formatting again:

hdfs namenode -bootstrapStandby

26. Start the NameNode on the second node:

hadoop-daemon.sh start namenode

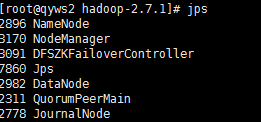

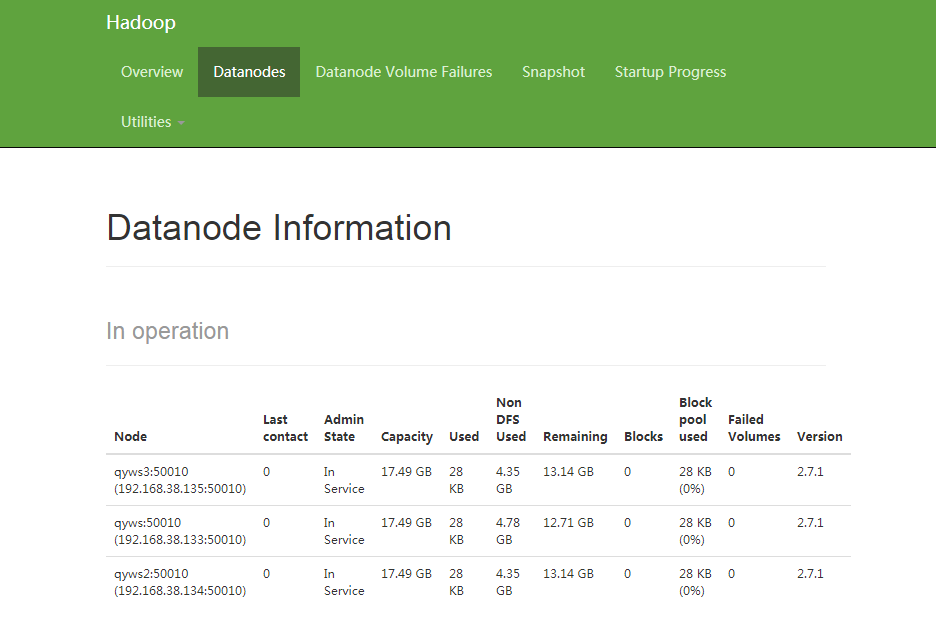

27. Start a DataNode on all three nodes:

hadoop-daemon.sh start datanode

28. Start zkfc (the ZKFailoverController) on the first and second nodes:

hadoop-daemon.sh start zkfc

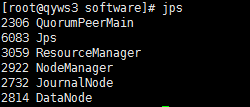

29. Start YARN on the first node (this starts rm1 on qyws plus a NodeManager on every host listed in slaves):

start-yarn.sh

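30. Start the second ResourceManager (rm2) on the third node, since start-yarn.sh only starts the ResourceManager on the machine it runs on: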

yarn-daemon.sh start resourcemanager
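The order of steps 20 to 30 matters: ZooKeeper and the JournalNodes must be running before the NameNode is formatted, and zkfc only starts once the HA state exists in ZooKeeper. A minimal sketch that drives the first-time start-up from one machine over SSH; `ZK_BIN`, `remote`, `first_start` and `DRY_RUN` are hypothetical names, the 10-second wait is an arbitrary assumption, and the format commands must not be re-run on a cluster that already holds data.

```shell
# Hypothetical names throughout; paths and hostnames follow this guide.
ZK_BIN=/home/software/zookeeper-3.4.7/bin

# Run a command on a node as root; DRY_RUN=1 only prints it.
remote() {
    host=$1
    shift
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "[$host] $*"
    else
        # Non-interactive SSH does not read /etc/profile, so load JAVA_HOME/PATH first
        ssh "root@$host" ". /etc/profile; $*"
    fi
}

# First start only: formatting an existing cluster destroys its metadata.
first_start() {
    for h in qyws qyws2 qyws3; do remote "$h" "$ZK_BIN/zkServer.sh start"; done
    # Give the ensemble time to elect a leader (skipped in dry-run mode)
    [ "${DRY_RUN:-0}" = 1 ] || sleep 10
    remote qyws "hdfs zkfc -formatZK"
    for h in qyws qyws2 qyws3; do remote "$h" "hadoop-daemon.sh start journalnode"; done
    remote qyws "hdfs namenode -format"
    remote qyws "hadoop-daemon.sh start namenode"
    remote qyws2 "hdfs namenode -bootstrapStandby"
    remote qyws2 "hadoop-daemon.sh start namenode"
    for h in qyws qyws2 qyws3; do remote "$h" "hadoop-daemon.sh start datanode"; done
    remote qyws "hadoop-daemon.sh start zkfc"
    remote qyws2 "hadoop-daemon.sh start zkfc"
    remote qyws "start-yarn.sh"
    remote qyws3 "yarn-daemon.sh start resourcemanager"
}
```

Running `DRY_RUN=1 first_start` first prints the 18 commands in order, tagged with the host each would run on, so the sequence can be reviewed before anything is executed.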

31. Check the running services

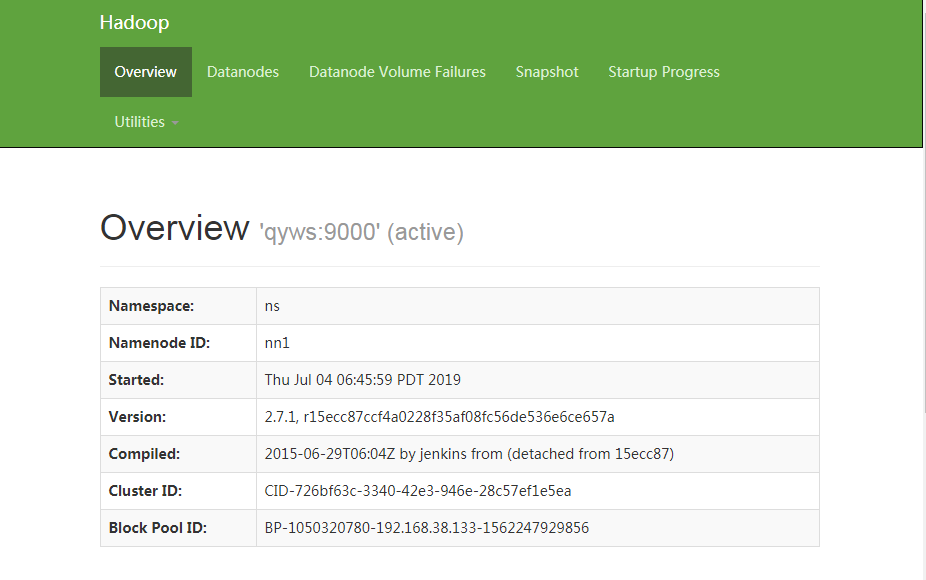
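With the layout in this guide, `jps` on qyws should list QuorumPeerMain, JournalNode, NameNode, DataNode, DFSZKFailoverController, ResourceManager and NodeManager; qyws2 the same without ResourceManager; qyws3 QuorumPeerMain, JournalNode, DataNode, ResourceManager and NodeManager. A sketch that compares `jps` output against such a list; `missing_daemons` is a hypothetical helper.

```shell
# Hypothetical helper: read `jps` output ("PID ClassName" per line) on stdin,
# print each expected daemon that is not running, return non-zero if any are missing.
missing_daemons() {
    running=$(awk '{print $2}')
    status=0
    for d in "$@"; do
        if ! printf '%s\n' "$running" | grep -qxF "$d"; then
            echo "$d"
            status=1
        fi
    done
    return "$status"
}
```

Usage on qyws: `jps | missing_daemons QuorumPeerMain JournalNode NameNode DataNode DFSZKFailoverController ResourceManager NodeManager`.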

32. View the NameNode on the first node

Open http://192.168.38.133:50070 in a browser

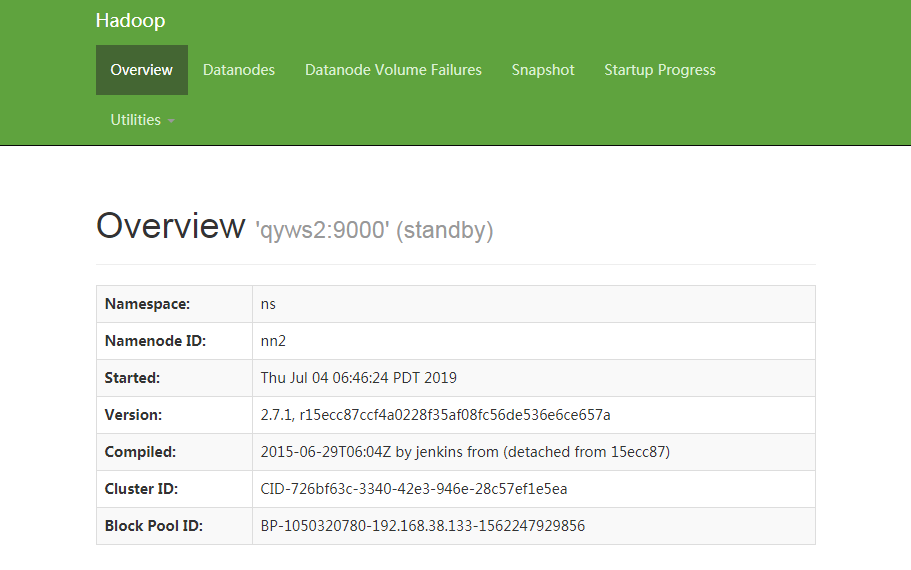

View the NameNode on the second node (one NameNode should show active, the other standby)

Open http://192.168.38.134:50070 in a browser
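The same active/standby check can be scripted: `hdfs haadmin -getServiceState <id>` prints `active` or `standby` for a NameNode ID. A sketch; `exactly_one_active` is a hypothetical helper.

```shell
# Hypothetical check: read HA states on stdin, one per line, and succeed only
# if exactly one line is "active".
exactly_one_active() {
    # grep -c prints 0 (and exits 1) when nothing matches; "|| true" keeps the 0
    n=$(grep -cx active || true)
    [ "$n" -eq 1 ]
}
```

Usage: `for nn in nn1 nn2; do hdfs haadmin -getServiceState "$nn"; done | exactly_one_active && echo "HDFS HA OK"`. The ResourceManagers can be checked the same way with `yarn rmadmin -getServiceState rm1` and `rm2`.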

33. Open the YARN ResourceManager web UI at http://192.168.38.133:8088