
1. Install open-vm-tools

sudo apt-get install open-vm-tools

2. Install OpenJDK

sudo apt-get install openjdk-8-jdk

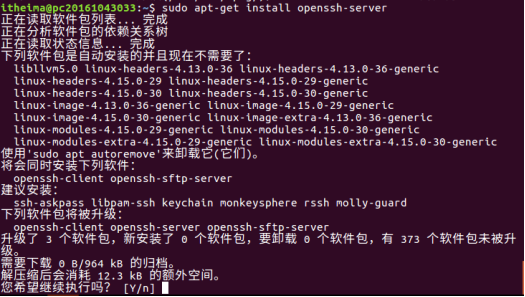
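The JAVA_HOME value used later in this guide can be cross-checked against the JDK that was just installed. The following is a minimal sketch, assuming javac is on the PATH after the install; the helper name java_home_from_binary is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: strip the trailing /bin/<tool> from a JDK binary path
# to get the directory that JAVA_HOME should point at.
java_home_from_binary() {
    local bin_path="$1"
    dirname "$(dirname "$bin_path")"
}

# Resolve the real javac location (following symlinks) if a JDK is installed.
if command -v javac >/dev/null 2>&1; then
    java_home_from_binary "$(readlink -f "$(command -v javac)")"
else
    echo "javac not found; install openjdk-8-jdk first" >&2
fi
```

If the printed directory differs from the JAVA_HOME exported in step 7, the printed one is probably the value to use.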

3. Install and configure SSH

sudo apt-get install openssh-server

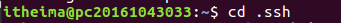

4. After the first SSH login, a .ssh folder exists in the current user's home directory. Change into it: cd ~/.ssh

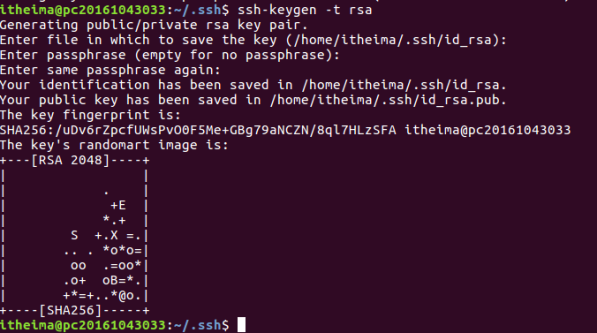

ssh-keygen -t rsa

Press Enter at every prompt to accept the defaults.

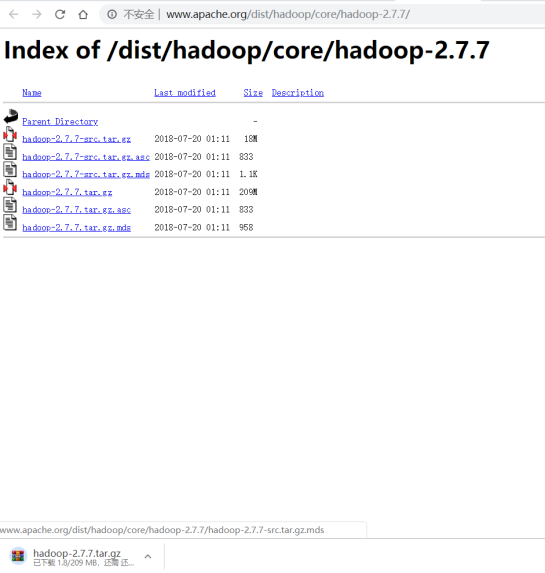

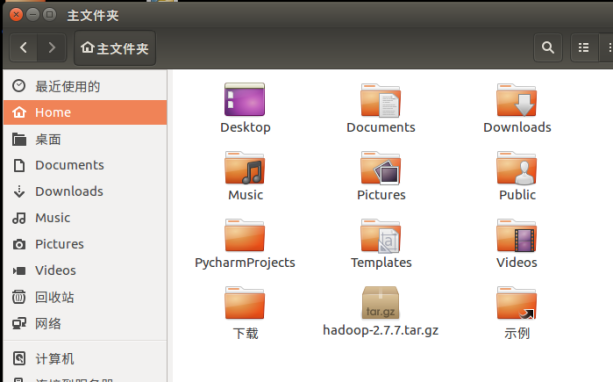
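The Hadoop start scripts connect to localhost over SSH, so a single-node setup usually also needs the new public key authorized for the current user. The source does not show this step; the sketch below is one common way to do it, assuming the default key path ~/.ssh/id_rsa.pub. The function name authorize_key is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to an authorized_keys file once,
# then tighten permissions so sshd accepts the file.
authorize_key() {
    local pub_key="$1" auth_file="$2"
    [ -f "$pub_key" ] || { echo "missing $pub_key" >&2; return 1; }
    touch "$auth_file"
    # -x whole line, -F fixed string: skip the append if the key is already listed.
    grep -qxF -f "$pub_key" "$auth_file" || cat "$pub_key" >> "$auth_file"
    chmod 600 "$auth_file"
}

# Typical call on the machine from this guide (assumed default paths):
# authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
# ssh localhost   # should now log in without asking for a password
```

Running the helper twice leaves a single copy of the key in the file, so it is safe to repeat.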

5. Download Hadoop 2.7.7, extract it, rename the extracted directory to hadoop, and move it under /usr/local (mind the permissions)

sudo mv ~/hadoop-2.7.7 /usr/local/hadoop
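The extraction itself is not shown in the source. A minimal sketch follows, assuming the release archive is named hadoop-2.7.7.tar.gz and was saved to ~/Downloads (both are assumptions); extract_release is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack a gzip-compressed tarball into a target directory.
extract_release() {
    local tarball="$1" target_dir="$2"
    mkdir -p "$target_dir" && tar -xzf "$tarball" -C "$target_dir"
}

# Assumed download location; adjust to wherever the archive was saved:
# extract_release ~/Downloads/hadoop-2.7.7.tar.gz ~   # yields ~/hadoop-2.7.7
# sudo mv ~/hadoop-2.7.7 /usr/local/hadoop
```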


6. Change the owner of the hadoop folder under /usr/local/

sudo chown -R <current-username> /usr/local/hadoop
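To confirm that the ownership change reached every file, the tree can be scanned for paths that still belong to someone else. This is a sketch only; not_owned_by is a hypothetical helper, and it relies on the standard find test -user, which accepts a user name or a numeric UID.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every path under a directory that is NOT owned
# by the given user (name or numeric UID).
not_owned_by() {
    local dir="$1" user="$2"
    find "$dir" ! -user "$user" -print
}

# On the machine from this guide:
# not_owned_by /usr/local/hadoop "$(id -un)"   # no output means chown -R covered everything
```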


7. Set the environment variables

(1) Edit ~/.bashrc (sudo is not needed for a file in your own home directory): gedit ~/.bashrc

#~/.bashrc

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

# Environment variables needed to package Hadoop programs

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH

# Apply the environment variables (run this in the terminal after saving the file)

source ~/.bashrc
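A quick way to check that the new settings took effect is to test whether the Hadoop directories are now on the PATH. The sketch below assumes a Bash-compatible shell; path_has_dir is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given directory is one of the PATH entries.
path_has_dir() {
    case ":$PATH:" in
        *":$1:"*) return 0 ;;
        *) return 1 ;;
    esac
}

# After `source ~/.bashrc` on the machine from this guide:
# path_has_dir "$HADOOP_HOME/bin" && hadoop version
```

Wrapping PATH in colons before matching avoids false hits on directories that merely share a prefix, such as /usr/local/hadoop versus /usr/local/hadoop/bin.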

(2) Edit /usr/local/hadoop/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

(3) Edit /usr/local/hadoop/etc/hadoop/core-site.xml

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

(4) Edit /usr/local/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hadoop_data/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hadoop_data/hdfs/datanode</value>

</property>

</configuration>
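The two storage directories named in this file can be created up front so that permission problems show up before the daemons start. This is an optional precaution rather than a step from the source; make_hdfs_dirs is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the namenode and datanode directories
# under a common base directory.
make_hdfs_dirs() {
    local base="$1"
    mkdir -p "$base/namenode" "$base/datanode"
}

# Matching the paths configured in hdfs-site.xml in this guide:
# make_hdfs_dirs /usr/local/hadoop/hadoop_data/hdfs
```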

(5) Edit /usr/local/hadoop/etc/hadoop/mapred-site.xml (created by renaming mapred-site.xml.template)

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
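Copying the template instead of renaming it keeps the original file around for reference. The sketch below assumes the configuration directory used throughout this guide; ensure_mapred_site is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create mapred-site.xml from its template unless the
# file already exists, so an edited copy is never overwritten.
ensure_mapred_site() {
    local conf_dir="$1"
    [ -f "$conf_dir/mapred-site.xml" ] && return 0
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
}

# On the machine from this guide:
# ensure_mapred_site /usr/local/hadoop/etc/hadoop
```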

(6) Edit /usr/local/hadoop/etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

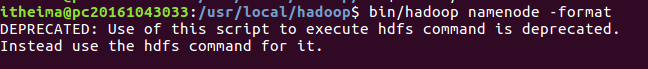

8. Format the HDFS file system

hdfs namenode -format
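Formatting is meant to happen once: running it again wipes the NameNode metadata and assigns a new cluster ID, which existing DataNode data will no longer match. A small guard can prevent an accidental second format. The sketch below relies on the fact that a formatted name directory contains current/VERSION; namenode_is_formatted is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given NameNode directory has already
# been formatted (a formatted directory contains current/VERSION).
namenode_is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Using the name directory configured in hdfs-site.xml in this guide:
# namenode_is_formatted /usr/local/hadoop/hadoop_data/hdfs/namenode || hdfs namenode -format
```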

9. Start Hadoop

start-all.sh (or run start-dfs.sh and then start-yarn.sh)

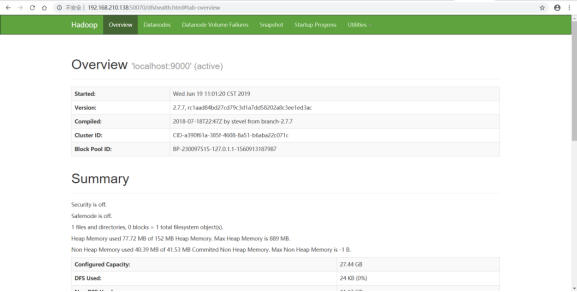
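Whether all daemons came up can be checked with jps, the process lister that ships with the JDK. The sketch below reads jps output and prints the names of the daemons a single-node HDFS plus YARN setup normally runs that are absent; missing_daemons is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output on stdin and print each expected
# Hadoop daemon that does not appear in it.
missing_daemons() {
    local jps_output daemon
    jps_output="$(cat)"
    for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # -w matches whole words, so "NameNode" does not match "SecondaryNameNode".
        printf '%s\n' "$jps_output" | grep -qw "$daemon" || echo "$daemon"
    done
}

# On the machine from this guide:
# jps | missing_daemons   # no output means all five daemons are running
```

If a daemon is reported missing, its log file under the logs directory of the Hadoop installation is the usual place to look next.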

10. Open in a browser