
Spark: http://spark.apache.org/

http://spark.apache.org/docs/latest/

http://spark.apache.org/docs/2.2.0/quick-start.html

http://spark.apache.org/docs/2.2.0/spark-standalone.html

http://spark.apache.org/docs/2.2.0/running-on-yarn.html

http://spark.apache.org/docs/2.2.0/configuration.html

http://spark.apache.org/docs/2.2.0/structured-streaming-programming-guide.html

http://spark.apache.org/docs/2.2.0/streaming-programming-guide.html

Spark Streaming changed starting with version 2.2.1:

http://spark.apache.org/docs/2.2.1/streaming-programming-guide.html

Error message:

System memory 259522560 must be at least 4.718592E8. Please use a larger heap size.

While developing a Spark project in Eclipse and trying to run the program directly, I ran into the following error:

ERROR SparkContext: Error initializing SparkContext.

java.lang.IllegalArgumentException: System memory 468189184 must be at least 4.718592E8. Please use a larger heap size.

Clearly, the JVM was not given enough memory, so the SparkContext could not start. But what exactly needs to be set?

Looking at the Spark source code (getMaxMemory in UnifiedMemoryManager), the check is written like this:

```scala
/**
 * Return the total amount of memory shared between execution and storage, in bytes.
 */
private def getMaxMemory(conf: SparkConf): Long = {
  val systemMemory = conf.getLong("spark.testing.memory", Runtime.getRuntime.maxMemory)
  val reservedMemory = conf.getLong("spark.testing.reservedMemory",
    if (conf.contains("spark.testing")) 0 else RESERVED_SYSTEM_MEMORY_BYTES)
  val minSystemMemory = reservedMemory * 1.5
  if (systemMemory < minSystemMemory) {
    throw new IllegalArgumentException(s"System memory $systemMemory must " +
      s"be at least $minSystemMemory. Please use a larger heap size.")
  }
  val usableMemory = systemMemory - reservedMemory
  val memoryFraction = conf.getDouble("spark.memory.fraction", 0.75)
  (usableMemory * memoryFraction).toLong
}
```

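So the key line is val systemMemory = conf.getLong("spark.testing.memory", Runtime.getRuntime.maxMemory): if spark.testing.memory is set, Spark uses its value as the system memory; otherwise it falls back to the JVM's maximum heap size (Runtime.getRuntime.maxMemory).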
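When spark.testing is not set, Spark's `RESERVED_SYSTEM_MEMORY_BYTES` is 300 MB (300 × 1024 × 1024 bytes), so the threshold quoted in the error message can be worked out directly:

$$
\text{minSystemMemory} = 1.5 \times \underbrace{300 \times 1024^{2}}_{\texttt{RESERVED\_SYSTEM\_MEMORY\_BYTES}} = 1.5 \times 314\,572\,800 = 471\,859\,200 \approx 4.718592 \times 10^{8}
$$

Both heap sizes reported above (259522560 and 468189184 bytes) fall below this value, so the check throws. 512 MB (536870912 bytes) clears it, which is why "above 512 MB" is a safe rule of thumb.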

The definition and description of conf.getLong():

- getLong(key: String, defaultValue: Long): Long

- Get a parameter as a long, falling back to a default if not set

So we should set spark.testing.memory in the conf.
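To see why setting that one key is enough, here is a minimal, Spark-free sketch that re-creates the check quoted above. It is an illustration under stated assumptions, not Spark's code: a plain `Map[String, String]` stands in for `SparkConf`, and `MemoryCheck`, `getLong`, `getDouble` and `maxMemory` are hypothetical names. The 300 MB reserve, the 1.5 factor and the 0.75 fraction are taken from the excerpt.

```scala
// Hypothetical, Spark-free re-creation of the check in getMaxMemory.
// A plain Map stands in for SparkConf; this is NOT Spark's actual API.
object MemoryCheck {
  // 300 MB, the value of Spark's RESERVED_SYSTEM_MEMORY_BYTES
  val ReservedSystemMemoryBytes: Long = 300L * 1024 * 1024

  // Mimics SparkConf.getLong: the key's value if present, else the default
  def getLong(conf: Map[String, String], key: String, default: Long): Long =
    conf.get(key).map(_.toLong).getOrElse(default)

  def getDouble(conf: Map[String, String], key: String, default: Double): Double =
    conf.get(key).map(_.toDouble).getOrElse(default)

  // Returns the usable unified memory in bytes, or throws as the excerpt does
  def maxMemory(conf: Map[String, String], jvmMaxMemory: Long): Long = {
    val systemMemory = getLong(conf, "spark.testing.memory", jvmMaxMemory)
    val reservedMemory = getLong(conf, "spark.testing.reservedMemory",
      if (conf.contains("spark.testing")) 0L else ReservedSystemMemoryBytes)
    val minSystemMemory = reservedMemory * 1.5
    if (systemMemory < minSystemMemory) {
      throw new IllegalArgumentException(s"System memory $systemMemory must " +
        s"be at least $minSystemMemory. Please use a larger heap size.")
    }
    val usableMemory = systemMemory - reservedMemory
    val memoryFraction = getDouble(conf, "spark.memory.fraction", 0.75)
    (usableMemory * memoryFraction).toLong
  }

  def main(args: Array[String]): Unit = {
    val smallHeap = 468189184L // heap size from the error message above
    // Without the override, the small JVM heap fails the check
    try maxMemory(Map.empty, smallHeap)
    catch { case e: IllegalArgumentException => println(e.getMessage) }
    // With spark.testing.memory set, the JVM heap value is ignored
    println(maxMemory(Map("spark.testing.memory" -> "2147480000"), smallHeap))
  }
}
```

Running `main` first prints the same message as the error in this post, then the usable memory computed from the overridden value instead of the real heap.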

Through trial and error, I found two places where it can be set:

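1. In your own source code, right after creating the conf, add:

   ```scala
   import org.apache.spark.SparkConf

   val conf = new SparkConf().setAppName("word count")
   conf.set("spark.testing.memory", "2147480000") // any value above 512 MB is enough
   ```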

2. In Eclipse's Run Configuration, open the Arguments tab and add the following line under VM arguments (again, any value above 512 MB is enough):

-Dspark.testing.memory=1073741824

Other parameters can also be set here at launch time, for example -Dspark.master=spark://hostname:7077.

Run the program again and the error no longer appears.
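The VM-argument route works because `new SparkConf()` (which defaults to `loadDefaults = true`) copies every JVM system property whose name starts with `spark.` into the configuration, and `-D` flags are JVM system properties. The sketch below imitates that lookup in plain Scala so it runs without Spark; `SystemPropsConf` and `sparkPropsFromSystem` are hypothetical names, not part of Spark's API.

```scala
// Hypothetical sketch: collect spark.* JVM system properties into a config map.
// It mimics, but is not, SparkConf's own loading of system properties.
object SystemPropsConf {
  def sparkPropsFromSystem(): Map[String, String] =
    sys.props.toMap.filter { case (key, _) => key.startsWith("spark.") }

  def main(args: Array[String]): Unit = {
    // Same effect as putting -Dspark.testing.memory=1073741824 in VM arguments
    System.setProperty("spark.testing.memory", "1073741824")
    val conf = sparkPropsFromSystem()
    println(conf.get("spark.testing.memory")) // prints Some(1073741824)
  }
}
```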

Another solution:

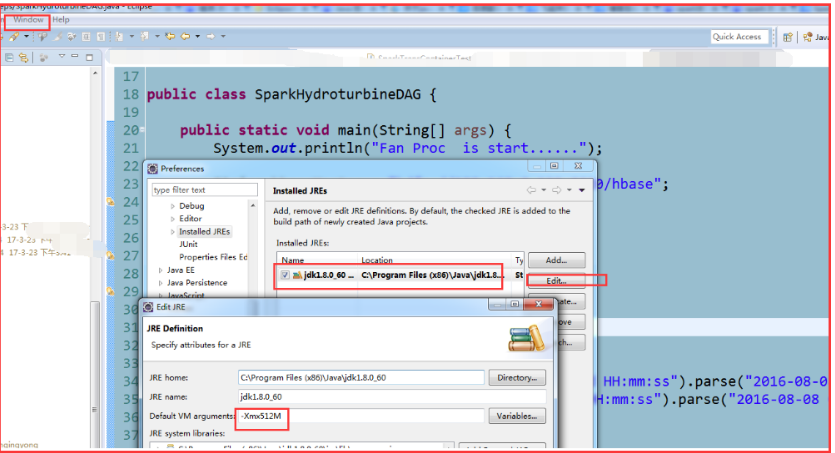

Go to Window > Preferences > Java > Installed JREs, select a JRE, click Edit, and add the following to Default VM arguments: -Xmx512M

OK!!!
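To confirm that the -Xmx setting actually reached the JVM, you can print `Runtime.getRuntime.maxMemory` from a small program launched with the same JRE; this is the value Spark treats as system memory when spark.testing.memory is not set. A minimal sketch, where `HeapCheck` and `isLargeEnough` are hypothetical names; note that `maxMemory` may report somewhat less than the -Xmx value.

```scala
// Hypothetical helper: compare the JVM's max heap with Spark's minimum of 1.5 x 300 MB.
object HeapCheck {
  val MinSystemMemory: Long = 300L * 1024 * 1024 * 3 / 2 // 471859200 bytes

  // Spark throws only when systemMemory < minSystemMemory, so equality passes
  def isLargeEnough(maxHeap: Long): Boolean = maxHeap >= MinSystemMemory

  def main(args: Array[String]): Unit = {
    val maxHeap = Runtime.getRuntime.maxMemory
    println(s"JVM max heap: $maxHeap bytes, large enough for Spark: ${isLargeEnough(maxHeap)}")
  }
}
```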