1 Basic Preparation

- Deploy a basic cluster by following the *002. Ceph Installation and Deployment* document (《002.Ceph安裝部署》);

- On the deploy node, add a hosts entry that resolves the new node's hostname to its IP:

```
[root@deploy ~]# echo "172.24.8.75 cephclient" >> /etc/hosts
```

- On the new node, switch yum to domestic (Aliyun) mirrors:

```
[root@cephclient ~]# yum -y update
[root@cephclient ~]# rm /etc/yum.repos.d/* -rf
[root@cephclient ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
[root@cephclient ~]# yum -y install epel-release
[root@cephclient ~]# mv /etc/yum.repos.d/epel.repo /etc/yum.repos.d/epel.repo.backup
[root@cephclient ~]# mv /etc/yum.repos.d/epel-testing.repo /etc/yum.repos.d/epel-testing.repo.backup
[root@cephclient ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
```
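Both preparation steps above are one-shot commands: re-running the echo adds a duplicate hosts line, and rm -rf discards the stock repo files for good. A minimal sketch of re-runnable variants follows. `add_host_entry` and `backup_repos` are hypothetical helper names (not part of Ceph or CentOS), and the file paths are parameters so they can be tried on scratch copies first. `backup_repos` reuses the same trick the yum step applies to epel.repo: renaming a file so it no longer ends in `.repo`, which yum then ignores.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: re-runnable versions of the two preparation steps.

# Append "IP NAME" to a hosts file only if NAME is not already listed there.
add_host_entry() {
    local ip=$1 name=$2 hosts_file=${3:-/etc/hosts}
    if [ -f "$hosts_file" ] && awk -v n="$name" '
            !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        echo "$name already present in $hosts_file"
        return 0
    fi
    echo "$ip $name" >> "$hosts_file"
}

# Rename every *.repo file to *.repo.backup (yum only reads files ending in .repo)
# instead of deleting them, so the originals can be restored later.
backup_repos() {
    local repo_dir=${1:-/etc/yum.repos.d} f
    for f in "$repo_dir"/*.repo; do
        [ -e "$f" ] || continue    # no match: the glob is left unexpanded
        mv "$f" "$f.backup"
    done
}

# Usage on the real nodes (as root):
#   add_host_entry 172.24.8.75 cephclient    # on deploy
#   backup_repos                              # on cephclient, before the wget steps
```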

2 Block Device

2.1 Add a Regular User

```
[root@cephclient ~]# useradd -d /home/cephuser -m cephuser
[root@cephclient ~]# echo "cephuser" | passwd --stdin cephuser        # create the cephuser user on the cephclient node
[root@cephclient ~]# echo "cephuser ALL = (root) NOPASSWD:ALL" > /etc/sudoers.d/cephuser
[root@cephclient ~]# chmod 0440 /etc/sudoers.d/cephuser
[root@deploy ~]# su - manager
[manager@deploy ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub cephuser@cephclient
```

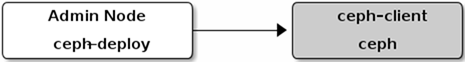
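The echo-plus-chmod pair above writes the sudoers rule directly, and a syntax error in any file under /etc/sudoers.d can stop sudo from working at all. A minimal sketch of a safer variant: write the rule to a temporary name containing a '.', which sudo skips when reading /etc/sudoers.d, set mode 0440, check it with `visudo -c -f` when visudo is installed, then move it into place. `write_sudoers_dropin` is a hypothetical helper, and the directory is a parameter so it can be exercised outside /etc.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install "USER ALL = (root) NOPASSWD:ALL" as a 0440 drop-in.
write_sudoers_dropin() {
    local user=$1 dir=${2:-/etc/sudoers.d} tmp
    # The temp name contains a '.', so sudo ignores it while it is incomplete.
    tmp=$(mktemp "$dir/.$user.XXXXXX") || return 1
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$tmp"
    chmod 0440 "$tmp"
    # Syntax-check the rule when visudo is available; discard it on failure.
    if command -v visudo >/dev/null 2>&1 && ! visudo -c -f "$tmp" >/dev/null; then
        rm -f "$tmp"
        return 1
    fi
    mv "$tmp" "$dir/$user"
}

# Usage on cephclient (as root): write_sudoers_dropin cephuser
```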

2.2 Install ceph-client

```
[root@deploy ~]# su - manager
[manager@deploy ~]$ cd my-cluster/
[manager@deploy my-cluster]$ vi ~/.ssh/config
Host node1
   Hostname node1
   User cephuser
Host node2
   Hostname node2
   User cephuser
Host node3
   Hostname node3
   User cephuser
# new entry for the cephclient node
Host cephclient
   Hostname cephclient
   User cephuser
[manager@deploy my-cluster]$ ceph-deploy install cephclient        # install Ceph on cephclient
```

Note: if ceph-deploy cannot download the packages during installation, point it at a domestic mirror for ceph.repo when deploying:

```
ceph-deploy install cephclient --repo-url=https://mirrors.aliyun.com/ceph/rpm-mimic/el7/ --gpg-url=https://mirrors.aliyun.com/ceph/keys/release.asc
```

```
[manager@deploy my-cluster]$ ceph-deploy admin cephclient
```

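Tip: ceph-deploy admin copies the cluster configuration file and the admin key to cephclient, so the node can later be managed with the ceph CLI without supplying the key on every command. ceph-deploy places the keyring in /etc/ceph; make sure this keyring file is readable (e.g. sudo chmod +r /etc/ceph/ceph.client.admin.keyring).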
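The ~/.ssh/config entry above is added by hand in vi. A minimal sketch that appends a Host block only when the alias is not defined yet, so the step can be repeated for further client nodes without duplicates. `add_ssh_host` is a hypothetical helper; ssh_config keywords are case-insensitive, hence the tolower() comparison on the keyword.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "Host ALIAS / Hostname NAME / User USER" to an ssh config.
add_ssh_host() {
    local host_alias=$1 host_name=$2 user=$3 config=${4:-$HOME/.ssh/config}
    if [ -f "$config" ] && awk -v a="$host_alias" '
            tolower($1) == "host" { for (i = 2; i <= NF; i++) if ($i == a) found = 1 }
            END { exit !found }' "$config"; then
        echo "Host $host_alias already defined in $config"
        return 0
    fi
    printf 'Host %s\n    Hostname %s\n    User %s\n' "$host_alias" "$host_name" "$user" >> "$config"
    chmod 600 "$config"    # ssh rejects config files writable by other users
}

# Usage as manager on deploy: add_ssh_host cephclient cephclient cephuser
```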

2.3 Create a Pool

```
[manager@deploy my-cluster]$ ssh node1 sudo ceph osd pool create mytestpool 64        # 64 = pg_num (number of placement groups)
```
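The 64 passed to ceph osd pool create is the pool's pg_num. The placement-group section of the Ceph documentation from this period gives a rule of thumb: total PGs ≈ (number of OSDs × 100) / replica count, rounded up to a power of two, as a budget for all pools in the cluster combined. A sketch of that arithmetic; `suggest_pg_num` is a hypothetical helper, and the OSD count and replica size are inputs you must supply (this section does not state them).

```shell
#!/usr/bin/env bash
# Hypothetical helper: smallest power of two >= (osds * 100) / size,
# computed as pg * size >= osds * 100 to avoid integer-division rounding.
suggest_pg_num() {
    local osds=$1 size=$2 pg=1
    while [ $(( pg * size )) -lt $(( osds * 100 )) ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}
```

For example, an assumed cluster of 3 OSDs with 3 replicas gives a cluster-wide budget of 128, so 64 for a single test pool stays inside it.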

2.4 Initialize the Pool

```
[root@cephclient ~]# ceph osd lspools                  # list pools
[root@cephclient ~]# rbd pool init mytestpool          # prepare the new pool for use by RBD
```

2.5 Create a Block Device Image

```
[root@cephclient ~]# rbd create mytestpool/mytestimages --size 4096 --image-feature layering        # 4096 MB = 4 GiB; only layering, so the kernel rbd client can map it
```

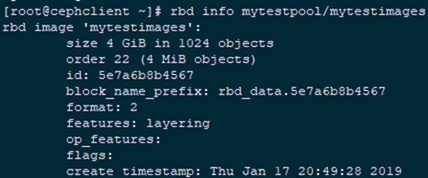
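rbd create treats a bare --size number as megabytes, which is why 4096 above yields a 4 GiB image. A small sketch that turns a human-readable size into that MB number; `to_rbd_size_mb` is a hypothetical helper and only handles the M, G and T suffixes (binary multiples of 1024).

```shell
#!/usr/bin/env bash
# Hypothetical helper: "512M" -> 512, "4G" -> 4096, "1T" -> 1048576, "4096" -> 4096.
to_rbd_size_mb() {
    local value=${1%[MmGgTt]}
    local unit=${1#"$value"}
    case "$value" in
        ''|*[!0-9]*) echo "invalid size: $1" >&2; return 1 ;;
    esac
    case "$unit" in
        ""|M|m) echo "$value" ;;
        G|g)    echo $(( value * 1024 )) ;;
        T|t)    echo $(( value * 1024 * 1024 )) ;;
    esac
}

# Usage: rbd create mytestpool/mytestimages --size "$(to_rbd_size_mb 4G)" --image-feature layering
```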

2.6 Verify

```
[root@cephclient ~]# rbd ls mytestpool
mytestimages
[root@cephclient ~]# rbd showmapped        # empty until the image is mapped in 2.7; the output below is after mapping
id pool       image        snap device
0  mytestpool mytestimages -    /dev/rbd0
[root@cephclient ~]# rbd info mytestpool/mytestimages
```
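To script the check, the rbd showmapped output above can be filtered for one pool/image pair. A minimal sketch, assuming the five-column layout shown (id pool image snap device); other Ceph releases may print different columns. `rbd_device_for` is a hypothetical helper that reads the output on stdin and fails when the image is not mapped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the mapped device for POOL/IMAGE from "rbd showmapped".
rbd_device_for() {
    local pool=$1 image=$2
    awk -v p="$pool" -v i="$image" '
        NR > 1 && $2 == p && $3 == i { print $NF; found = 1 }
        END { exit !found }'
}

# Usage on cephclient: rbd showmapped | rbd_device_for mytestpool mytestimages
```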

2.7 Map the Image to a Block Device

```
[root@cephclient ~]# rbd map mytestpool/mytestimages --name client.admin
/dev/rbd0
```
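rbd map prints the kernel device (/dev/rbd0 here), while the /dev/rbd/mytestpool/mytestimages path used by mkfs, mount and fstab below is a symlink created by a udev rule shortly afterwards. In a script, a short wait avoids racing udev; udevadm settle is another option. `wait_for_path` is a hypothetical helper that polls once per second up to a timeout and then prints the resolved target.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait up to TIMEOUT seconds for PATH to appear, then resolve it.
wait_for_path() {
    local path=$1 timeout=${2:-10} waited=0
    while [ ! -e "$path" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            echo "timed out waiting for $path" >&2
            return 1
        fi
        sleep 1
        waited=$(( waited + 1 ))
    done
    readlink -f "$path"
}

# Usage on cephclient after rbd map:
#   wait_for_path /dev/rbd/mytestpool/mytestimages
```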

2.8 Format the Device

```
[root@cephclient ~]# mkfs.ext4 /dev/rbd/mytestpool/mytestimages
[root@cephclient ~]# lsblk
```

2.9 Mount and Test

```
[root@cephclient ~]# sudo mkdir /mnt/ceph-block-device
[root@cephclient ~]# sudo mount /dev/rbd/mytestpool/mytestimages /mnt/ceph-block-device/
[root@cephclient ~]# cd /mnt/ceph-block-device/
[root@cephclient ceph-block-device]# echo 'This is my test file!' >> test.txt
[root@cephclient ceph-block-device]# ls
lost+found  test.txt
```
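The manual echo and ls above can be wrapped into a repeatable check. A minimal sketch: `check_mount_rw` (a hypothetical helper) writes a marker file, calls sync, reads it back, compares, and removes it. The read-back may be served from the page cache, so this only shows that the mount accepts writes; it does not prove the data reached the OSDs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write, sync, read back and delete a marker file in DIR.
check_mount_rw() {
    local dir=$1
    local file="$dir/.rw-check.$$"
    local marker="rw-check $(date +%s)"
    echo "$marker" > "$file" || return 1
    sync
    if [ "$(cat "$file")" = "$marker" ]; then
        rm -f "$file"
        echo "OK: $dir is writable"
    else
        rm -f "$file"
        echo "FAILED: read-back mismatch in $dir" >&2
        return 1
    fi
}

# Usage on cephclient: check_mount_rw /mnt/ceph-block-device
```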

2.10 Automatic Mapping

```
[root@cephclient ~]# vim /etc/ceph/rbdmap
# RbdDevice             Parameters
#poolname/imagename     id=client,keyring=/etc/ceph/ceph.client.keyring
mytestpool/mytestimages id=admin,keyring=/etc/ceph/ceph.client.admin.keyring
```
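Each active line in /etc/ceph/rbdmap is a pool/image followed by whitespace and its parameters, as in the file above. A sketch that adds such a line only if the image is not already listed, ignoring the commented template lines; `add_rbdmap_entry` is a hypothetical helper and the file path is a parameter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "pool/image<TAB>params" to an rbdmap file if missing.
add_rbdmap_entry() {
    local spec=$1 params=$2 file=${3:-/etc/ceph/rbdmap}
    if [ -f "$file" ] && awk -v s="$spec" '
            !/^[ \t]*#/ && $1 == s { found = 1 }
            END { exit !found }' "$file"; then
        echo "$spec already in $file"
        return 0
    fi
    printf '%s\t%s\n' "$spec" "$params" >> "$file"
}

# Usage on cephclient:
#   add_rbdmap_entry mytestpool/mytestimages id=admin,keyring=/etc/ceph/ceph.client.admin.keyring
```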

2.11 Mount at Boot

```
[root@cephclient ~]# vi /etc/fstab
#……
/dev/rbd/mytestpool/mytestimages   /mnt/ceph-block-device   ext4   defaults,noatime,_netdev   0 0
```

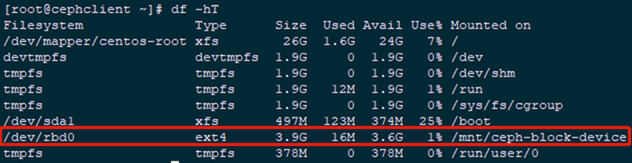
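The _netdev option in the fstab line above marks the filesystem as network-dependent, so systemd orders the mount after the network is up; without it, boot can try to mount the RBD path before it exists. A sketch that refuses to add an entry lacking _netdev and skips mount points that already have one; `add_fstab_entry` is a hypothetical helper and the fstab path is a parameter.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "DEVICE MOUNTPOINT FSTYPE OPTIONS 0 0" to fstab.
add_fstab_entry() {
    local device=$1 mountpoint=$2 fstype=$3 options=$4 fstab=${5:-/etc/fstab}
    case ",$options," in
        *,_netdev,*) ;;
        *) echo "options must include _netdev: $options" >&2; return 1 ;;
    esac
    if [ -f "$fstab" ] && awk -v m="$mountpoint" '
            !/^[ \t]*#/ && $2 == m { found = 1 }
            END { exit !found }' "$fstab"; then
        echo "$mountpoint already has an entry in $fstab"
        return 0
    fi
    printf '%s %s %s %s 0 0\n' "$device" "$mountpoint" "$fstype" "$options" >> "$fstab"
}

# Usage on cephclient:
#   add_fstab_entry /dev/rbd/mytestpool/mytestimages /mnt/ceph-block-device ext4 defaults,noatime,_netdev
```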

2.12 Start rbdmap at Boot

```
[root@cephclient ~]# systemctl enable rbdmap.service
[root@cephclient ~]# df -hT        # verify the mount
```

Tip: if the image is still not mounted automatically after a reboot and rbdmap is also failing, check the unit file as follows:

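```
[root@cephclient ~]# vi /usr/lib/systemd/system/rbdmap.service
[Unit]
Description=Map RBD devices
#……
[Install]
WantedBy=multi-user.target        # add this line if it is missing; WantedBy= is only valid in the [Install] section
[root@cephclient ~]# systemctl daemon-reload
[root@cephclient ~]# systemctl enable rbdmap.service
```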
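systemctl enable creates the boot-time link from the WantedBy= setting, and systemd honours WantedBy= only inside the [Install] section; placed under [Unit], it is reported as an unknown key and ignored. A minimal sketch that checks a unit file for the right placement; `has_install_wantedby` is a hypothetical helper and the target name defaults to multi-user.target.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if TARGET is listed in WantedBy= under [Install].
has_install_wantedby() {
    local unit_file=$1 target=${2:-multi-user.target}
    awk -v t="$target" '
        /^\[.*\]$/ { section = $0; next }
        section == "[Install]" && /^WantedBy=/ {
            n = split(substr($0, 10), targets, /[ \t]+/)   # text after "WantedBy="
            for (i = 1; i <= n; i++) if (targets[i] == t) found = 1
        }
        END { exit !found }' "$unit_file"
}

# Usage on cephclient:
#   has_install_wantedby /usr/lib/systemd/system/rbdmap.service || echo "WantedBy= missing from [Install]"
```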