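With Scrapy you can create a single project that contains several spiders, give each spider its own items and pipelines, and start all of them at once from a single launch script.

The code for this article has been uploaded to GitHub; the link is at the end of the article.

I. Creating a Scrapy project with multiple spiders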

scrapy startproject mymultispider

cd mymultispider

scrapy genspider myspd1 sina.com.cn

scrapy genspider myspd2 sina.com.cn

scrapy genspider myspd3 sina.com.cn

II. How to run the spiders

1. To make the output easy to follow, have each spider print an identifying message

    import scrapy


    class Myspd1Spider(scrapy.Spider):
        name = 'myspd1'
        allowed_domains = ['sina.com.cn']
        start_urls = ['http://sina.com.cn/']

        def parse(self, response):
            print('myspd1')

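The other spiders, myspd2 and myspd3, print their own names in the same way.

2. There are two ways to run several spiders together. The first is simpler: create a crawl.py file in the project directory with the following contents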

    from scrapy.crawler import CrawlerProcess
    from scrapy.utils.project import get_project_settings

    process = CrawlerProcess(get_project_settings())

    # 'myspd1' is the spider name
    process.crawl('myspd1')
    process.crawl('myspd2')
    process.crawl('myspd3')

    # Blocks until all spiders have finished
    process.start()

To keep the output readable, you can limit log output in settings.py

LOG_LEVEL = 'ERROR'

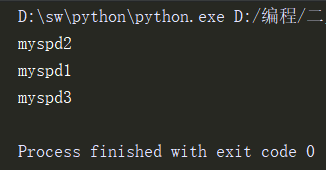

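Right-click this file and run it; the output is as follows

3. The second way is to modify the source of the crawl command, which can be found in the official GitHub repository: https://github.com/scrapy/scrapy/blob/master/scrapy/commands/crawl.py

In the directory that contains the spiders directory (that is, at the same level as spiders), create a mycmd directory, and inside it create mycrawl.py. Copy the crawl source into that file and change its run method to the following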

    def run(self, args, opts):
        # Get the names of all spiders in the project
        spd_loader_list = self.crawler_process.spider_loader.list()
        # Schedule each spider; args is only used if that list is empty
        for spname in spd_loader_list or args:
            self.crawler_process.crawl(spname, **opts.spargs)
            print("Starting spider: " + spname)
        self.crawler_process.start()
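Note that `spd_loader_list or args` uses the command-line arguments only when the project has no spiders at all, so names passed on the command line are effectively ignored. If you would rather let explicit names take precedence, the selection could be factored out along these lines. `pick_spiders` is a hypothetical helper introduced here for illustration; it is not part of Scrapy or of the original crawl command.

```python
def pick_spiders(available, requested):
    """Return the spider names to run.

    If names were requested, return those (after checking that they exist);
    otherwise fall back to every available spider.
    """
    if not requested:
        return list(available)
    unknown = [name for name in requested if name not in available]
    if unknown:
        raise ValueError("unknown spider(s): " + ", ".join(unknown))
    return list(requested)


# Possible use inside run(self, args, opts):
#   names = pick_spiders(self.crawler_process.spider_loader.list(), args)
#   for spname in names:
#       self.crawler_process.crawl(spname, **opts.spargs)
#   self.crawler_process.start()
```

With this change, a command such as `scrapy mycrawl myspd1 myspd3` would run just those two spiders, while `scrapy mycrawl` alone would still run all of them.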

In the same directory as that file, create an initialization file named __init__.py
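Scrapy locates project-level commands through the `COMMANDS_MODULE` setting, so a line like the one below is presumably also needed in settings.py before `scrapy mycrawl` is recognized. The module path assumes the mycmd directory sits inside the mymultispider package next to spiders, as described above.

```python
# settings.py
# Package that Scrapy searches for custom commands. Each module in it
# becomes a command named after the file, so mycrawl.py -> `scrapy mycrawl`.
COMMANDS_MODULE = 'mymultispider.mycmd'
```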

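When finished, the directory structure looks like this (other files generated by scrapy startproject are omitted)

    mymultispider/
    ├── scrapy.cfg
    └── mymultispider/
        ├── __init__.py
        ├── items.py
        ├── pipelines.py
        ├── settings.py
        ├── mycmd/
        │   ├── __init__.py
        │   └── mycrawl.py
        └── spiders/
            ├── __init__.py
            ├── myspd1.py
            ├── myspd2.py
            └── myspd3.py

Start the spiders with the command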

scrapy mycrawl --nolog

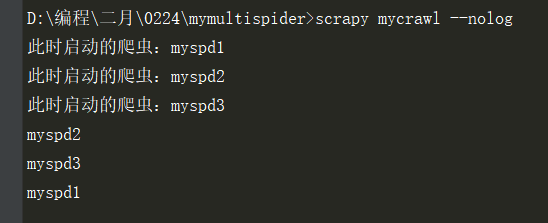

The output is as follows:

III. Assigning items

1. This part is straightforward: define the corresponding classes in items.py and import them in each spider

items.py

    import scrapy


    class MymultispiderItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        pass


    class Myspd1spiderItem(scrapy.Item):
        name = scrapy.Field()


    class Myspd2spiderItem(scrapy.Item):
        name = scrapy.Field()


    class Myspd3spiderItem(scrapy.Item):
        name = scrapy.Field()

In the spider, for example myspd1

    # -*- coding: utf-8 -*-
    import scrapy
    from mymultispider.items import Myspd1spiderItem


    class Myspd1Spider(scrapy.Spider):
        name = 'myspd1'
        allowed_domains = ['sina.com.cn']
        start_urls = ['http://sina.com.cn/']

        def parse(self, response):
            print('myspd1')
            item = Myspd1spiderItem()
            item['name'] = 'pipelines for myspd1'
            yield item

IV. Assigning pipelines

1. There are again two approaches. Approach 1: define several pipeline classes:

In pipelines.py:

    class Myspd1spiderPipeline(object):
        def process_item(self, item, spider):
            print(item['name'])
            return item


    class Myspd2spiderPipeline(object):
        def process_item(self, item, spider):
            print(item['name'])
            return item


    class Myspd3spiderPipeline(object):
        def process_item(self, item, spider):
            print(item['name'])
            return item

1.1 Enable the pipelines in settings.py
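The three classes above are identical apart from their names. One way to avoid the repetition, as long as the pipelines keep sharing their behaviour, is a small base class. This is only a sketch: `NamePrintingPipeline` is a hypothetical name introduced for the example, while the three subclass names match the article so that the `ITEM_PIPELINES` paths stay valid.

```python
class NamePrintingPipeline:
    """Shared behaviour: print the item's 'name' field and pass the item on."""

    def process_item(self, item, spider):
        print(item['name'])
        # Returning the item hands it to the next pipeline, if any
        return item


class Myspd1spiderPipeline(NamePrintingPipeline):
    pass


class Myspd2spiderPipeline(NamePrintingPipeline):
    pass


class Myspd3spiderPipeline(NamePrintingPipeline):
    pass
```

Each subclass can later override `process_item` once a spider needs its own handling.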

    ITEM_PIPELINES = {
        # 'mymultispider.pipelines.MymultispiderPipeline': 300,
        'mymultispider.pipelines.Myspd1spiderPipeline': 300,
        'mymultispider.pipelines.Myspd2spiderPipeline': 300,
        'mymultispider.pipelines.Myspd3spiderPipeline': 300,
    }

1.2 Set the pipeline in the spider, for example myspd1

    # -*- coding: utf-8 -*-
    import scrapy
    from mymultispider.items import Myspd1spiderItem


    class Myspd1Spider(scrapy.Spider):
        name = 'myspd1'
        allowed_domains = ['sina.com.cn']
        start_urls = ['http://sina.com.cn/']
        custom_settings = {
            'ITEM_PIPELINES': {'mymultispider.pipelines.Myspd1spiderPipeline': 300},
        }

        def parse(self, response):
            print('myspd1')
            item = Myspd1spiderItem()
            item['name'] = 'pipelines for myspd1'
            yield item

The code that assigns the pipeline is

    custom_settings = {
        'ITEM_PIPELINES': {'mymultispider.pipelines.Myspd1spiderPipeline': 300},
    }
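The reason this works, as far as I understand it, is that values in a spider's `custom_settings` take precedence over settings.py for that spider, and the `ITEM_PIPELINES` value is replaced as a whole rather than merged entry by entry. The following is only a simplified model of that behaviour using plain dicts, not Scrapy's actual settings machinery; `effective_settings` is a hypothetical helper written for this illustration.

```python
def effective_settings(project_settings, custom_settings=None):
    """Model: spider-level settings override project-level ones, key by key."""
    merged = dict(project_settings)        # copy, so the project dict is untouched
    merged.update(custom_settings or {})   # each spider-level value wins outright
    return merged


project = {
    'LOG_LEVEL': 'ERROR',
    'ITEM_PIPELINES': {
        'mymultispider.pipelines.Myspd1spiderPipeline': 300,
        'mymultispider.pipelines.Myspd2spiderPipeline': 300,
        'mymultispider.pipelines.Myspd3spiderPipeline': 300,
    },
}
custom = {
    'ITEM_PIPELINES': {'mymultispider.pipelines.Myspd1spiderPipeline': 300},
}

result = effective_settings(project, custom)
# Only the spider's own pipeline remains; LOG_LEVEL is inherited unchanged
print(result['ITEM_PIPELINES'])
print(result['LOG_LEVEL'])
```

In this model myspd1 ends up with a single pipeline even though settings.py enables all three, which is the effect the article relies on.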

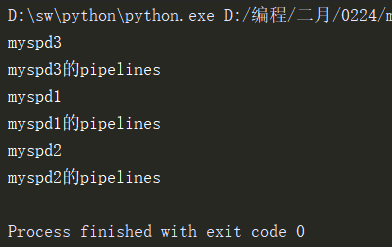

1.3 Run crawl.py; the result is as follows

2. Approach 2: check inside pipelines.py which spider produced the item

2.1 In pipelines.py

    class MymultispiderPipeline(object):
        def process_item(self, item, spider):
            if spider.name == 'myspd1':
                print('pipelines for myspd1')
            elif spider.name == 'myspd2':
                print('pipelines for myspd2')
            elif spider.name == 'myspd3':
                print('pipelines for myspd3')
            return item

2.2 In settings.py, enable only the MymultispiderPipeline pipeline
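As the number of spiders grows, the if/elif chain can be swapped for a lookup table keyed by spider name. This is a sketch of that alternative rather than the article's method; the `handlers` attribute and the `handle_*` method names are hypothetical, and the `SimpleNamespace` object merely stands in for a real spider so the example runs without Scrapy.

```python
from types import SimpleNamespace


class MymultispiderPipeline:
    def __init__(self):
        # Map each spider name to the method that handles its items
        self.handlers = {
            'myspd1': self.handle_myspd1,
            'myspd2': self.handle_myspd2,
            'myspd3': self.handle_myspd3,
        }

    def process_item(self, item, spider):
        handler = self.handlers.get(spider.name)
        if handler is not None:  # items from unknown spiders pass through untouched
            handler(item)
        return item

    def handle_myspd1(self, item):
        print('pipelines for myspd1')

    def handle_myspd2(self, item):
        print('pipelines for myspd2')

    def handle_myspd3(self, item):
        print('pipelines for myspd3')


# Stand-in for a spider: the pipeline only reads its .name attribute
fake_spider = SimpleNamespace(name='myspd2')
returned = MymultispiderPipeline().process_item({'name': 'demo'}, fake_spider)
```

Adding a fourth spider then means adding one dictionary entry and one method, without touching `process_item`.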

    ITEM_PIPELINES = {
        'mymultispider.pipelines.MymultispiderPipeline': 300,
        # 'mymultispider.pipelines.Myspd1spiderPipeline': 300,
        # 'mymultispider.pipelines.Myspd2spiderPipeline': 300,
        # 'mymultispider.pipelines.Myspd3spiderPipeline': 300,
    }

2.3 In the spider, comment out the code that assigns a specific pipeline

    # -*- coding: utf-8 -*-
    import scrapy
    from mymultispider.items import Myspd1spiderItem


    class Myspd1Spider(scrapy.Spider):
        name = 'myspd1'
        allowed_domains = ['sina.com.cn']
        start_urls = ['http://sina.com.cn/']
        # custom_settings = {
        #     'ITEM_PIPELINES': {'mymultispider.pipelines.Myspd1spiderPipeline': 300},
        # }

        def parse(self, response):
            print('myspd1')
            item = Myspd1spiderItem()
            item['name'] = 'pipelines for myspd1'
            yield item

2.4 Run crawl.py; the result is as follows

Code on GitHub: https://github.com/terroristhouse/crawler
