
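1 MongoDB Sharding (High Availability)

1.1 Prerequisites

- Three virtual machines
- MongoDB installed on each of them
- The virtual machines can reach one another over the network
- The virtual machines and the host can reach one another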

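1.2 Installing MongoDB

Install MongoDB on Ubuntu 16.04 by following the installation steps on the official MongoDB website.

The installation fails with this error:

    E: The method driver /usr/lib/apt/methods/https could not be found.
    N: Is the package apt-transport-https installed?

The message says that apt-transport-https is missing, so install it:

    sudo apt-get install -y apt-transport-https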

1.3 Starting MongoDB

    sudo service mongod start

Check the log to confirm that it started successfully:

    sudo cat /var/log/mongodb/mongod.log

    2019-04-19T15:40:52.808+0800 I NETWORK [initandlisten] waiting for connections on port 27017

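2 MongoDB Sharding

Sharding is the process of splitting data and spreading it across different machines. MongoDB supports automatic sharding, which hides the database architecture from the application: to the application it looks as if it is always talking to a single standalone MongoDB server. MongoDB itself handles how data is distributed across the shards, which also makes it easier to add and remove shards. This is similar to splitting data across multiple databases (分庫) in MySQL.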

2.1 基礎組件

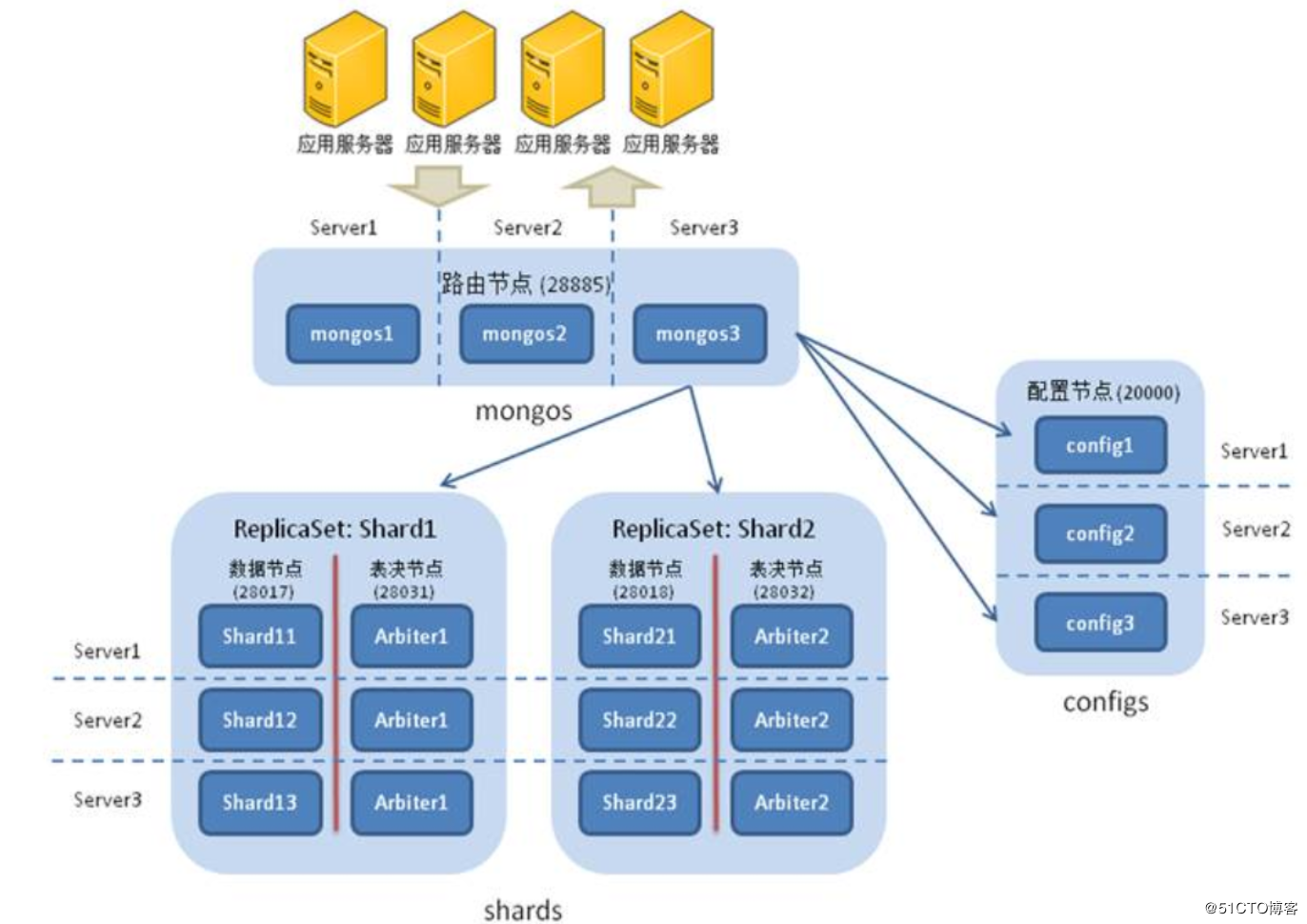

其利用到了四個組件:mongos,config server,shard,replica set

mongos:資料庫集群請求的入口,所有請求需要經過mongos進行協調,無需在應用層面利用程式來進行路由選擇,mongos其自身是一個請求分發中心,負責將外部的請求分發到對應的shard伺服器上,mongos作為統一的請求入口,為防止mongos單節點故障,一般需要對其做HA。可以理解為在微服務架構中的路由Eureka。

config server:配置伺服器,存儲所有資料庫元數據(分片,路由)的配置。mongos本身沒有物理存儲分片伺服器和數據路由信息,只是存緩存在記憶體中來讀取數據,mongos在第一次啟動或後期重啟時候,就會從config server中載入配置信息,如果配置伺服器信息發生更新會通知所有的mongos來更新自己的狀態,從而保證準確的請求路由,生產環境中通常也需要多個config server,防止配置文件存在單節點丟失問題。理解為 配置中心

shard:在傳統意義上來講,如果存在海量數據,單台伺服器存儲1T壓力非常大,無論考慮資料庫的硬碟,網路IO,又有CPU,記憶體的瓶頸,如果多台進行分攤1T的數據,到每臺上就是可估量的較小數據,在mongodb集群只要設置好分片規則,通過mongos操作資料庫,就可以自動把對應的操作請求轉發到對應的後端分片伺服器上。真正的存儲

replica set:在總體mongodb集群架構中,對應的分片節點,如果單台機器下線,對應整個集群的數據就會出現部分缺失,這是不能發生的,因此對於shard節點需要replica set來保證數據的可靠性,生產環境通常為2個副本+1個仲裁。 副本保持數據的HA
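To make the split between mongos and the config server concrete, here is a minimal sketch in plain JavaScript (runnable in Node.js, no MongoDB required). The class names `ConfigServer` and `Router`, the chunk ranges, and the version counter are all invented for illustration; real chunk metadata and cache refresh in MongoDB are considerably more involved.

```javascript
// Toy model: the config server owns the chunk table, the router (mongos) only caches it.
class ConfigServer {
  constructor(chunks) {
    this.version = 1;
    this.chunks = chunks; // [{ min, max, shard }], each range is [min, max)
  }
  moveChunk(index, shard) {
    this.chunks[index] = { ...this.chunks[index], shard };
    this.version += 1; // metadata changed, so cached copies are now stale
  }
}

class Router {
  constructor(configServer) {
    this.configServer = configServer;
    this.refresh(); // load the metadata once at startup
  }
  refresh() {
    this.cachedVersion = this.configServer.version;
    this.cachedChunks = this.configServer.chunks.map((c) => ({ ...c }));
  }
  route(key) {
    // Reload the cache if the config server has moved on.
    if (this.cachedVersion !== this.configServer.version) this.refresh();
    const chunk = this.cachedChunks.find((c) => key >= c.min && key < c.max);
    if (!chunk) throw new Error(`no chunk covers key ${key}`);
    return chunk.shard;
  }
}

const config = new ConfigServer([
  { min: 0, max: 100, shard: "shard1" },
  { min: 100, max: 200, shard: "shard2" },
  { min: 200, max: 300, shard: "shard3" },
]);
const mongos = new Router(config);
console.log(mongos.route(42));  // shard1
console.log(mongos.route(150)); // shard2
config.moveChunk(0, "shard3");
console.log(mongos.route(42));  // shard3
```

The router never writes metadata; it only answers "which shard owns this key" from its cache, which is why several routers can run side by side.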

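2.2 Architecture diagram

3 Installation and deployment

To save servers, several instances run on each machine: three mongos, three config servers, and three shards. Every server hosts a member of each shard, but in a different role (so that data is spread evenly later on, the three shards take different roles on the different servers). Within each shard a replica set provides high availability. The hosts and ports are as follows:

| Hostname | IP address | mongos | config server | shard |
|---|---|---|---|---|
| mongodb-1 | 192.168.90.130 | port 20000 | port 21000 | primary: 22001, secondary: 22002, arbiter: 22003 |
| mongodb-2 | 192.168.90.131 | port 20000 | port 21000 | primary: 22002, secondary: 22001, arbiter: 22003 |
| mongodb-3 | 192.168.90.132 | port 20000 | port 21000 | primary: 22003, secondary: 22001, arbiter: 22002 |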

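3.1 Deploying the config server cluster

3.1.1 Create the directories and files

    mkdir -p /usr/local/mongo/mongoconf/{data,log,conf}
    touch /usr/local/mongo/mongoconf/conf/mongoconf.conf
    touch /usr/local/mongo/mongoconf/log/mongoconf.log

3.1.2 Fill in the configuration file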

    # mongod.conf

    # for documentation of all options, see:
    #   http://docs.mongodb.org/manual/reference/configuration-options/

    # Where and how to store data.
    storage:
      dbPath: /usr/local/mongo/mongoconf/data
      journal:
        enabled: true
        commitIntervalMs: 200
    #  engine:
    #  mmapv1:
    #  wiredTiger:

    # where to write logging data.
    systemLog:
      destination: file
      logAppend: true
      path: /usr/local/mongo/mongoconf/log/mongoconf.log

    # network interfaces
    net:
      port: 21000
      bindIp: 0.0.0.0
      maxIncomingConnections: 1000

    # how the process runs
    processManagement:
      fork: true

    #security:

    #operationProfiling:

    #replication:
    replication:
      replSetName: replconf

    #sharding:
    sharding:
      clusterRole: configsvr

    ## Enterprise-Only Options:

    #auditLog:

    #snmp:

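For the config cluster, the data path, the log path, and the sharding role (configsvr) must be set.

3.1.3 Starting the config cluster

Once the files are in place on all three machines, start the config server on each of them:

    mongod -f /usr/local/mongo/mongoconf/conf/mongoconf.conf

3.1.4 Initializing the replica set

Log in to any one of the machines (130 in this example) and initialize the replica set:

    mongo 192.168.90.130:21000

    config = {
      _id: "replconf",
      configsvr: true,  // marks this as a config server replica set
      members: [
        { _id: 0, host: "192.168.90.130:21000" },
        { _id: 1, host: "192.168.90.131:21000" },
        { _id: 2, host: "192.168.90.132:21000" }
      ]
    }
    rs.initiate(config)

    // check the replica set status
    rs.status()

The result looks like this: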

    {
      "set" : "replconf",
      "date" : ISODate("2019-04-21T06:38:50.164Z"),
      "myState" : 2,
      "term" : NumberLong(3),
      "syncingTo" : "192.168.90.131:21000",
      "syncSourceHost" : "192.168.90.131:21000",
      ...
      "members" : [
        {
          "_id" : 0,
          "name" : "192.168.90.130:21000",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 305,
          "optime" : {
            "ts" : Timestamp(1555828718, 1),
            "t" : NumberLong(3)
          },
          "optimeDurable" : {
            "ts" : Timestamp(1555828718, 1),
            "t" : NumberLong(3)
          },
          "optimeDate" : ISODate("2019-04-21T06:38:38Z"),
          "optimeDurableDate" : ISODate("2019-04-21T06:38:38Z"),
          "lastHeartbeat" : ISODate("2019-04-21T06:38:49.409Z"),
          "lastHeartbeatRecv" : ISODate("2019-04-21T06:38:49.408Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "192.168.90.131:21000",
          "syncSourceHost" : "192.168.90.131:21000",
          "syncSourceId" : 1,
          "infoMessage" : "",
          "configVersion" : 1
        },
        {
          "_id" : 1,
          "name" : "192.168.90.131:21000",
          "health" : 1,
          "state" : 1,
          "stateStr" : "PRIMARY",
          "uptime" : 307,
          "optime" : {
            "ts" : Timestamp(1555828718, 1),
            "t" : NumberLong(3)
          },
          "optimeDurable" : {
            "ts" : Timestamp(1555828718, 1),
            "t" : NumberLong(3)
          },
          "optimeDate" : ISODate("2019-04-21T06:38:38Z"),
          "optimeDurableDate" : ISODate("2019-04-21T06:38:38Z"),
          "lastHeartbeat" : ISODate("2019-04-21T06:38:49.380Z"),
          "lastHeartbeatRecv" : ISODate("2019-04-21T06:38:49.635Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "electionTime" : Timestamp(1555828429, 1),
          "electionDate" : ISODate("2019-04-21T06:33:49Z"),
          "configVersion" : 1
        },
        {
          "_id" : 2,
          "name" : "192.168.90.132:21000",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 319,
          "optime" : {
            "ts" : Timestamp(1555828718, 1),
            "t" : NumberLong(3)
          },
          "optimeDate" : ISODate("2019-04-21T06:38:38Z"),
          "syncingTo" : "192.168.90.131:21000",
          "syncSourceHost" : "192.168.90.131:21000",
          "syncSourceId" : 1,
          "infoMessage" : "",
          "configVersion" : 1,
          "self" : true,
          "lastHeartbeatMessage" : ""
        }
      ],
      "ok" : 1,
      ...
    }

The output shows that the config cluster was deployed successfully and that the election chose 131 as the primary node.
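Reading the primary out of a long status document by eye is error-prone. The following is a small sketch in plain JavaScript that summarises an object shaped like the `rs.status()` result; the helper name `summariseReplSet` and the trimmed sample object are assumptions made for illustration, and only the `set`, `members`, `name`, `health`, and `stateStr` fields shown in the output are used.

```javascript
// Summarise an rs.status()-shaped object: who is primary, and is every member healthy?
function summariseReplSet(status) {
  const primaries = status.members.filter((m) => m.stateStr === "PRIMARY");
  const unhealthy = status.members.filter((m) => m.health !== 1);
  return {
    set: status.set,
    // Exactly one primary is expected; anything else is reported as null.
    primary: primaries.length === 1 ? primaries[0].name : null,
    secondaries: status.members
      .filter((m) => m.stateStr === "SECONDARY")
      .map((m) => m.name),
    healthy: unhealthy.length === 0,
  };
}

// Trimmed copy of the status output; only the fields the helper reads are kept.
const sampleStatus = {
  set: "replconf",
  members: [
    { _id: 0, name: "192.168.90.130:21000", health: 1, stateStr: "SECONDARY" },
    { _id: 1, name: "192.168.90.131:21000", health: 1, stateStr: "PRIMARY" },
    { _id: 2, name: "192.168.90.132:21000", health: 1, stateStr: "SECONDARY" },
  ],
};

console.log(summariseReplSet(sampleStatus));
```

In the mongo shell the same function could be called as `summariseReplSet(rs.status())`, since the shell is itself a JavaScript environment.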

3.2 Deploying the shard cluster

3.2.1 Create a directory tree for each of the three shards

    # run from /usr/local/mongo
    mkdir -p shard1/{data,conf,log}
    mkdir -p shard2/{data,conf,log}
    mkdir -p shard3/{data,conf,log}
    touch shard1/log/shard1.log
    touch shard2/log/shard2.log
    touch shard3/log/shard3.log

3.2.2 Create the configuration files

    # mongod.conf

    # for documentation of all options, see:
    #   http://docs.mongodb.org/manual/reference/configuration-options/

    # Where and how to store data.
    storage:
      dbPath: /usr/local/mongo/shard1/data
      journal:
        enabled: true
        commitIntervalMs: 200
      mmapv1:
        smallFiles: true
    #  wiredTiger:

    # where to write logging data.
    systemLog:
      destination: file
      logAppend: true
      path: /usr/local/mongo/shard1/log/shard1.log

    # network interfaces
    net:
      port: 22001
      bindIp: 0.0.0.0
      maxIncomingConnections: 1000

    # how the process runs
    processManagement:
      fork: true

    #security:

    #operationProfiling:

    #replication:
    replication:
      replSetName: shard1
      oplogSizeMB: 4096

    #sharding:
    sharding:
      clusterRole: shardsvr

    ## Enterprise-Only Options:

    #auditLog:

    #snmp:

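Create one such file for each of shard1, shard2, and shard3, adjusting the data path, log path, port (22001, 22002, 22003), and replSetName to match.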
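Since the three files differ only in the shard number, they could be generated instead of edited by hand. The sketch below is plain JavaScript for Node.js; the function name `renderShardConf` and the rule `port = 22000 + n` are assumptions that simply mirror the ports used in this article, and the `mmapv1` block is left out for brevity.

```javascript
// Render the per-shard mongod config text; only the shard number changes between files.
function renderShardConf(n, baseDir = "/usr/local/mongo") {
  const name = `shard${n}`;
  const port = 22000 + n; // 22001, 22002, 22003, matching the article's port plan
  return [
    "storage:",
    `  dbPath: ${baseDir}/${name}/data`,
    "  journal:",
    "    enabled: true",
    "    commitIntervalMs: 200",
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: ${baseDir}/${name}/log/${name}.log`,
    "net:",
    `  port: ${port}`,
    "  bindIp: 0.0.0.0",
    "  maxIncomingConnections: 1000",
    "processManagement:",
    "  fork: true",
    "replication:",
    `  replSetName: ${name}`,
    "  oplogSizeMB: 4096",
    "sharding:",
    "  clusterRole: shardsvr",
    "",
  ].join("\n");
}

// Print all three files; redirect each one to shardN/conf/shardN.conf as needed.
for (const n of [1, 2, 3]) {
  console.log(`# ---- shard${n}.conf ----`);
  console.log(renderShardConf(n));
}
```

Generating the files from one template avoids the copy-and-paste slips (a forgotten port or replSetName) that are easy to make when three near-identical files are maintained by hand.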

3.2.3 Starting the shards

    mongod -f /usr/local/mongo/shard1/conf/shard1.conf
    mongod -f /usr/local/mongo/shard2/conf/shard2.conf
    mongod -f /usr/local/mongo/shard3/conf/shard3.conf

Use netstat -lntup to check the processes:

    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address      Foreign Address    State    PID/Program name
    tcp        0      0 0.0.0.0:22001      0.0.0.0:*          LISTEN   1891/mongod
    tcp        0      0 0.0.0.0:22002      0.0.0.0:*          LISTEN   1974/mongod
    tcp        0      0 0.0.0.0:22003      0.0.0.0:*          LISTEN   2026/mongod
    tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN   -
    tcp        0      0 127.0.0.1:6010     0.0.0.0:*          LISTEN   -
    tcp        0      0 0.0.0.0:21000      0.0.0.0:*          LISTEN   1779/mongod

All the processes are up.

3.2.4 Initializing the replica set of each shard

Log in on 130:

    mongo 192.168.90.130:22001

Define the replica set members, including the arbiter node:

    config = {
      _id: "shard1",
      members: [
        { _id: 0, host: "192.168.90.130:22001" },
        { _id: 1, host: "192.168.90.131:22001" },
        { _id: 2, host: "192.168.90.132:22001", arbiterOnly: true }
      ]
    }
    rs.initiate(config)
    rs.status()

Log in on 131 and 132 for the other two shards. The node on which a replica set is initialized is normally the one that first becomes its primary.

On 131 (shard2):

    mongo 192.168.90.131:22002

    config = {
      _id: "shard2",
      members: [
        { _id: 0, host: "192.168.90.130:22002", arbiterOnly: true },
        { _id: 1, host: "192.168.90.131:22002" },
        { _id: 2, host: "192.168.90.132:22002" }
      ]
    }
    rs.initiate(config)
    rs.status()

On 132 (shard3):

    mongo 192.168.90.132:22003

    config = {
      _id: "shard3",
      members: [
        { _id: 0, host: "192.168.90.130:22003" },
        { _id: 1, host: "192.168.90.131:22003", arbiterOnly: true },
        { _id: 2, host: "192.168.90.132:22003" }
      ]
    }
    rs.initiate(config)
    rs.status()

3.3 Configuring the mongos router cluster

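3.3.1 Create the directories

    mkdir -p ./mongos/{conf,log}

mongos stores neither data nor metadata; it only dispatches requests. At startup it fetches the metadata from the config cluster and keeps it in memory, so no data directory is needed.

3.3.2 Configuration file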

    # mongos.conf

    # for documentation of all options, see:
    #   http://docs.mongodb.org/manual/reference/configuration-options/

    # Where and how to store data.
    #  engine:
    #  mmapv1:
    #  wiredTiger:

    # where to write logging data.
    systemLog:
      destination: file
      logAppend: true
      path: /usr/local/mongo/mongos/log/mongos.log

    # network interfaces
    net:
      port: 20000
      bindIp: 0.0.0.0
      maxIncomingConnections: 1000

    # how the process runs
    processManagement:
      fork: true

    # config server replica set: <replSetName>/<host:port>,...
    sharding:
      configDB: replconf/192.168.90.130:21000,192.168.90.131:21000,192.168.90.132:21000

    ## Enterprise-Only Options:

    #auditLog:

3.3.3 Starting mongos

    mongos -f /usr/local/mongo/mongos/conf/mongos.conf

Check that every component is listening:

    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address      Foreign Address    State    PID/Program name
    tcp        0      0 0.0.0.0:22001      0.0.0.0:*          LISTEN   1891/mongod
    tcp        0      0 0.0.0.0:22002      0.0.0.0:*          LISTEN   1974/mongod
    tcp        0      0 0.0.0.0:22003      0.0.0.0:*          LISTEN   2026/mongod
    tcp        0      0 0.0.0.0:22         0.0.0.0:*          LISTEN   -
    tcp        0      0 127.0.0.1:6010     0.0.0.0:*          LISTEN   -
    tcp        0      0 0.0.0.0:20000      0.0.0.0:*          LISTEN   2174/mongos
    tcp        0      0 0.0.0.0:21000      0.0.0.0:*          LISTEN   1779/mongod
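With five MongoDB processes per machine, comparing the netstat listing against the port plan by eye gets tedious. As a rough sketch (plain JavaScript for Node.js; the helper names `listeningPorts` and `missingPorts` are hypothetical), the listing could be parsed and checked against the expected ports. It only looks at IPv4 `tcp` lines in the column layout shown in this article.

```javascript
// Extract listening TCP ports from `netstat -lntup`-style text.
function listeningPorts(netstatText) {
  const ports = new Set();
  for (const line of netstatText.split("\n")) {
    const cols = line.trim().split(/\s+/);
    // Columns: proto, recv-q, send-q, local address, foreign address, state, pid/program
    if (cols[0] !== "tcp" || cols[5] !== "LISTEN") continue;
    const local = cols[3];
    ports.add(Number(local.slice(local.lastIndexOf(":") + 1)));
  }
  return ports;
}

// Return the expected ports that nothing is listening on.
function missingPorts(netstatText, expected) {
  const open = listeningPorts(netstatText);
  return expected.filter((port) => !open.has(port));
}

const sample = `
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:22001 0.0.0.0:* LISTEN 1891/mongod
tcp 0 0 0.0.0.0:22002 0.0.0.0:* LISTEN 1974/mongod
tcp 0 0 0.0.0.0:22003 0.0.0.0:* LISTEN 2026/mongod
tcp 0 0 0.0.0.0:20000 0.0.0.0:* LISTEN 2174/mongos
tcp 0 0 0.0.0.0:21000 0.0.0.0:* LISTEN 1779/mongod
`;

// mongos, config server, and the three shard members expected on every machine
console.log(missingPorts(sample, [20000, 21000, 22001, 22002, 22003])); // []
```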

3.3.4 Registering the shards in the admin database

Log in to any mongos and add the shards through the admin database:

    use admin
    db.runCommand({
      addShard: "shard1/192.168.90.130:22001,192.168.90.131:22001,192.168.90.132:22001"
    })
    db.runCommand({
      addShard: "shard2/192.168.90.130:22002,192.168.90.131:22002,192.168.90.132:22002"
    })
    db.runCommand({
      addShard: "shard3/192.168.90.130:22003,192.168.90.131:22003,192.168.90.132:22003"
    })
    sh.status()

Output:

    --- Sharding Status ---
      sharding version: {
        "_id" : 1,
        "minCompatibleVersion" : 5,
        "currentVersion" : 6,
        "clusterId" : ObjectId("5cba82d33290d8f4fb3ac8f7")
      }
      shards:
        { "_id" : "shard1", "host" : "shard1/192.168.90.130:22001,192.168.90.132:22001", "state" : 1 }
        { "_id" : "shard2", "host" : "shard2/192.168.90.130:22002,192.168.90.131:22002", "state" : 1 }
        { "_id" : "shard3", "host" : "shard3/192.168.90.131:22003,192.168.90.132:22003", "state" : 1 }
      active mongoses:
        ....
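The three addShard strings follow one pattern, `replSetName/host:port,host:port,...`, so they can be built rather than typed. A minimal sketch in plain JavaScript, where the helper name `shardConnString` is hypothetical and the `22000 + n` port rule again just mirrors this article's layout:

```javascript
// Build the "<replSetName>/<host:port>,..." string that addShard expects.
function shardConnString(replSetName, hosts, port) {
  return `${replSetName}/${hosts.map((h) => `${h}:${port}`).join(",")}`;
}

const hosts = ["192.168.90.130", "192.168.90.131", "192.168.90.132"];

// One addShard command document per shard.
const commands = [1, 2, 3].map((n) => ({
  addShard: shardConnString(`shard${n}`, hosts, 22000 + n),
}));

console.log(JSON.stringify(commands, null, 2));
```

In the mongo shell, each element of `commands` could then be passed to `db.runCommand(...)` while connected to the admin database through mongos.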

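3.3.5 Sharding a collection

    use admin
    // enable sharding on the database first (required before MongoDB 6.0)
    db.runCommand({
      enableSharding: "lishubindb"
    })
    db.runCommand({
      shardCollection: "lishubindb.table1", key: { _id: "hashed" }
    })
    db.runCommand({
      listShards: 1
    })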
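Why shard on a hashed key rather than on the raw value? The toy simulation below (plain JavaScript for Node.js) gives each shard an equal slice of a 32-bit key space and counts where sequential keys land, with and without hashing. It is only an illustration: `mix32` is the integer finaliser from MurmurHash3 standing in for MongoDB's own hash function, and `distribute` is a hypothetical helper, not how MongoDB actually assigns chunks.

```javascript
// 32-bit integer mixer (the finaliser step of MurmurHash3), a stand-in for the real hash.
function mix32(x) {
  let h = x >>> 0;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0; // back to an unsigned 32-bit value
}

// Each shard owns one equal-sized range of the 32-bit space; count keys per shard.
function distribute(keyCount, shardNames, hashed) {
  const counts = Object.fromEntries(shardNames.map((s) => [s, 0]));
  const rangeSize = Math.ceil(2 ** 32 / shardNames.length);
  for (let key = 0; key < keyCount; key++) {
    const position = hashed ? mix32(key) : key;
    const idx = Math.min(Math.floor(position / rangeSize), shardNames.length - 1);
    counts[shardNames[idx]] += 1;
  }
  return counts;
}

const shards = ["shard1", "shard2", "shard3"];
console.log(distribute(100000, shards, false)); // every key lands on shard1
console.log(distribute(100000, shards, true));  // roughly a third on each shard
```

Sequential keys all fall into the first range, so one shard takes every write; hashing scatters neighbouring keys across the whole space, which is the behaviour the `_id: "hashed"` key is chosen for.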

3.4 Testing

Insert 100,000 documents into the table1 collection:

    use lishubindb
    for (var i = 0; i < 100000; i++) {
      db.table1.insert({
        "name": "lishubin" + i, "num": i
      })
    }

Check how the documents were distributed across the shards:

    db.table1.stats()

    "ns" : "lishubindb.table1",
    "count" : 100000,
    "size" : 5888890,
    "storageSize" : 2072576,
    "totalIndexSize" : 4694016,
    "indexSizes" : {
      "_id_" : 1060864,
      "_id_hashed" : 3633152
    },
    "avgObjSize" : 58,
    "maxSize" : NumberLong(0),
    "nindexes" : 2,
    "nchunks" : 6,
    "shards" : {
      "shard3" : {
        "ns" : "lishubindb.table1",
        "size" : 1969256,
        "count" : 33440,
        "avgObjSize" : 58,
        "storageSize" : 712704,
        "capped" : false,
        ...
      },
      "shard2" : {
        "ns" : "lishubindb.table1",
        "size" : 1973048,
        "count" : 33505,
        "avgObjSize" : 58,
        "storageSize" : 708608,
        "capped" : false,
        ...
      },
      "shard1" : {
        "ns" : "lishubindb.table1",
        "size" : 1946586,
        "count" : 33055,
        "avgObjSize" : 58,
        "storageSize" : 651264,
        "capped" : false,
        ...
      },