
Debugging "Error Domain=AVFoundationErrorDomain Code=-11841"

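Background

While refactoring my video output module recently, I ran into a problem during debugging: calling startReading on AVAssetReader always returned NO. The code: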

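    if (![reader startReading]) {
        // reader.error describes why startReading failed
        NSLog(@"Error reading from file at URL: %@, error: %@", self.url, reader.error);
        return;
    }

The reader is created as follows. It is based mainly on GPUImageMovieComposition: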

    - (AVAssetReader *)createAssetReader
    {
        NSError *error = nil;
        AVAssetReader *assetReader = [AVAssetReader assetReaderWithAsset:self.compositon error:&error];
        if (!assetReader) {
            NSLog(@"Failed to create AVAssetReader: %@", error);
            return nil;
        }

        // Video: render through the video composition into bi-planar full-range YUV buffers
        NSDictionary *outputSettings = @{(id)kCVPixelBufferPixelFormatTypeKey: @(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)};
        AVAssetReaderVideoCompositionOutput *readerVideoOutput =
            [AVAssetReaderVideoCompositionOutput assetReaderVideoCompositionOutputWithVideoTracks:[_compositon tracksWithMediaType:AVMediaTypeVideo]
                                                                                   videoSettings:outputSettings];
        readerVideoOutput.videoComposition = self.videoComposition;
        readerVideoOutput.alwaysCopiesSampleData = NO;
        if ([assetReader canAddOutput:readerVideoOutput]) {
            [assetReader addOutput:readerVideoOutput];
        }

        // Audio: only read when there are audio tracks and an encoding target to receive them
        NSArray *audioTracks = [_compositon tracksWithMediaType:AVMediaTypeAudio];
        BOOL shouldRecordAudioTrack = ([audioTracks count] > 0) && (self.audioEncodingTarget != nil);
        if (shouldRecordAudioTrack) {
            [self.audioEncodingTarget setShouldInvalidateAudioSampleWhenDone:YES];
            // 16-bit little-endian integer linear PCM
            NSDictionary *audioReaderSetting = @{AVFormatIDKey: @(kAudioFormatLinearPCM),
                                                 AVLinearPCMIsBigEndianKey: @NO,
                                                 AVLinearPCMIsFloatKey: @NO,
                                                 AVLinearPCMBitDepthKey: @16};
            AVAssetReaderAudioMixOutput *readerAudioOutput =
                [AVAssetReaderAudioMixOutput assetReaderAudioMixOutputWithAudioTracks:audioTracks audioSettings:audioReaderSetting];
            readerAudioOutput.audioMix = self.audioMix;
            readerAudioOutput.alwaysCopiesSampleData = NO;
            if ([assetReader canAddOutput:readerAudioOutput]) {
                [assetReader addOutput:readerAudioOutput];
            }
        }

        return assetReader;
    }

Setting a breakpoint there and inspecting the reader's status and error showed Error Domain=AVFoundationErrorDomain Code=-11841. The documentation says:

AVErrorInvalidVideoComposition = -11841

You attempted to perform a video composition operation that is not supported.

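So the likely culprit was the line readerVideoOutput.videoComposition = self.videoComposition;, meaning something was wrong with the video composition itself. AVMutableVideoComposition plays a central role in video editing: it describes how the video tracks in an AVComposition are combined. My small demo combines two videos, so it needs one. Setting it up is somewhat involved and easy to get wrong, especially the timeRange property of AVMutableVideoCompositionInstruction, and that is exactly what caused this error.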

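Debugging

I immediately ran the composition through the following validation method:

    BOOL isValid = [self.videoComposition isValidForAsset:self.compositon timeRange:CMTimeRangeMake(kCMTimeZero, self.compositon.duration) validationDelegate:self];

isValid came back NO, which confirmed that the videoComposition was at fault. To see the details, I implemented the four methods of the AVVideoCompositionValidationHandling protocol and logged what each one reported. The protocol is very considerate; Apple presumably added it because mistakes here are so common. The code: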

    // Each callback returns YES so validation continues and reports every problem it finds.

    // A key of the composition (for example frameDuration or renderSize) has an invalid value
    - (BOOL)videoComposition:(AVVideoComposition *)videoComposition shouldContinueValidatingAfterFindingInvalidValueForKey:(NSString *)key
    {
        NSLog(@"%s===%@", __func__, key);
        return YES;
    }

    // A time range of the asset is not covered by any instruction
    - (BOOL)videoComposition:(AVVideoComposition *)videoComposition shouldContinueValidatingAfterFindingEmptyTimeRange:(CMTimeRange)timeRange
    {
        NSLog(@"%s===%@", __func__, CFBridgingRelease(CMTimeRangeCopyDescription(kCFAllocatorDefault, timeRange)));
        return YES;
    }

    // An instruction's timeRange is invalid, overlaps a prior instruction, or starts before it
    - (BOOL)videoComposition:(AVVideoComposition *)videoComposition shouldContinueValidatingAfterFindingInvalidTimeRangeInInstruction:(id<AVVideoCompositionInstruction>)videoCompositionInstruction
    {
        NSLog(@"%s===%@", __func__, videoCompositionInstruction);
        return YES;
    }

    // A layer instruction refers to a track ID that does not exist in the asset
    - (BOOL)videoComposition:(AVVideoComposition *)videoComposition shouldContinueValidatingAfterFindingInvalidTrackIDInInstruction:(id<AVVideoCompositionInstruction>)videoCompositionInstruction layerInstruction:(AVVideoCompositionLayerInstruction *)layerInstruction asset:(AVAsset *)asset
    {
        NSLog(@"%s===%@===%@", __func__, layerInstruction, asset);
        return YES;
    }

Running again, the third method produced output, so the problem was an instruction's timeRange. I reread how the two instructions were set up and still saw nothing wrong. The code:

    - (void)buildCompositionWithAssets:(NSArray *)assetsArray
    {
        for (int i = 0; i < assetsArray.count; i++) {
            AVURLAsset *asset = assetsArray[i];
            NSArray *videoTracks = [asset tracksWithMediaType:AVMediaTypeVideo];
            NSArray *audioTracks = [asset tracksWithMediaType:AVMediaTypeAudio];
            // assumes every asset has at least one video track and one audio track
            AVAssetTrack *videoTrack = videoTracks[0];
            AVAssetTrack *audioTrack = audioTracks[0];

            NSError *error = nil;
            // one new composition video track and audio track per asset
            AVMutableCompositionTrack *videoT = [self.avcompostions.mutableComps addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
            AVMutableCompositionTrack *audioT = [self.avcompostions.mutableComps addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
            // insert each source track's full timeRange at the running offset
            [videoT insertTimeRange:videoTrack.timeRange ofTrack:videoTrack atTime:self.offsetTime error:&error];
            [audioT insertTimeRange:audioTrack.timeRange ofTrack:audioTrack atTime:self.offsetTime error:nil];
            NSAssert(!error, @"insert error = %@", error);

            // one instruction per asset, showing only this asset's composition video track
            AVMutableVideoCompositionInstruction *instruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
            AVMutableVideoCompositionLayerInstruction *layerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoT];
            instruction.layerInstructions = @[layerInstruction];
            // the instruction's range is sized with asset.duration
            instruction.timeRange = CMTimeRangeMake(self.offsetTime, asset.duration);
            [self.instrucionArray addObject:instruction];

            // advance the offset by the asset's duration for the next asset
            self.offsetTime = CMTimeAdd(self.offsetTime, asset.duration);
        }
    }

The array holds the two loaded AVAsset objects. They are loaded like this:

    - (void)loadAssetFromPath:(NSArray *)paths
    {
        NSMutableArray *assetsArray = [NSMutableArray arrayWithCapacity:paths.count];
        dispatch_group_t dispatchGroup = dispatch_group_create();
        for (NSString *path in paths) {
            // first look in the cache
            AVAsset *asset = [self.assetsCache objectForKey:path];
            if (!asset) {
                // precise duration and timing: slower to load, but more accurate
                NSDictionary *inputOptions = @{AVURLAssetPreferPreciseDurationAndTimingKey: @YES};
                asset = [AVURLAsset URLAssetWithURL:[NSURL fileURLWithPath:path] options:inputOptions];
                NSAssert(asset != nil, @"Can't create asset from path: %@", path);
                NSArray *loadKeys = @[@"tracks", @"duration", @"composable"];
                [self loadAsset:asset withKeys:loadKeys usingDispatchGroup:dispatchGroup];
                // cache the asset
                [self.assetsCache setObject:asset forKey:path];
            }
            [assetsArray addObject:asset];
        }
        // runs on the main queue once every asset has finished loading its keys
        dispatch_group_notify(dispatchGroup, dispatch_get_main_queue(), ^{
            if (self.assetLoadBlock) {
                self.assetLoadBlock(assetsArray);
            }
        });
    }

    - (void)loadAsset:(AVAsset *)asset withKeys:(NSArray *)assetKeysToLoad usingDispatchGroup:(dispatch_group_t)dispatchGroup
    {
        dispatch_group_enter(dispatchGroup);
        [asset loadValuesAsynchronouslyForKeys:assetKeysToLoad completionHandler:^{
            for (NSString *key in assetKeysToLoad) {
                NSError *error = nil;
                if ([asset statusOfValueForKey:key error:&error] == AVKeyValueStatusFailed) {
                    NSLog(@"Key value loading failed for key:%@ with error: %@", key, error);
                    goto bail;
                }
            }
            if (![asset isComposable]) {
                NSLog(@"Asset is not composable");
                goto bail;
            }
        bail:
            // leave the group on every path so dispatch_group_notify can fire
            dispatch_group_leave(dispatchGroup);
        }];
    }

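In principle nothing should have gone wrong, but it did. Printing the two AVMutableVideoCompositionInstruction objects showed that their timeRanges did overlap. The timeRange of each AVMutableVideoCompositionInstruction must line up with the timeRange of the segment inserted into the AVMutableCompositionTrack. The documentation says this callback is invoked: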

to report a video composition instruction with a timeRange that's invalid, that overlaps with the timeRange of a prior instruction, or that contains times earlier than the timeRange of a prior instruction
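The conditions in that documentation sentence can be checked mechanically. The following is a minimal sketch in plain C (which also compiles as Objective-C), not AVFoundation's actual implementation: `RationalTime` and `TimeRange` are hypothetical stand-ins for CMTime and CMTimeRange, `first_bad_instruction` is a hypothetical helper, and treating a zero or negative duration as "invalid" is an assumption of the sketch.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for CMTime: value / timescale seconds. */
typedef struct {
    int64_t value;
    int64_t timescale;
} RationalTime;

/* Hypothetical, simplified stand-in for CMTimeRange. */
typedef struct {
    RationalTime start;
    RationalTime duration;
} TimeRange;

/* Exact comparison by cross-multiplication; returns -1, 0 or 1.
 * Assumes small timescales (such as 600 or 1000) so products fit in int64_t. */
static int time_compare(RationalTime a, RationalTime b) {
    assert(a.timescale > 0 && b.timescale > 0);
    int64_t lhs = a.value * b.timescale;
    int64_t rhs = b.value * a.timescale;
    return (lhs > rhs) - (lhs < rhs);
}

/* Exact sum, expressed in the timescale a.timescale * b.timescale. */
static RationalTime time_add(RationalTime a, RationalTime b) {
    assert(a.timescale > 0 && b.timescale > 0);
    RationalTime sum = { a.value * b.timescale + b.value * a.timescale,
                         a.timescale * b.timescale };
    return sum;
}

/* Returns the index of the first range that is invalid, overlaps the previous
 * range, or starts before it; returns -1 if every range passes.
 * "Invalid" here means a non-positive timescale or non-positive duration. */
static int first_bad_instruction(const TimeRange *ranges, int count) {
    for (int i = 0; i < count; i++) {
        const TimeRange *r = &ranges[i];
        if (r->start.timescale <= 0 || r->duration.timescale <= 0 ||
            r->duration.value <= 0) {
            return i;
        }
        if (i > 0) {
            RationalTime prev_end = time_add(ranges[i - 1].start, ranges[i - 1].duration);
            /* start < prev_end covers both "overlaps" and "earlier than" */
            if (time_compare(r->start, prev_end) < 0) {
                return i;
            }
        }
    }
    return -1;
}
```

With a first range of [0, 59.885) s, a hypothetical second range starting at 59.885 s passes, while one starting at 59.867 s is reported at index 1 because it begins 18 ms before the previous range ends. Cross-multiplying instead of converting to floating-point seconds keeps the comparison exact when ranges use different timescales, such as 600 and 1000.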

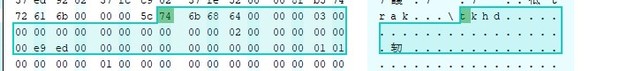

When inserting, I used videoTrack.timeRange, but when setting instruction.timeRange = CMTimeRangeMake(self.offsetTime, asset.duration); I used asset.duration. The two turn out to differ: asset.duration is {59885/1000 = 59.885}, while videoTrack.timeRange is {{0/1000 = 0.000}, {59867/1000 = 59.867}}.
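Worked through with these values for the first asset (offset 0), where the video segment is inserted with videoTrack.timeRange but the instruction is sized with asset.duration:

$$
\begin{aligned}
\text{video segment} &= [\,0,\ 59867/1000\,) = [\,0,\ 59.867\,)\ \text{s} \\
\text{instruction} &= [\,0,\ 59885/1000\,) = [\,0,\ 59.885\,)\ \text{s} \\
\Delta &= \frac{59885 - 59867}{1000}\ \text{s} = \frac{18}{1000}\ \text{s} = 18\ \text{ms}
\end{aligned}
$$

Because self.offsetTime also advances by asset.duration, the second asset's segment starts at 59.885 s, so between 59.867 s and 59.885 s neither composition video track has any inserted media.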

The two durations differ slightly. Which one is more accurate?

How a video file's duration is computed

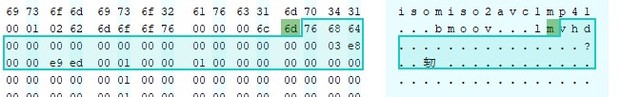

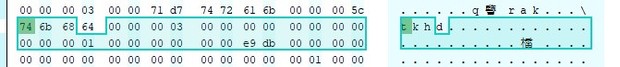

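The demo uses MP4 files. An MP4 file is made up of boxes of various types; a box can be thought of as a container holding data. The moov box holds the playback metadata, and its mvhd (movie header) box records the duration as two fields, timescale and duration, where duration / timescale = playable duration in seconds. Here the mvhd box has timescale 0x03e8 and duration 0xe9ed, i.e. 59885 / 1000 = 59.885 s. Next come the tkhd (track header) boxes, each of which describes a single track, as shown in the screenshots.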

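As the screenshots show, the two tracks have different durations: the video track's tkhd duration is 0xe9db, i.e. 59.867 s, while the audio track's is 0xe9ed, i.e. 59.885 s (tkhd durations are expressed in the mvhd timescale, 1000 here). The mvhd duration corresponds to the longest track, here the audio track, and it matches the asset.duration of 59.885 s seen earlier. So when computing timeRanges, it is better to consistently use the video track's timeRange, which is the range actually inserted into the composition, rather than AVAsset's duration. Fine-grained video editing is sensitive to even small timing differences like this one.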
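To read these fields without a hex editor, here is a minimal sketch in plain C (which also compiles as Objective-C). It assumes the caller has already located the mvhd box (inside moov) or a tkhd box (inside moov/trak), and it handles only the version 0 and version 1 layouts defined in ISO/IEC 14496-12; `read_header_duration` and its helpers are hypothetical names, not an existing API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* MP4 (ISO BMFF) stores integers big-endian, most significant byte first. */
static uint32_t read_u32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

static uint64_t read_u64(const uint8_t *p) {
    return ((uint64_t)read_u32(p) << 32) | read_u32(p + 4);
}

/* Reads the duration field of an 'mvhd' or 'tkhd' box starting at `box`
 * (at its 32-bit size field). For 'mvhd' the movie timescale is stored in
 * *timescale. 'tkhd' has no timescale field: its duration is in the mvhd
 * timescale, so *timescale is left unchanged.
 * Returns 0 on success, -1 for a truncated box, another box type, or a
 * 64-bit "largesize" box (not handled in this sketch). */
static int read_header_duration(const uint8_t *box, size_t len,
                                uint64_t *duration, uint32_t *timescale) {
    assert(box != NULL && duration != NULL && timescale != NULL);
    if (len < 12) return -1;
    uint32_t size = read_u32(box);
    if (size < 12 || size > len) return -1;
    uint8_t version = box[8]; /* followed by 3 bytes of flags */

    if (memcmp(box + 4, "mvhd", 4) == 0) {
        /* v0: 32-bit creation/modification times; v1: 64-bit */
        size_t ts_off = (version == 1) ? 28 : 20;
        if (size < ts_off + 4 + (version == 1 ? 8 : 4)) return -1;
        *timescale = read_u32(box + ts_off);
        *duration = (version == 1) ? read_u64(box + ts_off + 4)
                                   : read_u32(box + ts_off + 4);
        return 0;
    }
    if (memcmp(box + 4, "tkhd", 4) == 0) {
        /* skip creation, modification, track_ID and a reserved word */
        size_t dur_off = (version == 1) ? 36 : 28;
        if (size < dur_off + (version == 1 ? 8 : 4)) return -1;
        *duration = (version == 1) ? read_u64(box + dur_off)
                                   : read_u32(box + dur_off);
        return 0;
    }
    return -1;
}
```

Fed box bytes carrying the values from the screenshots (timescale 0x03e8, mvhd duration 0xe9ed, video tkhd duration 0xe9db), it yields 1000, 59885 and 59867, i.e. 59.885 s for the movie and 59.867 s for the video track. In the app itself, reading videoTrack.timeRange through AVFoundation is simpler; the parser only shows where the two numbers come from.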