該文章是基於 Hadoop2.7.6_01_部署 、 Hive-1.2.1_01_安裝部署 進行的 1. 前言 在一個完整的大數據處理系統中,除了hdfs+mapreduce+hive組成分析系統的核心之外,還需要數據採集、結果數據導出、任務調度等不可或缺的輔助系統,而這些輔助工具在hadoop生態 ...

該文章是基於 Hadoop2.7.6_01_部署 、 Hive-1.2.1_01_安裝部署 進行的

1. 前言

在一個完整的大數據處理系統中,除了hdfs+mapreduce+hive組成分析系統的核心之外,還需要數據採集、結果數據導出、任務調度等不可或缺的輔助系統,而這些輔助工具在hadoop生態體系中都有便捷的開源框架,如圖所示:

1.1. 概述

sqoop是apache旗下一款“Hadoop和關係資料庫伺服器之間傳送數據”的工具。

導入數據:MySQL,Oracle導入數據到Hadoop的HDFS、HIVE、HBASE等數據存儲系統;

導出數據:從Hadoop的文件系統中導出數據到關係資料庫

1.3. 工作機制

將導入或導出命令翻譯成mapreduce程式來實現

在翻譯出的mapreduce中主要是對inputformat和outputformat進行定製

2. Sqoop的安裝部署

2.1. 軟體部署

1 [yun@mini01 software]$ pwd 2 /app/software 3 [yun@mini01 software]$ tar xf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz 4 [yun@mini01 software]$ mv sqoop-1.4.7.bin__hadoop-2.6.0 /app/sqoop-1.4.7 5 [yun@mini01 software]$ cd /app/ 6 [yun@mini01 ~]$ ln -s sqoop-1.4.7/ sqoop 7 [yun@mini01 ~]$ ll 8 total 28 9 ………… 10 lrwxrwxrwx 1 yun yun 12 Aug 2 11:40 sqoop -> sqoop-1.4.7/ 11 drwxr-xr-x 9 yun yun 318 Dec 19 2017 sqoop-1.4.7

2.2. 配置修改

1 [yun@mini01 conf]$ pwd 2 /app/sqoop/conf 3 [yun@mini01 conf]$ ll 4 total 32 5 -rw-rw-r-- 1 yun yun 3895 Dec 19 2017 oraoop-site-template.xml 6 -rw-rw-r-- 1 yun yun 1404 Dec 19 2017 sqoop-env-template.cmd 7 -rwxr-xr-x 1 yun yun 1345 Dec 19 2017 sqoop-env-template.sh 8 -rw-rw-r-- 1 yun yun 6044 Dec 19 2017 sqoop-site-template.xml 9 -rw-rw-r-- 1 yun yun 6044 Dec 19 2017 sqoop-site.xml 10 [yun@mini01 conf]$ cp -a sqoop-env-template.sh sqoop-env.sh 11 [yun@mini01 conf]$ cat sqoop-env.sh 12 # Licensed to the Apache Software Foundation (ASF) under one or more 13 ……………… 14 15 # Set Hadoop-specific environment variables here. 16 17 #Set path to where bin/hadoop is available # 修改的配置 18 export HADOOP_COMMON_HOME=${HADOOP_HOME} 19 20 #Set path to where hadoop-*-core.jar is available # 修改的配置 21 export HADOOP_MAPRED_HOME=${HADOOP_HOME} 22 23 #set the path to where bin/hbase is available 24 #export HBASE_HOME= 25 26 #Set the path to where bin/hive is available # 修改的配置 27 export HIVE_HOME=${HIVE_HOME} 28 29 #Set the path for where zookeper config dir is 30 #export ZOOCFGDIR=

2.3. 加入mysql的jdbc驅動包

1 [yun@mini01 software]$ pwd 2 /app/software 3 [yun@mini01 software]$ cp -a mysql-connector-java-5.1.46.jar /app/sqoop/lib

2.4. 加入hive的執行包

1 [yun@mini01 lib]$ pwd 2 /app/hive/lib 3 [yun@mini01 lib]$ cp -a hive-exec-1.2.1.jar /app/sqoop/lib/

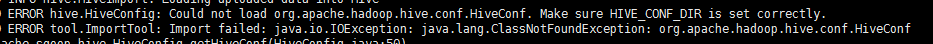

避免出現

2.5. 驗證啟動

1 [yun@mini01 bin]$ pwd 2 /app/sqoop/bin 3 [yun@mini01 bin]$ ./sqoop-version 4 18/08/02 14:56:54 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 5 Sqoop 1.4.7 6 git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8 7 Compiled by maugli on Thu Dec 21 15:59:58 STD 2017 8 [yun@mini01 bin]$ ./sqoop help # 查看幫助 9 18/08/02 15:30:00 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 10 usage: sqoop COMMAND [ARGS] 11 12 Available commands: 13 codegen Generate code to interact with database records 14 create-hive-table Import a table definition into Hive 15 eval Evaluate a SQL statement and display the results 16 export Export an HDFS directory to a database table 17 help List available commands 18 import Import a table from a database to HDFS 19 import-all-tables Import tables from a database to HDFS 20 import-mainframe Import datasets from a mainframe server to HDFS 21 job Work with saved jobs 22 list-databases List available databases on a server 23 list-tables List available tables in a database 24 merge Merge results of incremental imports 25 metastore Run a standalone Sqoop metastore 26 version Display version information 27 28 See 'sqoop help COMMAND' for information on a specific command.

3. 資料庫信息

1 # 建庫 2 CREATE DATABASE sqoop_test DEFAULT CHARACTER SET utf8 ; 3 # 建賬號 資料庫在mini03機器上 4 grant all on sqoop_test.* to sqoop_test@'%' identified by 'sqoop_test'; 5 grant all on sqoop_test.* to sqoop_test@'mini03' identified by 'sqoop_test'; 6 # 刷新許可權 7 flush privileges;

3.1. 表信息

表emp:

|

id |

name |

deg |

salary |

dept |

|

1201 |

gopal |

manager |

50000 |

TP |

|

1202 |

manisha |

Proof reader |

50000 |

TP |

|

1203 |

khalil |

php dev |

30000 |

AC |

|

1204 |

prasanth |

php dev |

30000 |

AC |

|

1205 |

kranthi |

admin |

20000 |

TP |

表emp_add:

|

id |

name |

deg |

salary |

|

1201 |

288A |

vgiri |

jublee |

|

1202 |

108I |

aoc |

sec-bad |

|

1203 |

144Z |

pgutta |

hyd |

|

1204 |

78B |

old city |

sec-bad |

|

1205 |

720X |

hitec |

sec-bad |

表emp_conn:

|

id |

name |

deg |

|

1201 |

2356742 |

|

|

1202 |

1661663 |

|

|

1203 |

8887776 |

|

|

1204 |

9988774 |

|

|

1205 |

1231231 |

4. Sqoop的數據導入

“導入工具”導入單個表從RDBMS到HDFS。表中的每一行被視為HDFS的記錄。所有記錄都存儲為文本文件的文本數據(或者Avro、sequence文件等二進位數據)

1 $ sqoop import (generic-args) (import-args) 2 $ sqoop-import (generic-args) (import-args)

4.1. 導入表數據到HDFS

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import \ 4 --connect jdbc:mysql://mini03:3306/sqoop_test \ 5 --username sqoop_test \ 6 --password sqoop_test \ 7 --table emp \ 8 --m 1 9 18/08/02 15:57:55 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 10 18/08/02 15:57:55 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 11 ……………… 12 18/08/02 15:58:47 INFO mapreduce.Job: map 100% reduce 0% 13 18/08/02 15:58:47 INFO mapreduce.Job: Job job_1533196573365_0001 completed successfully 14 ……………… 15 18/08/02 15:58:47 INFO mapreduce.ImportJobBase: Transferred 151 bytes in 47.2865 seconds (3.1933 bytes/sec) 16 18/08/02 15:58:47 INFO mapreduce.ImportJobBase: Retrieved 5 records.

查看導入的數據

1 [yun@mini02 ~]$ hadoop fs -ls /user/yun/emp 2 Found 2 items 3 -rw-r--r-- 2 yun supergroup 0 2018-08-02 15:58 /user/yun/emp/_SUCCESS 4 -rw-r--r-- 2 yun supergroup 151 2018-08-02 15:58 /user/yun/emp/part-m-00000 5 [yun@mini02 ~]$ hadoop fs -cat /user/yun/emp/part-m-00000 6 1201,gopal,manager,50000,TP 7 1202,manisha,Proof reader,50000,TP 8 1203,khalil,php dev,30000,AC 9 1204,prasanth,php dev,30000,AC 10 1205,kranthi,admin,20000,TP

4.2. 導入表到HDFS指定目錄

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --target-dir /sqoop_test/table_emp/queryresult \ 6 --table emp --num-mappers 1

註意:如果沒有目錄,那麼會創建

查看導入的數據

1 [yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult 2 Found 2 items 3 -rw-r--r-- 2 yun supergroup 0 2018-08-02 17:57 /sqoop_test/table_emp/queryresult/_SUCCESS 4 -rw-r--r-- 2 yun supergroup 151 2018-08-02 17:57 /sqoop_test/table_emp/queryresult/part-m-00000 5 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult/part-m-00000 6 1201,gopal,manager,50000,TP 7 1202,manisha,Proof reader,50000,TP 8 1203,khalil,php dev,30000,AC 9 1204,prasanth,php dev,30000,AC 10 1205,kranthi,admin,20000,TP

4.3. 導入關係表到HIVE

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --table emp --hive-import \ 6 --num-mappers 1 7 18/08/02 17:40:45 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7 8 18/08/02 17:40:45 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead. 9 ……………… 10 18/08/02 17:41:09 INFO mapreduce.ImportJobBase: Transferred 151 bytes in 20.6744 seconds (7.3037 bytes/sec) 11 18/08/02 17:41:09 INFO mapreduce.ImportJobBase: Retrieved 5 records. 12 18/08/02 17:41:09 INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table emp 13 18/08/02 17:41:10 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1 14 18/08/02 17:41:10 WARN hive.TableDefWriter: Column salary had to be cast to a less precise type in Hive 15 18/08/02 17:41:10 INFO hive.HiveImport: Loading uploaded data into Hive 16 18/08/02 17:41:12 INFO hive.HiveImport: 17 18/08/02 17:41:12 INFO hive.HiveImport: Logging initialized using configuration in jar:file:/app/sqoop-1.4.7/lib/hive-exec-1.2.1.jar!/hive-log4j.properties 18 18/08/02 17:41:20 INFO hive.HiveImport: OK 19 18/08/02 17:41:20 INFO hive.HiveImport: Time taken: 1.677 seconds 20 18/08/02 17:41:20 INFO hive.HiveImport: Loading data to table default.emp 21 18/08/02 17:41:21 INFO hive.HiveImport: Table default.emp stats: [numFiles=1, totalSize=151] 22 18/08/02 17:41:21 INFO hive.HiveImport: OK 23 18/08/02 17:41:21 INFO hive.HiveImport: Time taken: 0.629 seconds 24 18/08/02 17:41:21 INFO hive.HiveImport: Hive import complete. 25 18/08/02 17:41:21 INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.

查看導入的數據

1 hive (default)> show tables; 2 OK 3 emp 4 Time taken: 0.031 seconds, Fetched: 1 row(s) 5 hive (default)> select * from emp; 6 OK 7 1201 gopal manager 50000.0 TP 8 1202 manisha Proof reader 50000.0 TP 9 1203 khalil php dev 30000.0 AC 10 1204 prasanth php dev 30000.0 AC 11 1205 kranthi admin 20000.0 TP 12 Time taken: 0.489 seconds, Fetched: 5 row(s)

4.4. 導入表到HIVE指定庫指定表

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --table emp \ 6 --delete-target-dir \ 7 --fields-terminated-by '\t' \ 8 --hive-import \ 9 --hive-database sqoop_test \ 10 --hive-table hive_emp \ 11 --num-mappers 1

註意:hive的庫sqoop_test,必須先建立。否則會報:FAILED: SemanticException [Error 10072]: Database does not exist: sqoop_test

查看導入的數據

1 0: jdbc:hive2://mini01:10000> use sqoop_test; 2 No rows affected (0.049 seconds) 3 0: jdbc:hive2://mini01:10000> show tables; 4 +-----------+--+ 5 | tab_name | 6 +-----------+--+ 7 | hive_emp | 8 +-----------+--+ 9 1 row selected (0.076 seconds) 10 0: jdbc:hive2://mini01:10000> select * from hive_emp; 11 +--------------+----------------+---------------+------------------+----------------+--+ 12 | hive_emp.id | hive_emp.name | hive_emp.deg | hive_emp.salary | hive_emp.dept | 13 +--------------+----------------+---------------+------------------+----------------+--+ 14 | 1201 | gopal | manager | 50000.0 | TP | 15 | 1202 | manisha | Proof reader | 50000.0 | TP | 16 | 1203 | khalil | php dev | 30000.0 | AC | 17 | 1204 | prasanth | php dev | 30000.0 | AC | 18 | 1205 | kranthi | admin | 20000.0 | TP | 19 +--------------+----------------+---------------+------------------+----------------+--+ 20 5 rows selected (0.162 seconds)

4.5. 導入表數據子集

4.5.1. where子句的導入

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --table emp_add \ 6 --where "city ='sec-bad'" \ 7 --target-dir /sqoop_test/table_emp/queryresult2 \ 8 --num-mappers 1

查看導入的數據

1 [yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult2 2 Found 2 items 3 -rw-r--r-- 2 yun supergroup 0 2018-08-02 19:33 /sqoop_test/table_emp/queryresult2/_SUCCESS 4 -rw-r--r-- 2 yun supergroup 72 2018-08-02 19:33 /sqoop_test/table_emp/queryresult2/part-m-00000 5 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult2/part-m-00000 6 1202,108I,aoc,sec-bad 7 1204,78B,old city,sec-bad 8 1205,720X,hitec,sec-bad

4.5.2. query按需導入

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --query 'select id,name,deg from emp WHERE id>1203 and $CONDITIONS' \ 6 --split-by id \ 7 --fields-terminated-by '\t' \ 8 --target-dir /sqoop_test/table_emp/queryresult4 \ 9 --num-mappers 1

查看導入的數據

1 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00000 2 1204 prasanth php dev 3 1205 kranthi admin

4.6. 增量導入

所需參數

1 --check-column (col) 2 --incremental (mode) # mode include append and lastmodified 3 --last-value (value)

示例

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \ 4 --username sqoop_test --password sqoop_test \ 5 --table emp \ 6 --incremental append \ 7 --check-column id \ 8 --last-value 1202 \ 9 --fields-terminated-by '\t' \ 10 --target-dir /sqoop_test/table_emp/queryresult4 \ 11 --num-mappers 1

查看導入的數據

1 [yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult4/ 2 Found 3 items 3 -rw-r--r-- 2 yun supergroup 0 2018-08-02 19:47 /sqoop_test/table_emp/queryresult4/_SUCCESS 4 -rw-r--r-- 2 yun supergroup 41 2018-08-02 19:47 /sqoop_test/table_emp/queryresult4/part-m-00000 5 -rw-r--r-- 2 yun supergroup 88 2018-08-02 20:16 /sqoop_test/table_emp/queryresult4/part-m-00001 6 [yun@mini02 ~]$ 7 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00000 8 1204 prasanth php dev 9 1205 kranthi admin 10 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00001 11 1203 khalil php dev 30000 AC 12 1204 prasanth php dev 30000 AC 13 1205 kranthi admin 20000 TP

5. Sqoop的數據導出

將數據從HDFS導出到RDBMS資料庫

導出前,目標表必須存在於目標資料庫中。

- 預設操作是從將文件中的數據使用INSERT語句插入到表中

- 更新模式下,是生成UPDATE語句更新表數據

1 $ sqoop export (generic-args) (export-args) 2 $ sqoop-export (generic-args) (export-args)

5.1. 示例

數據

1 [yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult 2 Found 2 items 3 -rw-r--r-- 2 yun supergroup 0 2018-08-02 17:57 /sqoop_test/table_emp/queryresult/_SUCCESS 4 -rw-r--r-- 2 yun supergroup 151 2018-08-02 17:57 /sqoop_test/table_emp/queryresult/part-m-00000 5 [yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult/part-m-00000 6 1201,gopal,manager,50000,TP 7 1202,manisha,Proof reader,50000,TP 8 1203,khalil,php dev,30000,AC 9 1204,prasanth,php dev,30000,AC 10 1205,kranthi,admin,20000,TP

1、首先需要手動創建mysql中的目標表

1 MariaDB [(none)]> use sqoop_test; 2 Database changed 3 4 MariaDB [sqoop_test]> CREATE TABLE employee ( 5 id INT NOT NULL PRIMARY KEY, 6 name VARCHAR(20), 7 deg VARCHAR(20), 8 salary INT, 9 dept VARCHAR(10)); 10 Query OK, 0 rows affected (0.00 sec) 11 12 MariaDB [sqoop_test]> show tables; 13 +----------------------+ 14 | Tables_in_sqoop_test | 15 +----------------------+ 16 | emp | 17 | emp_add | 18 | emp_conn | 19 | employee | 20 +----------------------+ 21 4 rows in set (0.00 sec)

2、然後執行導出命令

1 [yun@mini01 sqoop]$ pwd 2 /app/sqoop 3 [yun@mini01 sqoop]$ bin/sqoop export \ 4 --connect jdbc:mysql://mini03:3306/sqoop_test \ 5 --username sqoop_test --password sqoop_test \ 6 --table employee \ 7 --export-dir /sqoop_test/table_emp/queryresult/

3、驗證表mysql命令行

1 MariaDB [sqoop_test]> select * from employee; 2 +------+----------+--------------+--------+------+ 3 | id | name | deg | salary | dept | 4 +------+----------+--------------+--------+------+ 5 | 1201 | gopal | manager | 50000 | TP | 6 | 1202 | manisha | Proof reader | 50000 | TP | 7 | 1203 | khalil | php dev | 30000 | AC | 8 | 1204 | prasanth | php dev | 30000 | AC | 9 | 1205 | kranthi | admin | 20000 | TP | 10 +------+----------+--------------+--------+------+ 11 5 rows in set (0.00 sec)