
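Contents

- I. What Is Sharding

- II. Sharded Clusters

- III. How Data Is Partitioned

- IV. Sharding Strategies

- V. Sharded Cluster Architecture

- VI. Building a Sharded Cluster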

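I. What Is Sharding

Sharding is a method for distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high-throughput operations.

Database systems with large data sets or high-throughput applications can challenge the capacity of a single server. For example, a high query rate can exhaust the server's CPU capacity, and a working set larger than the system's RAM puts pressure on the I/O capacity of the disk drives.

There are two ways to address system growth: vertical scaling and horizontal scaling.

Vertical scaling means increasing the capacity of a single server, for example by using a more powerful CPU, adding more RAM, or increasing the amount of storage. Limits in available technology may prevent a single machine from being powerful enough for a given workload. In addition, cloud providers impose hard ceilings based on the hardware configurations they offer. As a result, vertical scaling has a practical maximum.

Horizontal scaling means dividing the data set and load across multiple servers and adding servers as needed to increase capacity. Although the speed or capacity of any single machine may be modest, each machine handles only part of the overall workload, which can be more efficient than a single high-speed, high-capacity server. Expanding capacity only requires adding servers as needed, which can cost less overall than high-end hardware for a single machine. The trade-off is increased complexity in the infrastructure and maintenance of the deployment.

MongoDB supports horizontal scaling through sharding.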

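II. Sharded Clusters

1. Components

- Shard: each shard contains a subset of the sharded data. Each shard must be deployed as a replica set.

- mongos: mongos acts as a query router, providing the interface between client applications and the sharded cluster. mongos can support hedged reads to minimize latency.

- Config servers: config servers store the cluster's metadata and configuration settings. Starting in MongoDB 3.4, config servers must be deployed as a replica set (CSRS).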

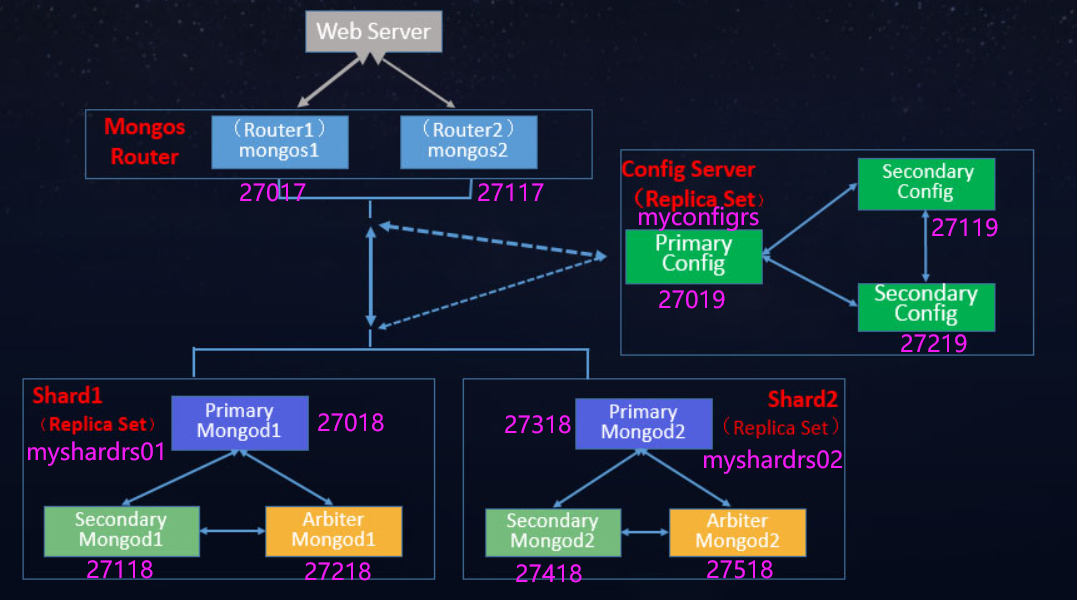

2. Interaction Between Components in a Sharded Cluster

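III. How Data Is Partitioned

The blocks of data produced by partitioning are called chunks. A sharded collection contains multiple chunks, and the shard on which each chunk lives is recorded on the config servers.

When mongos operates on a sharded collection, it uses the shard key to find the matching chunk and sends the request to the shard that holds that chunk.

Data is partitioned according to the sharding strategy, which consists of the shard key plus the sharding algorithm (hashed or ranged).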
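The chunk lookup described above can be sketched in a few lines of JavaScript. This is only an illustration of the idea, not how mongos is implemented: the chunk table, the shard names `shardA`/`shardB`, and the function `routeByShardKey` are all hypothetical.

```javascript
// Hypothetical chunk table, in the spirit of what the config servers record:
// each chunk covers [min, max) of the shard key and lives on one shard.
const chunks = [
  { min: -Infinity, max: 40, shard: "shardA" },
  { min: 40, max: 80, shard: "shardB" },
  { min: 80, max: Infinity, shard: "shardA" },
];

// Return the shard that owns the chunk containing the given shard key value.
function routeByShardKey(chunkTable, keyValue) {
  const chunk = chunkTable.find((c) => keyValue >= c.min && keyValue < c.max);
  if (!chunk) {
    throw new Error("no chunk covers shard key value " + keyValue);
  }
  return chunk.shard;
}

console.log(routeByShardKey(chunks, 25)); // shardA
console.log(routeByShardKey(chunks, 40)); // shardB (lower bound is inclusive)
```

Because the lookup needs only the shard key and the chunk table, any router holding a copy of the table can make the same routing decision.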

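IV. Sharding Strategies

1. Hashed sharding

Hashed sharding computes a hash of the shard key field's value. Each chunk is then assigned a range of hashed shard key values.

MongoDB computes the hash automatically when it resolves queries using a hashed index. Applications do not need to compute hashes.

Although a range of shard key values may be "close", their hashed values are unlikely to fall into the same chunk. Distributing data by hashed value promotes a more even data distribution, especially when the shard key changes monotonically.

However, hashed distribution means that range-based queries on the shard key are unlikely to target a single shard, which leads to more cluster-wide broadcast operations.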
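To make the effect on monotonically increasing keys concrete, here is a small sketch that spreads the keys 1 to 1000 over two shards by hash. It uses 32-bit FNV-1a purely as a stand-in; it is not the hash function MongoDB uses, and the helper names are hypothetical.

```javascript
// FNV-1a (32-bit): a simple stand-in hash, NOT the function MongoDB uses.
function fnv1a(text) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // keep it an unsigned 32-bit value
  }
  return hash;
}

// Split the 32-bit hash space into equal ranges, one per shard,
// and return the index of the range the key's hash falls into.
function shardForHashedKey(key, shardCount) {
  const rangeSize = 0x100000000 / shardCount;
  return Math.floor(fnv1a(String(key)) / rangeSize);
}

// Count how many of the keys 1..keyCount land on each shard.
function countPerShard(keyCount, shardCount) {
  const counts = new Array(shardCount).fill(0);
  for (let key = 1; key <= keyCount; key++) {
    counts[shardForHashedKey(key, shardCount)] += 1;
  }
  return counts;
}

console.log(countPerShard(1000, 2));
```

Neighbouring keys such as 41 and 42 get unrelated hash values, so consecutive inserts are scattered across the hash ranges instead of piling up in the last chunk.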

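2. Ranged sharding

Ranged sharding divides data into ranges based on the shard key values. Each chunk is then assigned a range of shard key values.

Shard keys with "close" values are more likely to live in the same chunk. This allows targeted operations, because mongos can route an operation to only those shards that contain the required data.

The efficiency of ranged sharding depends on the shard key chosen. A poorly considered shard key can lead to uneven data distribution, which can cancel out some of the benefits of sharding or even cause performance bottlenecks. See the MongoDB documentation on shard key selection for range-based sharding.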
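The targeting behaviour can be illustrated with a sketch that works out which shards a range query has to visit. The chunk boundaries and the shard names are invented for the example, and `shardsForRangeQuery` is a hypothetical helper rather than a MongoDB API.

```javascript
// Hypothetical range-sharded layout on a numeric shard key such as "age".
const rangeChunks = [
  { min: -Infinity, max: 30, shard: "shardA" },
  { min: 30, max: 60, shard: "shardB" },
  { min: 60, max: Infinity, shard: "shardC" },
];

// Shards whose chunks [min, max) overlap the closed query interval [low, high].
function shardsForRangeQuery(chunkTable, low, high) {
  const targets = new Set();
  for (const chunk of chunkTable) {
    if (low < chunk.max && high >= chunk.min) {
      targets.add(chunk.shard);
    }
  }
  return [...targets];
}

console.log(shardsForRangeQuery(rangeChunks, 35, 45)); // only shardB
console.log(shardsForRangeQuery(rangeChunks, 25, 35)); // shardA and shardB
```

With a hashed shard key the same query could not be narrowed this way, since adjacent key values map to unrelated hash ranges.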

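V. Sharded Cluster Architecture

Two shard replica sets (3 + 3), one config server replica set (3), and two router nodes (2),

for a total of 11 service processes.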
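As a quick sanity check of that count, the layout can be written down as data and summed. The object shape and the function name are illustrative only; the set names are the ones used in the configuration files later in this guide.

```javascript
// The topology described above, expressed as plain data.
const topology = {
  shardReplicaSets: [
    { name: "myshardrs01", members: 3 }, // primary + secondary + arbiter
    { name: "myshardrs02", members: 3 },
  ],
  configReplicaSet: { name: "myconfigr", members: 3 },
  mongosRouters: 2,
};

// Total number of mongod and mongos processes in the cluster.
function countProcesses(t) {
  const shardMembers = t.shardReplicaSets.reduce((sum, rs) => sum + rs.members, 0);
  return shardMembers + t.configReplicaSet.members + t.mongosRouters;
}

console.log(countProcesses(topology)); // 11
```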

VI. Building a Sharded Cluster

1. Hosts

| Role | Hostname | IP address |
|---|---|---|
| Shard node 1 | shard1 | 192.168.112.10 |
| Shard node 2 | shard2 | 192.168.112.20 |
| Config node | config-server | 192.168.112.30 |
| Router node | router | 192.168.112.40 |

2. Install MongoDB on all hosts

wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel70-4.4.6.tgz

tar xzvf mongodb-linux-x86_64-rhel70-4.4.6.tgz -C /usr/local

cd /usr/local/

ln -s /usr/local/mongodb-linux-x86_64-rhel70-4.4.6 /usr/local/mongodb

vim /etc/profile

# Append the following line to /etc/profile:

export PATH=/usr/local/mongodb/bin/:$PATH

source /etc/profile

3. Creating the Shard Replica Sets

3.1 First replica set: shard1

Run these steps on the shard1 host.

3.1.1 Prepare the data and log directories

mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs01_27018/log \
/usr/local/mongodb/sharded_cluster/myshardrs01_27018/data/db \
/usr/local/mongodb/sharded_cluster/myshardrs01_27118/log \
/usr/local/mongodb/sharded_cluster/myshardrs01_27118/data/db \
/usr/local/mongodb/sharded_cluster/myshardrs01_27218/log \
/usr/local/mongodb/sharded_cluster/myshardrs01_27218/data/db

3.1.2 Create the configuration files

myshardrs01_27018

vim /usr/local/mongodb/sharded_cluster/myshardrs01_27018/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27018/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.10
  port: 27018
replication:
  replSetName: myshardrs01
sharding:
  clusterRole: shardsvr

myshardrs01_27118

vim /usr/local/mongodb/sharded_cluster/myshardrs01_27118/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs01_27118/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27118/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27118/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.10
  port: 27118
replication:
  replSetName: myshardrs01
sharding:
  clusterRole: shardsvr

myshardrs01_27218

vim /usr/local/mongodb/sharded_cluster/myshardrs01_27218/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs01_27218/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27218/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs01_27218/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.10
  port: 27218
replication:
  replSetName: myshardrs01
sharding:
  clusterRole: shardsvr

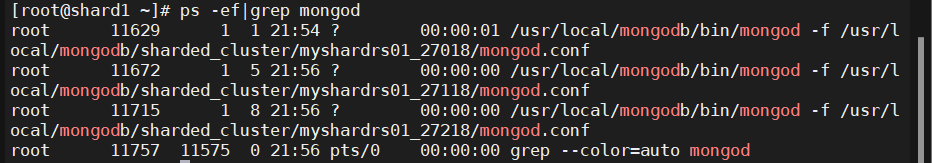
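Since the three files differ only in the port (and the directory derived from it), they could also be generated rather than typed by hand. The following is a minimal sketch under that assumption; `renderMongodConf` and its parameter names are hypothetical, and the output simply mirrors the fields used in the files above.

```javascript
// Render a mongod.conf for one replica set member.
// The directory layout <baseDir>/<setName>_<port> follows the convention above.
function renderMongodConf({ baseDir, setName, host, port, clusterRole }) {
  const dir = `${baseDir}/${setName}_${port}`;
  return [
    "systemLog:",
    "  destination: file",
    `  path: "${dir}/log/mongod.log"`,
    "  logAppend: true",
    "storage:",
    `  dbPath: "${dir}/data/db"`,
    "  journal:",
    "    enabled: true",
    "processManagement:",
    "  fork: true",
    `  pidFilePath: "${dir}/log/mongod.pid"`,
    "net:",
    `  bindIp: localhost,${host}`,
    `  port: ${port}`,
    "replication:",
    `  replSetName: ${setName}`,
    "sharding:",
    `  clusterRole: ${clusterRole}`,
  ].join("\n") + "\n";
}

// Print the three shard1 configurations.
for (const port of [27018, 27118, 27218]) {
  console.log(renderMongodConf({
    baseDir: "/usr/local/mongodb/sharded_cluster",
    setName: "myshardrs01",
    host: "192.168.112.10",
    port,
    clusterRole: "shardsvr",
  }));
}
```

Note that YAML nesting is significant here: `journal` must be indented under `storage`, and `enabled` under `journal`.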

3.1.3 Start the first replica set: one primary, one secondary, one arbiter

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27018/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27118/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs01_27218/mongod.conf

ps -ef|grep mongod

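3.1.4 Initialize the replica set and add the secondary and the arbiter

Connect to the node that will become the primary:

mongo --host 192.168.112.10 --port 27018

- Initialize the replica set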

rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.112.10:27018",

"ok" : 1

}

myshardrs01:SECONDARY>

myshardrs01:PRIMARY>

- Add the secondary node

rs.add("192.168.112.10:27118")

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1714658449, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714658449, 1)

}

- Add the arbiter node

rs.addArb("192.168.112.10:27218")

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1714658509, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714658509, 1)

}
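As an alternative to calling `rs.initiate()` with no arguments and then adding members one at a time, `rs.initiate()` also accepts a configuration document that lists all members up front. The sketch below only builds such a document in plain JavaScript; `buildReplicaSetConfig` is a hypothetical helper, and the hosts are the ones used for shard1 above.

```javascript
// Build a replica set configuration document: data-bearing members first,
// followed by an optional arbiter flagged with arbiterOnly.
function buildReplicaSetConfig(setName, dataHosts, arbiterHost) {
  const members = dataHosts.map((host, index) => ({ _id: index, host }));
  if (arbiterHost) {
    members.push({ _id: members.length, host: arbiterHost, arbiterOnly: true });
  }
  return { _id: setName, members };
}

const shard1Config = buildReplicaSetConfig(
  "myshardrs01",
  ["192.168.112.10:27018", "192.168.112.10:27118"],
  "192.168.112.10:27218"
);

console.log(JSON.stringify(shard1Config, null, 2));
// In the mongo shell, this document could be passed as rs.initiate(shard1Config).
```

Either approach should end in the same three-member set; the document form just makes the intended membership explicit in one place.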

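- View the replica set (it is enough to look at each member's role and health)

- rs.conf()

  - Purpose: displays the current configuration of the replica set. It returns a document containing the set name, version, protocol version, member list (each member's ID, host address, priority, role, and so on), settings, and other configuration details.

  - When to use: when you need to inspect or change the replica set configuration, for example to add a member, adjust a member's priority, or check the arbiter configuration. The returned configuration can then be modified and applied with rs.reconfig().

- rs.status()

  - Purpose: provides a snapshot of the replica set's current state, including each member's running state, primary or secondary role, health, replication progress, heartbeat information, and election information. It tells you whether the set is running normally, whether members are in sync, and whether the primary has failed.

  - When to use: when monitoring replica set health, troubleshooting connection problems, checking replication progress, or confirming a change of primary. By examining the output of rs.status(), an administrator can quickly identify and resolve problems in the cluster.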
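Because the full `rs.status()` output is long, it can help to reduce it to the role and health fields mentioned above. The helpers below are a sketch with hypothetical names; the sample object is a hand-trimmed stand-in that keeps only the `name`, `health`, and `stateStr` fields of a status document.

```javascript
// Reduce an rs.status()-shaped document to one short record per member.
function summarizeMembers(status) {
  return status.members.map((member) => ({
    name: member.name,
    state: member.stateStr,
    healthy: member.health === 1,
  }));
}

// True when some member reports itself as a healthy primary.
function hasHealthyPrimary(status) {
  return status.members.some((m) => m.stateStr === "PRIMARY" && m.health === 1);
}

// Trimmed, hand-written sample in the shape of rs.status() output.
const sampleStatus = {
  set: "myshardrs01",
  members: [
    { name: "192.168.112.10:27018", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.112.10:27118", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.112.10:27218", health: 1, stateStr: "ARBITER" },
  ],
};

console.log(summarizeMembers(sampleStatus));
console.log("healthy primary:", hasHealthyPrimary(sampleStatus));
// In the mongo shell, the same helpers could be applied to rs.status().
```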

3.2 Second replica set: shard2

Run these steps on the shard2 host.

3.2.1 Prepare the data and log directories

mkdir -p /usr/local/mongodb/sharded_cluster/myshardrs02_27318/log \
/usr/local/mongodb/sharded_cluster/myshardrs02_27318/data/db \
/usr/local/mongodb/sharded_cluster/myshardrs02_27418/log \
/usr/local/mongodb/sharded_cluster/myshardrs02_27418/data/db \
/usr/local/mongodb/sharded_cluster/myshardrs02_27518/log \
/usr/local/mongodb/sharded_cluster/myshardrs02_27518/data/db

3.2.2 Create the configuration files

myshardrs02_27318

vim /usr/local/mongodb/sharded_cluster/myshardrs02_27318/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27318/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.20
  port: 27318
replication:
  replSetName: myshardrs02
sharding:
  clusterRole: shardsvr

myshardrs02_27418

vim /usr/local/mongodb/sharded_cluster/myshardrs02_27418/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs02_27418/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27418/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27418/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.20
  port: 27418
replication:
  replSetName: myshardrs02
sharding:
  clusterRole: shardsvr

myshardrs02_27518

vim /usr/local/mongodb/sharded_cluster/myshardrs02_27518/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myshardrs02_27518/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27518/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myshardrs02_27518/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.20
  port: 27518
replication:
  replSetName: myshardrs02
sharding:
  clusterRole: shardsvr

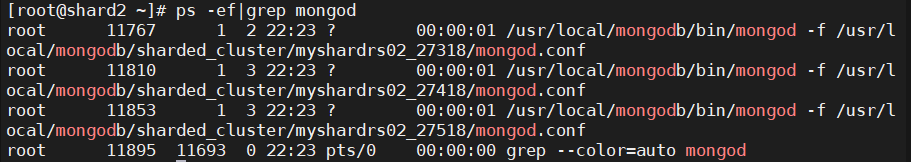

3.2.3 Start the second replica set: one primary, one secondary, one arbiter

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27318/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27418/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myshardrs02_27518/mongod.conf

ps -ef|grep mongod

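3.2.4 Initialize the replica set and add the secondary and the arbiter

Connect to the node that will become the primary:

mongo --host 192.168.112.20 --port 27318

- Initialize the replica set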

rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.112.20:27318",

"ok" : 1

}

myshardrs02:SECONDARY>

myshardrs02:PRIMARY>

- Add the secondary node

rs.add("192.168.112.20:27418")

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1714660065, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714660065, 1)

}

- Add the arbiter node

rs.addArb("192.168.112.20:27518")

{

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1714660094, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714660094, 1)

}

- View the replica set

rs.status()

{

"set" : "myshardrs02",

"date" : ISODate("2024-05-02T14:29:57.930Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"votingMembersCount" : 3,

"writableVotingMembersCount" : 2,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1714660197, 1),

"t" : NumberLong(1)

},

"lastCommittedWallTime" : ISODate("2024-05-02T14:29:57.868Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1714660197, 1),

"t" : NumberLong(1)

},

"readConcernMajorityWallTime" : ISODate("2024-05-02T14:29:57.868Z"),

"appliedOpTime" : {

"ts" : Timestamp(1714660197, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1714660197, 1),

"t" : NumberLong(1)

},

"lastAppliedWallTime" : ISODate("2024-05-02T14:29:57.868Z"),

"lastDurableWallTime" : ISODate("2024-05-02T14:29:57.868Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1714660147, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "electionTimeout",

"lastElectionDate" : ISODate("2024-05-02T14:27:07.797Z"),

"electionTerm" : NumberLong(1),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1714660027, 1),

"t" : NumberLong(-1)

},

"numVotesNeeded" : 1,

"priorityAtElection" : 1,

"electionTimeoutMillis" : NumberLong(10000),

"newTermStartDate" : ISODate("2024-05-02T14:27:07.802Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2024-05-02T14:27:07.899Z")

},

"members" : [

{

"_id" : 0,

"name" : "192.168.112.20:27318",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 403,

"optime" : {

"ts" : Timestamp(1714660197, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2024-05-02T14:29:57Z"),

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1714660027, 2),

"electionDate" : ISODate("2024-05-02T14:27:07Z"),

"configVersion" : 3,

"configTerm" : -1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.112.20:27418",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 132,

"optime" : {

"ts" : Timestamp(1714660187, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1714660187, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2024-05-02T14:29:47Z"),

"optimeDurableDate" : ISODate("2024-05-02T14:29:47Z"),

"lastHeartbeat" : ISODate("2024-05-02T14:29:57.035Z"),

"lastHeartbeatRecv" : ISODate("2024-05-02T14:29:57.113Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "192.168.112.20:27318",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 3,

"configTerm" : -1

},

{

"_id" : 2,

"name" : "192.168.112.20:27518",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 102,

"lastHeartbeat" : ISODate("2024-05-02T14:29:57.035Z"),

"lastHeartbeatRecv" : ISODate("2024-05-02T14:29:57.068Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"configVersion" : 3,

"configTerm" : -1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1714660197, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714660197, 1)

}

4. Creating the Config Server Replica Set

Run these steps on the config-server host.

4.1 Prepare the data and log directories

mkdir -p /usr/local/mongodb/sharded_cluster/myconfigrs_27019/log \
/usr/local/mongodb/sharded_cluster/myconfigrs_27019/data/db \
/usr/local/mongodb/sharded_cluster/myconfigrs_27119/log \
/usr/local/mongodb/sharded_cluster/myconfigrs_27119/data/db \
/usr/local/mongodb/sharded_cluster/myconfigrs_27219/log \
/usr/local/mongodb/sharded_cluster/myconfigrs_27219/data/db

4.2 Create the configuration files

myconfigrs_27019

vim /usr/local/mongodb/sharded_cluster/myconfigrs_27019/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27019/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.30
  port: 27019
replication:
  replSetName: myconfigr
sharding:
  clusterRole: configsvr

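Note: this configuration file differs from the one used for the shard nodes.

The difference is the cluster role: shardsvr marks a shard node, and configsvr marks a config node.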

myconfigrs_27119

vim /usr/local/mongodb/sharded_cluster/myconfigrs_27119/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myconfigrs_27119/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27119/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27119/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.30
  port: 27119
replication:
  replSetName: myconfigr
sharding:
  clusterRole: configsvr

myconfigrs_27219

vim /usr/local/mongodb/sharded_cluster/myconfigrs_27219/mongod.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/myconfigrs_27219/log/mongod.log"
  logAppend: true
storage:
  dbPath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27219/data/db"
  journal:
    enabled: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/myconfigrs_27219/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.30
  port: 27219
replication:
  replSetName: myconfigr
sharding:
  clusterRole: configsvr

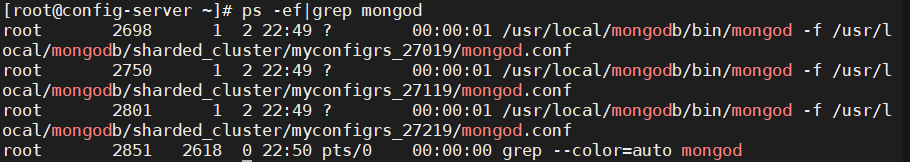

4.3 Start the replica set

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27019/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27119/mongod.conf

/usr/local/mongodb/bin/mongod -f /usr/local/mongodb/sharded_cluster/myconfigrs_27219/mongod.conf

ps -ef|grep mongod

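4.4 Initialize the replica set and add the secondary nodes

Connect to the node that will become the primary:

mongo --host 192.168.112.30 --port 27019

- Initialize the replica set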

rs.initiate()

{

"info2" : "no configuration specified. Using a default configuration for the set",

"me" : "192.168.112.30:27019",

"ok" : 1,

"$gleStats" : {

"lastOpTime" : Timestamp(1714702204, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"lastCommittedOpTime" : Timestamp(0, 0)

}

- Add the secondary nodes

rs.add("192.168.112.30:27119")

{

"ok" : 1,

"$gleStats" : {

"lastOpTime" : {

"ts" : Timestamp(1714702333, 2),

"t" : NumberLong(1)

},

"electionId" : ObjectId("7fffffff0000000000000001")

},

"lastCommittedOpTime" : Timestamp(1714702333, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1714702335, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714702333, 2)

}

rs.add("192.168.112.30:27219")

{

"ok" : 1,

"$gleStats" : {

"lastOpTime" : {

"ts" : Timestamp(1714702365, 2),

"t" : NumberLong(1)

},

"electionId" : ObjectId("7fffffff0000000000000001")

},

"lastCommittedOpTime" : Timestamp(1714702367, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1714702367, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1714702365, 2)

}

- View the replica set

rs.conf()

{

"_id" : "myconfigr",

"version" : 3,

"term" : 1,

"configsvr" : true,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.112.30:27019",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.112.30:27119",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.112.30:27219",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("6634477c7677719ed2388701")

}

}

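5. Creating and Operating the Router Nodes

Run these steps on the router host.

5.1 Create and connect to the first router node

5.1.1 Prepare the log directory

mkdir -p /usr/local/mongodb/sharded_cluster/mymongos_27017/log

5.1.2 Create the configuration file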

vim /usr/local/mongodb/sharded_cluster/mymongos_27017/mongos.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/mymongos_27017/log/mongod.log"
  logAppend: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/mymongos_27017/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.40
  port: 27017
sharding:
  configDB: myconfigr/192.168.112.30:27019,192.168.112.30:27119,192.168.112.30:27219
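The `configDB` value has the form `<replica set name>/<host:port>,<host:port>,...`, and the name before the slash has to match the `replSetName` in the config servers' mongod.conf (`myconfigr` in the files above, which is also what `rs.conf()` reports). The same string shape is used when adding a shard with `sh.addShard()`. A small sketch of assembling it, with `buildSeedString` as a hypothetical helper name:

```javascript
// Build "<setName>/<host1>,<host2>,..." as used by sharding.configDB
// and by sh.addShard().
function buildSeedString(setName, hosts) {
  if (!setName || hosts.length === 0) {
    throw new Error("a replica set name and at least one host are required");
  }
  return `${setName}/${hosts.join(",")}`;
}

const configDbValue = buildSeedString("myconfigr", [
  "192.168.112.30:27019",
  "192.168.112.30:27119",
  "192.168.112.30:27219",
]);

console.log("configDB: " + configDbValue);
```

Deriving the string from the same set name that goes into `replSetName` avoids the two values drifting apart.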

5.1.3 Start mongos

/usr/local/mongodb/bin/mongos -f /usr/local/mongodb/sharded_cluster/mymongos_27017/mongos.conf

5.1.4 Log in to mongos from a client

mongo --port 27017

mongos> use test1

switched to db test1

mongos> db.q1.insert({a:"a1"})

WriteCommandError({

"ok" : 0,

"errmsg" : "unable to initialize targeter for write op for collection test1.q1 :: caused by :: Database test1 could not be created :: caused by :: No shards found",

"code" : 70,

"codeName" : "ShardNotFound",

"operationTime" : Timestamp(1714704496, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1714704496, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

})

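Data cannot be written at this point.

The router node is so far connected only to the config nodes.

No shard data nodes have been added yet, so application data cannot be written.

5.2 Add the shard replica sets

5.2.1 Add the first shard replica set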

sh.addShard("myshardrs01/192.168.112.10:27018,192.168.112.10:27118,192.168.112.10:27218")

{

"shardAdded" : "myshardrs01",

"ok" : 1,

"operationTime" : Timestamp(1714705107, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1714705107, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

5.2.2 Check the sharding status

sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6634477c7677719ed2388706")

}

shards:

{ "_id" : "myshardrs01", "host" : "myshardrs01/192.168.112.10:27018,192.168.112.10:27118", "state" : 1 }

most recently active mongoses:

"4.4.6" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

5.2.3 Add the second shard replica set

sh.addShard("myshardrs02/192.168.112.20:27318,192.168.112.20:27418,192.168.112.20:27518")

{

"shardAdded" : "myshardrs02",

"ok" : 1,

"operationTime" : Timestamp(1714705261, 2),

"$clusterTime" : {

"clusterTime" : Timestamp(1714705261, 2),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

5.2.4 Check the sharding status

sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6634477c7677719ed2388706")

}

shards:

{ "_id" : "myshardrs01", "host" : "myshardrs01/192.168.112.10:27018,192.168.112.10:27118", "state" : 1 }

{ "_id" : "myshardrs02", "host" : "myshardrs02/192.168.112.20:27318,192.168.112.20:27418", "state" : 1 }

most recently active mongoses:

"4.4.6" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

34 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

myshardrs01 990

myshardrs02 34

too many chunks to print, use verbose if you want to force print

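5.3 Remove a shard

The last remaining shard cannot be removed.

Removing a shard automatically migrates its data to the other shards, which takes some time.

Once the migration has finished, run the remove command again to actually remove the shard.

# Example: remove the shard myshardrs02

use admin

db.runCommand({removeShard: "myshardrs02"})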
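The "run it again until it is done" step can be wrapped in a small polling loop. The `removeShard` response carries a `state` field that moves from `started` through `ongoing` to `completed`. The sketch below takes the command and the pause as injected functions so that it runs outside the shell; `waitForShardRemoval` and `makeFakeRemoveShard` are hypothetical names, and the fake stands in for a real cluster.

```javascript
// Call removeShard repeatedly until it reports state "completed".
// Returns the number of calls that were needed.
function waitForShardRemoval(runRemoveShard, pause, maxAttempts = 120) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = runRemoveShard();
    if (result.state === "completed") {
      return attempt;
    }
    pause(); // wait before asking again
  }
  throw new Error("shard removal did not complete after " + maxAttempts + " attempts");
}

// Stand-in for the real command so the sketch runs anywhere:
// it replays the given states, repeating the last one.
function makeFakeRemoveShard(states) {
  let call = 0;
  return () => ({ ok: 1, state: states[Math.min(call++, states.length - 1)] });
}

const attempts = waitForShardRemoval(
  makeFakeRemoveShard(["started", "ongoing", "completed"]),
  () => {}
);
console.log("removal reported complete after", attempts, "calls");

// In the legacy mongo shell this might be used as:
//   waitForShardRemoval(
//     () => db.adminCommand({ removeShard: "myshardrs02" }),
//     () => sleep(5000)
//   );
```

If the shard being removed is the primary shard for a database, that database also has to be moved to another shard with `movePrimary` before the removal can complete.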

5.4 Enable sharding

- sh.enableSharding("database")

- sh.shardCollection("database.collection",{"key":1})

mongos> sh.enableSharding("test")

{

"ok" : 1,

"operationTime" : Timestamp(1714706298, 6),

"$clusterTime" : {

"clusterTime" : Timestamp(1714706298, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

5.5 Sharding strategy: hashed sharding

Run these steps on the router host.

Shard the comment collection using a hashed shard key on nickname:

sh.shardCollection("test.comment",{"nickname":"hashed"})

{

"collectionsharded" : "test.comment",

"collectionUUID" : UUID("61ea1ec6-500b-4d00-86ed-3b2cce81a021"),

"ok" : 1,

"operationTime" : Timestamp(1714706573, 25),

"$clusterTime" : {

"clusterTime" : Timestamp(1714706573, 25),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

- Check the sharding status

sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6634477c7677719ed2388706")

}

shards:

{ "_id" : "myshardrs01", "host" : "myshardrs01/192.168.112.10:27018,192.168.112.10:27118", "state" : 1 }

{ "_id" : "myshardrs02", "host" : "myshardrs02/192.168.112.20:27318,192.168.112.20:27418", "state" : 1 }

most recently active mongoses:

"4.4.6" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

512 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

myshardrs01 512

myshardrs02 512

too many chunks to print, use verbose if you want to force print

{ "_id" : "test", "primary" : "myshardrs02", "partitioned" : true, "version" : { "uuid" : UUID("7f240c27-83dd-48eb-be8c-2e1336ec9190"), "lastMod" : 1 } }

test.comment

shard key: { "nickname" : "hashed" }

unique: false

balancing: true

chunks:

myshardrs01 2

myshardrs02 2

{ "nickname" : { "$minKey" : 1 } } -->> { "nickname" : NumberLong("-4611686018427387902") } on : myshardrs01 Timestamp(1, 0)

{ "nickname" : NumberLong("-4611686018427387902") } -->> { "nickname" : NumberLong(0) } on : myshardrs01 Timestamp(1, 1)

{ "nickname" : NumberLong(0) } -->> { "nickname" : NumberLong("4611686018427387902") } on : myshardrs02 Timestamp(1, 2)

{ "nickname" : NumberLong("4611686018427387902") } -->> { "nickname" : { "$maxKey" : 1 } } on : myshardrs02 Timestamp(1, 3)

5.5.1 Insert test data

Hashed sharding

Insert 1,000 documents into the comment collection in a loop as a test:

mongos> use test

switched to db test

mongos> for(var i=1;i<=1000;i++){db.comment.insert({_id:i+"",nickname:"Test"+i})}

WriteResult({ "nInserted" : 1 })

mongos> db.comment.count()

1000

5.5.2 Log in to the primary of each shard and count the documents

- myshardrs01:

mongo --host 192.168.112.10 --port 27018
myshardrs01:PRIMARY> show dbs
admin 0.000GB
config 0.001GB
local 0.001GB
test 0.000GB
myshardrs01:PRIMARY> use test
switched to db test
myshardrs01:PRIMARY> db.comment.count()
505

myshardrs01:PRIMARY> db.comment.find()
{ "_id" : "2", "nickname" : "Test2" }
{ "_id" : "4", "nickname" : "Test4" }
{ "_id" : "8", "nickname" : "Test8" }
{ "_id" : "9", "nickname" : "Test9" }
{ "_id" : "13", "nickname" : "Test13" }
{ "_id" : "15", "nickname" : "Test15" }
{ "_id" : "16", "nickname" : "Test16" }
{ "_id" : "18", "nickname" : "Test18" }
{ "_id" : "19", "nickname" : "Test19" }
{ "_id" : "20", "nickname" : "Test20" }
{ "_id" : "21", "nickname" : "Test21" }
{ "_id" : "25", "nickname" : "Test25" }
{ "_id" : "26", "nickname" : "Test26" }
{ "_id" : "27", "nickname" : "Test27" }
{ "_id" : "31", "nickname" : "Test31" }
{ "_id" : "32", "nickname" : "Test32" }
{ "_id" : "33", "nickname" : "Test33" }
{ "_id" : "35", "nickname" : "Test35" }
{ "_id" : "36", "nickname" : "Test36" }
{ "_id" : "38", "nickname" : "Test38" }
Type "it" for more

The documents are distributed according to their hashed values.

- myshardrs02:

mongo --port 27318
myshardrs02:PRIMARY> show dbs
admin 0.000GB
config 0.001GB
local 0.001GB
test 0.000GB
myshardrs02:PRIMARY> use test
switched to db test
myshardrs02:PRIMARY> db.comment.count()
495

myshardrs02:PRIMARY> db.comment.find()
{ "_id" : "1", "nickname" : "Test1" }
{ "_id" : "3", "nickname" : "Test3" }
{ "_id" : "5", "nickname" : "Test5" }
{ "_id" : "6", "nickname" : "Test6" }
{ "_id" : "7", "nickname" : "Test7" }
{ "_id" : "10", "nickname" : "Test10" }
{ "_id" : "11", "nickname" : "Test11" }
{ "_id" : "12", "nickname" : "Test12" }
{ "_id" : "14", "nickname" : "Test14" }
{ "_id" : "17", "nickname" : "Test17" }
{ "_id" : "22", "nickname" : "Test22" }
{ "_id" : "23", "nickname" : "Test23" }
{ "_id" : "24", "nickname" : "Test24" }
{ "_id" : "28", "nickname" : "Test28" }
{ "_id" : "29", "nickname" : "Test29" }
{ "_id" : "30", "nickname" : "Test30" }
{ "_id" : "34", "nickname" : "Test34" }
{ "_id" : "37", "nickname" : "Test37" }
{ "_id" : "39", "nickname" : "Test39" }
{ "_id" : "44", "nickname" : "Test44" }
Type "it" for more

The documents are distributed according to their hashed values.

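5.6 Sharding strategy: ranged sharding

Run these steps on the router host.

Use the author's age field as the shard key, so that documents are sharded by the value of age: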

mongos> sh.shardCollection("test.author",{"age":1})

{

"collectionsharded" : "test.author",

"collectionUUID" : UUID("9b8055c8-7a35-49d5-9e8f-07d6004b19b3"),

"ok" : 1,

"operationTime" : Timestamp(1714709641, 12),

"$clusterTime" : {

"clusterTime" : Timestamp(1714709641, 12),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

5.6.1 Insert test data

Ranged sharding

Insert 2,000 test documents into the author collection in a loop:

mongos> use test

switched to db test

mongos> for (var i=1;i<=2000;i++){db.author.save({"name":"test"+i,"age":NumberInt(i%120)})}

WriteResult({ "nInserted" : 1 })

mongos> db.author.count()

2000

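5.6.2 Log in to the primary of each shard and count the documents

- myshardrs02

myshardrs02:PRIMARY> show dbs
admin 0.000GB
config 0.001GB
local 0.001GB
test 0.000GB
myshardrs02:PRIMARY> use test
switched to db test
myshardrs02:PRIMARY> show collections
author
comment
myshardrs02:PRIMARY> db.author.count()
2000

- myshardrs01

myshardrs01:PRIMARY> db.author.count()
0

All of the data has ended up on a single shard replica set.

If the data has not been spread across shards, possible reasons are:

- The system is busy and the data is still being distributed.

- The chunk has not filled up. The default chunk size is 64 MB, and data is moved to other shards only after a chunk fills up and is split. The chunk size can be lowered for testing, but do not change it in production.

use config

# Set the chunk size to 1 MB

db.settings.save({_id:"chunksize",value:1})

# Restore the default of 64 MB

db.settings.save({_id:"chunksize",value:64})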
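A rough back-of-the-envelope check shows why the 2,000 test documents stay in one chunk. The sketch assumes an average document size of about 60 bytes, which is a guess for documents of this shape rather than a measured value, and it ignores how the server actually decides when to split; the function names are hypothetical.

```javascript
const BYTES_PER_MB = 1024 * 1024;

// Approximate size of a collection from a document count and an average size.
function estimateDataSizeMB(documentCount, avgDocumentBytes) {
  return (documentCount * avgDocumentBytes) / BYTES_PER_MB;
}

// Whether the estimated data volume is larger than one chunk.
function exceedsChunkSize(documentCount, avgDocumentBytes, chunkSizeMB) {
  return estimateDataSizeMB(documentCount, avgDocumentBytes) > chunkSizeMB;
}

const ASSUMED_DOC_BYTES = 60; // assumption, not measured

console.log(estimateDataSizeMB(2000, ASSUMED_DOC_BYTES).toFixed(3), "MB");
console.log(exceedsChunkSize(2000, ASSUMED_DOC_BYTES, 64)); // false
console.log(exceedsChunkSize(2000, ASSUMED_DOC_BYTES, 1)); // false
```

Under this assumption the test data is roughly a tenth of a megabyte, so even a 1 MB chunk size would probably not trigger a split; considerably more test data would be needed to see chunks spread across both shards.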

6. Add Another Router Node

Run these steps on the router host.

6.1 Prepare the log directory

mkdir -p /usr/local/mongodb/sharded_cluster/mymongos_27117/log

6.2 Create the configuration file

vim /usr/local/mongodb/sharded_cluster/mymongos_27117/mongos.conf

systemLog:
  destination: file
  path: "/usr/local/mongodb/sharded_cluster/mymongos_27117/log/mongod.log"
  logAppend: true
processManagement:
  fork: true
  pidFilePath: "/usr/local/mongodb/sharded_cluster/mymongos_27117/log/mongod.pid"
net:
  bindIp: localhost,192.168.112.40
  port: 27117
sharding:
  configDB: myconfigr/192.168.112.30:27019,192.168.112.30:27119,192.168.112.30:27219

6.3 Start mongos

/usr/local/mongodb/bin/mongos -f /usr/local/mongodb/sharded_cluster/mymongos_27117/mongos.conf

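6.4 Log in to mongos from a client

Log in to port 27117 with the mongo client.

The second router needs no further configuration, because the sharding configuration is stored on the config servers.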

mongo --port 27117

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("6634477c7677719ed2388706")

}

shards:

{ "_id" : "myshardrs01", "host" : "myshardrs01/192.168.112.10:27018,192.168.112.10:27118", "state" : 1 }

{ "_id" : "myshardrs02", "host" : "myshardrs02/192.168.112.20:27318,192.168.112.20:27418", "state" : 1 }

active mongoses:

"4.4.6" : 2

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

512 : Success

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

myshardrs01 512

myshardrs02 512

too many chunks to print, use verbose if you want to force print

{ "_id" : "test", "primary" : "myshardrs02", "partitioned" : true, "version" : { "uuid" : UUID("7f240c27-83dd-48eb-be8c-2e1336ec9190"), "lastMod" : 1 } }

test.author

shard key: { "age" : 1 }

unique: false

balancing: true

chunks:

myshardrs02 1

{ "age" : { "$minKey" : 1 } } -->> { "age" : { "$maxKey" : 1 } } on : myshardrs02 Timestamp(1, 0)

test.comment

shard key: { "nickname" : "hashed" }

unique: false

balancing: true

chunks:

myshardrs01 2

myshardrs02 2

{ "nickname" : { "$minKey" : 1 } } -->> { "nickname" : NumberLong("-4611686018427387902") } on : myshardrs01 Timestamp(1, 0)

{ "nickname" : NumberLong("-4611686018427387902") } -->> { "nickname" : NumberLong(0) } on : myshardrs01 Timestamp(1, 1)

{ "nickname" : NumberLong(0) } -->> { "nickname" : NumberLong("4611686018427387902") } on : myshardrs02 Timestamp(1, 2)

{ "nickname" : NumberLong("4611686018427387902") } -->> { "nickname" : { "$maxKey" : 1 } } on : myshardrs02 Timestamp(1, 3)

6.5 View the data from the earlier tests

mongos> show dbs

admin 0.000GB

config 0.003GB

test 0.000GB

mongos> use test

switched to db test

mongos> db.comment.count()

1000

mongos> db.author.count()

2000

This completes the sharded cluster: two shard replica sets (3 + 3), one config server replica set (3), and two router nodes (2).