
Preface

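In the previous, theory-focused article we covered several formulas for converting YUV to RGB, along with some optimizations. This time we look at the reverse direction, RGB to YUV. We also put it into practice with OpenGL ES: a shader converts an RGB image to YUV, and the result is saved to local storage.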

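Some readers may ask: YUV to RGB is needed for rendering and display, so where is RGB to YUV used? For video encoding, MediaCodec together with an input Surface does the job, and no RGB to YUV conversion seems to be involved.

That is true for hardware encoding, but what about software encoding? The usual rule is to prefer hardware encoding and fall back to software encoding. When hardware encoding is unavailable, we may need a library such as x264 for software encoding. Since x264 typically consumes YUV frames, this is where RGB to YUV conversion comes in handy.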

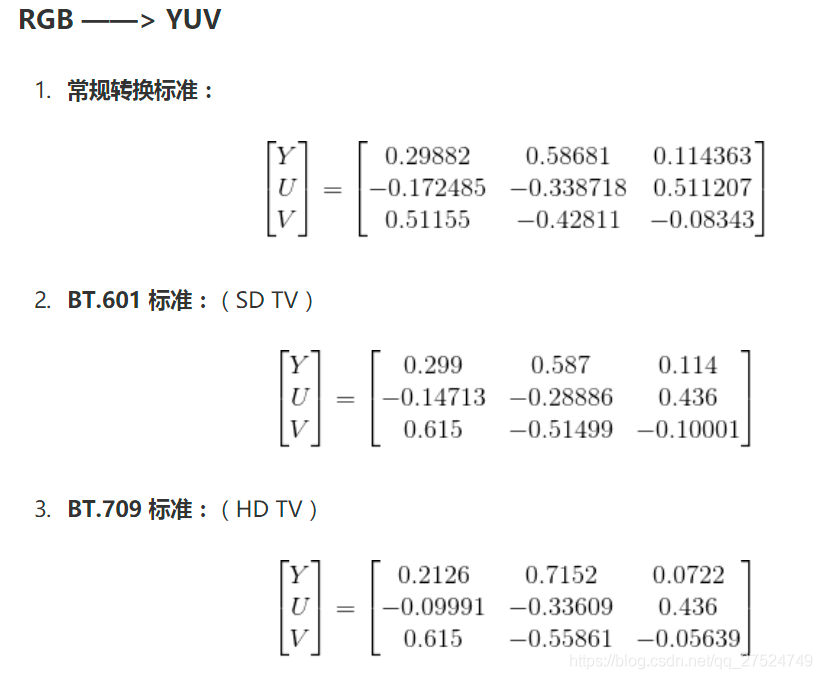

RGB to YUV conversion formulas

The earlier article OpenGL ES: Rendering YUV Data introduced several YUV standards. Here are the corresponding RGB to YUV conversion formulas:

RGB to BT.601 YUV (8-bit R, G, B in [0, 255]; limited-range output: Y in [16, 235], Cb/Cr in [16, 240])

Y = 0.257R + 0.504G + 0.098B + 16

Cb = -0.148R - 0.291G + 0.439B + 128

Cr = 0.439R - 0.368G - 0.071B + 128

RGB to BT.709 YUV (same input and output ranges)

Y = 0.183R + 0.614G + 0.062B + 16

Cb = -0.101R - 0.339G + 0.439B + 128

Cr = 0.439R - 0.399G - 0.040B + 128
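The two formula sets above can be sketched in plain C++ for a single 8-bit pixel. The struct and function names are illustrative and do not come from the project. Results are rounded to the nearest integer and clamped to [0, 255]:

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

struct YCbCr { uint8_t y, cb, cr; };

// Coefficient rows for Y, Cb and Cr, copied from the formulas above
struct YuvCoefs { double y[3], cb[3], cr[3]; };

constexpr YuvCoefs BT601 = {{0.257, 0.504, 0.098}, {-0.148, -0.291, 0.439}, {0.439, -0.368, -0.071}};
constexpr YuvCoefs BT709 = {{0.183, 0.614, 0.062}, {-0.101, -0.339, 0.439}, {0.439, -0.399, -0.040}};

// Round to the nearest integer and clamp into [0, 255]
static uint8_t toByte(double v) {
    return static_cast<uint8_t>(std::clamp<long>(std::lround(v), 0, 255));
}

// Limited-range conversion: Y gets +16, Cb and Cr get +128
YCbCr rgbToYCbCr(uint8_t r, uint8_t g, uint8_t b, const YuvCoefs &c) {
    auto dot = [&](const double k[3]) { return k[0] * r + k[1] * g + k[2] * b; };
    return {toByte(dot(c.y) + 16), toByte(dot(c.cb) + 128), toByte(dot(c.cr) + 128)};
}
```

With this rounding, pure white maps to (235, 128, 128) under both standards, and pure red maps to (82, 90, 240) under BT.601.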

Alternatively, the conversion can be written more conveniently as a matrix operation.
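Here is the BT.601 case in matrix form, using the same coefficients as above. BT.709 has the same shape with its own 3×3 matrix:

$$
\begin{bmatrix} Y \\ C_b \\ C_r \end{bmatrix}
=
\begin{bmatrix}
 0.257 &  0.504 &  0.098 \\
-0.148 & -0.291 &  0.439 \\
 0.439 & -0.368 & -0.071
\end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
+
\begin{bmatrix} 16 \\ 128 \\ 128 \end{bmatrix}
$$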

Converting RGB to YUV

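The overall process has three steps:

1. Convert the RGB data to YUV with the formulas.
2. Pack the YUV bytes into RGBA texels, so that the result can be read back.
3. Read the YUV data with glReadPixels.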

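OpenGL ES constrains both ends of this process:

- On the input side, OpenGL ES 2.0 only knows a handful of unsized texture formats (GL_ALPHA, GL_LUMINANCE, GL_LUMINANCE_ALPHA, GL_RGB, GL_RGBA), and none of them is YUV.
- On the output side, the only glReadPixels format/type combination every implementation must support is GL_RGBA with GL_UNSIGNED_BYTE.

So even though the data we produce is YUV, we still store it in, and read it back from, the texture as RGBA.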

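Take NV21 as an example. One frame occupies width × height × 3 / 2 bytes. Stored as RGBA, an image of the same dimensions would occupy width × height × 4 bytes, because RGBA has 4 channels. The two sizes clearly do not match.

So how should the OpenGL buffer be sized so that the RGBA output matches the YUV output? Make the output width / 4 texels wide and height × 3 / 2 rows tall.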

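Why does this work? Our goal is to produce YUV, but both input and output are still handled as RGBA (the GLenum format is GL_RGBA), so each output texel carries 4 bytes. The sizes then agree: width × height × 3 / 2 = (width / 4) × (height × 3 / 2) × 4.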
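Here is a small sketch of that bookkeeping. It assumes, as the layout implicitly does, that width is a multiple of 4 and height is even. `PackedTarget` and `packedTargetFor` are hypothetical names:

```cpp
#include <cstddef>
#include <stdexcept>

// Size of the RGBA render target that holds one NV21 frame of width x height pixels
struct PackedTarget {
    int texWidth;       // RGBA texels per row = width / 4
    int texHeight;      // rows = height (Y) + height / 2 (VU)
    std::size_t bytes;  // texWidth * texHeight * 4
};

PackedTarget packedTargetFor(int width, int height) {
    // A row of `width` bytes needs width / 4 texels of 4 bytes each;
    // NV21 chroma is subsampled 2x vertically, so height must be even.
    if (width % 4 != 0 || height % 2 != 0) {
        throw std::invalid_argument("width must be a multiple of 4 and height must be even");
    }
    PackedTarget t{width / 4, height * 3 / 2, 0};
    t.bytes = static_cast<std::size_t>(t.texWidth) * t.texHeight * 4;
    return t;
}
```

For a 640 × 480 image this gives a 160 × 720 RGBA target: 460,800 bytes, exactly one NV21 frame.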

The YUV data is laid out in memory as follows:

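```
      width / 4 RGBA texels (= width bytes)
|--------------------------------|
|                                |
|               Y                |  height
|                                |
|--------------------------------|
|  V U V U V U ... (interleaved) |  height / 2
|--------------------------------|
```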

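After normalizing the coordinates, the layout becomes the following. It is drawn in memory order: glReadPixels returns the bottom row of the framebuffer first, and that row has texture coordinate y = 0, so texture y = 0 appears at the top of the picture.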

```
(0,0)           width / 4          (1,0)
  |--------------------------------|
  |                                |
  |               Y                |  height
  |                                |
  |--------------------------------| (1, 2/3)
  |  V U V U V U ... (interleaved) |  height / 2
  |--------------------------------|
(0,1)                              (1,1)
```

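This layout tells us what the shader has to do in each range:

- For texture coordinate y < 2/3, it samples the whole input texture once to produce the Y data.
- For y > 2/3, it samples the whole input texture a second time to produce the UV data.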
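To make the two ranges concrete, here is a sketch that reproduces the shader's vertical remapping for output row j. It is plain C++ with a hypothetical helper, and rows are counted in the order glReadPixels returns them:

```cpp
// Which plane output row j belongs to, and which source coordinate the shader samples.
// Hypothetical helper mirroring the fragment shader's two branches.
struct RowMapping {
    bool isY;         // true: Y region (v_texCoord.y <= 2/3); false: VU region
    double srcV;      // normalized source t coordinate that gets sampled
    int firstSrcRow;  // first source row covered (Y: row j; VU: row 2 * (j - height))
};

RowMapping mapOutputRow(int j, int height) {
    const double outRows = height * 1.5;      // viewport height = height * 3 / 2
    const double v = (j + 0.5) / outRows;     // v_texCoord.y at the fragment centre
    const double divide = 2.0 / 3.0;          // UV_DIVIDE_LINE in the shader
    if (v <= divide) {
        return {true, v * 3.0 / 2.0, j};                   // Y branch: [0, 2/3] -> [0, 1]
    }
    return {false, (v - divide) * 3.0, 2 * (j - height)};  // VU branch: [2/3, 1] -> [0, 1]
}
```

For the VU rows, the sampled coordinate works out to (2k + 1) / height, which lies exactly between source rows 2k and 2k + 1. With GL_LINEAR filtering, each chroma row is therefore a vertical average of two source rows.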

The viewport must also match the packed output size: glViewport(0, 0, width / 4, height * 3 / 2);

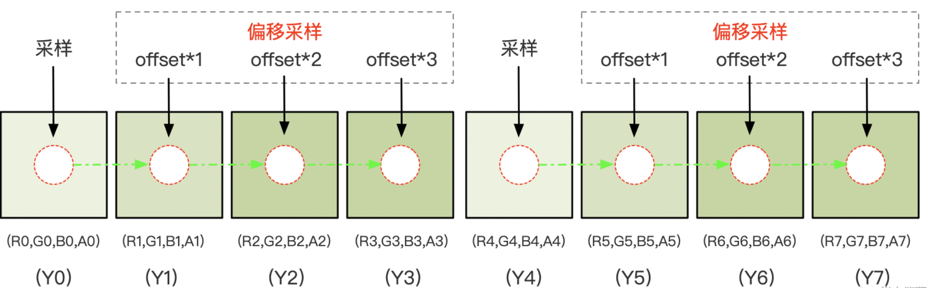

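Because the viewport is only 1/4 of the original width, you can think of each fragment as sampling the original image once every 4 pixels. Y must be computed for every pixel, so each fragment takes three additional samples, each shifted one pixel further.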

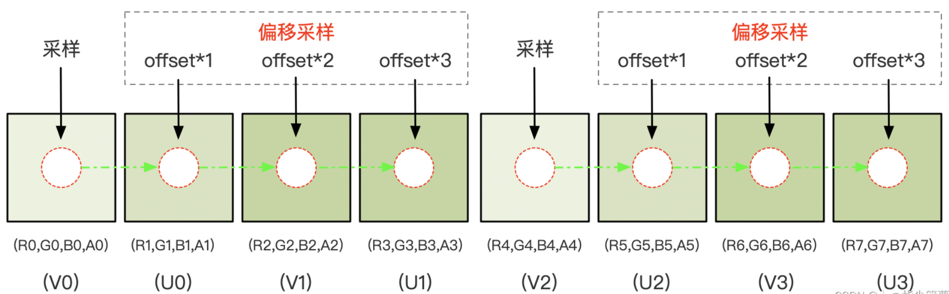

Likewise, generating the UV data also takes three additional offset samples.

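In the shader, the offset uniform holds a normalized value: 1.0 / width, which is one source pixel in texture coordinates.

In the range y < 2/3, each fragment samples 4 RGBA pixels (one sample plus three offset samples). From their R, G, B values it produces one output texel (Y0, Y1, Y2, Y3). Once the whole range has been rendered, a width × height byte buffer has been filled.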
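Where exactly do those four samples land? The fragment centre of output texel i sits at s = (i + 0.5) / (width / 4) = (4i + 2) / width. That is the boundary between source pixels 4i + 1 and 4i + 2, not the centre of pixel 4i.

The sketch below is plain arithmetic with no GL, and `samplePositions` is a hypothetical name. It computes the pixel positions hit by samples taken at base + k × u_Offset. It shows that sampling directly from v_texCoord.x puts the first sample at pixel 4i + 1.5, which GL_LINEAR blends from two neighbours. Shifting the base back by 1.5 × u_Offset lands the samples on pixels 4i through 4i + 3:

```cpp
#include <array>

// Pixel positions sampled by output texel i (integer = pixel centre, 1.5 = halfway
// between pixels 1 and 2). shiftTexels = 0.0 starts at the fragment centre;
// shiftTexels = -1.5 moves the base back by 1.5 source pixels.
std::array<double, 4> samplePositions(int i, int width, double shiftTexels) {
    const double s = (i + 0.5) / (width / 4.0);  // interpolated v_texCoord.x at the fragment centre
    const double offset = 1.0 / width;           // u_Offset: one source pixel
    std::array<double, 4> px{};
    for (int k = 0; k < 4; ++k) {
        const double sk = s + (shiftTexels + k) * offset;  // normalized coordinate of sample k
        px[k] = sk * width - 0.5;                          // texel n is centred at (n + 0.5) / width
    }
    return px;
}
```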

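In the range y > 2/3, each fragment again samples 4 RGBA pixels (one sample plus three offset samples) and produces one texel (V0, U0, V1, U1). The VU region is only height / 2 rows tall, so the VU plane takes one row for every two source rows vertically. Once this range has been rendered, a width × height / 2 byte buffer has been filled.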
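To check the GPU result on the CPU, a reference implementation of the same packing can help. This is a sketch and not part of the original project:

- `rgbaToNv21Reference` is a hypothetical name.
- It uses the same coefficients as the shader's COEF_Y / COEF_U / COEF_V.
- It takes chroma from source row 2j only, so on real images expect small differences from a GPU path that filters with GL_LINEAR.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Input: tightly packed RGBA8, width % 4 == 0, height % 2 == 0.
// Output: NV21 = Y plane (width * height bytes) followed by interleaved VU (width * height / 2).
std::vector<uint8_t> rgbaToNv21Reference(const std::vector<uint8_t> &rgba, int width, int height) {
    // Same analog-YUV weights as the fragment shader, U and V offset by 0.5
    auto luma = [](float r, float g, float b) { return 0.299f * r + 0.587f * g + 0.114f * b; };
    auto chromaU = [](float r, float g, float b) { return -0.147f * r - 0.289f * g + 0.436f * b + 0.5f; };
    auto chromaV = [](float r, float g, float b) { return 0.615f * r - 0.515f * g - 0.100f * b + 0.5f; };
    // Like writing a float into an RGBA8 render target: clamp to [0, 1], scale, round
    auto toByte = [](float v) {
        return static_cast<uint8_t>(std::lround(std::clamp(v, 0.0f, 1.0f) * 255.0f));
    };
    // Normalized channel c of pixel (x, y)
    auto px = [&](int x, int y, int c) {
        return rgba[(static_cast<std::size_t>(y) * width + x) * 4 + c] / 255.0f;
    };

    std::vector<uint8_t> out(static_cast<std::size_t>(width) * height * 3 / 2);
    // Y plane: one byte per source pixel
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            out[static_cast<std::size_t>(y) * width + x] = toByte(luma(px(x, y, 0), px(x, y, 1), px(x, y, 2)));

    // VU plane: row j comes from source row 2j; V from even pixels, U from odd pixels,
    // matching the shader's V(4i), U(4i + 1), V(4i + 2), U(4i + 3) order
    uint8_t *vu = out.data() + static_cast<std::size_t>(width) * height;
    for (int j = 0; j < height / 2; ++j) {
        const int sy = 2 * j;
        for (int x = 0; x < width; x += 2) {
            const std::size_t o = static_cast<std::size_t>(j) * width + x;
            vu[o] = toByte(chromaV(px(x, sy, 0), px(x, sy, 1), px(x, sy, 2)));
            vu[o + 1] = toByte(chromaU(px(x + 1, sy, 0), px(x + 1, sy, 1), px(x + 1, sy, 2)));
        }
    }
    return out;
}
```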

Main code

RGBtoYUVOpengl.cpp

```cpp
#include "../utils/Log.h"
#include "RGBtoYUVOpengl.h"

// Vertex shader: passes the texture coordinate through
static const char *ver = "#version 300 es\n"
                         "in vec4 aPosition;\n"
                         "in vec2 aTexCoord;\n"
                         "out vec2 v_texCoord;\n"
                         "void main() {\n"
                         "    v_texCoord = aTexCoord;\n"
                         "    gl_Position = aPosition;\n"
                         "}";

// Fragment shader: output rows with v_texCoord.y <= 2/3 produce the Y plane, the rows above
// produce the interleaved VU plane of NV21. Each output RGBA texel packs 4 bytes.
// Note: COEF_* are the analog BT.601 YUV weights (full range, U/V offset by 0.5), not the
// limited-range YCbCr formulas listed earlier. V spans about +/-0.615, so V + 0.5 clips
// at 0 or 1 for strongly saturated colours.
static const char *fragment = "#version 300 es\n"
        // highp: mediump texture coordinates may be too coarse to address single pixels of wide images
        "precision highp float;\n"
        "in vec2 v_texCoord;\n"
        "layout(location = 0) out vec4 outColor;\n"
        "uniform sampler2D s_TextureMap;\n"
        "uniform float u_Offset;\n" // one source pixel in normalized units: 1.0 / width
        "const vec3 COEF_Y = vec3(0.299, 0.587, 0.114);\n"
        "const vec3 COEF_U = vec3(-0.147, -0.289, 0.436);\n"
        "const vec3 COEF_V = vec3(0.615, -0.515, -0.100);\n"
        "const float UV_DIVIDE_LINE = 2.0 / 3.0;\n"
        "void main() {\n"
        "    vec2 texelOffset = vec2(u_Offset, 0.0);\n"
        // The centre of output texel i lies between source pixels 4i+1 and 4i+2;
        // step back 1.5 pixels so the 4 samples hit the centres of pixels 4i to 4i+3
        "    float x = v_texCoord.x - 1.5 * u_Offset;\n"
        "    if (v_texCoord.y <= UV_DIVIDE_LINE) {\n"
        // Y region: map y from [0, 2/3] back to [0, 1]
        "        vec2 texCoord = vec2(x, v_texCoord.y * 3.0 / 2.0);\n"
        "        vec4 color0 = texture(s_TextureMap, texCoord);\n"
        "        vec4 color1 = texture(s_TextureMap, texCoord + texelOffset);\n"
        "        vec4 color2 = texture(s_TextureMap, texCoord + texelOffset * 2.0);\n"
        "        vec4 color3 = texture(s_TextureMap, texCoord + texelOffset * 3.0);\n"
        "        float y0 = dot(color0.rgb, COEF_Y);\n"
        "        float y1 = dot(color1.rgb, COEF_Y);\n"
        "        float y2 = dot(color2.rgb, COEF_Y);\n"
        "        float y3 = dot(color3.rgb, COEF_Y);\n"
        "        outColor = vec4(y0, y1, y2, y3);\n"
        "    } else {\n"
        // VU region: map y from [2/3, 1] back to [0, 1]
        "        vec2 texCoord = vec2(x, (v_texCoord.y - UV_DIVIDE_LINE) * 3.0);\n"
        "        vec4 color0 = texture(s_TextureMap, texCoord);\n"
        "        vec4 color1 = texture(s_TextureMap, texCoord + texelOffset);\n"
        "        vec4 color2 = texture(s_TextureMap, texCoord + texelOffset * 2.0);\n"
        "        vec4 color3 = texture(s_TextureMap, texCoord + texelOffset * 3.0);\n"
        "        float v0 = dot(color0.rgb, COEF_V) + 0.5;\n"
        "        float u0 = dot(color1.rgb, COEF_U) + 0.5;\n"
        "        float v1 = dot(color2.rgb, COEF_V) + 0.5;\n"
        "        float u1 = dot(color3.rgb, COEF_U) + 0.5;\n"
        // NV21 byte order: V, U, V, U
        "        outColor = vec4(v0, u0, v1, u1);\n"
        "    }\n"
        "}";

// Full-screen rectangle drawn as a triangle strip:
// vertices 1-2-3 form the first triangle, vertices 2-3-4 the second
static const GLfloat VERTICES[] = {
        1.0f, -1.0f,  // bottom right
        1.0f,  1.0f,  // top right
       -1.0f, -1.0f,  // bottom left
       -1.0f,  1.0f   // top left
};

// Texture coordinates for rendering into the FBO (GL convention: origin at bottom left).
// Keep them in the same order as VERTICES.
static const GLfloat TEXTURE_COORD[] = {
        1.0f, 0.0f,  // bottom right
        1.0f, 1.0f,  // top right
        0.0f, 0.0f,  // bottom left
        0.0f, 1.0f   // top left
};

RGBtoYUVOpengl::RGBtoYUVOpengl() {
    initGlProgram(ver, fragment);
    positionHandle = glGetAttribLocation(program, "aPosition");
    textureHandle = glGetAttribLocation(program, "aTexCoord");
    textureSampler = glGetUniformLocation(program, "s_TextureMap");
    u_Offset = glGetUniformLocation(program, "u_Offset");
    LOGD("program:%d", program);
    LOGD("positionHandle:%d", positionHandle);
    LOGD("textureHandle:%d", textureHandle);
    LOGD("textureSampler:%d", textureSampler);
    LOGD("u_Offset:%d", u_Offset);
}

RGBtoYUVOpengl::~RGBtoYUVOpengl() noexcept {
}

void RGBtoYUVOpengl::fboPrepare() {
    // Colour attachment that receives the packed YUV output
    glGenTextures(1, &fboTextureId);
    glBindTexture(GL_TEXTURE_2D, fboTextureId);
    // Wrap and filter modes for the bound texture
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    // Storage: (width / 4) x (height * 3 / 2) RGBA texels = width * height * 3 / 2 bytes,
    // exactly the size of one NV21 frame
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, imageWidth / 4, imageHeight * 3 / 2, 0,
                 GL_RGBA, GL_UNSIGNED_BYTE, nullptr);
    glBindTexture(GL_TEXTURE_2D, GL_NONE);

    glGenFramebuffers(1, &fboId);
    glBindFramebuffer(GL_FRAMEBUFFER, fboId);
    glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, fboTextureId, 0);
    // Check the FBO status
    if (glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE) {
        LOGE("RGBtoYUVOpengl::fboPrepare glCheckFramebufferStatus status != GL_FRAMEBUFFER_COMPLETE");
    }
    glBindFramebuffer(GL_FRAMEBUFFER, GL_NONE);
}

// Render logic
void RGBtoYUVOpengl::onDraw() {
    // Draw into the FBO
    glBindFramebuffer(GL_FRAMEBUFFER, fboId);
    // Viewport matches the FBO texture: (width / 4) x (height * 3 / 2)
    glViewport(0, 0, imageWidth / 4, imageHeight * 3 / 2);
    glClearColor(0.0f, 1.0f, 0.0f, 1.0f);
    glClear(GL_COLOR_BUFFER_BIT);
    glUseProgram(program);

    // Bind the RGB input to texture unit 2; the sampler uniform must name the same unit.
    // glUniform* affects the program in use, so it is set after glUseProgram.
    glActiveTexture(GL_TEXTURE2);
    glBindTexture(GL_TEXTURE_2D, textureId);
    glUniform1i(textureSampler, 2);

    // One source pixel in normalized texture coordinates
    float texelOffset = 1.0f / (float) imageWidth;
    glUniform1f(u_Offset, texelOffset);

    // glVertexAttribPointer: size = 2 components per vertex; normalized = GL_FALSE
    // (the data is already in range); stride = 0 because the vertices are tightly packed
    glEnableVertexAttribArray(positionHandle);
    glVertexAttribPointer(positionHandle, 2, GL_FLOAT, GL_FALSE, 0, VERTICES);
    glEnableVertexAttribArray(textureHandle);
    glVertexAttribPointer(textureHandle, 2, GL_FLOAT, GL_FALSE, 0, TEXTURE_COORD);

    // 4 vertices -> two triangles -> one rectangle
    glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

    glUseProgram(0);
    glDisableVertexAttribArray(positionHandle);
    glDisableVertexAttribArray(textureHandle);
    if (nullptr != eglHelper) {
        eglHelper->swapBuffers();
    }
    glBindTexture(GL_TEXTURE_2D, 0);
    // Unbind the FBO
    glBindFramebuffer(GL_FRAMEBUFFER, 0);
}

// Upload the RGB(A) source image. Assumes width % 4 == 0 and height % 2 == 0.
void RGBtoYUVOpengl::setPixel(void *data, int width, int height, int length) {
    LOGD("texture setPixel");
    imageWidth = width;
    imageHeight = height;
    fboPrepare();

    glGenTextures(1, &textureId);
    // Only the texture unit is selected here; the sampler uniform is set in onDraw,
    // after glUseProgram, because glUniform* applies to the program currently in use
    glActiveTexture(GL_TEXTURE2);
    glBindTexture(GL_TEXTURE_2D, textureId);
    // Clamp so that offset samples near the right edge never wrap to the left edge
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
    // Unpack alignment applies to uploads, so set it before glTexImage2D
    glPixelStorei(GL_UNPACK_ALIGNMENT, 1);
    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, data);
    // No glGenerateMipmap: MIN_FILTER is GL_LINEAR, so mip levels would never be sampled
    glBindTexture(GL_TEXTURE_2D, 0);
}

// Read the packed YUV (NV21) data back from the FBO.
// *data is allocated with new[]; the caller must release it with delete[].
void RGBtoYUVOpengl::readYUV(uint8_t **data, int *width, int *height) {
    *width = imageWidth;
    *height = imageHeight;
    glBindFramebuffer(GL_FRAMEBUFFER, fboId);
    // width * height * 3 / 2 bytes == (width / 4) * (height * 3 / 2) texels * 4 bytes
    *data = new uint8_t[imageWidth * imageHeight * 3 / 2];
    // Rows come back bottom-up, so the Y region (texture y <= 2/3) is first in memory
    glReadPixels(0, 0, imageWidth / 4, imageHeight * 3 / 2, GL_RGBA, GL_UNSIGNED_BYTE, *data);
    // Unbind the FBO
    glBindFramebuffer(GL_FRAMEBUFFER, 0);
}
```

Below is the main logic of the Activity:

```java
import android.graphics.Bitmap;
import android.graphics.BitmapFactory;
import android.os.Bundle;
import android.util.Log;
import android.widget.Button;
import android.widget.ImageView;
import android.widget.Toast;

import androidx.annotation.Nullable;
import androidx.appcompat.app.AppCompatActivity;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;

public class RGBToYUVActivity extends AppCompatActivity {

    protected MyGLSurfaceView myGLSurfaceView;

    @Override
    protected void onCreate(@Nullable Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_rgb_to_yuv);
        myGLSurfaceView = findViewById(R.id.my_gl_surface_view);
        myGLSurfaceView.setOpenGlListener(new MyGLSurfaceView.OnOpenGlListener() {
            @Override
            public BaseOpengl onOpenglCreate() {
                return new RGBtoYUVOpengl();
            }

            @Override
            public Bitmap requestBitmap() {
                BitmapFactory.Options options = new BitmapFactory.Options();
                // Decode at the original pixel size (no density scaling)
                options.inScaled = false;
                return BitmapFactory.decodeResource(getResources(), R.mipmap.ic_smile, options);
            }

            @Override
            public void readPixelResult(byte[] bytes) {
                // Not used in this example
            }

            // Callback with the data read by RGBtoYUVOpengl::readYUV (runs on the render thread)
            @Override
            public void readYUVResult(byte[] bytes) {
                if (null == bytes) {
                    return;
                }
                File fileParent = getFilesDir();
                if (!fileParent.exists()) {
                    fileParent.mkdirs();
                }
                File file = new File(fileParent, System.currentTimeMillis() + ".yuv");
                // try-with-resources closes the stream even if write() throws
                try (FileOutputStream fos = new FileOutputStream(file)) {
                    fos.write(bytes, 0, bytes.length);
                    fos.flush();
                    runOnUiThread(() -> Toast.makeText(RGBToYUVActivity.this,
                            "YUV image saved: " + file.getAbsolutePath(), Toast.LENGTH_LONG).show());
                } catch (IOException e) {
                    Log.v("fly_learn_opengl", "Failed to save image: " + e.getMessage());
                    runOnUiThread(() -> Toast.makeText(RGBToYUVActivity.this,
                            "Failed to save YUV image", Toast.LENGTH_LONG).show());
                }
            }
        });

        Button button = findViewById(R.id.bt_rgb_to_yuv);
        button.setOnClickListener(view -> myGLSurfaceView.readYuvData());

        ImageView iv_rgb = findViewById(R.id.iv_rgb);
        iv_rgb.setImageResource(R.mipmap.ic_smile);
    }
}
```

Here is the custom SurfaceView:

```java
import android.content.Context;
import android.graphics.Bitmap;
import android.os.Handler;
import android.os.HandlerThread;
import android.os.Message;
import android.util.AttributeSet;
import android.util.Size;
import android.view.Surface;
import android.view.SurfaceHolder;
import android.view.SurfaceView;

import androidx.annotation.NonNull;

public class MyGLSurfaceView extends SurfaceView implements SurfaceHolder.Callback {

    private final static int MSG_CREATE_GL = 101;
    private final static int MSG_CHANGE_GL = 102;
    private final static int MSG_DRAW_GL = 103;
    private final static int MSG_DESTROY_GL = 104;
    private final static int MSG_READ_PIXEL_GL = 105;
    private final static int MSG_UPDATE_BITMAP_GL = 106;
    private final static int MSG_UPDATE_YUV_GL = 107;
    private final static int MSG_READ_YUV_GL = 108;

    public BaseOpengl baseOpengl;
    private OnOpenGlListener onOpenGlListener;
    private HandlerThread handlerThread;
    private Handler renderHandler;
    public int surfaceWidth;
    public int surfaceHeight;

    public MyGLSurfaceView(Context context) {
        this(context, null);
    }

    public MyGLSurfaceView(Context context, AttributeSet attrs) {
        super(context, attrs);
        getHolder().addCallback(this);
        // All EGL/GL work runs on this single render thread
        handlerThread = new HandlerThread("RenderHandlerThread");
        handlerThread.start();
        renderHandler = new Handler(handlerThread.getLooper()) {
            @Override
            public void handleMessage(@NonNull Message msg) {
                switch (msg.what) {
                    case MSG_CREATE_GL:
                        baseOpengl = onOpenGlListener.onOpenglCreate();
                        Surface surface = (Surface) msg.obj;
                        if (null != baseOpengl) {
                            baseOpengl.surfaceCreated(surface);
                            Bitmap bitmap = onOpenGlListener.requestBitmap();
                            if (null != bitmap) {
                                baseOpengl.setBitmap(bitmap);
                            }
                        }
                        break;
                    case MSG_CHANGE_GL:
                        if (null != baseOpengl) {
                            Size size = (Size) msg.obj;
                            baseOpengl.surfaceChanged(size.getWidth(), size.getHeight());
                        }
                        break;
                    case MSG_DRAW_GL:
                        if (null != baseOpengl) {
                            baseOpengl.onGlDraw();
                        }
                        break;
                    case MSG_READ_PIXEL_GL:
                        if (null != baseOpengl) {
                            byte[] bytes = baseOpengl.readPixel();
                            if (null != bytes && null != onOpenGlListener) {
                                onOpenGlListener.readPixelResult(bytes);
                            }
                        }
                        break;
                    case MSG_READ_YUV_GL:
                        if (null != baseOpengl) {
                            byte[] bytes = baseOpengl.readYUVResult();
                            if (null != bytes && null != onOpenGlListener) {
                                onOpenGlListener.readYUVResult(bytes);
                            }
                        }
                        break;
                    case MSG_UPDATE_BITMAP_GL:
                        if (null != baseOpengl) {
                            Bitmap bitmap = onOpenGlListener.requestBitmap();
                            if (null != bitmap) {
                                baseOpengl.setBitmap(bitmap);
                                baseOpengl.onGlDraw();
                            }
                        }
                        break;
                    case MSG_UPDATE_YUV_GL:
                        if (null != baseOpengl) {
                            YUVBean yuvBean = (YUVBean) msg.obj;
                            if (null != yuvBean) {
                                baseOpengl.setYuvData(yuvBean.getyData(), yuvBean.getUvData(),
                                        yuvBean.getWidth(), yuvBean.getHeight());
                                baseOpengl.onGlDraw();
                            }
                        }
                        break;
                    case MSG_DESTROY_GL:
                        if (null != baseOpengl) {
                            baseOpengl.surfaceDestroyed();
                        }
                        break;
                }
            }
        };
    }

    public void setOpenGlListener(OnOpenGlListener listener) {
        this.onOpenGlListener = listener;
    }

    @Override
    public void surfaceCreated(@NonNull SurfaceHolder surfaceHolder) {
        Message message = Message.obtain();
        message.what = MSG_CREATE_GL;
        message.obj = surfaceHolder.getSurface();
        renderHandler.sendMessage(message);
    }

    @Override
    public void surfaceChanged(@NonNull SurfaceHolder surfaceHolder, int i, int w, int h) {
        Message message = Message.obtain();
        message.what = MSG_CHANGE_GL;
        message.obj = new Size(w, h);
        renderHandler.sendMessage(message);
        Message message1 = Message.obtain();
        message1.what = MSG_DRAW_GL;
        renderHandler.sendMessage(message1);
        surfaceWidth = w;
        surfaceHeight = h;
    }

    @Override
    public void surfaceDestroyed(@NonNull SurfaceHolder surfaceHolder) {
        Message message = Message.obtain();
        message.what = MSG_DESTROY_GL;
        renderHandler.sendMessage(message);
    }

    public void readGlPixel() {
        Message message = Message.obtain();
        message.what = MSG_READ_PIXEL_GL;
        renderHandler.sendMessage(message);
    }

    public void readYuvData() {
        Message message = Message.obtain();
        message.what = MSG_READ_YUV_GL;
        renderHandler.sendMessage(message);
    }

    public void updateBitmap() {
        Message message = Message.obtain();
        message.what = MSG_UPDATE_BITMAP_GL;
        renderHandler.sendMessage(message);
    }

    public void setYuvData(byte[] yData, byte[] uvData, int width, int height) {
        Message message = Message.obtain();
        message.what = MSG_UPDATE_YUV_GL;
        message.obj = new YUVBean(yData, uvData, width, height);
        renderHandler.sendMessage(message);
    }

    public void release() {
        // Release on the render thread, after any MSG_DESTROY_GL that is already queued.
        // Calling baseOpengl.release() directly from the main thread could run before
        // surfaceDestroyed has been handled and leak resources.
        // Note: the HandlerThread itself is not quit here.
        renderHandler.post(() -> {
            if (null != baseOpengl) {
                baseOpengl.release();
            }
        });
    }

    public void requestRender() {
        Message message = Message.obtain();
        message.what = MSG_DRAW_GL;
        renderHandler.sendMessage(message);
    }

    public interface OnOpenGlListener {
        BaseOpengl onOpenglCreate();

        Bitmap requestBitmap();

        void readPixelResult(byte[] bytes);

        void readYUVResult(byte[] bytes);
    }
}
```

The Java code of BaseOpengl:

```java
import android.graphics.Bitmap;
import android.util.Log;
import android.view.Surface;

public class BaseOpengl {

    public static final int YUV_DATA_TYPE_NV12 = 0;
    public static final int YUV_DATA_TYPE_NV21 = 1;

    // Triangle
    public static final int DRAW_TYPE_TRIANGLE = 0;
    // Quad
    public static final int DRAW_TYPE_RECT = 1;
    // Texture mapping
    public static final int DRAW_TYPE_TEXTURE_MAP = 2;
    // Matrix transform
    public static final int DRAW_TYPE_MATRIX_TRANSFORM = 3;
    // VBO/VAO
    public static final int DRAW_TYPE_VBO_VAO = 4;
    // EBO
    public static final int DRAW_TYPE_EBO_IBO = 5;
    // FBO
    public static final int DRAW_TYPE_FBO = 6;
    // PBO
    public static final int DRAW_TYPE_PBO = 7;
    // YUV NV12 and NV21 rendering
    public static final int DRAW_YUV_RENDER = 8;
    // Convert an RGB image to NV21
    public static final int DRAW_RGB_TO_YUV = 9;

    public long glNativePtr;
    protected EGLHelper eglHelper;
    protected int drawType;

    public BaseOpengl(int drawType) {
        this.drawType = drawType;
        this.eglHelper = new EGLHelper();
    }

    public void surfaceCreated(Surface surface) {
        Log.v("fly_learn_opengl", "------------surfaceCreated:" + surface);
        eglHelper.surfaceCreated(surface);
    }

    public void surfaceChanged(int width, int height) {
        Log.v("fly_learn_opengl", "------------surfaceChanged:" + Thread.currentThread());
        eglHelper.surfaceChanged(width, height);
    }

    public void surfaceDestroyed() {
        Log.v("fly_learn_opengl", "------------surfaceDestroyed:" + Thread.currentThread());
        eglHelper.surfaceDestroyed();
    }

    public void release() {
        if (glNativePtr != 0) {
            n_free(glNativePtr, drawType);
            glNativePtr = 0;
        }
    }

    public void onGlDraw() {
        Log.v("fly_learn_opengl", "------------onDraw:" + Thread.currentThread());
        if (glNativePtr == 0) {
            glNativePtr = n_gl_nativeInit(eglHelper.nativePtr, drawType);
        }
        if (glNativePtr != 0) {
            n_onGlDraw(glNativePtr, drawType);
        }
    }

    public void setBitmap(Bitmap bitmap) {
        if (glNativePtr == 0) {
            glNativePtr = n_gl_nativeInit(eglHelper.nativePtr, drawType);
        }
        if (glNativePtr != 0) {
            n_setBitmap(glNativePtr, bitmap);
        }
    }

    public void setYuvData(byte[] yData, byte[] uvData, int width, int height) {
        if (glNativePtr != 0) {
            n_setYuvData(glNativePtr, yData, uvData, width, height, drawType);
        }
    }

    public void setMvpMatrix(float[] mvp) {
        if (glNativePtr == 0) {
            glNativePtr = n_gl_nativeInit(eglHelper.nativePtr, drawType);
        }
        if (glNativePtr != 0) {
            n_setMvpMatrix(glNativePtr, mvp);
        }
    }

    public byte[] readPixel() {
        if (glNativePtr != 0) {
            return n_readPixel(glNativePtr, drawType);
        }
        return null;
    }

    public byte[] readYUVResult() {
        if (glNativePtr != 0) {
            return n_readYUV(glNativePtr, drawType);
        }
        return null;
    }

    // Drawing
    private native void n_onGlDraw(long ptr, int drawType);

    private native void n_setMvpMatrix(long ptr, float[] mvp);

    private native void n_setBitmap(long ptr, Bitmap bitmap);

    protected native long n_gl_nativeInit(long eglPtr, int drawType);

    private native void n_free(long ptr, int drawType);

    private native byte[] n_readPixel(long ptr, int drawType);

    private native byte[] n_readYUV(long ptr, int drawType);

    private native void n_setYuvData(long ptr, byte[] yData, byte[] uvData, int width, int height, int drawType);
}
```

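After the converted YUV data has been read back and saved, pull the file to a computer and open it in YUVViewer to check whether the conversion really worked. Set the viewer to NV21 with the original image width and height, because the raw .yuv file carries no header.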
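To spot-check individual values rather than only eyeballing the image, the NV21 indexing can be written down directly. The helper below is hypothetical and assumes the buffer holds exactly one frame of width × height:

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

struct YuvSample { uint8_t y, u, v; };

// Y, U, V of pixel (x, y) in an NV21 buffer: Y plane first, then interleaved V/U pairs,
// one pair shared by each 2x2 block of pixels (V comes first in NV21)
YuvSample nv21At(const std::vector<uint8_t> &nv21, int width, int height, int x, int y) {
    const std::size_t ySize = static_cast<std::size_t>(width) * height;
    if (nv21.size() != ySize * 3 / 2) {
        throw std::invalid_argument("buffer is not one NV21 frame of width x height");
    }
    const std::size_t vuIndex = ySize + static_cast<std::size_t>(y / 2) * width + (x / 2) * 2;
    return {nv21[static_cast<std::size_t>(y) * width + x], nv21[vuIndex + 1], nv21[vuIndex]};
}
```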

References

https://juejin.cn/post/7025223104569802789

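Series

OpenGL ES: Setting Up the EGL Environment

OpenGL ES: Shaders

OpenGL ES: Drawing a Triangle

OpenGL ES: Drawing a Quad

OpenGL ES: Texture Mapping

OpenGL ES: VBO and VAO

OpenGL ES: EBO

OpenGL ES: FBO

OpenGL ES: PBO

OpenGL ES: Rendering YUV Data

Some Theory on YUV to RGB Conversion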

Follow me and let's improve together. There is more to life than coding!