
The previous two articles implemented video playback. This one explains how to decode audio with FFmpeg and play it back with NAudio.

Demo

The demo is a GIF, so you can't hear the audio. It does show that playback, pause, stop, and seeking all work.

Before starting, add the NAudio library to the project:

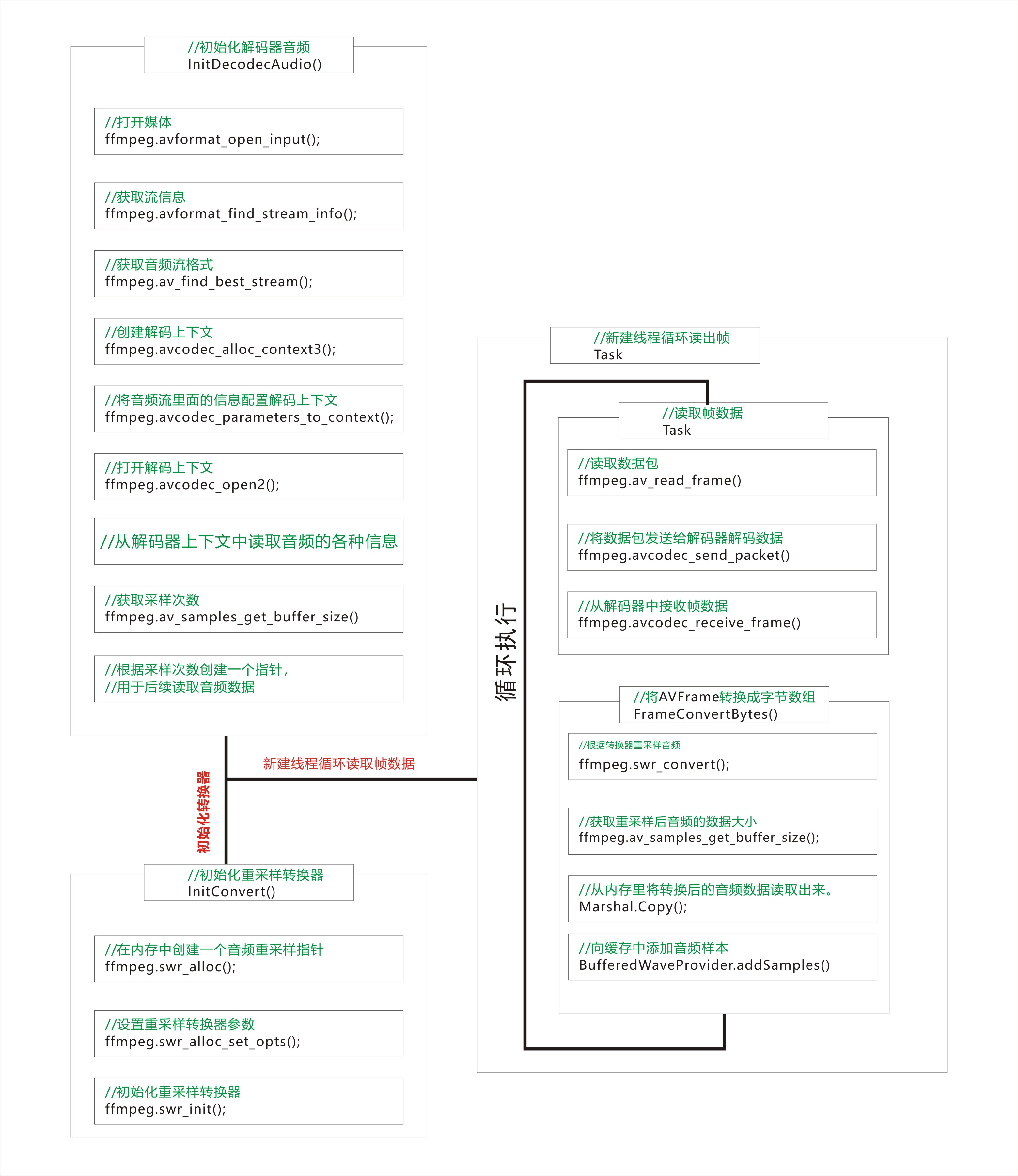

I. Audio decoding and playback flow

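As the flowchart shows, decoding audio works almost the same way as decoding video. Only two steps differ: resampling, and converting the frame data into a byte array.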

1. Initialize audio decoding

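```csharp
public void InitDecodecAudio(string path)
{
    int error = 0;
    // Allocate a media format context
    format = ffmpeg.avformat_alloc_context();
    var tempFormat = format;
    // Open the media file
    error = ffmpeg.avformat_open_input(&tempFormat, path, null, null);
    if (error < 0)
    {
        Debug.WriteLine("Failed to open the media file");
        return;
    }
    // Probe the stream information
    ffmpeg.avformat_find_stream_info(format, null);
    AVCodec* codec;
    // Find the index of the best audio stream (and its decoder)
    audioStreamIndex = ffmpeg.av_find_best_stream(format, AVMediaType.AVMEDIA_TYPE_AUDIO, -1, -1, &codec, 0);
    if (audioStreamIndex < 0)
    {
        Debug.WriteLine("No audio stream found");
        return;
    }
    // Get the audio stream
    audioStream = format->streams[audioStreamIndex];
    // Allocate the decoder context
    codecContext = ffmpeg.avcodec_alloc_context3(codec);
    // Copy the stream's codec parameters into the decoder context
    error = ffmpeg.avcodec_parameters_to_context(codecContext, audioStream->codecpar);
    if (error < 0)
    {
        Debug.WriteLine("Failed to set the decoder parameters");
        return;
    }
    // Open the decoder
    error = ffmpeg.avcodec_open2(codecContext, codec, null);
    if (error < 0)
    {
        Debug.WriteLine("Failed to open the decoder");
        return;
    }
    // Media duration (format->duration is in AV_TIME_BASE units, i.e. microseconds)
    Duration = TimeSpan.FromMilliseconds(format->duration / 1000);
    // Codec ID
    CodecId = codec->id.ToString();
    // Decoder name
    CodecName = ffmpeg.avcodec_get_name(codec->id);
    // Bit rate
    Bitrate = codecContext->bit_rate;
    // Number of audio channels
    Channels = codecContext->channels;
    // Channel layout
    ChannelLyaout = codecContext->channel_layout;
    // Sample rate
    SampleRate = codecContext->sample_rate;
    // Sample format
    SampleFormat = codecContext->sample_fmt;
    // Despite its name, BitsPerSample holds the buffer size in bytes for one frame
    // (frame_size samples per channel) of packed 16-bit output. The output keeps the
    // input channel layout, so the size uses Channels rather than a fixed 2.
    // Assumes a codec with a fixed frame size (frame_size > 0), e.g. AAC or MP3.
    BitsPerSample = ffmpeg.av_samples_get_buffer_size(null, Channels, codecContext->frame_size, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
    // Unmanaged buffer that receives the resampled data
    audioBuffer = Marshal.AllocHGlobal((int)BitsPerSample);
    bufferPtr = (byte*)audioBuffer;
    // Initialize the resampler: same layout and rate, sample format converted to S16
    InitConvert((int)ChannelLyaout, AVSampleFormat.AV_SAMPLE_FMT_S16, (int)SampleRate, (int)ChannelLyaout, SampleFormat, (int)SampleRate);
    // Allocate a packet and a frame
    packet = ffmpeg.av_packet_alloc();
    frame = ffmpeg.av_frame_alloc();
    State = MediaState.Read;
}
```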

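The initialization code is essentially the same as for video decoding. The differences are that the stream type requested from `av_find_best_stream` changes from video to audio, the media information collected is audio information (channels, channel layout, sample rate, sample format) instead of video information, and the code computes the byte size of one converted frame (stored in `BitsPerSample`) and allocates an unmanaged buffer that later receives the resampled audio data.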
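As a quick sanity check on the size of `audioBuffer`: for packed (interleaved) samples with `align = 1`, `av_samples_get_buffer_size` should come down to samples per channel × channels × bytes per sample. The sketch below restates that arithmetic in plain C# so it can be checked without FFmpeg. `PackedBufferSize` is a hypothetical helper, not part of FFmpeg.AutoGen, and it deliberately ignores the padding that other `align` values would add.

```csharp
// Hypothetical helper: bytes needed for one packed (interleaved) PCM buffer
// when no alignment padding is added (the align = 1 case used above).
static int PackedBufferSize(int channels, int samplesPerChannel, int bytesPerSample)
{
    if (channels <= 0 || samplesPerChannel <= 0 || bytesPerSample <= 0)
        throw new System.ArgumentException("channels, samples and sample size must all be positive");
    // Interleaved data lives in a single plane: L R L R ... for stereo.
    // checked(...) turns an accidental int overflow into an exception.
    return checked(samplesPerChannel * channels * bytesPerSample);
}
```

With the typical 1024-sample AAC frame, stereo S16 output needs `PackedBufferSize(2, 1024, 2)` = 4096 bytes; a 1152-sample MP3 frame needs 4608 bytes.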

2. Initialize the audio resampler

```csharp
/// <summary>
/// Initialize the resampler (audio converter)
/// </summary>
/// <param name="occ">Output channel layout</param>
/// <param name="osf">Output sample format</param>
/// <param name="osr">Output sample rate</param>
/// <param name="icc">Input channel layout</param>
/// <param name="isf">Input sample format</param>
/// <param name="isr">Input sample rate</param>
/// <returns>true if the resampler was created and initialized</returns>
bool InitConvert(int occ, AVSampleFormat osf, int osr, int icc, AVSampleFormat isf, int isr)
{
    // Allocate a resampler
    convert = ffmpeg.swr_alloc();
    // Set the resampler options
    convert = ffmpeg.swr_alloc_set_opts(convert, occ, osf, osr, icc, isf, isr, 0, null);
    if (convert == null)
        return false;
    // Initialize the resampler; a negative return value means the options were rejected
    if (ffmpeg.swr_init(convert) < 0)
        return false;
    return true;
}
```

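This creates an audio converter from the given parameters: a `SwrContext` from libswresample, which is a different structure from the `SwsContext` (libswscale) used to convert video frames. Because `InitDecodecAudio` passes the same channel layout and sample rate for input and output, the resampler here effectively only converts the sample format to packed 16-bit integers.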
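Since layout and rate stay the same here, the resampler's visible job is reshaping the samples. Many FFmpeg decoders (the native AAC decoder, for example) produce planar float (`AV_SAMPLE_FMT_FLTP`, one array per channel), while NAudio is fed interleaved 16-bit PCM. The sketch below shows that reshaping in plain C# for illustration only. It is not how libswresample is implemented, `PlanarFloatToS16` is a hypothetical name, and the 32767 scale factor is one common choice whose rounding may differ slightly from libswresample's.

```csharp
// Illustration only (hypothetical helper): reshape planar float samples
// (planes[channel][sample], the AV_SAMPLE_FMT_FLTP layout) into interleaved
// little-endian 16-bit PCM (the AV_SAMPLE_FMT_S16 layout). Sample rate and
// channel layout are left unchanged, as in the article's InitConvert call.
static byte[] PlanarFloatToS16(float[][] planes)
{
    int channels = planes.Length;
    int samples = channels == 0 ? 0 : planes[0].Length;
    foreach (var plane in planes)
        if (plane.Length != samples)
            throw new System.ArgumentException("all planes must hold the same number of samples");

    var output = new byte[samples * channels * 2];
    int pos = 0;
    for (int i = 0; i < samples; i++)           // one point in time...
    {
        for (int ch = 0; ch < channels; ch++)   // ...then every channel: L0 R0 L1 R1 ...
        {
            // Clamp to [-1, 1], then scale to the 16-bit range (32767 is one common factor)
            float clamped = System.Math.Max(-1f, System.Math.Min(1f, planes[ch][i]));
            short value = (short)System.Math.Round(clamped * 32767f);
            output[pos++] = (byte)(value & 0xFF);        // low byte first (little-endian)
            output[pos++] = (byte)((value >> 8) & 0xFF); // then the high byte
        }
    }
    return output;
}
```

For two channels of two samples each, `PlanarFloatToS16(new[] { new[] { 0f, 0.25f }, new[] { -1f, 1f } })` returns 8 bytes in the interleaved order L0, R0, L1, R1.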

3. Read the data out of an audio frame

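```csharp
public byte[] FrameConvertBytes(AVFrame* sourceFrame)
{
    var tempBufferPtr = bufferPtr;
    // Resample the audio. out_count is taken from the frame being converted;
    // it must not exceed the capacity of audioBuffer (sized for frame_size samples).
    var outputSamplesPerChannel = ffmpeg.swr_convert(convert, &tempBufferPtr, sourceFrame->nb_samples, sourceFrame->extended_data, sourceFrame->nb_samples);
    // Check for an error before using the returned sample count
    if (outputSamplesPerChannel < 0) return null;
    // Size of the resampled (packed S16) data
    var outPutBufferLength = ffmpeg.av_samples_get_buffer_size(null, Channels, outputSamplesPerChannel, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
    if (outPutBufferLength <= 0) return null;
    byte[] bytes = new byte[outPutBufferLength];
    // Copy the converted audio out of unmanaged memory
    Marshal.Copy(audioBuffer, bytes, 0, bytes.Length);
    return bytes;
}
```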

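Resampling with `ffmpeg.swr_convert()` can change the size of the audio data (for example, a 32-bit float sample becomes a 16-bit integer sample). So `ffmpeg.av_samples_get_buffer_size()` is called again with the number of samples per channel that `swr_convert` actually returned, which gives the size of the converted data. That many bytes are then copied out of the buffer through the pointer.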
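In this article the input and output sample rates are equal, so `swr_convert` should return as many samples per channel as it was given. If you change the output rate (for example to match a fixed device rate), the output count changes too, and the output buffer has to be sized for the worst case. FFmpeg's resampling example bounds it with `av_rescale_rnd(swr_get_delay(...) + in_samples, out_rate, in_rate, AV_ROUND_UP)`. The sketch below restates that ceiling division in plain C#, with `delay` standing in for the value `swr_get_delay` would report. Both helpers are hypothetical.

```csharp
// Hypothetical helper mirroring the bound used in FFmpeg's resampling example:
// ceil((delay + inSamples) * outRate / inRate), all counts per channel.
// 'delay' stands in for what swr_get_delay(ctx, inRate) would report.
static long MaxOutputSamples(long delay, long inSamples, int inRate, int outRate)
{
    if (inRate <= 0 || outRate <= 0)
        throw new System.ArgumentException("sample rates must be positive");
    long scaled = checked((delay + inSamples) * outRate);
    return (scaled + inRate - 1) / inRate; // integer ceiling division
}

// Bytes needed for that many packed 16-bit samples on every channel.
static long MaxOutputBytes(long delay, long inSamples, int inRate, int outRate, int channels)
    => MaxOutputSamples(delay, inSamples, inRate, outRate) * channels * 2;
```

For example, `MaxOutputSamples(0, 1024, 44100, 48000)` gives 1115 samples per channel, so a stereo S16 buffer for that case needs 4460 bytes instead of 4096.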

4. Declare the audio playback components

```csharp
// NAudio playback components
private WaveOut waveOut;
private BufferedWaveProvider bufferedWaveProvider;
```
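`BufferedWaveProvider` stores raw PCM bytes, so when choosing or reading its `BufferLength` it helps to convert byte counts into playback time: 16-bit PCM plays sample rate × channels × 2 bytes per second. The helpers below are a hypothetical plain-C# sketch with no NAudio dependency; `WouldOverflow` restates the overflow test used in the playback loop of step 5.

```csharp
// Hypothetical helpers (no NAudio dependency) for reasoning about a PCM byte buffer.

// Bytes of 16-bit PCM consumed per second of playback.
static int BytesPerSecond(int sampleRate, int channels) => checked(sampleRate * channels * 2);

// Playback time represented by a number of buffered bytes (truncated to whole ticks).
static System.TimeSpan BufferedDuration(long bytes, int sampleRate, int channels)
    => System.TimeSpan.FromTicks(bytes * System.TimeSpan.TicksPerSecond / BytesPerSecond(sampleRate, channels));

// The overflow test from step 5: true when the buffered bytes plus the new chunk
// reach the buffer length, in which case the article clears the whole buffer first.
static bool WouldOverflow(int bufferLength, int bufferedBytes, int incomingBytes)
    => bufferLength <= bufferedBytes + incomingBytes;
```

At 44.1 kHz stereo that is 176,400 bytes per second, so a 4096-byte frame holds roughly 23 ms of audio. Clearing a full buffer discards everything queued in it at once, which may be audible as a skip.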

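5. On a worker task, loop continuously: read the next audio frame, convert it into a byte array, and add it to `bufferedWaveProvider` as audio samples. If the buffered data plus the new chunk would reach the buffer's capacity (`BufferLength`), the whole buffer is cleared first.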

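```csharp
// Needs an unsafe context (for &frame) and using System.Threading / System.Threading.Tasks
PlayTask = new Task(() =>
{
    while (true)
    {
        // Playing
        if (audio.IsPlaying)
        {
            // Get the next audio frame
            if (audio.TryReadNextFrame(out var frame))
            {
                var bytes = audio.FrameConvertBytes(&frame);
                if (bytes == null) continue;
                // If the new chunk would not fit, drop everything that is buffered
                if (bufferedWaveProvider.BufferLength <= bufferedWaveProvider.BufferedBytes + bytes.Length)
                {
                    bufferedWaveProvider.ClearBuffer();
                }
                bufferedWaveProvider.AddSamples(bytes, 0, bytes.Length); // add the audio samples to the buffer
            }
        }
        else
        {
            // Paused or stopped: sleep briefly instead of spinning at 100% CPU
            Thread.Sleep(10);
        }
    }
});
PlayTask.Start();
```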
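While playing, the loop above does not sleep between frames; the pacing comes from `TryReadNextFrame` in the full class below. It only decodes another frame once the playback clock (`Position`) has moved on by at least `frameDuration`, and it computes `frameDuration` as `nb_samples / sample_rate` seconds. The sketch below isolates those two rules in plain C# so they can be checked without FFmpeg; the function names are hypothetical.

```csharp
// Hypothetical helpers isolating the pacing rules of TryReadNextFrame.

// Playback time of one decoded frame: nb_samples / sample_rate seconds,
// converted to TimeSpan ticks and rounded the same way as the article's code.
static System.TimeSpan FrameDuration(int samplesPerChannel, int sampleRate)
{
    if (sampleRate <= 0)
        throw new System.ArgumentException("sample rate must be positive");
    double ticks = System.TimeSpan.TicksPerSecond * (double)samplesPerChannel / sampleRate;
    return System.TimeSpan.FromTicks((long)System.Math.Round(ticks));
}

// Decode another frame only when nothing has been played yet (lastTime is zero)
// or the playback clock has advanced by at least one frame duration.
static bool ShouldReadNextFrame(System.TimeSpan position, System.TimeSpan lastTime, System.TimeSpan frameDuration)
    => lastTime == System.TimeSpan.Zero || position - lastTime >= frameDuration;
```

A 1024-sample frame at 44.1 kHz lasts 232,200 ticks (about 23.2 ms). With the clock 20 ms past the last frame, `ShouldReadNextFrame` still returns false; at 30 ms it returns true.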

II. The complete audio reading and decoding flow: the DecodecAudio class

```csharp
public unsafe class DecodecAudio : IMedia
{
    // Media format (container) context
    AVFormatContext* format;
    // Decoder context
    AVCodecContext* codecContext;
    AVStream* audioStream;
    // Media data packet
    AVPacket* packet;
    AVFrame* frame;
    SwrContext* convert;
    int audioStreamIndex;
    bool isNextFrame = true;
    // Time at which the previous frame was played
    TimeSpan lastTime;
    TimeSpan OffsetClock;
    object SyncLock = new object();
    Stopwatch clock = new Stopwatch();
    public event IMedia.MediaHandler MediaCompleted;
    // Whether playback is in progress
    public bool IsPlaying { get; protected set; }
    /// <summary>
    /// Media state
    /// </summary>
    public MediaState State { get; protected set; }
    /// <summary>
    /// Playback duration of the current frame
    /// </summary>
    public TimeSpan frameDuration { get; protected set; }
    /// <summary>
    /// Media duration
    /// </summary>
    public TimeSpan Duration { get; protected set; }
    /// <summary>
    /// Playback position
    /// </summary>
    public TimeSpan Position { get => OffsetClock + clock.Elapsed; }
    /// <summary>
    /// Decoder name
    /// </summary>
    public string CodecName { get; protected set; }
    /// <summary>
    /// Codec ID
    /// </summary>
    public string CodecId { get; protected set; }
    /// <summary>
    /// Bit rate
    /// </summary>
    public long Bitrate { get; protected set; }
    // Number of channels
    public int Channels { get; protected set; }
    // Sample rate
    public long SampleRate { get; protected set; }
    // Despite its name: buffer size in bytes of one converted frame (see InitDecodecAudio)
    public long BitsPerSample { get; protected set; }
    // Channel layout
    public ulong ChannelLyaout { get; protected set; }
    /// <summary>
    /// Sample format
    /// </summary>
    public AVSampleFormat SampleFormat { get; protected set; }

    public void InitDecodecAudio(string path)
    {
        int error = 0;
        // Allocate a media format context
        format = ffmpeg.avformat_alloc_context();
        var tempFormat = format;
        // Open the media file
        error = ffmpeg.avformat_open_input(&tempFormat, path, null, null);
        if (error < 0)
        {
            Debug.WriteLine("Failed to open the media file");
            return;
        }
        // Probe the stream information
        ffmpeg.avformat_find_stream_info(format, null);
        AVCodec* codec;
        // Find the index of the best audio stream (and its decoder)
        audioStreamIndex = ffmpeg.av_find_best_stream(format, AVMediaType.AVMEDIA_TYPE_AUDIO, -1, -1, &codec, 0);
        if (audioStreamIndex < 0)
        {
            Debug.WriteLine("No audio stream found");
            return;
        }
        // Get the audio stream
        audioStream = format->streams[audioStreamIndex];
        // Allocate the decoder context
        codecContext = ffmpeg.avcodec_alloc_context3(codec);
        // Copy the stream's codec parameters into the decoder context
        error = ffmpeg.avcodec_parameters_to_context(codecContext, audioStream->codecpar);
        if (error < 0)
        {
            Debug.WriteLine("Failed to set the decoder parameters");
            return;
        }
        // Open the decoder
        error = ffmpeg.avcodec_open2(codecContext, codec, null);
        if (error < 0)
        {
            Debug.WriteLine("Failed to open the decoder");
            return;
        }
        // Media duration (format->duration is in AV_TIME_BASE units, i.e. microseconds)
        Duration = TimeSpan.FromMilliseconds(format->duration / 1000);
        // Codec ID
        CodecId = codec->id.ToString();
        // Decoder name
        CodecName = ffmpeg.avcodec_get_name(codec->id);
        // Bit rate
        Bitrate = codecContext->bit_rate;
        // Number of audio channels
        Channels = codecContext->channels;
        // Channel layout
        ChannelLyaout = codecContext->channel_layout;
        // Sample rate
        SampleRate = codecContext->sample_rate;
        // Sample format
        SampleFormat = codecContext->sample_fmt;
        // Buffer size in bytes for one frame (frame_size samples per channel) of packed
        // 16-bit output. The output keeps the input layout, so use Channels, not a fixed 2.
        // Assumes a codec with a fixed frame size (frame_size > 0), e.g. AAC or MP3.
        BitsPerSample = ffmpeg.av_samples_get_buffer_size(null, Channels, codecContext->frame_size, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
        // Unmanaged buffer that receives the resampled data
        audioBuffer = Marshal.AllocHGlobal((int)BitsPerSample);
        bufferPtr = (byte*)audioBuffer;
        // Initialize the resampler: same layout and rate, sample format converted to S16
        InitConvert((int)ChannelLyaout, AVSampleFormat.AV_SAMPLE_FMT_S16, (int)SampleRate, (int)ChannelLyaout, SampleFormat, (int)SampleRate);
        // Allocate a packet and a frame
        packet = ffmpeg.av_packet_alloc();
        frame = ffmpeg.av_frame_alloc();
        State = MediaState.Read;
    }

    // Buffer (as an IntPtr)
    IntPtr audioBuffer;
    // The same buffer as a byte pointer
    byte* bufferPtr;

    /// <summary>
    /// Initialize the resampler (audio converter)
    /// </summary>
    /// <param name="occ">Output channel layout</param>
    /// <param name="osf">Output sample format</param>
    /// <param name="osr">Output sample rate</param>
    /// <param name="icc">Input channel layout</param>
    /// <param name="isf">Input sample format</param>
    /// <param name="isr">Input sample rate</param>
    /// <returns>true if the resampler was created and initialized</returns>
    bool InitConvert(int occ, AVSampleFormat osf, int osr, int icc, AVSampleFormat isf, int isr)
    {
        // Allocate a resampler
        convert = ffmpeg.swr_alloc();
        // Set the resampler options
        convert = ffmpeg.swr_alloc_set_opts(convert, occ, osf, osr, icc, isf, isr, 0, null);
        if (convert == null)
            return false;
        // Initialize the resampler; a negative return value means the options were rejected
        if (ffmpeg.swr_init(convert) < 0)
            return false;
        return true;
    }

    /// <summary>
    /// Try to read the next frame
    /// </summary>
    /// <param name="outFrame"></param>
    /// <returns></returns>
    public bool TryReadNextFrame(out AVFrame outFrame)
    {
        if (lastTime == TimeSpan.Zero)
        {
            lastTime = Position;
            isNextFrame = true;
        }
        else
        {
            if (Position - lastTime >= frameDuration)
            {
                lastTime = Position;
                isNextFrame = true;
            }
            else
            {
                outFrame = *frame;
                return false;
            }
        }
        if (isNextFrame)
        {
            lock (SyncLock)
            {
                int result = -1;
                // Release the previous frame's data
                ffmpeg.av_frame_unref(frame);
                while (true)
                {
                    // Release the previous packet
                    ffmpeg.av_packet_unref(packet);
                    // Read the next packet; the return value reports the read status
                    result = ffmpeg.av_read_frame(format, packet);
                    // End of file (or a read error): no more data, stop reading
                    if (result == ffmpeg.AVERROR_EOF || result < 0)
                    {
                        outFrame = *frame;
                        StopPlay();
                        return false;
                    }
                    // Skip packets that do not belong to the audio stream
                    if (packet->stream_index != audioStreamIndex)
                        continue;
                    // Send the packet to the decoder
                    ffmpeg.avcodec_send_packet(codecContext, packet);
                    // Receive a decoded frame from the decoder
                    result = ffmpeg.avcodec_receive_frame(codecContext, frame);
                    if (result < 0)
                        continue;
                    // Playback duration of this frame: nb_samples / sample_rate seconds
                    frameDuration = TimeSpan.FromTicks((long)Math.Round(TimeSpan.TicksPerMillisecond * 1000d * frame->nb_samples / frame->sample_rate, 0));
                    outFrame = *frame;
                    return true;
                }
            }
        }
        else
        {
            outFrame = *frame;
            return false;
        }
    }

    void StopPlay()
    {
        lock (SyncLock)
        {
            if (State == MediaState.None) return;
            IsPlaying = false;
            OffsetClock = TimeSpan.FromSeconds(0);
            clock.Reset();
            clock.Stop();
            // avformat_close_input pairs with avformat_open_input (it also closes the file)
            var tempFormat = format;
            ffmpeg.avformat_close_input(&tempFormat);
            format = null;
            var tempCodecContext = codecContext;
            ffmpeg.avcodec_free_context(&tempCodecContext);
            var tempPacket = packet;
            ffmpeg.av_packet_free(&tempPacket);
            var tempFrame = frame;
            ffmpeg.av_frame_free(&tempFrame);
            var tempConvert = convert;
            ffmpeg.swr_free(&tempConvert);
            Marshal.FreeHGlobal(audioBuffer);
            bufferPtr = null;
            audioStream = null;
            audioStreamIndex = -1;
            // Media duration
            Duration = TimeSpan.FromMilliseconds(0);
            // Codec name
            CodecName = String.Empty;
            CodecId = String.Empty;
            // Bit rate
            Bitrate = 0;
            // Channel count
            Channels = 0;
            ChannelLyaout = 0;
            SampleRate = 0;
            BitsPerSample = 0;
            State = MediaState.None;
            lastTime = TimeSpan.Zero;
            MediaCompleted?.Invoke(Duration);
        }
    }

    /// <summary>
    /// Change the playback position
    /// </summary>
    /// <param name="seekTime">Target position (seconds)</param>
    public void SeekProgress(int seekTime)
    {
        if (format == null || audioStreamIndex < 0) return;
        lock (SyncLock)
        {
            IsPlaying = false; // pause playback
            clock.Stop();
            // Convert seconds to a timestamp in the audio stream's time base
            var timestamp = seekTime / ffmpeg.av_q2d(audioStream->time_base);
            // Seek the audio stream to that timestamp; BACKWARD picks the nearest seek point at or
            // before it. AVSEEK_FLAG_FRAME is not used: it makes FFmpeg treat the value as a frame number.
            ffmpeg.av_seek_frame(format, audioStreamIndex, (long)timestamp, ffmpeg.AVSEEK_FLAG_BACKWARD);
            // Drop data the decoder buffered before the seek
            ffmpeg.avcodec_flush_buffers(codecContext);
            ffmpeg.av_frame_unref(frame); // clear the previous frame's data
            ffmpeg.av_packet_unref(packet); // clear the previous packet
            int error = 0;
            // Keep decoding until a packet's timestamp reaches the target, i.e. the requested position
            while (packet->pts < timestamp)
            {
                do
                {
                    do
                    {
                        ffmpeg.av_packet_unref(packet); // clear the previous packet
                        error = ffmpeg.av_read_frame(format, packet); // read the next packet
                        if (error == ffmpeg.AVERROR_EOF) // reached the end of the media
                            return;
                    } while (packet->stream_index != audioStreamIndex); // skip non-audio packets
                    ffmpeg.avcodec_send_packet(codecContext, packet); // send the packet to the decoder
                    error = ffmpeg.avcodec_receive_frame(codecContext, frame); // receive a decoded frame
                } while (error == ffmpeg.AVERROR(ffmpeg.EAGAIN));
            }
            OffsetClock = TimeSpan.FromSeconds(seekTime); // set the time offset
            clock.Restart(); // restart the clock
            IsPlaying = true; // resume playback
            lastTime = TimeSpan.Zero;
        }
    }

    /// <summary>
    /// Convert an audio frame into a byte array
    /// </summary>
    /// <param name="sourceFrame"></param>
    /// <returns></returns>
    public byte[] FrameConvertBytes(AVFrame* sourceFrame)
    {
        var tempBufferPtr = bufferPtr;
        // Resample the audio (out_count must not exceed the capacity of audioBuffer)
        var outputSamplesPerChannel = ffmpeg.swr_convert(convert, &tempBufferPtr, sourceFrame->nb_samples, sourceFrame->extended_data, sourceFrame->nb_samples);
        // Check for an error before using the returned sample count
        if (outputSamplesPerChannel < 0) return null;
        // Size of the resampled (packed S16) data
        var outPutBufferLength = ffmpeg.av_samples_get_buffer_size(null, Channels, outputSamplesPerChannel, AVSampleFormat.AV_SAMPLE_FMT_S16, 1);
        if (outPutBufferLength <= 0) return null;
        byte[] bytes = new byte[outPutBufferLength];
        // Copy the converted audio out of unmanaged memory
        Marshal.Copy(audioBuffer, bytes, 0, bytes.Length);
        return bytes;
    }

    public void Play()
    {
        if (State == MediaState.Play) return;
        clock.Start();
        IsPlaying = true;
        State = MediaState.Play;
    }

    public void Pause()
    {
        if (State != MediaState.Play) return;
        IsPlaying = false;
        OffsetClock = clock.Elapsed;
        clock.
```