
Building a Free Proxy IP Pool

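Problems to solve:

- Which storage to use for the IPs

  - File storage. Drawback: opening and rewriting the file for every change is cumbersome.

  - MySQL. Drawback: lookups are relatively slow for this use case.

  - MongoDB. Drawback: lookups are relatively slow, and there is no built-in deduplication.

  - Redis: the best fit here. The IPs are stored in a Redis zset (sorted set), where every member is unique and carries a score.

- Which websites to collect IPs from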

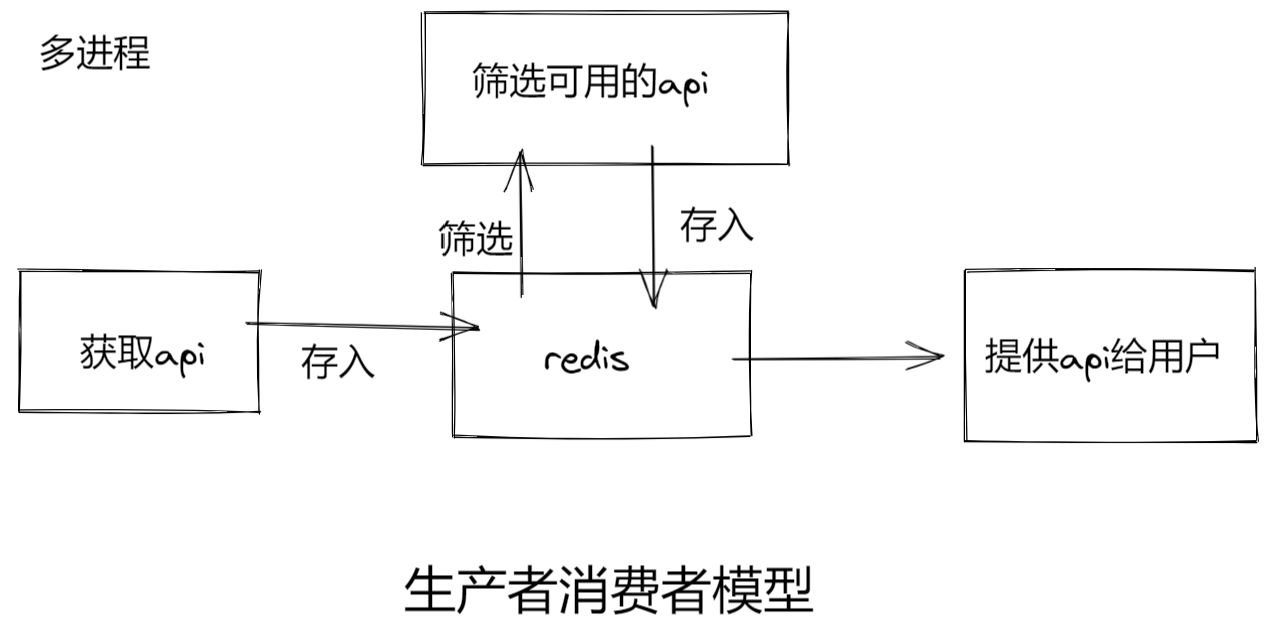
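To make the zset choice more concrete, here is a minimal in-memory sketch of the few sorted-set operations the pool relies on. `MiniZSet` is a hypothetical stand-in written for illustration only; it is not Redis and not part of the project, and it only approximates the behaviour of ZADD, ZSCORE, ZINCRBY and ZRANGEBYSCORE.

```python
class MiniZSet:
    """Hypothetical in-memory model of a sorted set: unique members, one score each."""

    def __init__(self):
        self._scores = {}  # member -> score; dict keys make members unique

    def zadd(self, member, score):
        # Like ZADD: inserts a new member or overwrites an existing member's score.
        self._scores[member] = float(score)

    def zscore(self, member):
        # Like ZSCORE: returns None when the member is absent.
        return self._scores.get(member)

    def zincrby(self, amount, member):
        # Like ZINCRBY: a missing member is treated as having score 0.
        self._scores[member] = self._scores.get(member, 0.0) + amount
        return self._scores[member]

    def zrangebyscore(self, low, high):
        # Like ZRANGEBYSCORE: members with low <= score <= high, ordered by score.
        hits = [(s, m) for m, s in self._scores.items() if low <= s <= high]
        return [m for s, m in sorted(hits)]


pool = MiniZSet()
pool.zadd("1.2.3.4:80", 10)
pool.zadd("1.2.3.4:80", 10)     # same member again: still a single entry
pool.zadd("5.6.7.8:8080", 100)
print(pool.zrangebyscore(0, 100))
```

Deduplication comes for free, but adding an existing member overwrites its score. That is why the project checks whether an IP is already stored before adding it with the initial score.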

Project architecture

- Collect IPs

- Filter IPs

- Verify that the IPs actually work

- Serve usable IPs through an API

Project structure diagram

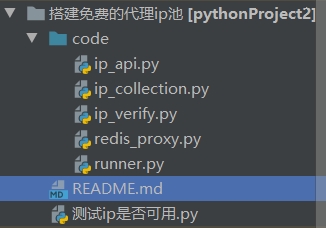

The project is laid out as follows:

Project code

The code folder

redis_proxy.py

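    # -*- encoding:utf-8 -*-
    # @time: 2022/7/4 11:32
    # @author: Maxs_hu
    """
    Middleman for Redis: every read or write of a proxy IP
    goes through this class.
    """
    from redis import Redis
    import random


    class RedisProxy:
        def __init__(self):
            # Connect to the Redis database (adjust to your own configuration)
            self.red = Redis(
                host='localhost',
                port=6379,
                db=9,
                password=123456,
                decode_responses=True
            )

        # 1. Store IPs in Redis. Check first whether the IP already exists,
        #    so that the score of an existing IP is not overwritten.
        # 2. Every IP has to be verified, so provide a way to list them all.
        # 3. Verify availability: a working IP gets the maximum score,
        #    a failing one loses a point.
        # 4. Hand a usable IP back to the user:
        #    full-score IPs first, then IPs that were verified before;
        #    if there are none, return nothing.

        def add_ip(self, ip):  # called from outside with the IP to store
            # zscore returns None for a missing member. Compare with None
            # so that an existing IP with score 0 is not re-added with 10.
            if self.red.zscore('proxy_ip', ip) is None:
                self.red.zadd('proxy_ip', {ip: 10})
                print('proxy_ip stored', ip)
            else:
                print('duplicate', ip)

        def get_all_proxy(self):
            # Return every stored IP
            return self.red.zrange('proxy_ip', 0, -1)

        def set_max_score(self, ip):
            # zadd takes a {member: score} mapping
            self.red.zadd('proxy_ip', {ip: 100})

        def deduct_score(self, ip):
            # Look up the current score first
            score = self.red.zscore('proxy_ip', ip)
            if score is None:
                return  # the IP has already been removed
            if score > 0:
                # Still has points: deduct one
                self.red.zincrby('proxy_ip', -1, ip)
            else:
                # Score has dropped to 0: remove the IP
                self.red.zrem('proxy_ip', ip)

        def effect_ip(self):
            # First try the IPs with a full score
            ips = self.red.zrangebyscore('proxy_ip', 100, 100, 0, -1)
            if ips:
                return random.choice(ips)
            # No full-score IP: fall back to scores 11 to 99, i.e. IPs that
            # passed verification before and have failed some checks since
            ips = self.red.zrangebyscore('proxy_ip', 11, 99, 0, -1)
            if ips:
                return random.choice(ips)
            print('no usable ip')
            return None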
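The scoring rules in `redis_proxy.py` can also be read as two small pure functions, which makes them easy to test without a Redis server. This is only a sketch: `after_check` and `pick` are hypothetical names, and a plain dict stands in for the zset. The numbers 10 and 100 are the ones used in the class.

```python
import random

INITIAL, MAX_SCORE = 10, 100  # initial and full score, as in redis_proxy.py


def after_check(score, ok):
    """Return the score after one verification, or None if the IP should be dropped."""
    if ok:
        return MAX_SCORE      # a working IP jumps straight to the full score
    if score > 0:
        return score - 1      # a failing IP loses one point
    return None               # nothing left to deduct: remove it


def pick(scores, rng=random):
    """Pick a full-score IP if any, else one scored between 11 and 99, else None."""
    best = [ip for ip, s in scores.items() if s == MAX_SCORE]
    if best:
        return rng.choice(best)
    fallback = [ip for ip, s in scores.items() if INITIAL < s < MAX_SCORE]
    return rng.choice(fallback) if fallback else None
```

An IP that has never passed a check stays at 10 or below, so it is never handed out. Only IPs that reached 100 at least once can land in the 11 to 99 fallback tier.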

ip_collection.py

    # -*- encoding:utf-8 -*-
    # @time: 2022/7/4 11:32
    # @author: Maxs_hu
    """
    Collects proxy IPs from free proxy sites.
    """
    from redis_proxy import RedisProxy
    import requests
    from lxml import etree
    from multiprocessing import Process
    import time
    import random

    HEADERS = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.0.0 Safari/537.36"
    }


    def get_table_rows(url):
        # Download the page and return every <tr> found inside a table.
        # The timeout keeps one slow site from blocking the whole loop.
        resp = requests.get(url, headers=HEADERS, timeout=10)
        et = etree.HTML(resp.text)
        return et.xpath('//table//tr')


    def get_kuai_ip(red):
        url = "https://free.kuaidaili.com/free/intr/"
        for tr in get_table_rows(url):
            ip = tr.xpath('./td[1]/text()')
            port = tr.xpath('./td[2]/text()')
            if not ip:  # skip rows without an IP (e.g. the header row)
                continue
            proxy_ip = ip[0] + ":" + port[0]
            red.add_ip(proxy_ip)


    def get_unknown_ip(red):
        url = "https://ip.jiangxianli.com/"
        for tr in get_table_rows(url):
            ip = tr.xpath('./td[1]/text()')
            port = tr.xpath('./td[2]/text()')
            if not ip:  # skip rows without an IP
                continue
            proxy_ip = ip[0] + ":" + port[0]
            red.add_ip(proxy_ip)


    def get_happy_ip(red):
        page = random.randint(1, 5)
        url = f'http://www.kxdaili.com/dailiip/2/{page}.html'
        for tr in get_table_rows(url):
            ip = tr.xpath('./td[1]/text()')
            port = tr.xpath('./td[2]/text()')
            if not ip:  # skip rows without an IP
                continue
            proxy_ip = ip[0] + ":" + port[0]
            red.add_ip(proxy_ip)


    def get_nima_ip(red):
        url = 'http://www.nimadaili.com/'
        for tr in get_table_rows(url):
            # Some rows are empty, so do not index with [0] right away
            ip = tr.xpath('./td[1]/text()')
            if not ip:
                continue
            red.add_ip(ip[0])


    def get_89_ip(red):
        page = random.randint(1, 26)
        url = f'https://www.89ip.cn/index_{page}.html'
        for tr in get_table_rows(url):
            ip = tr.xpath('./td[1]/text()')
            if not ip:
                continue
            red.add_ip(ip[0].strip())


    def main():
        # Create the RedisProxy instance
        red = RedisProxy()
        print("start collecting data")
        while 1:
            try:
                # More sites can be added here
                print('>>> collecting kuaidaili IPs')
                get_kuai_ip(red)
                # get_unknown_ip(red)
                print(">>> collecting kxdaili IPs")
                get_happy_ip(red)
                # print(">>> collecting nimadaili IPs")
                # get_nima_ip(red)
                print(">>> collecting 89ip IPs")
                get_89_ip(red)
                time.sleep(60)
            except Exception as e:
                print('error while storing ip', e)
                time.sleep(60)


    if __name__ == '__main__':
        main()
        # To run in a child process instead:
        # p = Process(target=main)
        # p.start()
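The architecture lists a filtering step, but the collectors above store whatever text the table cell contains. One possible filter, sketched here with only the standard library, normalises a scraped string and rejects anything that is not a valid IPv4 `ip:port` pair (for example an entry with no port). `parse_proxy` is a hypothetical helper, not part of the original project.

```python
import ipaddress


def parse_proxy(text):
    """Return a clean 'ip:port' string, or None if text is not a valid IPv4 endpoint."""
    host, sep, port = text.strip().rpartition(":")
    # No colon at all, or a port that is not plain ASCII digits
    if not sep or not (port.isascii() and port.isdigit()):
        return None
    try:
        ip = ipaddress.IPv4Address(host)
    except ipaddress.AddressValueError:
        return None
    if not 1 <= int(port) <= 65535:
        return None
    return f"{ip}:{int(port)}"
```

A collector could then call `red.add_ip(...)` only when `parse_proxy` returns a value, so malformed rows never reach the verification stage.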

ip_verify.py

    # -*- encoding:utf-8 -*-
    # @time: 2022/7/4 11:34
    # @author: Maxs_hu
    """
    Verifies that the stored IPs are usable.
    Requests are sent with coroutines to speed things up.
    """
    from redis_proxy import RedisProxy
    from multiprocessing import Process
    import asyncio
    import aiohttp
    import time


    async def verify_ip(ip, red, sem):
        timeout = aiohttp.ClientTimeout(total=10)  # wait at most ten seconds per request
        try:
            async with sem:
                async with aiohttp.ClientSession() as session:
                    async with session.get(url='http://www.baidu.com/',
                                           proxy='http://' + ip,
                                           timeout=timeout) as resp:
                        await resp.text()  # read the body through the proxy
                        if resp.status in [200, 302]:
                            # Usable: set the maximum score
                            red.set_max_score(ip)
                            print('verified, score set to max', ip)
                        else:
                            # Not usable: deduct a point
                            red.deduct_score(ip)
                            print('bad ip, one point deducted', ip)
        except Exception as e:
            print('error', e)
            red.deduct_score(ip)
            print('bad ip, one point deducted', ip)


    async def task(red):
        ips = red.get_all_proxy()
        sem = asyncio.Semaphore(30)  # at most thirty requests in flight
        tasks = []
        for ip in ips:
            tasks.append(asyncio.create_task(verify_ip(ip, red, sem)))
        if tasks:
            await asyncio.wait(tasks)


    def main():
        red = RedisProxy()
        time.sleep(5)  # initial wait so the collector can gather some data
        print("start verifying availability")
        while 1:
            try:
                asyncio.run(task(red))
                time.sleep(100)
            except Exception as e:
                print("ip_verify error", e)
                time.sleep(100)


    if __name__ == '__main__':
        main()
        # To run in a child process instead (pass the function, do not call it):
        # p = Process(target=main)
        # p.start()
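The key idea in `ip_verify.py` is the semaphore that caps how many checks run at once. The pattern can be tried without any network access, as in the sketch below. `check_all` and `fake_check` are hypothetical names, and `fake_check` merely pretends that proxies on port 80 work.

```python
import asyncio


async def check_all(items, check, limit=30):
    """Run check(item) for every item with at most `limit` checks in flight."""
    sem = asyncio.Semaphore(limit)

    async def guarded(item):
        async with sem:  # wait here while `limit` checks are already running
            try:
                return item, await check(item)
            except Exception:
                return item, False  # treat any error as a failed check

    pairs = await asyncio.gather(*(guarded(i) for i in items))
    return dict(pairs)


async def fake_check(ip):
    # Hypothetical stand-in for the real HTTP request sent through the proxy
    await asyncio.sleep(0.01)
    return ip.endswith(":80")


if __name__ == "__main__":
    print(asyncio.run(check_all(["1.1.1.1:80", "2.2.2.2:81"], fake_check)))
```

Returning the results as a dict keeps the scoring decision in one place: the caller can loop over it and raise or deduct scores afterwards.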

ip_api.py

    # -*- encoding:utf-8 -*-
    # @time: 2022/7/4 11:35
    # @author: Maxs_hu
    """
    Exposes the IP API to users: a small backend server that returns
    a usable proxy IP to whoever requests it. Options:
    1. flask
    2. sanic --> used here because it is a little simpler
    """
    from redis_proxy import RedisProxy
    from sanic import Sanic, json
    from sanic_cors import CORS
    from multiprocessing import Process

    # Create the app
    app = Sanic('ip')  # any name will do
    # Allow cross-origin requests
    CORS(app)

    red = RedisProxy()


    @app.route('/maxs_hu_ip')  # register the route
    def api(req):  # the first parameter is always the request object
        ip = red.effect_ip()
        return json({"ip": ip})


    def main():
        # Start the sanic server
        app.run(host='127.0.0.1', port=1234)


    if __name__ == '__main__':
        main()
        # To run in a child process instead (pass the function, do not call it):
        # p = Process(target=main)
        # p.start()

runner.py

    # -*- encoding:utf-8 -*-
    # @time: 2022/7/5 17:36
    # @author: Maxs_hu
    from ip_api import main as api_run
    from ip_collection import main as coll_run
    from ip_verify import main as veri_run
    from multiprocessing import Process


    def main():
        # Three independent processes that do not interfere with each other
        p1 = Process(target=api_run)  # pass the function object itself, do not call it
        p2 = Process(target=coll_run)
        p3 = Process(target=veri_run)

        p1.start()
        p2.start()
        p3.start()


    if __name__ == '__main__':
        main()

測試ip是否可用.py (tests whether an IP is usable)

    # -*- encoding:utf-8 -*-
    # @time: 2022/7/5 18:15
    # @author: Maxs_hu
    import requests


    def get_proxy():
        url = "http://127.0.0.1:1234/maxs_hu_ip"
        resp = requests.get(url)
        return resp.json()


    def main():
        # Fetch once and reuse: every call to the API may return a different IP
        ip = get_proxy()["ip"]
        if ip is None:
            print('no usable ip')
            return
        url = 'http://mip.chinaz.com/?query=' + ip
        proxies = {
            "http": 'http://' + ip,
            "https": 'http://' + ip  # the free proxies currently support plain HTTP only
        }
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.0.0 Safari/537.36",
        }
        resp = requests.get(url, proxies=proxies, headers=headers)
        resp.encoding = 'utf-8'
        print(resp.text)  # the page reports the IP's physical location


    if __name__ == '__main__':
        main()

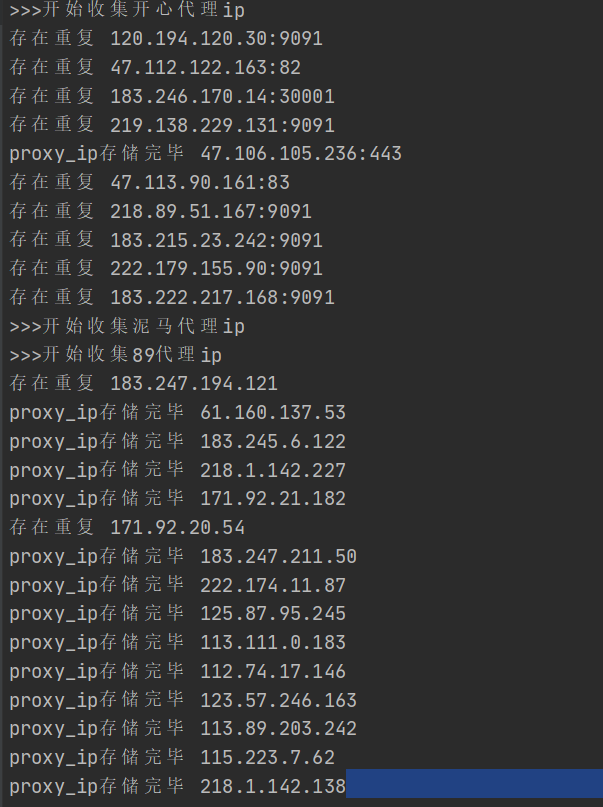
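When the pool is empty the API answers with `{"ip": null}`, so client code has to handle that case before building the `proxies` argument for `requests`. A small helper along these lines keeps that logic in one place. `build_proxies` is a hypothetical name, and the payload shape is assumed from `ip_api.py`.

```python
def build_proxies(payload):
    """Turn the API payload {"ip": "host:port" or None} into a requests-style proxies dict."""
    ip = payload.get("ip")
    if not ip:
        return None  # the pool had nothing to offer
    proxy_url = "http://" + ip
    # Both schemes go through the same plain-HTTP proxy
    return {"http": proxy_url, "https": proxy_url}
```

A caller can then write `proxies = build_proxies(get_proxy())` and skip the request, or retry later, when the result is `None`.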

Results

Screenshot of the project running:

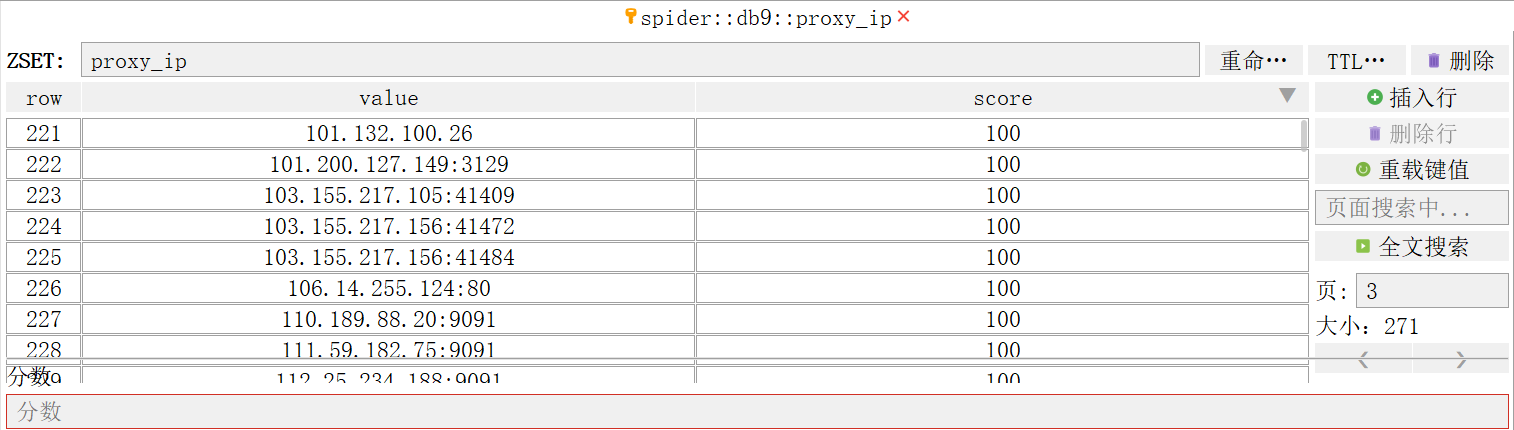

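Screenshot of the data stored in Redis:

Summary

- Free proxy IPs only work for plain HTTP pages and are not very reliable. If you need better ones, buy paid proxies and add them to the pool.

- The API can be deployed on your own server so that other people can query it. With some full-stack knowledge, login and paid access could be added later to extend it further.

- The architecture follows the producer-consumer model: the three modules run at the same time, each in its own process, without affecting one another.

- Some design details of the pool still need work, but overall it runs reasonably well. Problems will be fixed as they come up.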

This article comes from cnblogs (博客園), author: {Max}. For study and reference only.