
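System environment:

centos 7

git: gitee.com (any Git server would work)

jenkins: LTS release, installed directly on a server rather than inside the k8s cluster

harbor: offline installer, used to store Docker images

For convenience, the Kubernetes cluster is built with kubeadm on three machines, with IPVS enabled. The servers and their roles are: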

| HOSTNAME | IP address | Role |
|---|---|---|
| master.test.cn | 192.168.184.31 | k8s-master |
| node1.test.cn | 192.168.184.32 | k8s-node1 |
| node2.test.cn | 192.168.184.33 | k8s-node2 |
| soft.test.cn | 192.168.184.34 | harbor, jenkins |

I. Kubernetes setup

This section follows: https://www.cnblogs.com/lovesKey/p/10888006.html

1.1 System configuration

First add all four machines to /etc/hosts on every host:

[root@master ~]# cat /etc/hosts
192.168.184.31 master.test.cn
192.168.184.32 node1.test.cn fanli.test.cn
192.168.184.33 node2.test.cn
192.168.184.34 soft.test.cn jenkins.test.cn harbor.test.cn

Disable swap.

Temporarily:

swapoff -a

Permanently (delete or comment out the swap line, then reboot):

vim /etc/fstab
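
The manual fstab edit can also be scripted. The following is a minimal sketch, not part of the original procedure: the helper name `comment_out_swap` and the sample entries are made up for illustration, and the demo runs on a throwaway file rather than on /etc/fstab.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# comment_out_swap is a hypothetical helper name, not part of any tool.
comment_out_swap() {
    local file="$1" tmp
    tmp="$(mktemp)"
    # Prefix '#' to uncommented lines that contain a whitespace-delimited "swap" field.
    sed -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a temporary file; on a real node the argument would be /etc/fstab.
demo="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/centos-root /     xfs  defaults 0 0' \
    '/dev/mapper/centos-swap swap  swap defaults 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
```

Back up /etc/fstab before running anything like this against the real file.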

Stop and disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux:

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Reboot for the SELinux change to take effect.
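
The sed command above only matches a config that currently says `enforcing`. A hedged variant that also covers `permissive` is sketched below; `disable_selinux_config` is an illustrative name and the demo edits a temporary file, not /etc/selinux/config.

```shell
#!/bin/bash
# Set SELINUX=disabled whatever the current mode is.
# disable_selinux_config is a hypothetical helper name.
disable_selinux_config() {
    local file="$1" tmp
    tmp="$(mktemp)"
    # "^SELINUX=" does not match the SELINUXTYPE= line, which is left alone.
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a temporary file shaped like /etc/selinux/config.
demo="$(mktemp)"
printf '%s\n' 'SELINUX=permissive' 'SELINUXTYPE=targeted' > "$demo"
disable_selinux_config "$demo"
cat "$demo"

# On a real node: disable_selinux_config /etc/selinux/config
# Running `setenforce 0` additionally switches the live system to permissive
# mode until the reboot.
```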

Pass bridged IPv4 traffic to the iptables chains, then apply the settings:

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system

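1.2 Prerequisites for enabling IPVS in kube-proxy

IPVS is already part of the mainline kernel, so the only prerequisite for enabling it in kube-proxy is loading the following kernel modules: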

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

Run the following script on every Kubernetes node (master, node1 and node2):

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

The output shows that the modules are loaded:

nf_conntrack_ipv4      15053  5
nf_defrag_ipv4         12729  1 nf_conntrack_ipv4
ip_vs_sh               12688  0
ip_vs_wrr              12697  0
ip_vs_rr               12600  16
ip_vs                 145497  22 ip_vs_rr,ip_vs_sh,ip_vs_wrr
nf_conntrack          139264  9 ip_vs,nf_nat,nf_nat_ipv4,nf_nat_ipv6,xt_conntrack,nf_nat_masquerade_ipv4,nf_conntrack_netlink,nf_conntrack_ipv4,nf_conntrack_ipv6
libcrc32c              12644  4 xfs,ip_vs,nf_nat,nf_conntrack

The script creates /etc/sysconfig/modules/ipvs.modules so that the required modules are loaded automatically after a node reboots. Use lsmod | grep -e ip_vs -e nf_conntrack_ipv4 to check that the kernel modules are loaded.
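
Reading the lsmod table by eye gets tedious across three nodes. As a rough sketch, the check can be reduced to printing only the modules that are missing; the helper name `missing_ipvs_modules` is an assumption for illustration, and it reads lsmod-style text from standard input.

```shell
#!/bin/bash
# Print every required IPVS module that is absent from lsmod-style input.
# missing_ipvs_modules is a hypothetical helper name.
missing_ipvs_modules() {
    local loaded mod
    # The first column of lsmod output is the module name.
    loaded="$(awk '{print $1}')"
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        printf '%s\n' "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}

# Demo with captured text; on a node, pipe the live table in instead:
#   lsmod | missing_ipvs_modules
printf '%s\n' 'ip_vs_sh 12688 0' 'ip_vs 145497 22 ip_vs_rr,ip_vs_sh' | missing_ipvs_modules
```

Empty output means all five modules from the list above are present.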

Install the ipset package on all nodes. To make the IPVS rules easier to inspect, also install ipvsadm (optional):

yum install ipset ipvsadm

1.3 Install Docker (all nodes)

Configure a domestic Docker mirror (Aliyun):

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

Install the latest docker-ce:

yum -y install docker-ce
systemctl enable docker && systemctl start docker
docker --version

Configure the Kubernetes repository (Aliyun):

cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Import the GPG keys manually, or turn the check off by setting gpgcheck=0 in the repo file:

rpmkeys --import https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
rpmkeys --import https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Install kubeadm, kubelet and kubectl:

yum install -y kubelet kubeadm kubectl
systemctl enable kubelet
systemctl start kubelet

1.4 Deploy Kubernetes

Initialize the master:

kubeadm init \
    --apiserver-advertise-address=192.168.184.31 \
    --image-repository lank8s.cn \
    --kubernetes-version v1.18.6 \
    --pod-network-cidr=10.244.0.0/16

Pay attention to the output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.184.31:6443 --token a9vg9z.dlboqvfuwwzauufq \
    --discovery-token-ca-cert-hash sha256:c2ade88a856f15de80240ff4994661a6daa668113cea0c4a4073f701f05192cb

On the master node, run the following commands to set up the current user's configuration, which kubectl needs:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the pod network plugin:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

On each worker node, run the join command printed by kubeadm init to add the node to the cluster:

kubeadm join 192.168.184.31:6443 --token a9vg9z.dlboqvfuwwzauufq --discovery-token-ca-cert-hash sha256:c2ade88a856f15de80240ff4994661a6daa668113cea0c4a4073f701f05192cb

Use kubectl get po -A -o wide to confirm that every Pod is in the Running state.

[root@master ~]# kubectl get po -A -o wide
NAMESPACE     NAME                                     READY   STATUS    RESTARTS   AGE   IP               NODE             NOMINATED NODE   READINESS GATES
kube-system   coredns-5c579bbb7b-flzkd                 1/1     Running   5          2d    10.244.1.14      node1.test.cn    <none>           <none>
kube-system   coredns-5c579bbb7b-qz5m8                 1/1     Running   4          2d    10.244.2.9       node2.test.cn    <none>           <none>
kube-system   etcd-master.test.cn                      1/1     Running   5          2d    192.168.184.31   master.test.cn   <none>           <none>
kube-system   kube-apiserver-master.test.cn            1/1     Running   5          2d    192.168.184.31   master.test.cn   <none>           <none>
kube-system   kube-controller-manager-master.test.cn   1/1     Running   5          2d    192.168.184.31   master.test.cn   <none>           <none>
kube-system   kube-flannel-ds-amd64-bhmps              1/1     Running   6          2d    192.168.184.33   node2.test.cn    <none>           <none>
kube-system   kube-flannel-ds-amd64-mbpvb              1/1     Running   6          2d    192.168.184.32   node1.test.cn    <none>           <none>
kube-system   kube-flannel-ds-amd64-xnw2l              1/1     Running   6          2d    192.168.184.31   master.test.cn   <none>           <none>
kube-system   kube-proxy-8nkgs                         1/1     Running   6          2d    192.168.184.32   node1.test.cn    <none>           <none>
kube-system   kube-proxy-jxtfk                         1/1     Running   4          2d    192.168.184.31   master.test.cn   <none>           <none>
kube-system   kube-proxy-ls7xg                         1/1     Running   4          2d    192.168.184.33   node2.test.cn    <none>           <none>
kube-system   kube-scheduler-master.test.cn            1/1     Running   4          2d    192.168.184.31   master.test.cn   <none>           <none>

Enable IPVS in kube-proxy

# Edit config.conf in the kube-system/kube-proxy ConfigMap: change mode: "" to mode: "ipvs", then save and quit
[root@k8smaster centos]# kubectl edit cm kube-proxy -n kube-system
configmap/kube-proxy edited
# Delete the existing kube-proxy pods
[root@master centos]# kubectl get pod -n kube-system |grep kube-proxy |awk '{system("kubectl delete pod "$1" -n kube-system")}'
pod "kube-proxy-2m5jh" deleted
pod "kube-proxy-nfzfl" deleted
pod "kube-proxy-shxdt" deleted
# Check that the new kube-proxy pods are running
[root@master centos]# kubectl get pod -n kube-system | grep kube-proxy
kube-proxy-54qnw   1/1   Running   0   24s
kube-proxy-bzssq   1/1   Running   0   14s
kube-proxy-cvlcm   1/1   Running   0   37s
# Check the logs; `Using ipvs Proxier.` means kube-proxy is now in IPVS mode
[root@master centos]# kubectl logs kube-proxy-54qnw -n kube-system
I0518 20:24:09.319160 1 server_others.go:176] Using ipvs Proxier.
W0518 20:24:09.319751 1 proxier.go:386] IPVS scheduler not specified, use rr by default
I0518 20:24:09.320035 1 server.go:562] Version: v1.14.2
I0518 20:24:09.334372 1 conntrack.go:52] Setting nf_conntrack_max to 131072
I0518 20:24:09.334853 1 config.go:102] Starting endpoints config controller
I0518 20:24:09.334916 1 controller_utils.go:1027] Waiting for caches to sync for endpoints config controller
I0518 20:24:09.334945 1 config.go:202] Starting service config controller
I0518 20:24:09.334976 1 controller_utils.go:1027] Waiting for caches to sync for service config controller
I0518 20:24:09.435153 1 controller_utils.go:1034] Caches are synced for service config controller
I0518 20:24:09.435271 1 controller_utils.go:1034] Caches are synced for endpoints config controller
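
The interactive edit can probably be replaced by a text filter when the change has to be repeated. The sketch below is an assumption-laden illustration: `set_ipvs_mode` is a made-up name, and the demo input only imitates the shape of the config.conf key in the ConfigMap.

```shell
#!/bin/bash
# Rewrite an empty kube-proxy mode to "ipvs" in text read from stdin.
# set_ipvs_mode is a hypothetical helper name.
set_ipvs_mode() {
    # Only a line of the form mode: "" is touched; other empty strings stay.
    sed -E 's/^([[:space:]]*mode:[[:space:]]*)""[[:space:]]*$/\1"ipvs"/'
}

# Demo on a fragment shaped like the kube-proxy configuration.
printf '%s\n' '    metricsBindAddress: ""' '    mode: ""' '    nodePortAddresses: null' | set_ipvs_mode

# Assumed cluster usage (not run here):
#   kubectl get cm kube-proxy -n kube-system -o yaml | set_ipvs_mode | kubectl replace -f -
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```

The kube-proxy pods still have to be deleted afterwards so that the DaemonSet recreates them with the new mode.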

Check that the nodes are Ready:

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master.test.cn Ready master 2d v1.18.6

node1.test.cn Ready <none> 2d v1.18.6

node2.test.cn Ready <none> 2d v1.18.6

At this point K8S is installed with kubeadm and runs kube-proxy in IPVS mode.

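II. Installing Harbor

2.1 Preparation

Harbor is started through docker-compose, so first install docker-compose on the soft.test.cn node and make it executable:

curl -L https://github.com/docker/compose/releases/download/1.26.1/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose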

2.2 Download Harbor

Harbor releases: https://github.com/goharbor/harbor/releases

Then follow the official installation guide: https://github.com/goharbor/harbor/blob/master/docs/install-config/_index.md

The settings below use my own domain name. If you follow along, replace harbor.test.cn with your own domain.

Download the offline installer:

wget https://github.com/goharbor/harbor/releases/download/v1.10.4/harbor-offline-installer-v1.10.4.tgz

Unpack it:

[root@soft ~]# tar zxvf harbor-offline-installer-v1.10.4.tgz

2.3 Set up HTTPS

Generate the CA certificate private key:

openssl genrsa -out ca.key 4096

Generate the CA certificate:

openssl req -x509 -new -nodes -sha512 -days 3650 \
    -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=harbor.test.cn" \
    -key ca.key \
    -out ca.crt

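2.4 Generate the server certificate

The certificate usually consists of a .crt file and a .key file, for example yourdomain.com.crt and yourdomain.com.key.

Generate the private key:

openssl genrsa -out harbor.test.cn.key 4096

Generate the certificate signing request (CSR)

Adapt the values in the -subj option to reflect your organization. If you connect to the Harbor host by FQDN, you must specify it as the common name (CN) attribute and use it in the key and CSR file names.

openssl req -sha512 -new \
    -subj "/C=CN/ST=Beijing/L=Beijing/O=example/OU=Personal/CN=harbor.test.cn" \
    -key harbor.test.cn.key \
    -out harbor.test.cn.csr

Generate the x509 v3 extension file

Whether you connect to the Harbor host by FQDN or by IP address, you must create this file so that the certificate generated for the Harbor host meets the Subject Alternative Name (SAN) and x509 v3 extension requirements. Replace the DNS entries to reflect your domain.

cat > v3.ext <<-EOF
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1=test.cn
DNS.2=test
DNS.3=harbor.test.cn
EOF

Use the v3.ext file to generate the certificate for the Harbor host

Use the Harbor host name in the CSR and CRT file names.

openssl x509 -req -sha512 -days 3650 \
    -extfile v3.ext \
    -CA ca.crt -CAkey ca.key -CAcreateserial \
    -in harbor.test.cn.csr \
    -out harbor.test.cn.crt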

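2.5 Provide the certificates to Harbor and Docker

After generating ca.crt, harbor.test.cn.crt and harbor.test.cn.key, you must provide them to Harbor and to Docker, and reconfigure Harbor to use them.

Copy the server certificate and key into the certificate folder on the Harbor host:

mkdir -p /data/cert/
cp harbor.test.cn.crt /data/cert/
cp harbor.test.cn.key /data/cert/

Convert harbor.test.cn.crt to harbor.test.cn.cert for Docker to use.

The Docker daemon interprets .crt files as CA certificates and .cert files as client certificates.

openssl x509 -inform PEM -in harbor.test.cn.crt -out harbor.test.cn.cert

Copy the server certificate, key and CA file into the Docker certificates folder on the Harbor host. The folder must be created first.

mkdir -p /etc/docker/certs.d/harbor.test.cn/
cp harbor.test.cn.cert /etc/docker/certs.d/harbor.test.cn/
cp harbor.test.cn.key /etc/docker/certs.d/harbor.test.cn/
cp ca.crt /etc/docker/certs.d/harbor.test.cn/

Restart Docker:

systemctl restart docker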

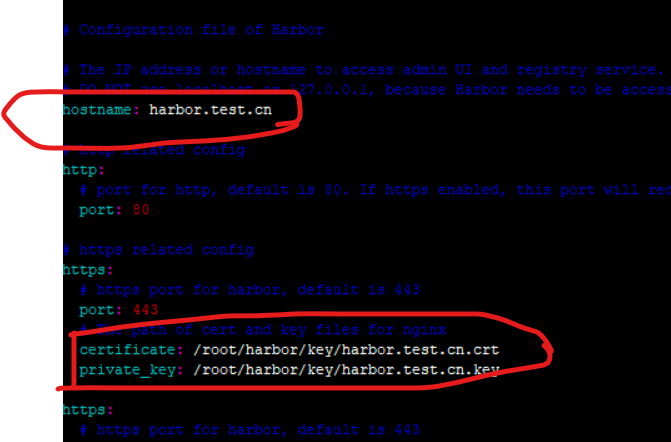

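2.6 Deploy Harbor

Edit the configuration file harbor.yml. Two things need to change:

1. Set hostname.

2. Set the certificate and key paths (the certificate and private_key entries).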
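
The two harbor.yml changes can be sketched as a small script. Treat it as an illustration under assumptions: `configure_harbor_yml` is a hypothetical helper, the sample file is a cut-down imitation of the v1.10 template, and /data/cert is the certificate folder used earlier in this walkthrough.

```shell
#!/bin/bash
# Point a harbor.yml-style file at a hostname and its certificate pair.
# configure_harbor_yml is a hypothetical helper name; hostname, certificate
# and private_key are the keys being rewritten.
configure_harbor_yml() {
    local file="$1" host="$2" cert_dir="$3" tmp
    tmp="$(mktemp)"
    sed -E \
        -e "s#^hostname:.*#hostname: ${host}#" \
        -e "s#^([[:space:]]*certificate:).*#\1 ${cert_dir}/${host}.crt#" \
        -e "s#^([[:space:]]*private_key:).*#\1 ${cert_dir}/${host}.key#" \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a temporary file; on the Harbor host the target would be harbor.yml.
demo="$(mktemp)"
printf '%s\n' \
    'hostname: reg.mydomain.com' \
    'https:' \
    '  port: 443' \
    '  certificate: /your/certificate/path' \
    '  private_key: /your/private/key/path' > "$demo"
configure_harbor_yml "$demo" harbor.test.cn /data/cert
cat "$demo"
```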

Run the prepare script to enable HTTPS:

[root@soft ~]# cd harbor

[root@soft harbor]# ./prepare

When it finishes, run install.sh:

[root@soft harbor]# ./install.sh

Common Harbor commands

Check the result. Every container is Up, and ports 80 and 443 are mapped:

[root@soft harbor]# docker-compose ps
Name                Command                          State          Ports
---------------------------------------------------------------------------------------------------------------
harbor-core         /harbor/harbor_core              Up (healthy)
harbor-db           /docker-entrypoint.sh            Up (healthy)   5432/tcp
harbor-jobservice   /harbor/harbor_jobservice ...    Up (healthy)
harbor-log          /bin/sh -c /usr/local/bin/ ...   Up (healthy)   127.0.0.1:1514->10514/tcp
harbor-portal       nginx -g daemon off;             Up (healthy)   8080/tcp
nginx               nginx -g daemon off;             Up (healthy)   0.0.0.0:80->8080/tcp, 0.0.0.0:443->8443/tcp
redis               redis-server /etc/redis.conf     Up (healthy)   6379/tcp
registry            /home/harbor/entrypoint.sh       Up (healthy)   5000/tcp
registryctl         /home/harbor/start.sh            Up (healthy)

Stop Harbor:

[root@soft harbor]# docker-compose down -v
Stopping nginx ... done
Stopping harbor-jobservice ... done
Stopping harbor-core ... done
Stopping harbor-portal ... done
Stopping redis ... done
Stopping registryctl ... done
Stopping harbor-db ... done
Stopping registry ... done
Stopping harbor-log ... done
Removing nginx ... done
Removing harbor-jobservice ... done
Removing harbor-core ... done
Removing harbor-portal ... done
Removing redis ... done
Removing registryctl ... done
Removing harbor-db ... done
Removing registry ... done
Removing harbor-log ... done
Removing network harbor_harbor

Start Harbor:

[root@soft harbor]# docker-compose up -d
Creating network "harbor_harbor" with the default driver
Creating harbor-log ... done
Creating registry ... done
Creating harbor-db ... done
Creating redis ... done
Creating harbor-portal ... done
Creating registryctl ... done
Creating harbor-core ... done
Creating nginx ... done
Creating harbor-jobservice ... done

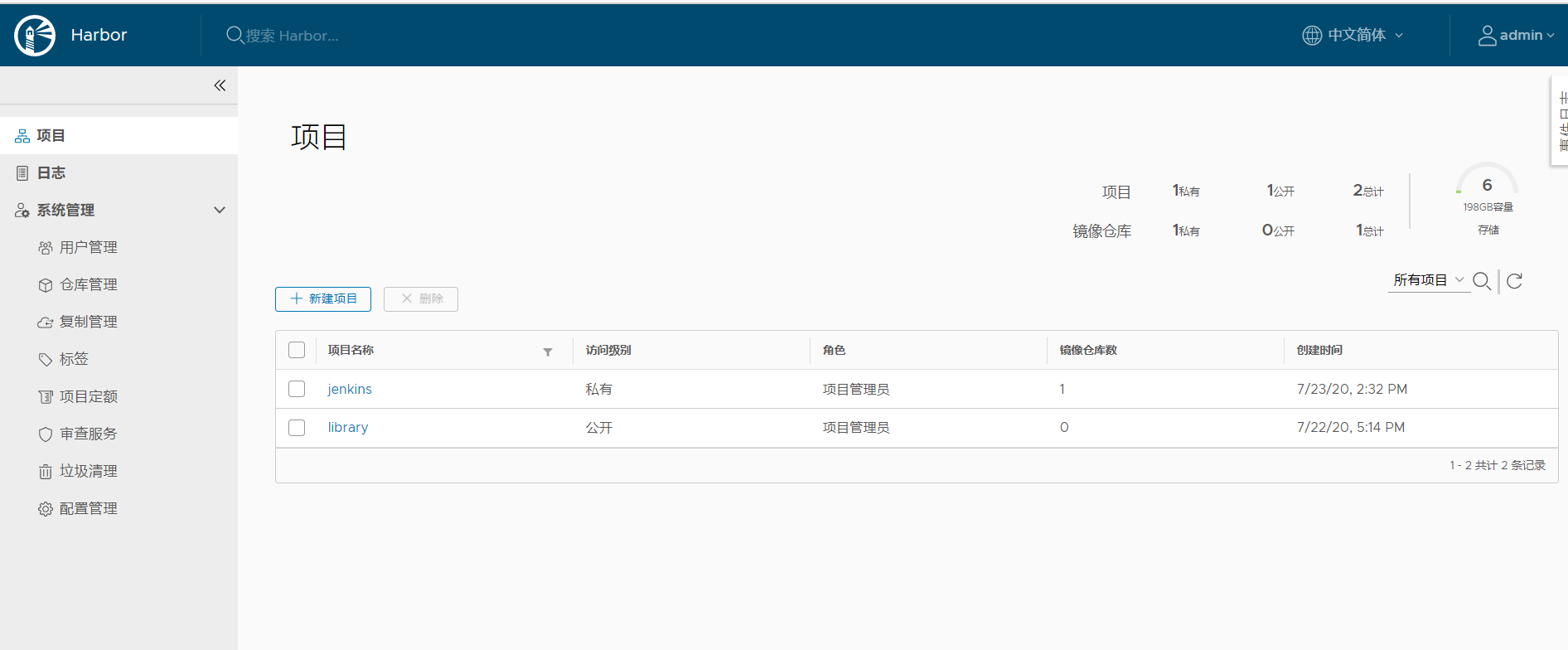

2.7 登錄測試

瀏覽器登錄https://harbor.test.cn 需要電腦配置hosts.賬號admin 密碼Harbor12345

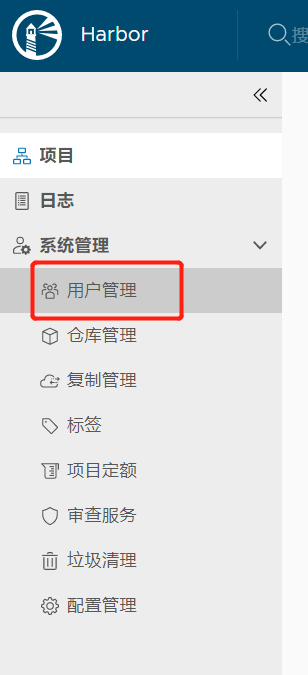

創建一個jenkins用戶,用於jenkins使用

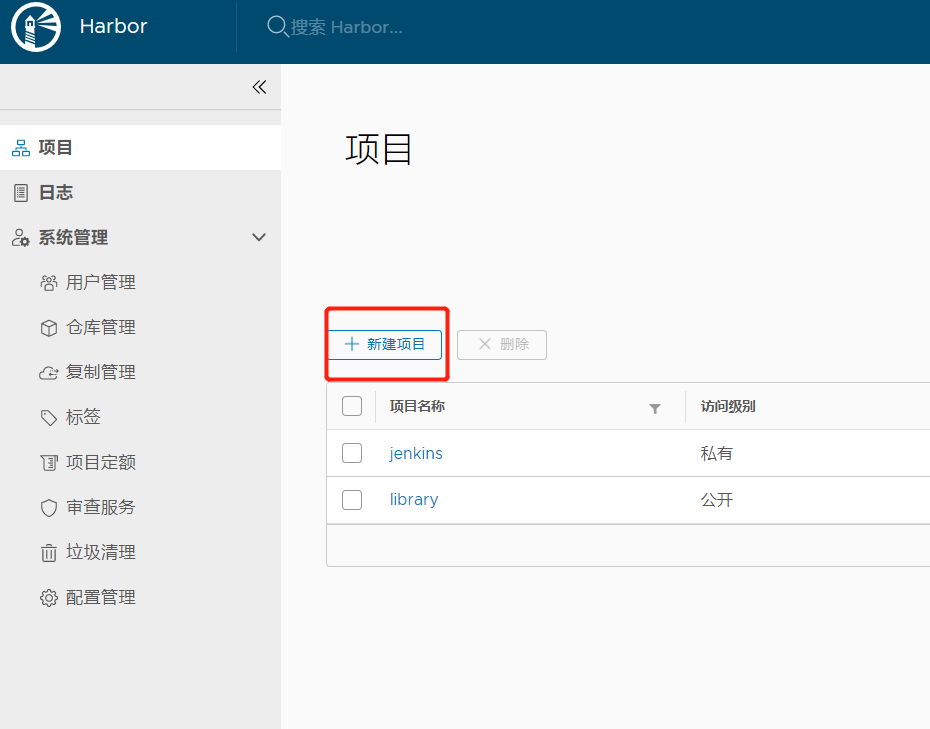

新建一個jenkins項目用於jenkins後續部署用

添加成員

在需要上傳鏡像的伺服器上修改docker倉庫連接方式為http,否則預設https無法連接。這裡以harbor.test.cn上我修改的sonarqube鏡像為例

vim /etc/docker/daemon.json

加入 { "insecure-registries" : ["harbor.test.cn"] }

重啟Docker生效

systemctl restart docker

III. Jenkins

Installation

Jenkins website: https://www.jenkins.io/zh/

Packages are available for different systems, and you can install either the LTS (long-term support) release or the weekly release. I install the LTS release here:

[root@soft ~]# wget https://mirrors.tuna.tsinghua.edu.cn/jenkins/redhat-stable/jenkins-2.235.2-1.1.noarch.rpm

Install the JDK:

[root@soft harbor]# yum install -y java-1.8.0-openjdk

Install Jenkins:

[root@soft harbor]# yum localinstall -y jenkins-2.235.2-1.1.noarch.rpm

Then enable Jenkins at boot and start it:

[root@soft harbor]# systemctl enable jenkins

[root@soft harbor]# systemctl start jenkins

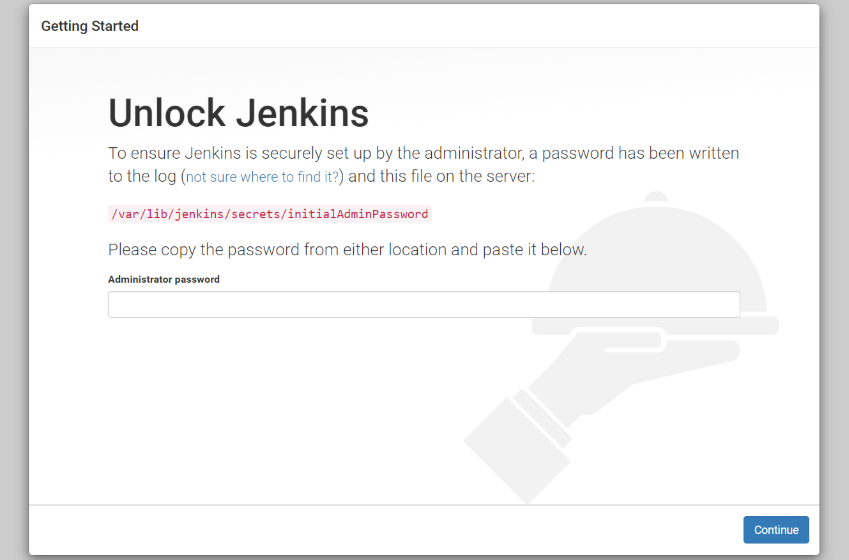

我們訪問jenkins頁面:http://192.168.184.34:8080/,可以看到jenkins已經可以初始化了

按照提示的路徑查看密碼

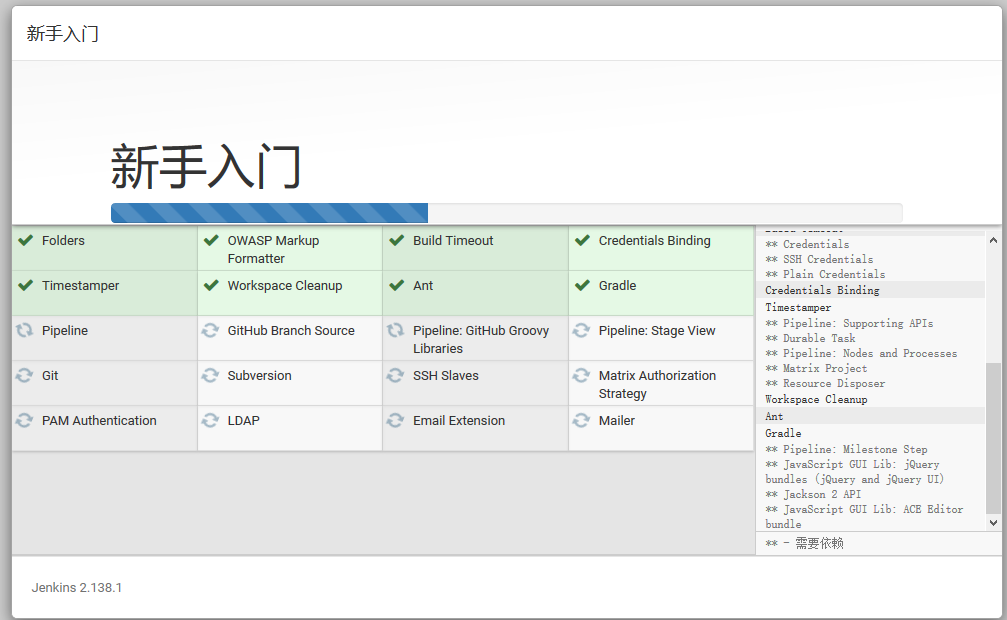

選擇安裝插件,第一個為預設安裝,第二個為手動這裡選擇預設的

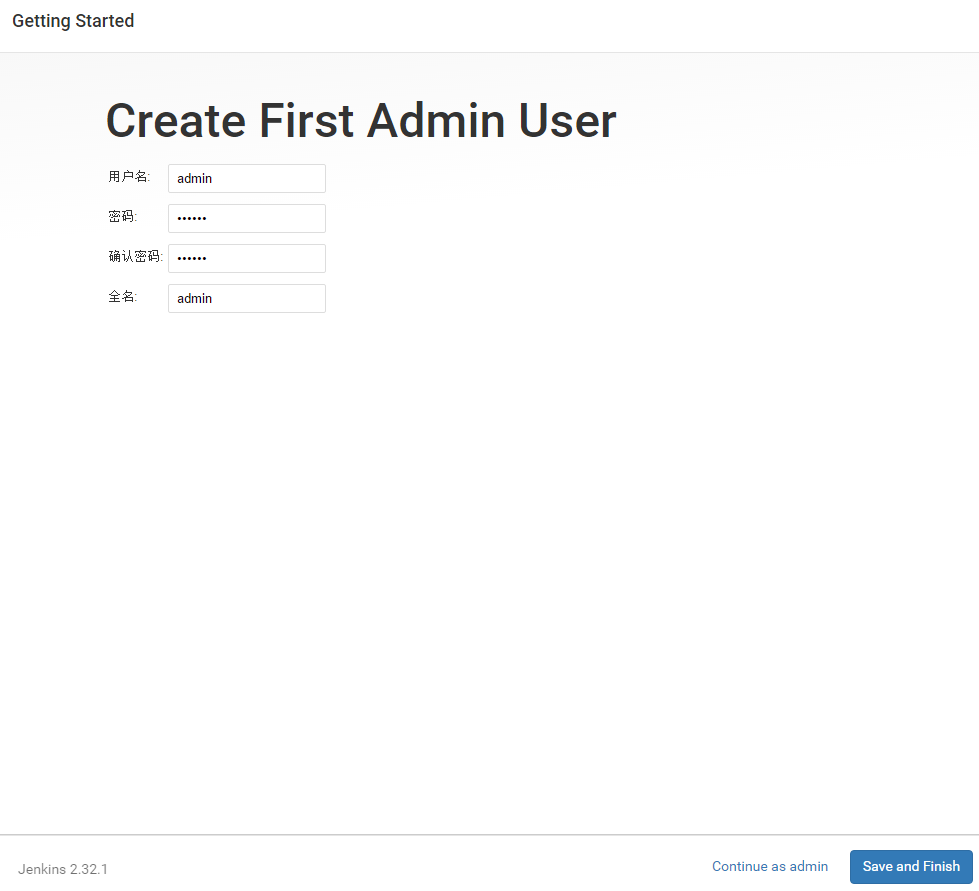

安裝完插件後,創建新用戶

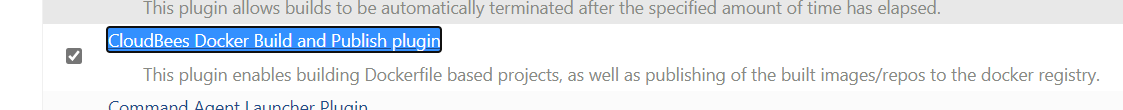

我們安裝插件CloudBees Docker Build and Publish plugin

安裝完成後我們新建item

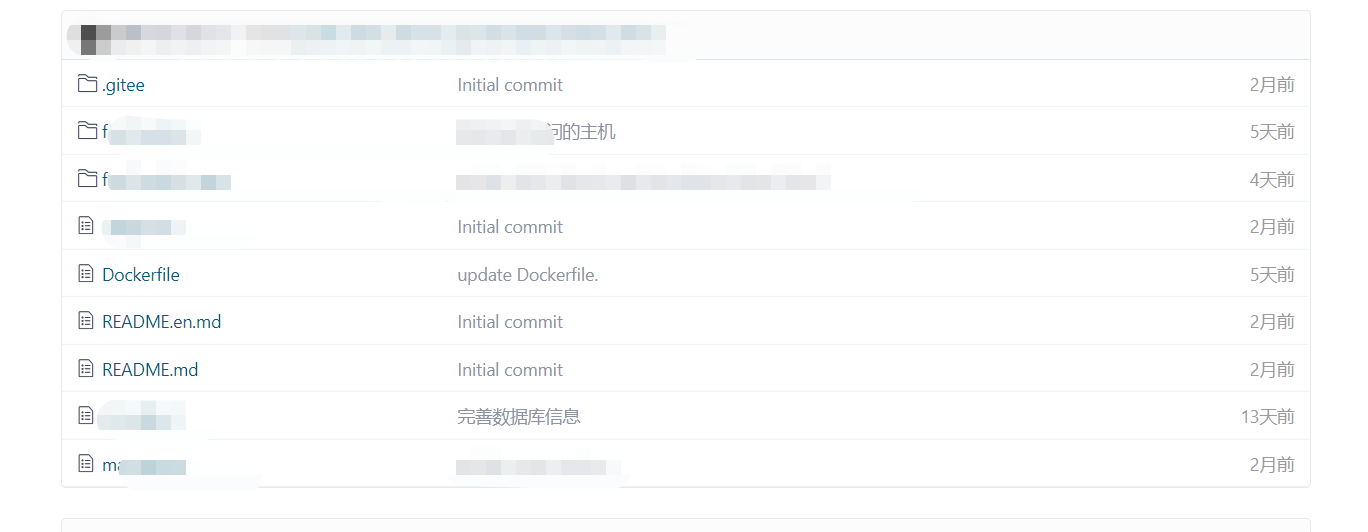

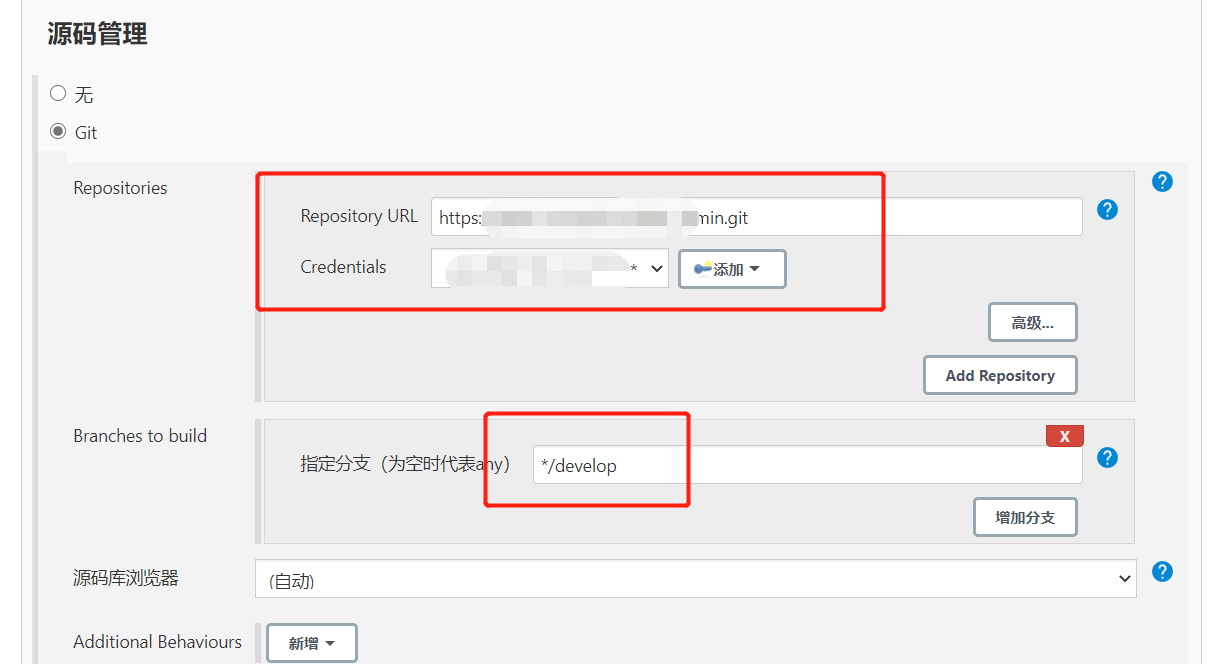

在源碼管理的這裡按需選擇,用svn就選svn,用git就選git,我這裡選擇了gitee網站上自己寫的測試代碼 django構建的,同樣分支預設應該為master,我這裡按需填寫了develop,自己按需填寫。

註意Dockerfile 一定要和代碼放在同一處(當前目錄最頂層),這樣docker build and publish 插件才能生效

Dockerfile

FROM python:3.6-alpine
ENV PYTHONUNBUFFERED 1
WORKDIR /app
RUN pip install django -i https://pypi.douban.com/simple
COPY . /app
CMD python /app/manage.py runserver 0.0.0.0:8000

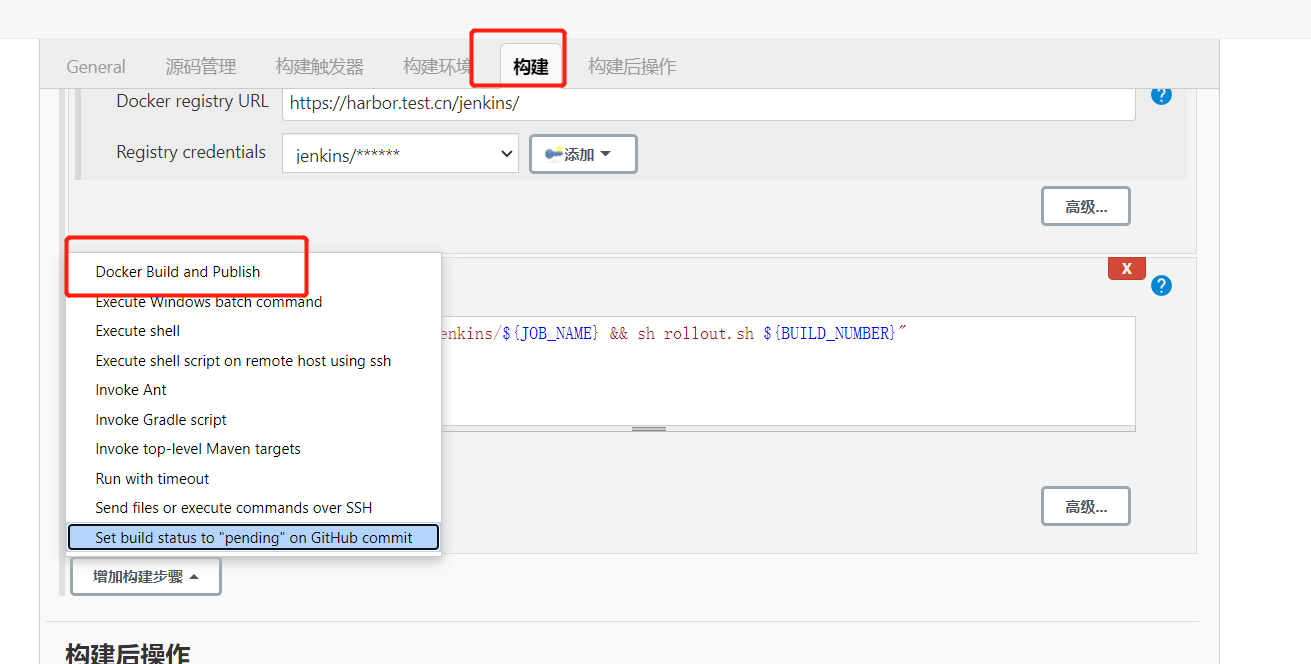

Then, in the Build section, choose Docker Build and Publish.

Give /var/run/docker.sock on the soft.test.cn host mode 666:

[root@soft harbor]# chmod 666 /var/run/docker.sock

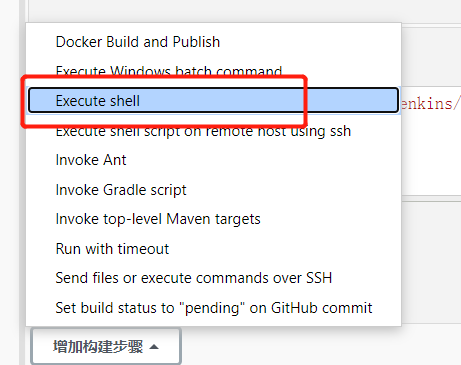

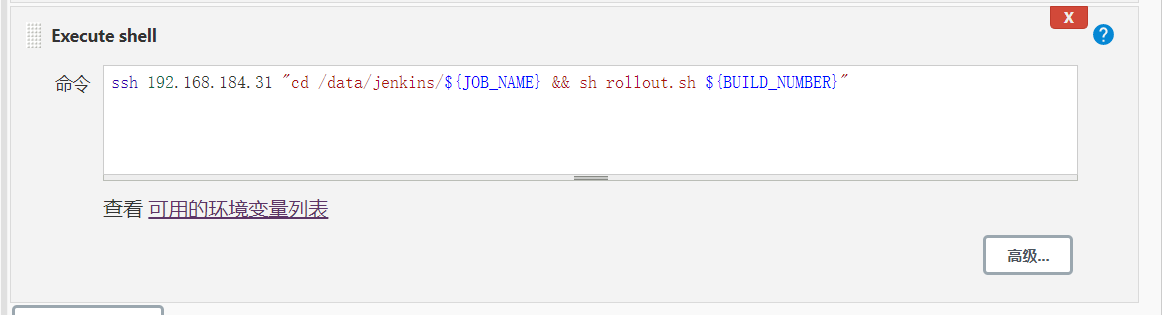

Add another build step and choose Execute shell.

The command below runs a script on the master host, which uses kubectl to deploy the pods:

ssh 192.168.184.31 "cd /data/jenkins/${JOB_NAME} && sh rollout.sh ${BUILD_NUMBER}"

My script looks like this:

[root@master fanli_admin]# cat rollout.sh
#!/bin/bash
workdir="/data/jenkins/fanli_admin"
project="fanli_admin"
job_number=$(date +%s)
cd ${workdir}
oldversion=$(cat ${project}_delpoyment.yaml | grep "image:" | awk -F ':' '{print $NF}')
newversion=$1
echo "old version is: "${oldversion}
echo "new version is: "${newversion}
## replace the image version
sed -i.bak${job_number} 's/'"${oldversion}"'/'"${newversion}"'/g' ${project}_delpoyment.yaml
## roll out the new version
kubectl apply -f ${project}_delpoyment.yaml --record=true
[root@master fanli_admin]#
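
One caveat with the sed line in rollout.sh: it substitutes the old tag everywhere in the manifest, so when the old tag is 12 it would also rewrite `failureThreshold: 12`. A narrower substitution that only touches the image line could look like the sketch below. `set_image_tag` is a hypothetical helper, not part of the original script, and it assumes the image reference ends in `:tag`.

```shell
#!/bin/bash
# Replace only the tag on "image:" lines of a manifest.
# set_image_tag is a hypothetical helper name.
set_image_tag() {
    local file="$1" tag="$2" tmp
    tmp="$(mktemp)"
    # Keep everything up to the last colon of the image line, swap what follows.
    # "/" is excluded from the tag so a registry port such as reg:5000/app is not mistaken for one.
    sed -E 's#^([[:space:]]*image:[[:space:]]*.*):[^:/]*$#\1:'"${tag}"'#' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demo on a temporary fragment of the Deployment manifest.
demo="$(mktemp)"
printf '%s\n' \
    '        image: harbor.test.cn/jenkins/django:12' \
    '          failureThreshold: 12' > "$demo"
set_image_tag "$demo" 13
cat "$demo"
```

In rollout.sh this would stand in for the sed line, called as `set_image_tag ${project}_delpoyment.yaml ${newversion}`, with the kubectl apply step unchanged.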

This relies on the root user on soft.test.cn having passwordless SSH access to root on master.test.cn:

[root@soft harbor]# ssh-keygen    # generate the key pair
[root@soft harbor]# ssh-copy-id 192.168.184.31    # copy the public key to the master

Click Save.

Run Jenkins as root:

[root@soft harbor]# vim /etc/sysconfig/jenkins

Change JENKINS_USER="jenkins" to JENKINS_USER="root".

Restart Jenkins:

[root@soft harbor]# systemctl restart jenkins

IV. Configuring K8S and deploying the Jenkins project

Configure k8s to log in to Harbor

Create the secret:

kubectl create secret docker-registry harbor-login-registry --docker-server=harbor.test.cn --docker-username=jenkins --docker-password=123456
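
To make clearer what this Secret holds, the sketch below rebuilds the JSON document that a docker-registry Secret stores under its `.dockerconfigjson` key. `docker_config_json` is an illustrative helper name, and the credentials are the ones used in the command above.

```shell
#!/bin/bash
# Build the payload stored under .dockerconfigjson in a docker-registry Secret.
# docker_config_json is a hypothetical helper name.
docker_config_json() {
    local server="$1" user="$2" pass="$3" auth
    # The auth field is base64("user:password").
    auth="$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')"
    printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
        "$server" "$user" "$pass" "$auth"
}

docker_config_json harbor.test.cn jenkins 123456
```

The Secret is namespaced, so it has to live in the same namespace as the Deployment that lists it under imagePullSecrets (default in this walkthrough).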

Deploy ingress

On the master.test.cn host:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.34.1/deploy/static/provider/baremetal/deploy.yaml

After a short while the installation is complete:

[root@master fanli_admin]# kubectl get po,svc -n ingress-nginx
NAME                                            READY   STATUS      RESTARTS   AGE
pod/ingress-nginx-admission-create-c9qfd        0/1     Completed   0          4d2h
pod/ingress-nginx-admission-patch-6bdn4         0/1     Completed   0          4d2h
pod/ingress-nginx-controller-8555c97f66-d7tlj   1/1     Running     2          4d2h

NAME                                         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
service/ingress-nginx-controller             NodePort    10.110.52.209   <none>        80:30657/TCP,443:30327/TCP   4d2h
service/ingress-nginx-controller-admission   ClusterIP   10.102.19.224   <none>        443/TCP                      4d2h

Preparation before deploying

Put fanli_admin_delpoyment.yaml, fanli_admin_svc.yaml and fanli_admin_ingress.yaml in the same directory as rollout.sh.

[root@master fanli_admin]# cat fanli_admin_delpoyment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: fanliadmin
  namespace: default
spec:
  minReadySeconds: 5
  strategy:
    # indicate which strategy we want for rolling update
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
  replicas: 2
  selector:
    matchLabels:
      app: fanli_admin
  template:
    metadata:
      labels:
        app: fanli_admin
    spec:
      imagePullSecrets:
      - name: harbor-login-registry
      terminationGracePeriodSeconds: 10
      containers:
      - name: fanliadmin
        image: harbor.test.cn/jenkins/django:26
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8000 # port reached from outside the container
          name: web
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /members
            port: 8000
          initialDelaySeconds: 60 # wait 60 seconds after the container starts before probing
          timeoutSeconds: 5
          # When a probe fails after the Pod has started, Kubernetes retries failureThreshold
          # times before giving up. Giving up on a liveness probe restarts the container;
          # giving up on a readiness probe marks the Pod as not ready. Default 3, minimum 1.
          failureThreshold: 12
        readinessProbe:
          httpGet:
            path: /members
            port: 8000
          initialDelaySeconds: 60
          timeoutSeconds: 5
          failureThreshold: 12

[root@master fanli_admin]# cat fanli_admin_svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: fanliadmin
  namespace: default
  labels:
    app: fanli_admin
spec:
  selector:
    app: fanli_admin
  type: ClusterIP
  ports:
  - name: web
    port: 8000
    targetPort: 8000

[root@master fanli_admin]# cat fanli_admin_ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: fanliadmin
spec:
  rules:
  - host: fanli.test.cn
    http:
      paths:
      - path: /
        backend:
          serviceName: fanliadmin
          servicePort: 8000

部署

jenkins 首頁點擊該項目,build now

點擊完成後就會自動部署了

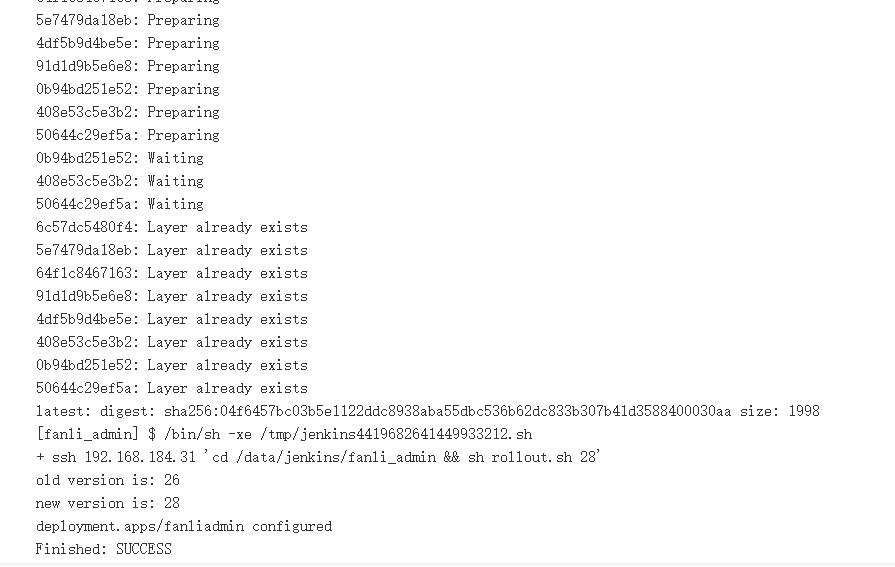

點擊控制台輸出,即可查看詳細的部署過程

看到控制台輸出success,說明已經成功了

這時候我們看k8s

[root@master fanli_admin]# kubectl get pod NAME READY STATUS RESTARTS AGE fanliadmin-5575cc56ff-pdk4r 1/1 Running 0 3m3s fanliadmin-5575cc56ff-sz2j5 1/1 Running 0 3m4s

[root@master fanli_admin]# kubectl rollout history deployment/fanliadmin deployment.apps/fanliadmin REVISION CHANGE-CAUSE 2 <none> 3 kubectl apply --filename=fanli_admin_delpoyment.yaml --record=true 4 kubectl apply --filename=fanli_admin_delpoyment.yaml --record=true 5 kubectl apply --filename=fanli_admin_delpoyment.yaml --record=true

已經部署新版本成功了

五、訪問

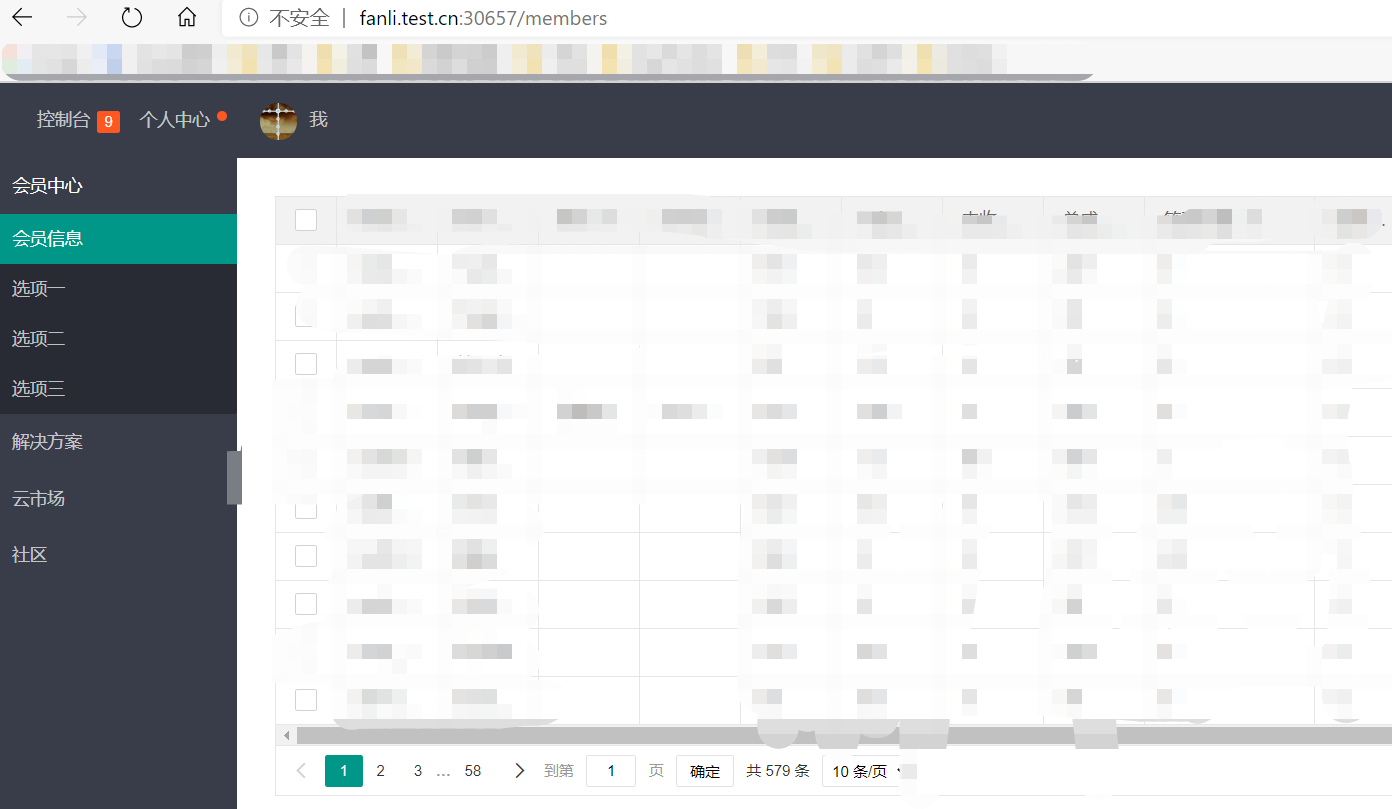

通過ingress 訪問

[root@master fanli_admin]# kubectl get pod,svc -n ingress-nginx NAME READY STATUS RESTARTS AGE pod/ingress-nginx-admission-create-c9qfd 0/1 Completed 0 4d2h pod/ingress-nginx-admission-patch-6bdn4 0/1 Completed 0 4d2h pod/ingress-nginx-controller-8555c97f66-d7tlj 1/1 Running 2 4d2h NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/ingress-nginx-controller NodePort 10.110.52.209 <none> 80:30657/TCP,443:30327/TCP 4d2h service/ingress-nginx-controller-admission ClusterIP 10.102.19.224 <none> 443/TCP 4d2h

我們可以看到,從外網訪問80埠需要訪問ingress的30657埠,443埠要訪問30327埠
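
The port mapping can be read out of the PORT(S) column mechanically. The following is a small sketch with a made-up helper name, `node_port_for`, which takes the column value and a service port.

```shell
#!/bin/bash
# Look up the NodePort mapped to a service port in a PORT(S) column value
# such as "80:30657/TCP,443:30327/TCP". node_port_for is a hypothetical helper.
node_port_for() {
    local ports="$1" svc_port="$2"
    # One mapping per line, then split "80:30657/TCP" on ':' and '/'.
    printf '%s\n' "$ports" | tr ',' '\n' | awk -F'[:/]' -v p="$svc_port" '$1 == p {print $2}'
}

node_port_for '80:30657/TCP,443:30327/TCP' 80     # prints 30657
node_port_for '80:30657/TCP,443:30327/TCP' 443    # prints 30327
```

NodePorts are assigned per cluster, so the numbers on another installation will differ from 30657 and 30327.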

The domain bound in our fanli_admin_ingress.yaml is fanli.test.cn.

Add a hosts entry for it on the Windows computer.

Then visit http://fanli.test.cn:30657/members