Installing and configuring an elasticsearch 7.5.0 + kibana-7.5.0 + cerebro-0.8.5 cluster for production, and migrating data from the old cluster to the new one with elasticsearch-migration

I. Server preparation

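Two physical servers with 128 GB of RAM each are available, so each will run two ES instances. Adding one virtual machine gives five nodes in total, so that losing one physical server (and its two nodes) does not affect the data.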
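The reasoning behind the five-node layout can be checked with a little arithmetic. The sketch below assumes all five nodes are master-eligible (the default, since the configuration that follows does not say otherwise) and uses the usual majority rule of floor(N/2) + 1; the function names are illustrative only. Data safety additionally depends on every index having at least one replica on a different host.

```shell
#!/bin/sh
# Majority quorum for N master-eligible nodes: floor(N/2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Succeeds if the cluster still has a master quorum after losing
# LOST of TOTAL master-eligible nodes.
survives_loss() {
  total=$1; lost=$2
  [ $(( total - lost )) -ge "$(quorum "$total")" ]
}

quorum 5    # prints 3
survives_loss 5 2 && echo "quorum kept after losing one physical server"
```

With four nodes on the two physical servers alone, losing one server would leave 2 of 4 nodes, which is below the quorum of 3; the fifth node on the virtual machine is what makes the layout tolerate that failure.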

II. System initialization

See the system initialization steps in my previous Kafka post: https://www.cnblogs.com/mkxfs/p/12030331.html

III. Install elasticsearch 7.5.0

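1. Elasticsearch needs a Java runtime

The 7.5.0 tarball ships with a bundled OpenJDK, which Elasticsearch uses when JAVA_HOME is not set, so the system JDK 1.8 installed here is optional: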

yum install java-1.8.0-openjdk-devel.x86_64 -y

2. Download ES 7.5.0 from the official site

cd /opt

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.5.0-linux-x86_64.tar.gz

tar -zxf elasticsearch-7.5.0-linux-x86_64.tar.gz

mv elasticsearch-7.5.0 elasticsearch9300

Create the ES data directories

mkdir -p /data/es9300

mkdir -p /data/es9301

3. Edit the ES configuration file

vim /opt/elasticsearch9300/config/elasticsearch.yml

Append at the end:

cluster.name: en-es

node.name: node-1

path.data: /data/es9300

path.logs: /opt/elasticsearch9300/logs

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9200

transport.tcp.port: 9300

cluster.routing.allocation.same_shard.host: true

cluster.initial_master_nodes: ["192.168.0.16:9300", "192.168.0.16:9301","192.168.0.17:9300", "192.168.0.17:9301","192.168.0.18:9300"]

discovery.seed_hosts: ["192.168.0.16:9300", "192.168.0.16:9301", "192.168.0.17:9300", "192.168.0.17:9301", "192.168.0.18:9300"]

# Ignored by Elasticsearch 7.x, which manages the master-election quorum itself; safe to omit

discovery.zen.minimum_master_nodes: 3

node.max_local_storage_nodes: 2

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type
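Because `network.host` is not a loopback address, Elasticsearch enforces its bootstrap checks at startup, and `bootstrap.memory_lock: true` only works if the `es` user is allowed to lock memory. The system initialization linked above presumably takes care of this; the following is a minimal pre-flight sketch for confirming it. The thresholds (65535 open files, `vm.max_map_count` of 262144, unlimited memlock) are the values from the Elasticsearch documentation, and the function names are hypothetical.

```shell
#!/bin/bash
# check_min NAME ACTUAL REQUIRED: passes if ACTUAL is "unlimited" or >= REQUIRED.
check_min() {
  name=$1; actual=$2; required=$3
  if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ] 2>/dev/null; then
    echo "OK   $name=$actual"
  else
    echo "FAIL $name=$actual (need >= $required)"
    return 1
  fi
}

# check_memlock ACTUAL: memory locking needs an unlimited memlock limit.
check_memlock() {
  if [ "$1" = "unlimited" ]; then
    echo "OK   memlock=unlimited"
  else
    echo "FAIL memlock=$1 (need unlimited)"
    return 1
  fi
}

# Run all checks against the limits of the current user (Linux only).
preflight() {
  rc=0
  check_min "open files" "$(ulimit -n)" 65535 || rc=1
  check_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 262144 || rc=1
  check_memlock "$(ulimit -l)" || rc=1
  return $rc
}
```

Saved as, say, `/opt/es-preflight.sh` (a hypothetical path) with a final line calling `preflight`, it can be run under the account that will start Elasticsearch, for example `su - es -c "bash /opt/es-preflight.sh"`, so the limits reported are that user's.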

4. Set the JVM heap size

vim /opt/elasticsearch9300/config/jvm.options

-Xms25g

-Xmx25g
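The 25g figure fits two common sizing guidelines from the Elasticsearch documentation: keep each heap below the compressed-oops cutoff (about 26 GB is described as safe on most systems), and give the heaps on a host no more than half of its RAM so the rest is left to the filesystem cache. A small sketch of that check, with a hypothetical `heap_ok` helper and the cutoff exposed as an overridable assumption:

```shell
#!/bin/sh
# Upper bound for a single heap in GB (assumption: 26 GB, the value the
# Elasticsearch docs call safe on most systems; the exact cutoff varies by JVM).
OOPS_LIMIT_GB="${OOPS_LIMIT_GB:-26}"

# heap_ok HEAP_GB INSTANCES RAM_GB
# Succeeds if each heap is within the cutoff and all heaps together
# use at most half of the host's RAM.
heap_ok() {
  heap_gb=$1; instances=$2; ram_gb=$3
  [ "$heap_gb" -le "$OOPS_LIMIT_GB" ] && [ $(( heap_gb * instances * 2 )) -le "$ram_gb" ]
}

heap_ok 25 2 128 && echo "25g x 2 instances: 50 GB heap, 78 GB left for the page cache"
```

The virtual machine's memory is not stated in this article, so the heap for the fifth node has to be sized against whatever that machine actually has.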

5. Deploy the second node on the same host

cp -r /opt/elasticsearch9300 /opt/elasticsearch9301

vim /opt/elasticsearch9301/config/elasticsearch.yml

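The copied file already contains the block appended in step 3; edit that block (do not append a second copy, since duplicate keys are rejected) so that it reads: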

cluster.name: en-es

node.name: node-2

path.data: /data/es9301

path.logs: /opt/elasticsearch9301/logs

bootstrap.memory_lock: true

network.host: 0.0.0.0

http.port: 9201

transport.tcp.port: 9301

cluster.routing.allocation.same_shard.host: true

cluster.initial_master_nodes: ["192.168.0.16:9300", "192.168.0.16:9301","192.168.0.17:9300", "192.168.0.17:9301", "192.168.0.18:9300"]

discovery.seed_hosts: ["192.168.0.16:9300", "192.168.0.16:9301", "192.168.0.17:9300", "192.168.0.17:9301", "192.168.0.18:9300"]

# Ignored by Elasticsearch 7.x, which manages the master-election quorum itself; safe to omit

discovery.zen.minimum_master_nodes: 3

node.max_local_storage_nodes: 2

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

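6. Install the remaining three nodes in the same way, taking care to give each one its own node.name and the right port numbers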
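Only a handful of settings differ from node to node. The sketch below prints them for a given node number, following the pattern of the two nodes configured above (first instance on a host uses 9200/9300, second uses 9201/9301). The names node-3 to node-5 and the `node_settings` helper are assumptions; the article only names node-1 and node-2.

```shell
#!/bin/sh
# node_settings N: print the per-node lines of elasticsearch.yml for node N.
# Nodes 1-2 live on 192.168.0.16, 3-4 on 192.168.0.17, 5 on 192.168.0.18.
node_settings() {
  n=$1
  slot=$(( (n - 1) % 2 ))     # 0 = first instance on the host, 1 = second
  tport=$(( 9300 + slot ))    # transport port
  hport=$(( 9200 + slot ))    # HTTP port
  cat <<EOF
node.name: node-$n
path.data: /data/es$tport
path.logs: /opt/elasticsearch$tport/logs
http.port: $hport
transport.tcp.port: $tport
EOF
}

# Print the blocks for the three nodes that still need to be installed.
for n in 3 4 5; do
  echo "# node-$n"
  node_settings "$n"
  echo
done
```

All other settings (cluster name, discovery hosts, CORS) are identical on every node.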

7. Start the elasticsearch service

Elasticsearch refuses to run as root, so create an es account

groupadd es

useradd es -g es

Give the es user ownership of the ES directories

chown -R es:es /opt/elasticsearch9300

chown -R es:es /opt/elasticsearch9301

chown -R es:es /data/es9300

chown -R es:es /data/es9301

Start the ES services

su - es -c "/opt/elasticsearch9300/bin/elasticsearch -d"

su - es -c "/opt/elasticsearch9301/bin/elasticsearch -d"

Check the ES logs and listening ports; if there are no errors, the nodes have started successfully.
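Beyond reading the logs, the cluster health API gives a quick confirmation that all five nodes have joined. The following is a minimal sketch that parses the single-line JSON returned by `_cluster/health` with `sed` (no `jq` assumed); `json_field` and `cluster_ok` are hypothetical helper names.

```shell
#!/bin/sh
ES_URL="${ES_URL:-http://192.168.0.16:9200}"

# json_field NAME: read JSON on stdin and print the value of a scalar field.
json_field() {
  sed -n "s/.*\"$1\":\"\{0,1\}\([^,\"}]*\)\"\{0,1\}.*/\1/p"
}

# cluster_ok EXPECTED_NODES: read _cluster/health JSON on stdin and succeed
# only if the status is green and the node count matches.
cluster_ok() {
  health=$(cat)
  status=$(printf '%s\n' "$health" | json_field status)
  nodes=$(printf '%s\n' "$health" | json_field number_of_nodes)
  echo "status=$status nodes=$nodes"
  [ "$status" = "green" ] && [ "$nodes" = "$1" ]
}

# Usage against the live cluster:
#   curl -s "$ES_URL/_cluster/health" | cluster_ok 5
#   curl -s "$ES_URL/_cat/nodes?v"
```

`_cat/nodes?v` lists each node with its address and roles, which makes a missing or misnamed node easy to spot.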

IV. Install kibana-7.5.0

1. Download kibana-7.5.0 from the official site

cd /opt

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.5.0-linux-x86_64.tar.gz

tar -zxf kibana-7.5.0-linux-x86_64.tar.gz

2. Edit the kibana configuration file

vim /opt/kibana-7.5.0-linux-x86_64/config/kibana.yml

server.host: "192.168.0.16"

3. Start kibana

Create a log directory for kibana

mkdir /opt/kibana-7.5.0-linux-x86_64/logs

Kibana is also started as the es user, so give es ownership of the kibana directory

chown -R es:es /opt/kibana-7.5.0-linux-x86_64

Start it:

su - es -c "nohup /opt/kibana-7.5.0-linux-x86_64/bin/kibana &>>/opt/kibana-7.5.0-linux-x86_64/logs/kibana.log &"

4. Open kibana in a browser

http://192.168.0.16:5601
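Kibana can take a while to come up after the start command returns, so the page may not load immediately. A minimal polling sketch follows; it assumes `curl` is installed and uses Kibana's `/api/status` endpoint, on the assumption that it answers 200 once the server is ready. `wait_for_200`, `http_code` and the `PROBE` hook are hypothetical names introduced here.

```shell
#!/bin/sh
# http_code URL: print the HTTP status code (curl prints 000 if it cannot connect).
http_code() {
  curl -s -o /dev/null -m 5 -w '%{http_code}' "$1"
}

# wait_for_200 URL [TRIES] [SLEEP_SECONDS]
# Polls URL until it answers 200 or the tries are used up.
# PROBE may name an alternative probe function (used for dry runs).
wait_for_200() {
  url=$1; tries=${2:-30}; pause=${3:-5}
  i=0
  while [ "$i" -lt "$tries" ]; do
    [ "$(${PROBE:-http_code} "$url")" = "200" ] && return 0
    i=$(( i + 1 ))
    sleep "$pause"
  done
  return 1
}

# Usage:
#   wait_for_200 http://192.168.0.16:5601/api/status && echo "kibana is up"
```

If the loop times out, `/opt/kibana-7.5.0-linux-x86_64/logs/kibana.log` is the place to look.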

V. Install the ES monitoring and management tool cerebro-0.8.5

1. Download the cerebro-0.8.5 release

cd /opt

wget https://github.com/lmenezes/cerebro/releases/download/v0.8.5/cerebro-0.8.5.tgz

tar -zxf cerebro-0.8.5.tgz

2. Edit the cerebro configuration

vim /opt/cerebro-0.8.5/conf/application.conf

hosts = [

{

host = "http://192.168.0.16:9200"

name = "en-es"

headers-whitelist = [ "x-proxy-user", "x-proxy-roles", "X-Forwarded-For" ]

}

]

3. Start cerebro

nohup /opt/cerebro-0.8.5/bin/cerebro -Dhttp.port=9000 -Dhttp.address=192.168.0.16 &>/dev/null &

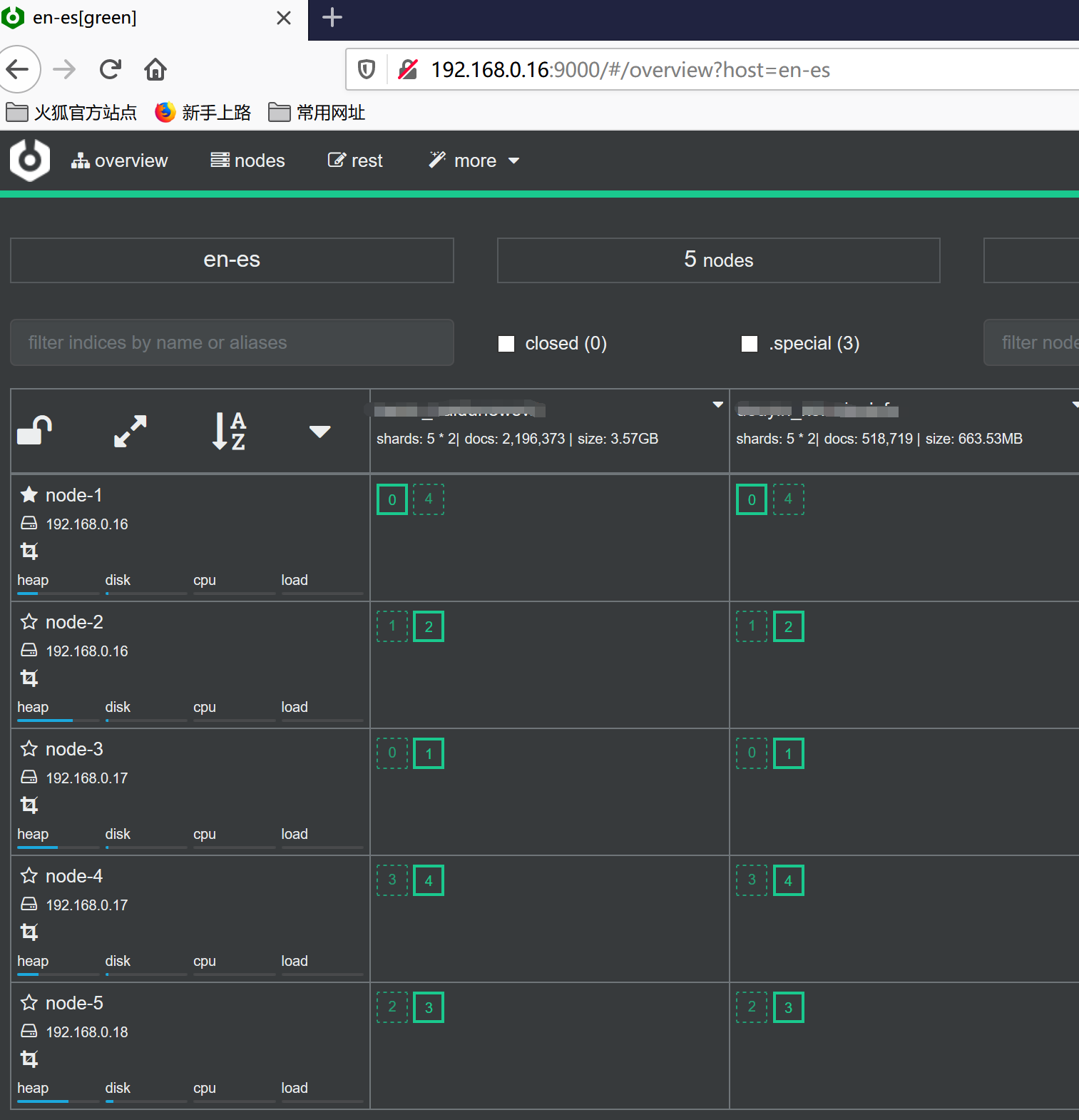

4. Open cerebro in a browser at http://192.168.0.16:9000

VI. Migrate data from the old cluster to the new one with elasticsearch-migration

1. Install elasticsearch-migration on 192.168.0.16

cd /opt

wget https://github.com/medcl/esm-v1/releases/download/v0.4.3/linux64.tar.gz

tar -zxf linux64.tar.gz

mv linux64 elasticsearch-migration

2. Stop all writes to the old cluster, then start the migration

/opt/elasticsearch-migration/esm -s http://192.168.0.66:9200 -d http://192.168.0.16:9200 -x indexname -w=5 -b=10 -c 10000 >/dev/null
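When several indices need to be moved, the same command can be driven from a list while keeping to the article's advice of migrating at most two indices at a time. This is a sketch only: it reuses exactly the flags shown above, relies on `xargs -P` (available in GNU and BSD xargs), and `migrate_indices` and the index names in the usage line are hypothetical.

```shell
#!/bin/sh
ESM="${ESM:-/opt/elasticsearch-migration/esm}"
SRC="${SRC:-http://192.168.0.66:9200}"   # old cluster
DST="${DST:-http://192.168.0.16:9200}"   # new cluster

# Reads index names from stdin, one per line, and runs one esm process
# per index with at most two running concurrently.
migrate_indices() {
  xargs -P 2 -I{} "$ESM" -s "$SRC" -d "$DST" -x {} -w=5 -b=10 -c 10000
}

# Usage:
#   printf '%s\n' index_a index_b index_c | migrate_indices >/dev/null
```

Setting `ESM=echo` before calling the function prints the commands instead of running them, which is a cheap way to review the list first.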

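3. Wait for the migration to finish. Throughput is roughly 70 million documents (about 40 GB) per hour, and migrating at most two indices at the same time is recommended.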
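Once a migration has finished, comparing document counts on both clusters is a simple sanity check. The sketch below parses the body returned by Elasticsearch's `_count` API, which has the form `{"count":N,"_shards":{...}}`; `count_of` and `same_count` are hypothetical helpers, and `indexname` is the placeholder used in the command above.

```shell
#!/bin/sh
# count_of: read a _count response on stdin and print the document count.
count_of() {
  sed -n 's/.*"count":\([0-9][0-9]*\).*/\1/p'
}

# same_count SRC_JSON DST_JSON: succeed only if both responses report
# the same, non-empty document count.
same_count() {
  s=$(printf '%s\n' "$1" | count_of)
  d=$(printf '%s\n' "$2" | count_of)
  echo "source=$s destination=$d"
  [ -n "$s" ] && [ "$s" = "$d" ]
}

# Usage:
#   same_count "$(curl -s http://192.168.0.66:9200/indexname/_count)" \
#              "$(curl -s http://192.168.0.16:9200/indexname/_count)"
```

Counts only include refreshed documents, so a check made immediately after the last bulk request may briefly lag behind on the destination.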