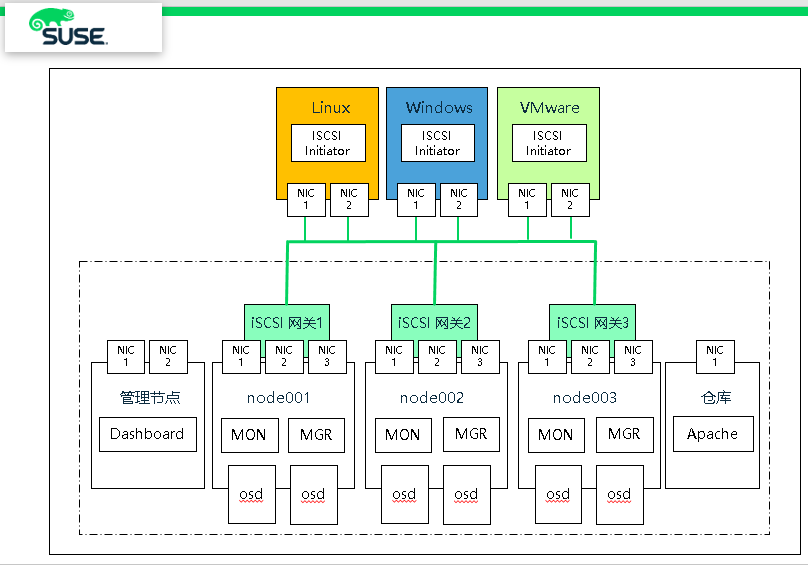

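The iSCSI gateway integrates Ceph storage with the iSCSI standard to provide a highly available (HA) iSCSI target that exports RADOS Block Device (RBD) images as SCSI disks. The iSCSI protocol lets clients (initiators) send SCSI commands to SCSI storage devices (targets) over a TCP/IP network. This allows heterogeneous clients to access the Ceph storage cluster.

Each iSCSI gateway runs the Linux IO target kernel subsystem (LIO) to provide iSCSI protocol support. LIO uses a userspace passthrough (TCMU) to interact with Ceph's librbd library and expose RBD images to iSCSI clients. With Ceph's iSCSI gateway you can run a fully integrated block storage infrastructure that has the features and benefits of a traditional storage area network (SAN).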

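Is RBD supported as a VMware ESXi datastore?

(1) At present, RBD cannot be used directly as a datastore.

(2) iSCSI can back a datastore, so the gateway can provide storage for VMware ESXi virtual machines, which makes it a cost-effective choice.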

1. Create the pool and images

(1) Create the pool

# ceph osd pool create iscsi-images 128 128 replicated

# ceph osd pool application enable iscsi-images rbd

(2) Create the images

# rbd --pool iscsi-images create --size=2048 'iscsi-gateway-image001'

# rbd --pool iscsi-images create --size=4096 'iscsi-gateway-image002'

# rbd --pool iscsi-images create --size=2048 'iscsi-gateway-image003'

# rbd --pool iscsi-images create --size=4096 'iscsi-gateway-image004'

(3) List the images

# rbd ls -p iscsi-images

iscsi-gateway-image001

iscsi-gateway-image002

iscsi-gateway-image003

iscsi-gateway-image004
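The four `rbd create` calls differ only in image name and size, so they can be generated in a loop. The following is a minimal sketch rather than part of the original procedure: the function name `create_igw_images` and the `DRY_RUN` variable are hypothetical, and the sizes are the ones used above (in MB, the default unit of `--size`). By default it only prints the commands.

```shell
# Sketch: create the four demo images in a loop.
# create_igw_images and DRY_RUN are hypothetical names, not part of Ceph.
create_igw_images() {
    pool=$1
    i=1
    for size in 2048 4096 2048 4096; do
        name=$(printf 'iscsi-gateway-image%03d' "$i")
        if [ "${DRY_RUN:-1}" = "1" ]; then
            # Dry run (the default): print the command instead of executing it.
            echo "rbd --pool $pool create --size=$size $name"
        else
            rbd --pool "$pool" create --size="$size" "$name"
        fi
        i=$((i + 1))
    done
}
```

Running `create_igw_images iscsi-images` prints the four commands for review; setting `DRY_RUN=0` on a node with an admin keyring would execute them.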

2. Install the iSCSI gateway with DeepSea

(1) To install on node001 and node002, edit the policy.cfg file

# vim /srv/pillar/ceph/proposals/policy.cfg

......

# IGW

role-igw/cluster/node00[1-2]*.sls

......

(2) Run stage 2 and stage 4

# salt-run state.orch ceph.stage.2

# salt 'node001*' pillar.items

public_network: 192.168.2.0/24

roles:

- mon

- mgr

- storage

- igw

time_server: admin.example.com

# salt-run state.orch ceph.stage.4

3. Install the iSCSI gateway manually

(1) Install the iSCSI packages on node003

# zypper -n in -t pattern ceph_iscsi

# zypper -n in tcmu-runner tcmu-runner-handler-rbd \
ceph-iscsi patterns-ses-ceph_iscsi python3-Flask python3-click python3-configshell-fb \
python3-itsdangerous python3-netifaces python3-rtslib-fb \
python3-targetcli-fb python3-urwid targetcli-fb-common

(2) On the admin node, create the key and copy it to node003

# ceph auth add client.igw.node003 mon 'allow *' osd 'allow *' mgr 'allow r'

# ceph auth get client.igw.node003

client.igw.node003

key: AQC0eotdAAAAABAASZrZH9KEo0V0WtFTCW9AHQ==

caps: [mgr] allow r

caps: [mon] allow *

caps: [osd] allow *

# ceph auth get client.igw.node003 >> /etc/ceph/ceph.client.igw.node003.keyring

# scp /etc/ceph/ceph.client.igw.node003.keyring node003:/etc/ceph

(3) Start the tcmu-runner service on node003

# systemctl start tcmu-runner.service

# systemctl enable tcmu-runner.service

(4) Create the configuration file on node003

# vim /etc/ceph/iscsi-gateway.cfg

[config]

cluster_client_name = client.igw.node003

pool = iscsi-images

trusted_ip_list = 192.168.2.42,192.168.2.40,192.168.2.41

minimum_gateways = 1

fqdn_enabled=true

# Additional API configuration options are as follows, defaults shown.

api_port = 5000

api_user = admin

api_password = admin

api_secure = false

# Log level

logger_level = WARNING
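When several gateways are installed by hand, the same file has to be written on each node with only the client name changed. As a rough sketch, the file body can be rendered by a small function instead of being typed in an editor. `render_igw_cfg` is a hypothetical helper; the keys and values simply mirror the file shown above.

```shell
# Sketch: print an iscsi-gateway.cfg body to stdout.
# render_igw_cfg is a hypothetical helper; keys and values mirror the file above.
render_igw_cfg() {
    client=$1   # e.g. client.igw.node003
    pool=$2     # e.g. iscsi-images
    ips=$3      # comma-separated trusted_ip_list
    cat <<EOF
[config]
cluster_client_name = $client
pool = $pool
trusted_ip_list = $ips
minimum_gateways = 1
fqdn_enabled = true
api_port = 5000
api_user = admin
api_password = admin
api_secure = false
logger_level = WARNING
EOF
}
```

For example, `render_igw_cfg client.igw.node003 iscsi-images 192.168.2.42,192.168.2.40,192.168.2.41` prints the configuration used in this walkthrough, which can then be redirected to `/etc/ceph/iscsi-gateway.cfg`.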

(5) Start the RBD target API service

# systemctl start rbd-target-api.service

# systemctl enable rbd-target-api.service

(6) Display the configuration

# gwcli info

HTTP mode : http

Rest API port : 5000

Local endpoint : http://localhost:5000/api

Local Ceph Cluster : ceph

2ndary API IP's : 192.168.2.42,192.168.2.40,192.168.2.41

# gwcli ls

o- / ...................................................................... [...]

o- cluster ...................................................... [Clusters: 1]

| o- ceph ......................................................... [HEALTH_OK]

| o- pools ....................................................... [Pools: 1]

| | o- iscsi-images ........ [(x3), Commit: 0.00Y/15718656K (0%), Used: 192K]

| o- topology ............................................. [OSDs: 6,MONs: 3]

o- disks .................................................... [0.00Y, Disks: 0]

o- iscsi-targets ............................ [DiscoveryAuth: None, Targets: 0]

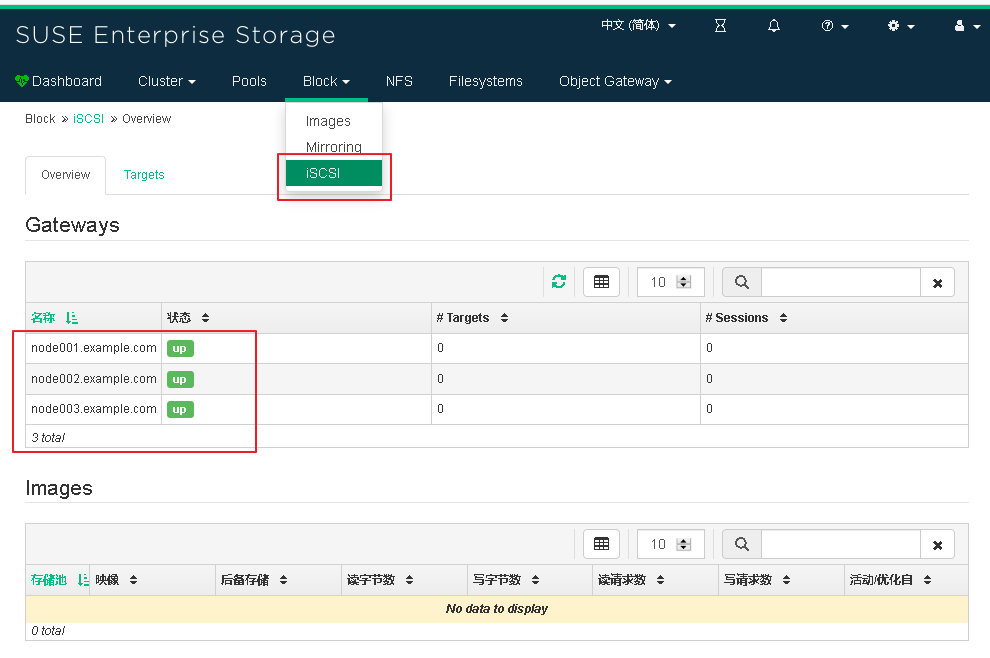

4. Add iSCSI gateways to the Dashboard

(1) On the admin node, list the iSCSI gateways known to the dashboard

admin:~ # ceph dashboard iscsi-gateway-list

{"gateways": {"node002.example.com": {"service_url": "http://admin:[email protected]:5000"},

"node001.example.com": {"service_url": "http://admin:[email protected]:5000"}}}

(2) Add an iSCSI gateway

# ceph dashboard iscsi-gateway-add http://admin:[email protected]:5000

# ceph dashboard iscsi-gateway-list

{"gateways": {"node002.example.com": {"service_url": "http://admin:[email protected]:5000"},

"node001.example.com": {"service_url": "http://admin:[email protected]:5000"},

"node003.example.com": {"service_url": "http://admin:[email protected]:5000"}}}

(3) Log in to the Dashboard to view the iSCSI gateways

5. Export RBD images via iSCSI

(1) Create the iSCSI target name

# gwcli

gwcli > /> cd /iscsi-targets

gwcli > /iscsi-targets> create iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01
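Target names follow the iSCSI Qualified Name layout `iqn.YYYY-MM.<reversed-domain>[:<identifier>]`. A name can be sanity-checked before it is typed into gwcli. The function below is only a rough syntax check under that assumption, and `is_valid_iqn` is a hypothetical helper name.

```shell
# Sketch: rough syntax check for an IQN of the form
# iqn.YYYY-MM.<reversed-domain>[:<identifier>].
# is_valid_iqn is a hypothetical helper, not a gwcli or Ceph command.
is_valid_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9][a-z0-9.-]*(:[^[:space:]]+)?$'
}
```

The function returns success for the name used in this walkthrough, `iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01`, and failure for a name with an impossible month such as `iqn.2019-13.com.example`.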

(2) Add the iSCSI gateways

gwcli > /iscsi-targets> cd iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01/gateways

/iscsi-target...tvol/gateways> create node001.example.com 172.200.50.40

/iscsi-target...tvol/gateways> create node002.example.com 172.200.50.41

/iscsi-target...tvol/gateways> create node003.example.com 172.200.50.42

/iscsi-target...ay01/gateways> ls

o- gateways ......................................................... [Up: 3/3, Portals: 3]

o- node001.example.com ............................................. [172.200.50.40 (UP)]

o- node002.example.com ............................................. [172.200.50.41 (UP)]

o- node003.example.com ............................................. [172.200.50.42 (UP)]

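Note: define each gateway by its host name exactly as configured (here, the fully qualified name). A short name is rejected:

/iscsi-target...tvol/gateways> create node002 172.200.50.41

The first gateway defined must be the local machine

(3) Add the RBD images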

/iscsi-target...tvol/gateways> cd /disks

/disks> attach iscsi-images/iscsi-gateway-image001

/disks> attach iscsi-images/iscsi-gateway-image002

(4) Map the RBD images to the target

/disks> cd /iscsi-targets/iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01/disks

/iscsi-target...teway01/disks> add iscsi-images/iscsi-gateway-image001

/iscsi-target...teway01/disks> add iscsi-images/iscsi-gateway-image002

(5) Disable ACL authentication

gwcli > /> cd /iscsi-targets/iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01/hosts

/iscsi-target...teway01/hosts> auth disable_acl

/iscsi-target...teway01/hosts> exit

(6) View the configuration

node001:~ # gwcli ls

o- / ............................................................................... [...]

o- cluster ............................................................... [Clusters: 1]

| o- ceph .................................................................. [HEALTH_OK]

| o- pools ................................................................ [Pools: 1]

| | o- iscsi-images .................. [(x3), Commit: 6G/15717248K (40%), Used: 1152K]

| o- topology ...................................................... [OSDs: 6,MONs: 3]

o- disks ................................................................ [6G, Disks: 2]

| o- iscsi-images .................................................. [iscsi-images (6G)]

| o- iscsi-gateway-image001 ............... [iscsi-images/iscsi-gateway-image001 (2G)]

| o- iscsi-gateway-image002 ............... [iscsi-images/iscsi-gateway-image002 (4G)]

o- iscsi-targets ..................................... [DiscoveryAuth: None, Targets: 1]

o- iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01 .............. [Gateways: 3]

o- disks ................................................................ [Disks: 2]

| o- iscsi-images/iscsi-gateway-image001 .............. [Owner: node001.example.com]

| o- iscsi-images/iscsi-gateway-image002 .............. [Owner: node002.example.com]

o- gateways .................................................. [Up: 3/3, Portals: 3]

| o- node001.example.com ...................................... [172.200.50.40 (UP)]

| o- node002.example.com ...................................... [172.200.50.41 (UP)]

| o- node003.example.com ...................................... [172.200.50.42 (UP)]

o- host-groups ........................................................ [Groups : 0]

o- hosts .................................................... [Hosts: 0: Auth: None]

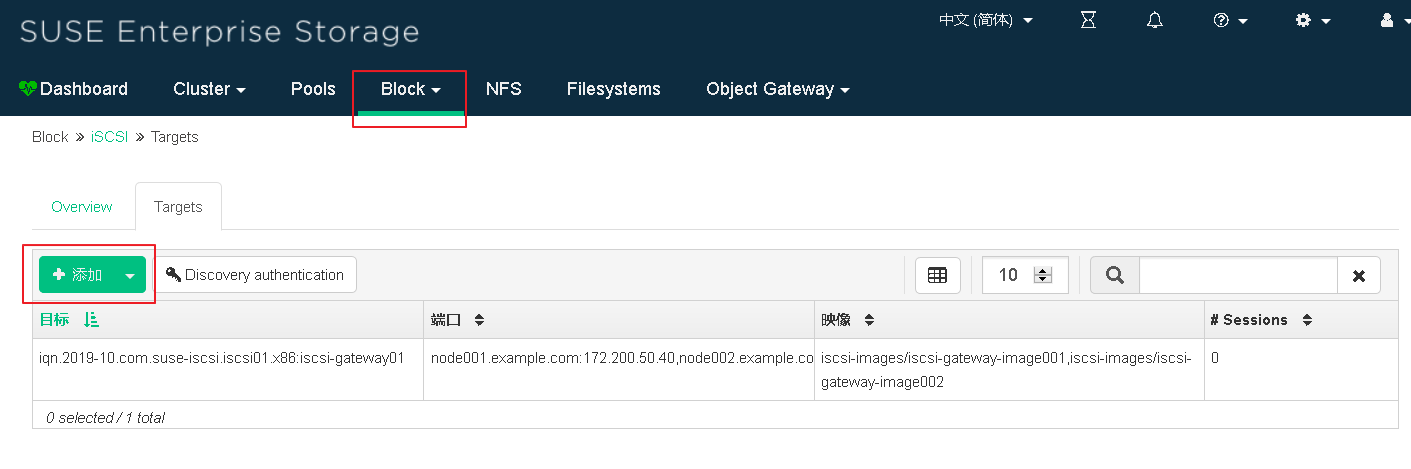

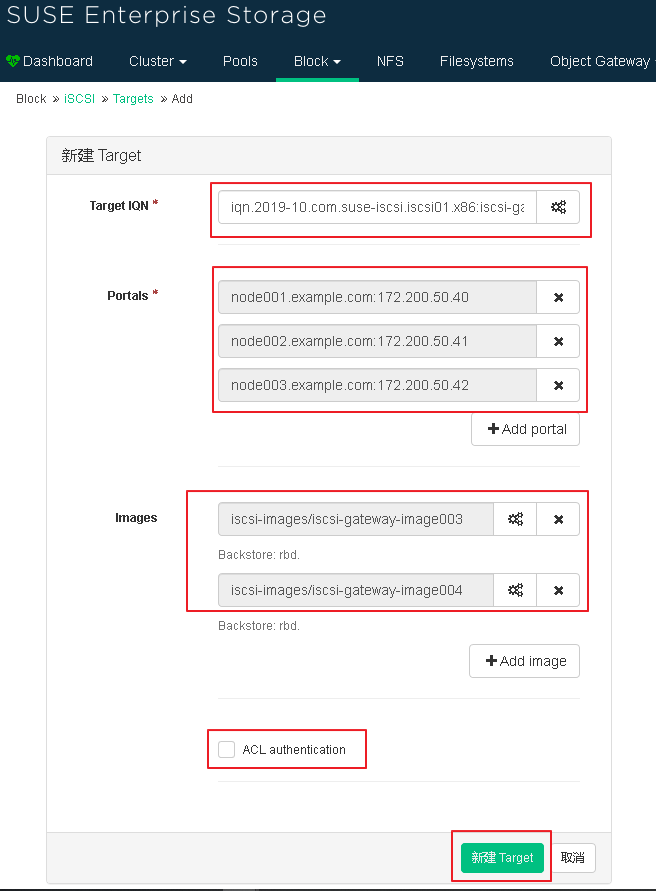

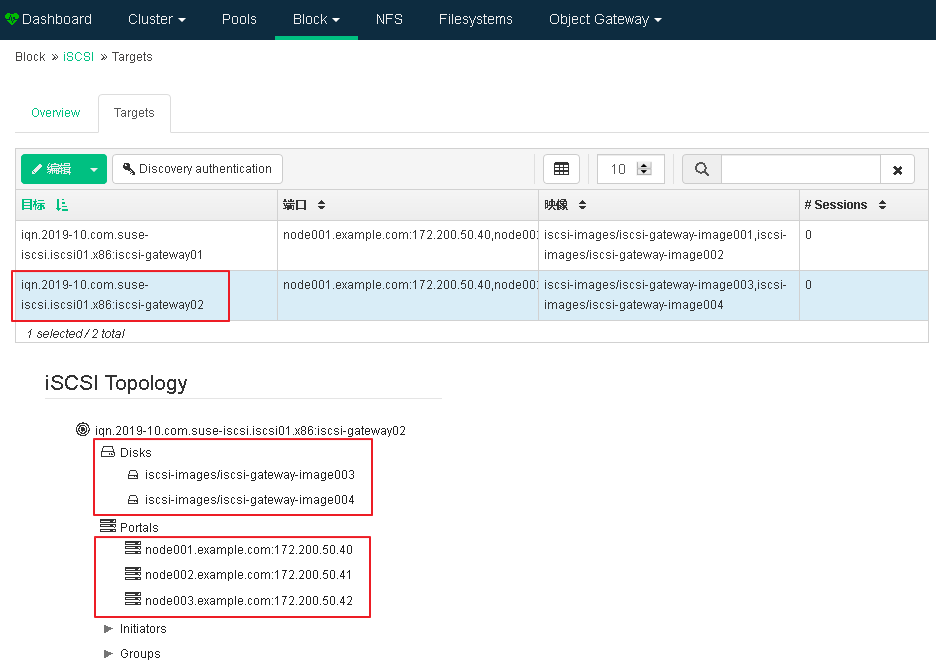

6. Export RBD images from the Dashboard

(1) Add an iSCSI target

(2) Enter the target IQN, then add the portals and images

(3) View the newly added iSCSI target

7. Access from a Linux client

(1) Start the iscsid service

- SLES or RHEL

# systemctl start iscsid.service

# systemctl enable iscsid.service

- Debian or Ubuntu

# systemctl start open-iscsi

(2) Discover the targets

# iscsiadm -m discovery -t st -p 172.200.50.40

172.200.50.40:3260,1 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01

172.200.50.41:3260,2 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01

172.200.50.42:3260,3 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01

172.200.50.40:3260,1 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway02

172.200.50.41:3260,2 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway02

172.200.50.42:3260,3 iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway02
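Each discovery record has the form `<ip>:<port>,<tpgt> <iqn>`, so one discovery against a single gateway reports every portal of every target. As a small sketch, the portals of one target can be extracted from that output with awk. `portals_for_target` is a hypothetical helper that reads the discovery output on standard input.

```shell
# Sketch: list the portals that advertise a given target.
# Reads `iscsiadm -m discovery -t st -p <ip>` output on stdin; each record is
# "<ip>:<port>,<tpgt> <iqn>". portals_for_target is a hypothetical helper.
portals_for_target() {
    awk -v iqn="$1" '$2 == iqn { split($1, a, ","); print a[1] }' | sort -u
}
```

Piping the discovery output into `portals_for_target iqn.2019-10.com.suse-iscsi.iscsi01.x86:iscsi-gateway01` would list the three gateway portals of that target, one per line.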

(3) Log in to the targets

# iscsiadm -m node -p 172.200.50.40 --login

# iscsiadm -m node -p 172.200.50.41 --login

# iscsiadm -m node -p 172.200.50.42 --login

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 25G 0 disk

├─sda1 8:1 0 509M 0 part /boot

└─sda2 8:2 0 24.5G 0 part

  ├─vg00-lvswap 254:0 0 2G 0 lvm [SWAP]

  └─vg00-lvroot 254:1 0 122.5G 0 lvm /

sdb 8:16 0 100G 0 disk

└─vg00-lvroot 254:1 0 122.5G 0 lvm /

sdc 8:32 0 2G 0 disk

sdd 8:48 0 2G 0 disk

sde 8:64 0 4G 0 disk

sdf 8:80 0 4G 0 disk

sdg 8:96 0 2G 0 disk

sdh 8:112 0 4G 0 disk

sdi 8:128 0 2G 0 disk

sdj 8:144 0 4G 0 disk

sdk 8:160 0 2G 0 disk

sdl 8:176 0 2G 0 disk

sdm 8:192 0 4G 0 disk

sdn 8:208 0 4G 0 disk

(4) If the lsscsi utility is installed, you can use it to enumerate the SCSI devices available on the system:

# lsscsi

[1:0:0:0] cd/dvd NECVMWar VMware SATA CD01 1.00 /dev/sr0

[30:0:0:0] disk VMware, VMware Virtual S 1.0 /dev/sda

[30:0:1:0] disk VMware, VMware Virtual S 1.0 /dev/sdb

[33:0:0:0] disk SUSE RBD 4.0 /dev/sdc

[33:0:0:1] disk SUSE RBD 4.0 /dev/sde

[34:0:0:2] disk SUSE RBD 4.0 /dev/sdd

[34:0:0:3] disk SUSE RBD 4.0 /dev/sdf

[35:0:0:0] disk SUSE RBD 4.0 /dev/sdg

[35:0:0:1] disk SUSE RBD 4.0 /dev/sdh

[36:0:0:2] disk SUSE RBD 4.0 /dev/sdi

[36:0:0:3] disk SUSE RBD 4.0 /dev/sdj

[37:0:0:0] disk SUSE RBD 4.0 /dev/sdk

[37:0:0:1] disk SUSE RBD 4.0 /dev/sdm

[38:0:0:2] disk SUSE RBD 4.0 /dev/sdl

[38:0:0:3] disk SUSE RBD 4.0 /dev/sdn

(5) Set up multipath

# zypper in multipath-tools

# modprobe dm-multipath

# systemctl start multipathd.service

# systemctl enable multipathd.service

# multipath -ll

36001405863b0b3975c54c5f8d1ce0e01 dm-3 SUSE,RBD

size=4.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=50 status=active

| `- 35:0:0:1 sdh 8:112 active ready running <=== only one path active

`-+- policy='service-time 0' prio=10 status=enabled

  |- 33:0:0:1 sde 8:64 active ready running

  `- 37:0:0:1 sdm 8:192 active ready running

3600140529260bf41c294075beede0c21 dm-2 SUSE,RBD

size=2.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=50 status=active

| `- 33:0:0:0 sdc 8:32 active ready running

`-+- policy='service-time 0' prio=10 status=enabled

  |- 35:0:0:0 sdg 8:96 active ready running

  `- 37:0:0:0 sdk 8:160 active ready running

360014055d00387c82104d338e81589cb dm-4 SUSE,RBD

size=2.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=50 status=active

| `- 38:0:0:2 sdl 8:176 active ready running

`-+- policy='service-time 0' prio=10 status=enabled

  |- 34:0:0:2 sdd 8:48 active ready running

  `- 36:0:0:2 sdi 8:128 active ready running

3600140522ec3f9612b64b45aa3e72d9c dm-5 SUSE,RBD

size=4.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

|-+- policy='service-time 0' prio=50 status=active

| `- 34:0:0:3 sdf 8:80 active ready running

`-+- policy='service-time 0' prio=10 status=enabled

  |- 36:0:0:3 sdj 8:144 active ready running

  `- 38:0:0:3 sdn 8:208 active ready running

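(5) Edit the multipath configuration file

# vim /etc/multipath.conf

defaults {

user_friendly_names yes

}

devices {

device {

vendor "(LIO-ORG|SUSE)"

product "RBD"

path_grouping_policy "multibus" # all valid paths go into a single priority group

path_checker "tur" # issue the TEST UNIT READY command to the device

features "0"

hardware_handler "1 alua" # module that performs hardware-specific actions when switching path groups or handling I/O errors

prio "alua"

failback "immediate"

rr_weight "uniform" # all paths have the same weight

no_path_retry 12 # after all paths fail, retry 12 times, 5 seconds apart

rr_min_io 100 # number of I/O requests routed to a path before switching to the next path in the current path group

}

}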
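The `no_path_retry` comment implies a time window: with 12 retries spaced 5 seconds apart, I/O is queued for roughly 12 × 5 = 60 seconds after the last path fails, and only then returns errors. The arithmetic is sketched below, assuming the retry spacing equals multipathd's `polling_interval` and that it is left at its default of 5 seconds. `queue_window_seconds` is a hypothetical helper.

```shell
# Sketch: approximate time multipathd keeps queueing I/O after all paths fail.
# queue_window_seconds is a hypothetical helper; the second argument is the
# polling interval in seconds and defaults to 5 (assumed unchanged).
queue_window_seconds() {
    retries=$1
    interval=${2:-5}
    echo $((retries * interval))
}
```

With the values from the configuration above, `queue_window_seconds 12` prints `60`.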

# systemctl stop multipathd.service

# systemctl start multipathd.service

(6) Check the multipath status

# multipath -ll

mpathd (3600140522ec3f9612b64b45aa3e72d9c) dm-5 SUSE,RBD

size=4.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=23 status=active

  |- 34:0:0:3 sdf 8:80 active ready running <=== all paths active

  |- 36:0:0:3 sdj 8:144 active ready running

  `- 38:0:0:3 sdn 8:208 active ready running

mpathc (360014055d00387c82104d338e81589cb) dm-4 SUSE,RBD

size=2.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=23 status=active

  |- 34:0:0:2 sdd 8:48 active ready running

  |- 36:0:0:2 sdi 8:128 active ready running

  `- 38:0:0:2 sdl 8:176 active ready running

mpathb (36001405863b0b3975c54c5f8d1ce0e01) dm-3 SUSE,RBD

size=4.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=23 status=active

  |- 33:0:0:1 sde 8:64 active ready running

  |- 35:0:0:1 sdh 8:112 active ready running

  `- 37:0:0:1 sdm 8:192 active ready running

mpatha (3600140529260bf41c294075beede0c21) dm-2 SUSE,RBD

size=2.0G features='2 queue_if_no_path retain_attached_hw_handler' hwhandler='1 alua' wp=rw

`-+- policy='service-time 0' prio=23 status=active

  |- 33:0:0:0 sdc 8:32 active ready running

  |- 35:0:0:0 sdg 8:96 active ready running

  `- 37:0:0:0 sdk 8:160 active ready running
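With three gateways, every map should report three usable paths. Rather than reading the tree by eye, the count can be taken from the `multipath -ll` output. The following is a rough sketch that assumes the output layout shown in this walkthrough (a header line containing `dm-N`, then one line per path ending in `active ready running`). `count_active_paths` is a hypothetical helper.

```shell
# Sketch: count paths in state "active ready running" per multipath map.
# Reads `multipath -ll` output on stdin and prints "<map> <count>" per map.
# count_active_paths is a hypothetical helper; it assumes the layout shown above.
count_active_paths() {
    awk '
        # Map header, e.g. "mpatha (3600...) dm-2 SUSE,RBD": start a new map.
        / dm-[0-9]+ / { if (name != "") print name, n; name = $1; n = 0; next }
        # Path line, e.g. "|- 33:0:0:0 sdc 8:32 active ready running".
        /[0-9]+:[0-9]+:[0-9]+:[0-9]+ +sd[a-z]+ .*active ready running/ { n++ }
        END { if (name != "") print name, n }
    '
}
```

Used as `multipath -ll | count_active_paths`, a map that reports fewer paths than there are gateways points to a portal that is not logged in or a path that has failed.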

(7) Show the current device-mapper layout

# dmsetup ls --tree

mpathd (254:5)

├─ (8:208)

├─ (8:144)

└─ (8:80)

mpathc (254:4)

├─ (8:176)

├─ (8:128)

└─ (8:48)

mpathb (254:3)

├─ (8:192)

├─ (8:112)

└─ (8:64)

mpatha (254:2)

├─ (8:160)

├─ (8:96)

└─ (8:32)

vg00-lvswap (254:0)

└─ (8:2)

vg00-lvroot (254:1)

├─ (8:16)

└─ (8:2)

(8) View the result on the client with the yast iscsi-client tool

Other common iSCSI operations (client side)

(1) List all targets

# iscsiadm -m node

(2) Log in to all targets

# iscsiadm -m node -L all

(3) Log in to a specific target

# iscsiadm -m node -T iqn.... -p 172.29.88.62 --login

(4) Show the stored configuration of a target

# iscsiadm -m node -o show -T iqn.2000-01.com.synology:rackstation.exservice-bak

(5) Check the current iSCSI session status

# iscsiadm -m session

iscsiadm: No active sessions.

(No iSCSI target is currently connected.)

(6) Log out of all targets

# iscsiadm -m node -U all

(7) Log out of a specific target

# iscsiadm -m node -T iqn... -p 172.29.88.62 --logout

(8) Delete all node records

# iscsiadm -m node --op delete

(9) Delete a specific node record (stored under /var/lib/iscsi/nodes; log out of the session first)

# iscsiadm -m node -o delete -T iqn.2012-01.cn.nayun:test-01

(10) Delete a discovery record (stored under /var/lib/iscsi/send_targets)

# iscsiadm --mode discovery -o delete -p 172.29.88.62:3260