曾經有人問我,為什麼要學習Python!我說:"因為我想學習爬蟲!""那你為什麼學習爬蟲呢?""因為可以批量下載很多很多妹子圖!"其實我都是為了學習,都是為了讓自己能更好的掌握Python,練手的項目!Emmmmm....沒錯,是為了學習 除了Python還能用什麼語言寫爬蟲? C,C++。高效率, ...

曾經有人問我,為什麼要學習Python!

我說:"因為我想學習爬蟲!"

"那你為什麼學習爬蟲呢?"

"因為可以批量下載很多很多妹子圖!"

其實我都是為了學習,都是為了讓自己能更好的掌握Python,練手的項目!

Emmmmm....沒錯,是為了學習

除了Python還能用什麼語言寫爬蟲?

- C,C++。高效率,快速,適合通用搜索引擎做全網爬取。缺點,開發慢,寫起來又臭又長,例如:天網搜索源代碼。

- 腳本語言:Perl, Python, Java, Ruby。簡單,易學,良好的文本處理能方便網頁內容的細緻提取,但效率往往不高,適合對少量網站的聚焦爬取

- C#?(貌似信息管理的人比較喜歡的語言)

那為什麼最終選擇Python?

我只想說:人生苦短,我用Python!

那怎麼爬取美膩的小姐姐照片呢?

那怎麼爬取美膩的小姐姐照片呢?其實爬蟲不難,主要就那麼幾個步驟

1、打開網頁,獲取源碼

2、獲取圖片

3、保存圖片地址與下載圖片

準備開車!

用到的模塊

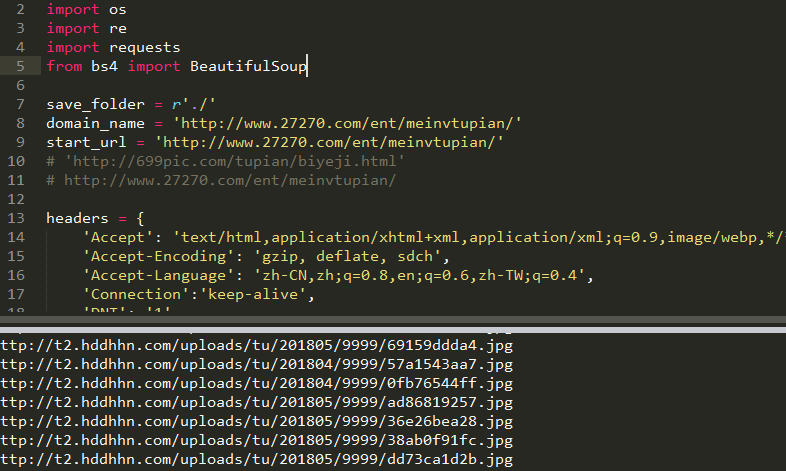

1 import os 2 import re 3 import requests 4 from bs4 import BeautifulSoup

模塊安裝

1 pip install requests 2 Pip install bs4

直接上主菜

1 # -*- coding: utf-8 -*- 2 import os 3 import re 4 import requests 5 from bs4 import BeautifulSoup 6 7 save_folder = r'./' 8 domain_name = 'http://www.27270.com/ent/meinvtupian/' 9 start_url = 'http://www.27270.com/ent/meinvtupian/' 10 # 'http://699pic.com/tupian/biyeji.html' 11 # http://www.27270.com/ent/meinvtupian/ 12 13 headers = { 14 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8', 15 'Accept-Encoding': 'gzip, deflate, sdch', 16 'Accept-Language': 'zh-CN,zh;q=0.8,en;q=0.6,zh-TW;q=0.4', 17 'Connection':'keep-alive', 18 'DNT': '1', 19 'Host': 'www.kongjie.com', 20 'Referer': 'http://www.kongjie.com/home.php?mod=space&do=album&view=all&order=hot&page=1', 21 'Upgrade-Insecure-Requests': '1', 22 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36' 23 } 24 uid_picid_pattern = re.compile(r'.*?uid=(\d+).*?picid=(\d+).*?') 25 26 27 28 def save_img(image_url, uid, picid): 29 """ 30 保存圖片到全局變數save_folder文件夾下,圖片名字為“uid_picid.ext”。 31 其中,uid是用戶id,picid是空姐網圖片id,ext是圖片的擴展名。 32 Python學習交流群:125240963,群內每天分享乾貨,包括最新的python企業案例學習資料和零基礎入門教程,歡迎各位小伙伴入群學習交流 33 """ 34 try: 35 response = requests.get(image_url, stream=True) 36 # 獲取文件擴展名 37 file_name_prefix, file_name_ext = os.path.splitext(image_url) 38 save_path = os.path.join(save_folder, uid + '_' + picid + file_name_ext) 39 with open(save_path, 'wb') as fw: 40 fw.write(response.content) 41 print(uid + '_' + picid + file_name_ext, 'image saved!', image_url) 42 except IOError as e: 43 print('save error!', e,"111", image_url,"222") 44 45 46 def save_images_in_album(album_url, count): 47 """ 48 進入空姐網用戶的相冊,開始一張一張的保存相冊中的圖片。 49 """ 50 # 解析出uid和picid,用於存儲圖片的名字 51 response = requests.get(album_url) 52 soup = BeautifulSoup(response.text, 'lxml') 53 image_div = soup.select('.articleV4Body img') 54 55 for image in image_div: 56 print(image.attrs['src']) 57 try: 58 response = requests.get(image.attrs['src']) 59 save_path = os.path.join(save_folder, str(count) + '.jpg') 60 with open(save_path, 'wb') as fw: 61 fw.write(response.content) 62 except IOError as e: 63 print('save error!', e, "222") 64 65 66 67 68 # next_image = soup.select_one('div.pns.mlnv.vm.mtm.cl a.btn[title="下一張"]') 69 # if not next_image: 70 # return 71 # # 解析下一張圖片的picid,防止重覆爬取圖片,不重覆則抓取 72 # next_image_url = next_image['href'] 73 # next_uid_picid_match = uid_picid_pattern.search(next_image_url) 74 # if not next_uid_picid_match: 75 # return 76 # next_uid = next_uid_picid_match.group(1) 77 # next_picid = next_uid_picid_match.group(2) 78 # # if not redis_con.hexists('kongjie', next_uid + ':' + next_picid): 79 # save_images_in_album(next_image_url) 80 81 82 def parse_album_url(url): 83 """ 84 解析出相冊url,然後進入相冊爬取圖片 85 """ 86 response = requests.get(url) 87 soup = BeautifulSoup(response.text, 'lxml') 88 people_list = soup.select('li a.tit') 89 count = 0 90 for people in people_list: 91 save_images_in_album(people.attrs['href'], count) 92 count = count + 1 93 # break 94 95 # # 爬取下一頁 96 # next_page = soup.select_one('a.nxt') 97 # if next_page: 98 # parse_album_url(next_page['href']) 99 100 if __name__ == '__main__': 101 parse_album_url(start_url)

運行結果

小姐姐的照片

看了小姐姐的照片,我甚至欣慰:果然沒有選錯語言