
1. The Scrapy framework

Scrapy is a Python framework for building web crawlers. It combines data parsing, data processing and data storage in a single crawling framework.

2. Installing Scrapy

1. Install the dependency packages

```bash
yum install gcc libffi-devel python-devel openssl-devel -y
yum install libxslt-devel -y
```

2. Install Scrapy

```bash
pip install scrapy
pip install twisted==13.1.0
```

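Note: Scrapy and Twisted have compatibility problems. If the installed Twisted version is too new, running `scrapy startproject project_name` fails with an error. Installing `twisted==13.1.0` fixes it. This pin matches the environment used in this article: Scrapy 1.5.0 on Python 2.7.5, as shown in the log in section 3.5.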
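The fix depends on which Twisted release actually ended up installed, so it can help to check the version before running `scrapy startproject`. Below is a minimal sketch, assuming Python 3.8+ for `importlib.metadata`. That module does not exist in the Python 2.7 environment used here; there, `pip show twisted` gives the same information. The helper name `installed_version` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name):
    """Return the installed version string of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Print the versions that matter for the Scrapy/Twisted compatibility issue.
    for name in ("Scrapy", "Twisted"):
        print(name, installed_version(name) or "not installed")
```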

3. Scraping data with Scrapy and saving it to CSV

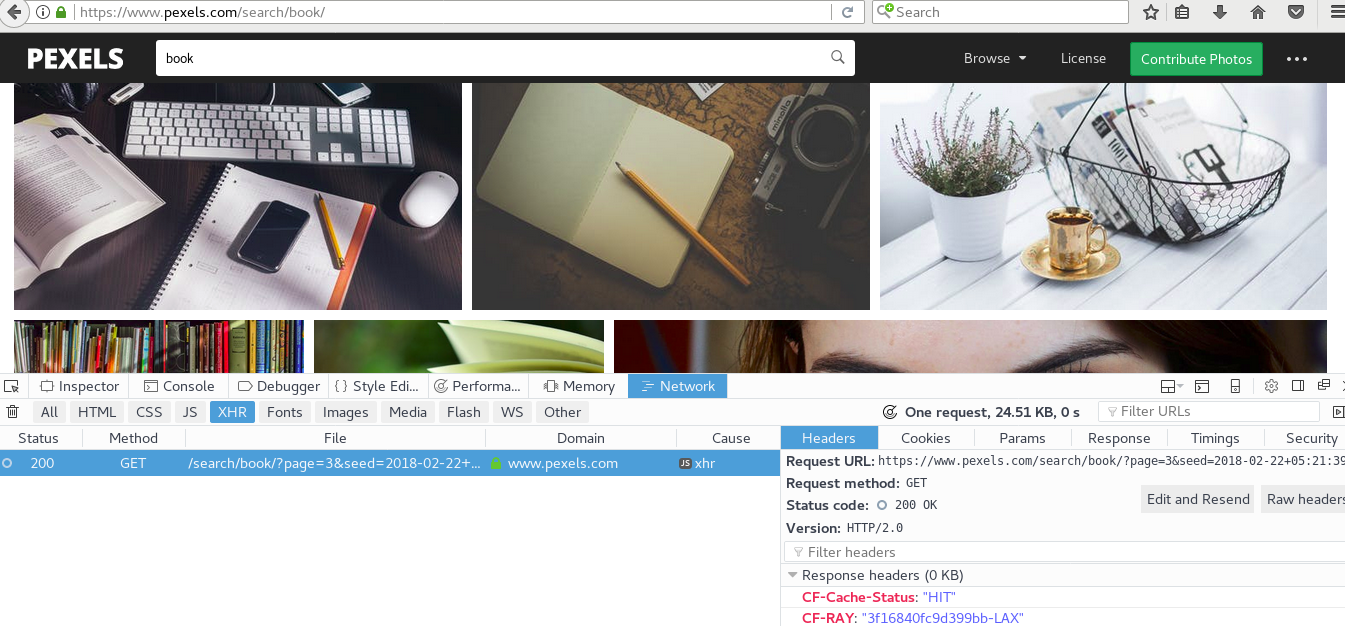

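3.1. Crawl target: collect data on Jianshu's popular collections. The site is https://www.jianshu.com/recommendations/collections, and the "Hot" tab is the page we need to crawl.

The page loads its content asynchronously with AJAX. Open the developer tools (F12 → Network → XHR) and page through the list. This reveals the request URL https://www.jianshu.com/recommendations/collections?page=2&order_by=hot. Changing the number after `page=` fetches the other pages, as shown in the figure.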
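Only the `page` query parameter changes between pages, so the list of page URLs can be generated instead of typed by hand. Below is a minimal sketch using the standard library's `urllib.parse.urlencode`. The function name `collection_page_urls` and the example page range are illustrative assumptions.

```python
from urllib.parse import urlencode

BASE_URL = "https://www.jianshu.com/recommendations/collections"


def collection_page_urls(first_page, last_page, order_by="hot"):
    """Build the AJAX page URLs for pages first_page..last_page (inclusive)."""
    return [
        "{}?{}".format(BASE_URL, urlencode({"page": page, "order_by": order_by}))
        for page in range(first_page, last_page + 1)
    ]


if __name__ == "__main__":
    for url in collection_page_urls(2, 4):
        print(url)
```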

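3.2. Fields to scrape

For each collection we scrape four fields:

- the collection name
- the collection description
- the number of articles it contains
- the number of followers

Scrapy uses XPath to extract the required data. While writing the spider, you can test each expression by hand with lxml's `xpath` first. Once it returns the right data, copy it into the Scrapy code. The difference is that Scrapy returns selector objects, so you must call `extract()` to pull the actual data out of them.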
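The "check the XPath by hand first" step can also be tried offline on a saved fragment. The sketch below swaps lxml for the standard library's `xml.etree.ElementTree`, so it runs without extra packages.

ElementTree supports only a subset of XPath and has no `text()`. The hypothetical helper `text_nodes` imitates `text()` by collecting an element's own text plus the tail text of its children.

The HTML fragment is a hypothetical, simplified stand-in for the real page. It is shaped to match the relative paths used in the spider below. In Scrapy, the same paths end in `/text()` and are followed by `extract()`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup for one collection card; the live page differs.
SAMPLE = """
<html><body>
  <div class="col-xs-8">
    <div>
      <a href="/c/example"><h4> Example name </h4><p> Example description </p></a>
      <div><a href="/c/example">120篇文章</a> · 3456人关注</div>
    </div>
  </div>
</body></html>
"""


def text_nodes(element):
    """Imitate XPath text(): the element's own text plus the tail of each child."""
    nodes = [element.text] + [child.tail for child in element]
    return [node for node in nodes if node is not None]


def parse_collections(markup):
    """Apply the spider's relative paths to every div with class col-xs-8."""
    root = ET.fromstring(markup.strip())
    rows = []
    for card in root.iterfind(".//div[@class='col-xs-8']"):
        name = text_nodes(card.find("div/a/h4"))[0].strip()
        description = text_nodes(card.find("div/a/p"))[0].strip()
        articles = text_nodes(card.find("div/div/a"))[0].strip().replace("篇文章", "")
        followers = (text_nodes(card.find("div/div"))[0].strip()
                     .replace("人关注", "").replace("· ", ""))
        rows.append((name, description, articles, followers))
    return rows


if __name__ == "__main__":
    print(parse_collections(SAMPLE))
```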

3.3 Creating the crawler project

```
[root@HappyLau jianshu_hot_topic]# scrapy startproject jianshu_hot_topic
# The project directory structure is as follows:
[root@HappyLau python]# tree jianshu_hot_topic
jianshu_hot_topic
├── jianshu_hot_topic
│   ├── __init__.py
│   ├── __init__.pyc
│   ├── items.py
│   ├── items.pyc
│   ├── middlewares.py
│   ├── pipelines.py
│   ├── pipelines.pyc
│   ├── settings.py
│   ├── settings.pyc
│   └── spiders
│       ├── collection.py
│       ├── collection.pyc
│       ├── __init__.py
│       ├── __init__.pyc
│       ├── jianshu_hot_topic_spider.py   # created manually; holds the data-extraction logic
│       └── jianshu_hot_topic_spider.pyc
└── scrapy.cfg

2 directories, 16 files
[root@HappyLau python]#
```

3.4 Code

1. items.py: defines the fields to be scraped

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy
from scrapy import Field


class JianshuHotTopicItem(scrapy.Item):
    '''
    Inherits the attributes and methods of the parent class scrapy.Item;
    this class defines the fields of the data to be scraped.
    '''
    collection_name = Field()             # collection name
    collection_description = Field()      # collection description
    collection_article_count = Field()    # number of articles in the collection
    collection_attention_count = Field()  # number of followers
```

2. spiders/jianshu_hot_topic_spider.py: implements the data-extraction logic using XPath

```python
# -*- coding: utf-8 -*-
import scrapy

from jianshu_hot_topic.items import JianshuHotTopicItem


class JianshuHotTopicSpider(scrapy.Spider):
    '''
    Crawl Jianshu's "hot" collections and extract specific fields from each page.
    '''

    name = "jianshu_hot_topic"
    # Pages 2-10 of the AJAX endpoint, listed up front instead of being
    # re-yielded from every parse() call.
    start_urls = ['https://www.jianshu.com/recommendations/collections?page={}&order_by=hot'.format(i)
                  for i in range(2, 11)]

    def parse(self, response):
        '''
        @params: response, the downloaded page; extract the specific fields from it.
        '''
        for collection in response.xpath('//div[@class="col-xs-8"]'):
            # A fresh item per collection, so filling in the next collection
            # does not modify an item that was already yielded.
            item = JianshuHotTopicItem()
            # extract_first(default=u'') avoids an IndexError when a node is missing.
            item['collection_name'] = collection.xpath(
                'div/a/h4/text()').extract_first(default=u'').strip()
            item['collection_description'] = collection.xpath(
                'div/a/p/text()').extract_first(default=u'').strip()
            item['collection_article_count'] = collection.xpath(
                'div/div/a/text()').extract_first(default=u'').strip().replace(u'篇文章', u'')
            item['collection_attention_count'] = collection.xpath(
                'div/div/text()').extract_first(default=u'').strip().replace(u'人关注', u'').replace(u'· ', u'')
            yield item
        # No time.sleep() here: it would block Scrapy's event loop. Use
        # DOWNLOAD_DELAY in settings.py to space out requests instead.
```

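3. pipelines.py: defines how the data is stored. The storage logic lives here, so the data can go to a MySQL database, a MongoDB database, plain files, CSV, Excel and so on. The example below stores it as CSV: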

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import csv


class JianshuHotTopicPipeline(object):

    def open_spider(self, spider):
        # Open the CSV once per crawl and close it at the end (Python 3 syntax:
        # newline='' is what csv expects; utf-8 lets Chinese text be written).
        self.f = open('/root/zhuanti.csv', 'a', newline='', encoding='utf-8')
        self.writer = csv.writer(self.f)

    def close_spider(self, spider):
        self.f.close()

    def process_item(self, item, spider):
        self.writer.writerow((item['collection_name'], item['collection_description'],
                              item['collection_article_count'], item['collection_attention_count']))
        return item
```

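4. Enable the pipeline in settings.py. The value 300 sets the pipeline's order: Scrapy runs pipelines with lower values first, conventionally in the 0-1000 range.

```python
ITEM_PIPELINES = {
    'jianshu_hot_topic.pipelines.JianshuHotTopicPipeline': 300,
}
```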
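Pausing with `time.sleep()` inside a spider callback blocks Scrapy's whole event loop. Scrapy's built-in throttling settings give a polite delay between requests without blocking. Below is a minimal sketch of extra lines for settings.py. The values are assumptions, chosen to roughly match a 2-7 second pause between requests.

```python
# settings.py (fragment): throttle requests without blocking Scrapy's event loop.
# DOWNLOAD_DELAY is the base delay in seconds between requests to the same site;
# with RANDOMIZE_DOWNLOAD_DELAY enabled, Scrapy waits a random 0.5x-1.5x of it
# (here roughly 2-6 seconds).
DOWNLOAD_DELAY = 4
RANDOMIZE_DOWNLOAD_DELAY = True
# Optionally send only one request at a time to the site.
CONCURRENT_REQUESTS_PER_DOMAIN = 1
```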

3.5 Running the Scrapy spider

Go back to the directory where the Scrapy project was created and run `scrapy crawl spider_name`, where spider_name is the spider's `name` attribute. For example:

```
[root@HappyLau jianshu_hot_topic]# pwd
/root/python/jianshu_hot_topic
[root@HappyLau jianshu_hot_topic]# scrapy crawl jianshu_hot_topic
2018-02-24 19:12:23 [scrapy.utils.log] INFO: Scrapy 1.5.0 started (bot: jianshu_hot_topic)
2018-02-24 19:12:23 [scrapy.utils.log] INFO: Versions: lxml 3.2.1.0, libxml2 2.9.1, cssselect 1.0.3, parsel 1.4.0, w3lib 1.19.0, Twisted 13.1.0, Python 2.7.5 (default, Aug 4 2017, 00:39:18) - [GCC 4.8.5 20150623 (Red Hat 4.8.5-16)], pyOpenSSL 0.13.1 (OpenSSL 1.0.1e-fips 11 Feb 2013), cryptography 1.7.2, Platform Linux-3.10.0-693.el7.x86_64-x86_64-with-centos-7.4.1708-Core
2018-02-24 19:12:23 [scrapy.crawler] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'jianshu_hot_topic.spiders', 'SPIDER_MODULES': ['jianshu_hot_topic.spiders'], 'ROBOTSTXT_OBEY': True, 'USER_AGENT': 'Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0', 'BOT_NAME': 'jianshu_hot_topic'}
```

The scraped data can then be checked in /root/zhuanti.csv.
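Section 4 describes an 'ascii' error when writing Chinese text under Python 2. Under Python 3, which current Scrapy releases require, the usual fix is to give `open()` an explicit encoding.

Below is a minimal sketch. The helper names `write_rows` and `read_rows` are hypothetical. Using `utf-8-sig` is an assumed choice: it adds a byte-order mark so that spreadsheet programs such as Excel detect the file as UTF-8.

```python
import csv
import os
import tempfile


def write_rows(path, rows):
    """Write rows (tuples of str) to a CSV file encoded as UTF-8 with a BOM."""
    # newline='' lets the csv module manage line endings itself;
    # 'utf-8-sig' prepends the byte-order mark.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(rows)


def read_rows(path):
    """Read the CSV back; 'utf-8-sig' strips the byte-order mark again."""
    with open(path, newline="", encoding="utf-8-sig") as f:
        return [tuple(row) for row in csv.reader(f)]


if __name__ == "__main__":
    # Illustrative sample row, not scraped data.
    path = os.path.join(tempfile.mkdtemp(), "zhuanti.csv")
    write_rows(path, [("示例专题", "示例描述", "120", "3456")])
    print(read_rows(path))
```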

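4. Problems encountered

1. Incompatible Twisted version: a too-new version had been installed. Installing Twisted 13.1.0 fixed it.

2. Chinese text could not be written, and an 'ascii' error was raised. Setting Python's default encoding to utf8 fixed it, as shown below. This workaround applies to Python 2 only. To take effect it must run inside the Scrapy process itself, for example at the top of pipelines.py, not in a separate interactive shell.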

```python
>>> import sys
>>> sys.getdefaultencoding()
'ascii'
>>> reload(sys)
<module 'sys' (built-in)>
>>> sys.setdefaultencoding('utf8')
>>> sys.getdefaultencoding()
'utf8'
```

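3. The spider could not fetch data from the site because of the request headers. Adding a USER_AGENT variable to settings.py fixed it, for example:

```python
USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0"
```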
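Before editing settings.py, one way to confirm that the header is the culprit is to request the page outside Scrapy with an explicit User-Agent. Below is a minimal standard-library sketch with `urllib.request`. `build_request` is a hypothetical helper, and the code only builds the request object; actually sending it needs network access.

```python
import urllib.request

USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64; rv:52.0) Gecko/20100101 Firefox/52.0"
URL = "https://www.jianshu.com/recommendations/collections?page=2&order_by=hot"


def build_request(url, user_agent=USER_AGENT):
    """Create a GET request that carries an explicit User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


if __name__ == "__main__":
    req = build_request(URL)
    # urllib stores header names capitalized, hence "User-agent".
    print(req.get_header("User-agent"))
    # urllib.request.urlopen(req, timeout=10) would send it (network access required).
```

In Scrapy, `USER_AGENT` in settings.py applies to every request. A single request can instead pass `headers={'User-Agent': ...}` to `scrapy.Request`.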

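While using Scrapy you may find that a run fails or produces unexpected results. Scrapy's logs are very detailed, and reading them together with the code, plus some searching online, is usually enough to solve the problem.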