
1 Introduction to kubeadm

1.1 Overview

See "Appendix 003. Deploying Kubernetes with Kubeadm".

1.2 kubeadm Features

See "Appendix 003. Deploying Kubernetes with Kubeadm".

2 Deployment Planning

2.1 Node Planning

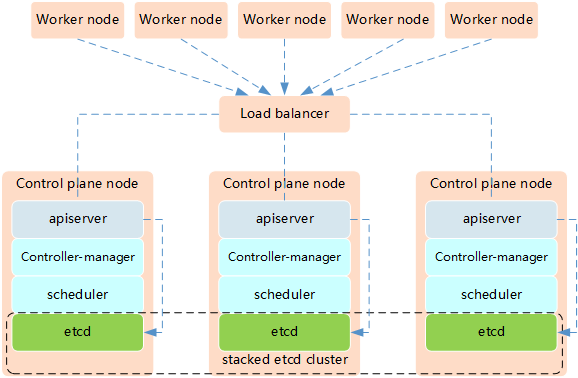

HA architecture two: a dedicated etcd cluster that is not co-located with the master nodes.

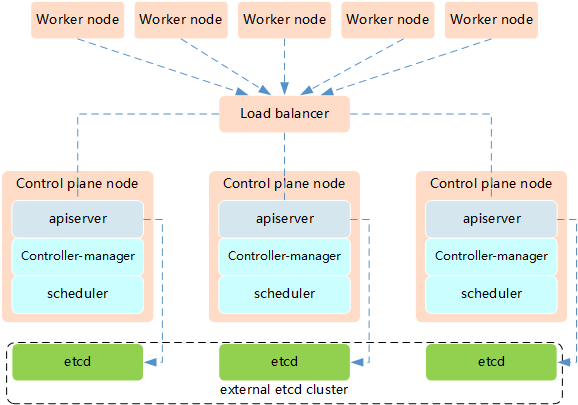


Explanation:

Both approaches provide control-plane redundancy and so make the cluster highly available. They differ as follows:


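- Stacked etcd (etcd co-located with the masters):
  - Requires fewer machines.
  - Simple to deploy and easy to manage.
  - Easy to scale out horizontally.
  - Higher risk: if one host goes down, the cluster loses a master and an etcd member at the same time, which significantly reduces its redundancy.
- External etcd (deployed separately):
  - Requires more machines. Because an etcd cluster should have an odd number of members, this topology needs at least 6 hosts for the control plane alone (3 masters plus 3 etcd members).
  - More complex to deploy, because the etcd cluster and the master cluster must be managed separately.
  - Decouples the control plane from etcd, so the cluster is lower-risk and more robust. Losing a single master or etcd node has very little impact on the cluster.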
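The odd-number rule comes from etcd's majority quorum. A cluster of n members needs floor(n/2)+1 members alive to keep accepting writes. The small sketch below shows why a 4-member cluster tolerates no more failures than a 3-member one. `etcd_quorum` and `etcd_fault_tolerance` are hypothetical helper names used only for illustration.

```bash
# Majority quorum for an etcd cluster of n members: floor(n/2) + 1.
etcd_quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# Members that may fail while a quorum survives: n - quorum.
etcd_fault_tolerance() {
  local n=$1
  echo $(( n - (n / 2 + 1) ))
}

# Print a small table for cluster sizes 1..5.
for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

With 3 etcd members one failure is tolerated. Adding a fourth member raises the quorum without raising the tolerance, which is why 3 etcd hosts plus 3 masters is the practical minimum for the external topology.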

2.2 Initial Preparation

```bash
[root@k8smaster01 ~]# vi k8sinit.sh
```

```bash
#!/bin/bash
# Modify Author: xhy
# Modify Date: 2019-06-23 22:19
# Version:
#***************************************************************#
# Initialize the machine. This needs to be executed on every machine.

# Add host domain name.
cat >> /etc/hosts << EOF
172.24.8.71 k8smaster01
172.24.8.72 k8smaster02
172.24.8.73 k8smaster03
172.24.8.74 k8snode01
172.24.8.75 k8snode02
172.24.8.76 k8snode03
EOF

# Add docker user
useradd -m docker

# Disable the SELinux.
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Turn off and disable the firewalld.
systemctl stop firewalld
systemctl disable firewalld

# Load br_netfilter first: the net.bridge.* keys below only exist once it is loaded.
modprobe br_netfilter

# Modify related kernel parameters & Disable the swap.
# Note: net.ipv4.tcp_tw_recycle was removed in kernel 4.12, so it is rejected after the 5.4 upgrade below.
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
net.ipv6.conf.all.disable_ipv6 = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf >&/dev/null
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Add ipvs modules
# nf_conntrack_ipv4 was merged into nf_conntrack in kernel 4.19, so fall back to it on the 5.4 kernel.
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4 2>/dev/null || modprobe -- nf_conntrack
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
bash /etc/sysconfig/modules/ipvs.modules

# Install rpm
yum install -y conntrack git ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget gcc gcc-c++ make openssl-devel

# Install Docker Compose
sudo curl -L "https://github.com/docker/compose/releases/download/1.25.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
sudo chmod +x /usr/local/bin/docker-compose

# Update kernel
rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
rpm -Uvh https://www.elrepo.org/elrepo-release-7.0-4.el7.elrepo.noarch.rpm
yum --disablerepo="*" --enablerepo="elrepo-kernel" install -y kernel-ml-5.4.1-1.el7.elrepo
sed -i 's/^GRUB_DEFAULT=.*/GRUB_DEFAULT=0/' /etc/default/grub
grub2-mkconfig -o /boot/grub2/grub.cfg
yum update -y

# Reboot the machine.
reboot
```

Tip: Some features may require a newer kernel, so the script above upgrades the kernel to 5.4.
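kubeadm's preflight checks fail while swap is enabled, so it is worth confirming after the reboot that the `sed` above really commented out every swap entry. The following is a minimal sketch. `active_swap_entries` is a hypothetical helper, and it assumes a standard fstab layout where the third field is the filesystem type.

```bash
# Print fstab entries that still mount swap (i.e. lines not commented out).
# Usage: active_swap_entries [FILE]   (FILE defaults to /etc/fstab)
active_swap_entries() {
  local fstab=${1:-/etc/fstab}
  # Skip comment lines; the third whitespace-separated field is the filesystem type.
  awk '!/^[[:space:]]*#/ && NF >= 3 && $3 == "swap"' "$fstab"
}
```

On a node, `active_swap_entries` should print nothing. `cat /proc/swaps` should show only its header line once `swapoff -a` has taken effect.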

2.3 SSH Trust Configuration

To make it easier to distribute files and run commands remotely, this walkthrough sets up SSH trust from the master node to the other nodes.

```bash
[root@k8smaster01 ~]# ssh-keygen -f ~/.ssh/id_rsa -N ''
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster01
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster02
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8smaster03
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode01
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode02
[root@k8smaster01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@k8snode03
```

Tip: This only needs to be done on the master node.
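The six `ssh-copy-id` calls can also be driven from the hosts entries that k8sinit.sh appended to /etc/hosts. This is a sketch under the assumption that every node name starts with `k8s`, as in this plan. `k8s_hosts` and `copy_key_to_all` are hypothetical helper names.

```bash
# List cluster hostnames (names starting with "k8s") from a hosts-format file.
k8s_hosts() {
  local hosts_file=${1:-/etc/hosts}
  # Ignore comment lines; fields 2..NF of a hosts entry are names/aliases.
  awk '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i ~ /^k8s/) print $i }' "$hosts_file" | sort -u
}

# Copy the root public key to every listed node (prompts for each password once).
copy_key_to_all() {
  local host
  for host in $(k8s_hosts "$@"); do
    ssh-copy-id -i ~/.ssh/id_rsa.pub "root@${host}"
  done
}
```

Running `copy_key_to_all` on k8smaster01 after `ssh-keygen` covers the same six hosts. The `sort -u` also removes duplicates if k8sinit.sh was run more than once and appended the entries twice.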

3 Cluster Deployment

3.1 Installing Docker

```bash
[root@k8smaster01 ~]# yum -y update
[root@k8smaster01 ~]# yum -y install yum-utils device-mapper-persistent-data lvm2
[root@k8smaster01 ~]# yum-config-manager \
--add-repo \
http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@k8smaster01 ~]# yum list docker-ce --showduplicates | sort -r    # list the available versions
[root@k8smaster01 ~]# yum -y install docker-ce-18.09.9-3.el7.x86_64    # kubeadm 1.15 is not validated against Docker newer than 18.09
[root@k8smaster01 ~]# mkdir -p /etc/docker
[root@k8smaster01 ~]# cat > /etc/docker/daemon.json <<EOF    # let systemd manage cgroups
{
  "registry-mirrors": ["https://dbzucv6w.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF
```

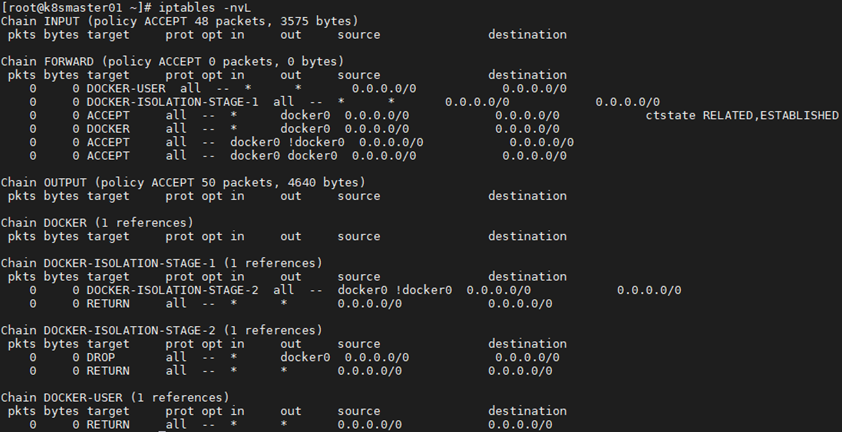

```bash
[root@k8smaster01 ~]# systemctl restart docker
[root@k8smaster01 ~]# systemctl enable docker
[root@k8smaster01 ~]# iptables -nvL    # confirm that the default policy of the FORWARD chain in the filter table is ACCEPT
```
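Docker (1.13 and later) may set the FORWARD chain's default policy to DROP, which breaks pod-to-pod traffic across nodes. The check above can be scripted as follows. This is a sketch: `forward_policy` and `ensure_forward_accept` are hypothetical helpers that parse `iptables -nL` / `-nvL` output.

```bash
# Read `iptables -nL` (or -nvL) output on stdin and print the FORWARD chain's default policy.
forward_policy() {
  # Header looks like "Chain FORWARD (policy DROP)" or "Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)".
  awk '$1 == "Chain" && $2 == "FORWARD" { gsub(/[()]/, "", $4); print $4; exit }'
}

# Switch the policy to ACCEPT if it is anything else (not persistent across reboots).
ensure_forward_accept() {
  if [ "$(iptables -nL | forward_policy)" != "ACCEPT" ]; then
    iptables -P FORWARD ACCEPT
  fi
}
```

`iptables -P` only changes the running ruleset. Docker may reset the policy when it restarts, so re-check after a reboot or a Docker restart.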

3.2 Related Component Packages

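The following packages must be installed on every machine:

- kubeadm: the command used to bootstrap the cluster;
- kubelet: runs on every node in the cluster and starts pods, containers, and so on;
- kubectl: the command-line tool for communicating with the cluster.

kubeadm does not install or manage kubelet or kubectl, so you must make sure their versions meet the requirements of the Kubernetes control plane that kubeadm installs. Mismatched versions can cause unexpected errors or problems. For details on installing these components, see "Appendix 001. kubectl Introduction and Usage".

Tip: All Master and Worker nodes need the steps above.

For the component versions compatible with Kubernetes 1.15, see: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.15.md.

3.3 Installation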

```bash
[root@k8smaster01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo    # configure the yum repository
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
```

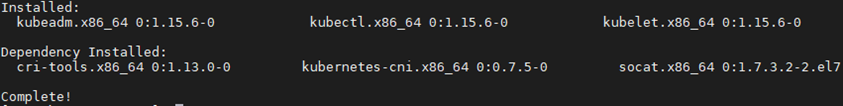

```bash
[root@k8smaster01 ~]# yum search kubelet --showduplicates    # list the available versions
[root@k8smaster01 ~]# yum install -y kubeadm-1.15.6-0.x86_64 kubelet-1.15.6-0.x86_64 kubectl-1.15.6-0.x86_64 --disableexcludes=kubernetes
```

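Note: three dependencies are installed along with these packages: cri-tools, kubernetes-cni and socat.

- socat: a dependency of kubelet;
- cri-tools: command-line tools for the CRI (Container Runtime Interface);
- kubernetes-cni: the standard CNI network plugin binaries (installed under /opt/cni/bin).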


```bash
[root@k8smaster01 ~]# systemctl enable kubelet
```

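Tip: All Master and Worker nodes need the steps above. There is no need to start kubelet yet, because it is started automatically during initialization. If you start it now it will report errors, which can be safely ignored.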
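Because kubeadm does not manage kubelet or kubectl, a quick check that all three binaries report the same version can catch a mismatched package before `kubeadm init`. This is a minimal sketch. `normalize_version` and `versions_match` are hypothetical helpers, and they compare full x.y.z versions, which is stricter than the official skew policy.

```bash
# Reduce "v1.15.6", "Kubernetes v1.15.6" or "Client Version: v1.15.6" to "1.15.6".
normalize_version() {
  echo "$1" | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# Succeed only if every argument normalizes to the same x.y.z version.
versions_match() {
  local first v
  first=$(normalize_version "$1")
  [ -n "$first" ] || return 1
  shift
  for v in "$@"; do
    [ "$(normalize_version "$v")" = "$first" ] || return 1
  done
}
```

On a node the check could be run as `versions_match "$(kubeadm version -o short)" "$(kubelet --version)" "$(kubectl version --client --short)" && echo OK`.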

3 Deploying the HA Components I

3.1 Installing Keepalived

```bash
[root@k8smaster01 ~]# wget https://www.keepalived.org/software/keepalived-2.0.19.tar.gz
[root@k8smaster01 ~]# tar -zxvf keepalived-2.0.19.tar.gz
[root@k8smaster01 ~]# cd keepalived-2.0.19/
[root@k8smaster01 keepalived-2.0.19]# ./configure --sysconfdir=/etc --prefix=/usr/local/keepalived
[root@k8smaster01 keepalived-2.0.19]# make && make install
[root@k8smaster01 keepalived-2.0.19]# systemctl enable keepalived && systemctl start keepalived
```

Tip: All Master nodes need the steps above.

3.2 Creating the Configuration Files

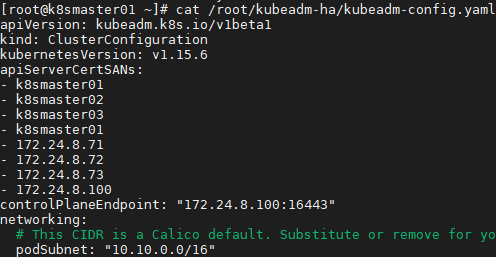

```bash
[root@k8smaster01 ~]# git clone https://github.com/cookeem/kubeadm-ha    # fetch the HA auto-configuration scripts from GitHub
[root@k8smaster01 ~]# vi /root/kubeadm-ha/kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.15.6    # the version to install
……
podSubnet: "10.10.0.0/16"    # pod network CIDR and mask
……
```

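Tip: To pull the Kubernetes initialization images from a mirror inside China, append the following parameter to kubeadm-config.yaml. This is not needed if the images were downloaded in advance as described in step 4.1:

```yaml
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
```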


```bash
[root@k8smaster01 ~]# cd kubeadm-ha/
[root@k8smaster01 kubeadm-ha]# vi create-config.sh
# master keepalived virtual ip address
export K8SHA_VIP=172.24.8.100
# master01 ip address
export K8SHA_IP1=172.24.8.71
# master02 ip address
export K8SHA_IP2=172.24.8.72
# master03 ip address
export K8SHA_IP3=172.24.8.73
# master keepalived virtual ip hostname
export K8SHA_VHOST=k8smaster01
# master01 hostname
export K8SHA_HOST1=k8smaster01
# master02 hostname
export K8SHA_HOST2=k8smaster02
# master03 hostname
export K8SHA_HOST3=k8smaster03
# master01 network interface name
export K8SHA_NETINF1=eth0
# master02 network interface name
export K8SHA_NETINF2=eth0
# master03 network interface name
export K8SHA_NETINF3=eth0
# keepalived auth_pass config
export K8SHA_KEEPALIVED_AUTH=412f7dc3bfed32194d1600c483e10ad1d
# calico reachable ip address
export K8SHA_CALICO_REACHABLE_IP=172.24.8.2
# kubernetes CIDR pod subnet
export K8SHA_CIDR=10.10.0.0

[root@k8smaster01 kubeadm-ha]# ./create-config.sh
```

Explanation: All Master nodes need the steps above. After create-config.sh runs, the following configuration files are generated automatically:

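- kubeadm-config.yaml: the kubeadm initialization configuration file, in the root directory (./) of the kubeadm-ha repository
- keepalived: the keepalived configuration files, in /etc/keepalived on each master node
- nginx-lb: the nginx-lb load-balancer configuration, in /root/nginx-lb on each master node
- calico.yaml: the Calico network add-on manifest, in ./calico in the kubeadm-ha repository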
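A typo in the addresses of create-config.sh propagates into every generated file listed above, so it can be worth validating the variables before running the script. The following is a sketch. `valid_ipv4` and `check_ha_addresses` are hypothetical helpers that read the `K8SHA_*` variables after the `export` lines have been sourced into the current shell.

```bash
# Succeed if $1 is a dotted-quad IPv4 address with every octet in 0-255.
valid_ipv4() {
  local ip=$1 octet
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  local -a octets
  [[ $ip =~ $re ]] || return 1
  IFS=. read -r -a octets <<< "$ip"
  for octet in "${octets[@]}"; do
    (( 10#$octet <= 255 )) || return 1   # 10# avoids octal parsing of e.g. "08"
  done
}

# Validate the VIP and master addresses used by create-config.sh.
check_ha_addresses() {
  local ip
  for ip in "$K8SHA_VIP" "$K8SHA_IP1" "$K8SHA_IP2" "$K8SHA_IP3"; do
    valid_ipv4 "$ip" || { echo "invalid IPv4 address: '$ip'" >&2; return 1; }
  done
  # The keepalived VIP must be a free address, not one of the masters' own IPs.
  case "$K8SHA_VIP" in
    "$K8SHA_IP1"|"$K8SHA_IP2"|"$K8SHA_IP3")
      echo "K8SHA_VIP must differ from every master IP" >&2; return 1 ;;
  esac
}
```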

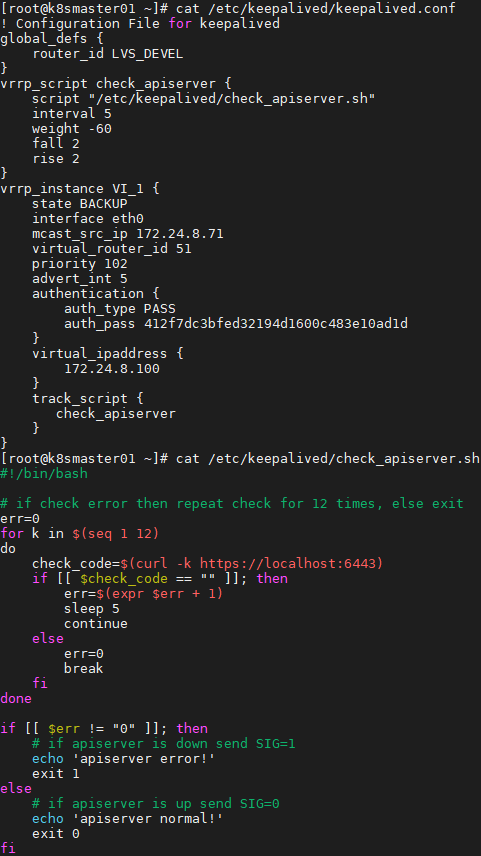

3.3 Starting Keepalived

```bash
[root@k8smaster01 ~]# cat /etc/keepalived/keepalived.conf
[root@k8smaster01 ~]# cat /etc/keepalived/check_apiserver.sh
```

```bash
[root@k8smaster01 ~]# systemctl restart keepalived.service
[root@k8smaster01 ~]# systemctl status keepalived.service
[root@k8smaster01 ~]# ping 172.24.8.100
```

Tip: All Master nodes need the steps above.
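`ping` only shows that some node answers on the VIP. To see which master currently holds it, the address list of each master can be inspected. Exactly one master should hold the VIP at any time. This is a sketch. `has_vip` is a hypothetical helper that parses the one-line-per-address output of `ip -o -4 addr show`.

```bash
# Read `ip -o -4 addr show` output on stdin; succeed if IPv4 address $1 is assigned.
has_vip() {
  local vip=$1
  awk -v vip="$vip" '
    { for (i = 1; i < NF; i++)
        if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == vip) found = 1 } }
    END { exit !found }'
}
```

On each master, `ip -o -4 addr show | has_vip 172.24.8.100 && echo "VIP is on $(hostname)"` reports whether that node currently holds the keepalived VIP.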

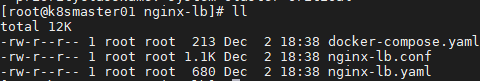

3.4 Starting Nginx

After create-config.sh runs, the nginx-lb configuration files are automatically copied to /root/nginx-lb on every master node.

```bash
[root@k8smaster01 ~]# cd /root/nginx-lb/
[root@k8smaster01 nginx-lb]# docker-compose up -d    # start nginx-lb with docker-compose
[root@k8smaster01 nginx-lb]# docker-compose ps       # check that nginx-lb is running
```

Tip: All Master nodes need the steps above.
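Besides `docker-compose ps`, one can confirm that the load balancer is actually listening. The join commands in section 4.1 point at the VIP on port 16443, so that port is assumed here. `listening_on` is a hypothetical helper that parses `ss -lnt` output.

```bash
# Read `ss -lnt` output on stdin; succeed if some socket listens on TCP port $1.
listening_on() {
  local port=$1
  # Column 4 is "Local Address:Port", e.g. "*:16443", "0.0.0.0:16443" or "[::]:16443".
  awk -v p=":$port" '
    $1 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }'
}
```

On each master, `ss -lnt | listening_on 16443 && echo "nginx-lb is listening"` should succeed once the container is up.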

4 Initializing the Cluster: Master

4.1 Initializing on the Master

```bash
[root@k8smaster01 ~]# kubeadm --kubernetes-version=v1.15.6 config images list    # list the required images
k8s.gcr.io/kube-apiserver:v1.15.6
k8s.gcr.io/kube-controller-manager:v1.15.6
k8s.gcr.io/kube-scheduler:v1.15.6
k8s.gcr.io/kube-proxy:v1.15.6
k8s.gcr.io/pause:3.1
k8s.gcr.io/etcd:3.3.10
k8s.gcr.io/coredns:1.3.1
```

```bash
[root@k8smaster01 ~]# kubeadm --kubernetes-version=v1.15.6 config images pull    # pull the images Kubernetes needs
```
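Another way around an unreachable k8s.gcr.io is to pull each image from a mirror and re-tag it with the name kubeadm expects. The following is a sketch under stated assumptions. `mirror_image` and `pull_via_mirror` are hypothetical helpers, and the mirror is assumed to publish the same image names and tags. Use the same mirror path you would put in `imageRepository`.

```bash
# Map an image such as k8s.gcr.io/pause:3.1 onto a mirror registry path.
mirror_image() {
  local image=$1 mirror=$2
  echo "${mirror%/}/${image#k8s.gcr.io/}"
}

# Pull every image kubeadm needs from the mirror and tag it with its k8s.gcr.io name.
pull_via_mirror() {
  local version=$1 mirror=$2 image src
  kubeadm config images list --kubernetes-version="$version" | while read -r image; do
    src=$(mirror_image "$image" "$mirror")
    docker pull "$src" && docker tag "$src" "$image"
  done
}
```

Example usage (the mirror path is an assumption): `pull_via_mirror v1.15.6 registry.cn-hangzhou.aliyuncs.com/google_containers`.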

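Note:

Because the Kubernetes images may not be pullable from within China, it is recommended to pull them in advance through a VPN or similar, then upload them to all master nodes.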

```bash
[root@VPN ~]# docker pull k8s.gcr.io/kube-apiserver:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-controller-manager:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-scheduler:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/kube-proxy:v1.15.6
[root@VPN ~]# docker pull k8s.gcr.io/pause:3.1
[root@VPN ~]# docker pull k8s.gcr.io/etcd:3.3.10
[root@VPN ~]# docker pull k8s.gcr.io/coredns:1.3.1
[root@k8smaster01 ~]# docker load -i kube-apiserver.tar
[root@k8smaster01 ~]# docker load -i kube-controller-manager.tar
[root@k8smaster01 ~]# docker load -i kube-scheduler.tar
[root@k8smaster01 ~]# docker load -i kube-proxy.tar
[root@k8smaster01 ~]# docker load -i pause.tar
[root@k8smaster01 ~]# docker load -i etcd.tar
[root@k8smaster01 ~]# docker load -i coredns.tar
```
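Between pulling on the VPN host and loading on the masters, each image has to be exported to a tarball with the names used above (kube-apiserver.tar and so on) and copied over. This is a minimal sketch. `tar_name` and `save_images` are hypothetical helpers built on `docker save -o`.

```bash
# Derive the tarball name used above, e.g. k8s.gcr.io/kube-apiserver:v1.15.6 -> kube-apiserver.tar
tar_name() {
  local name=${1##*/}      # drop the registry prefix
  echo "${name%%:*}.tar"   # drop the tag
}

# On the VPN host: export each image given as an argument into its own tarball.
save_images() {
  local image
  for image in "$@"; do
    docker save -o "$(tar_name "$image")" "$image"
  done
}
```

For example, run `save_images k8s.gcr.io/kube-apiserver:v1.15.6 k8s.gcr.io/pause:3.1 ...` on the VPN host. Then copy the resulting .tar files to every master (for example with scp) and run the `docker load -i` commands shown above.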

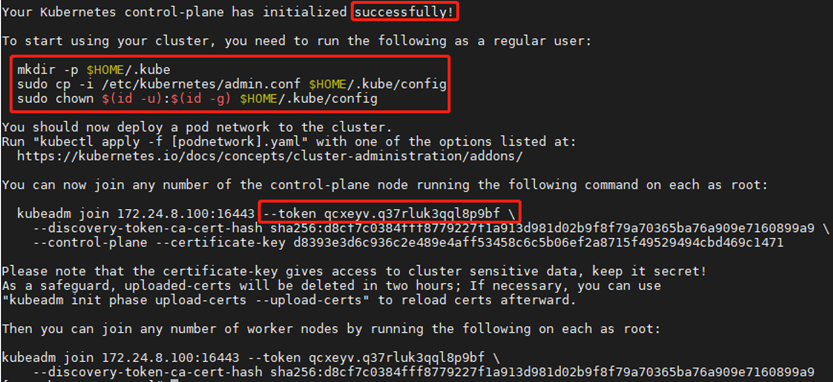

```bash
[root@k8smaster01 ~]# kubeadm init --config=/root/kubeadm-ha/kubeadm-config.yaml --upload-certs
```

Keep the following commands for adding nodes later:


```
You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
    --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9 \
    --control-plane --certificate-key d8393e3d6c936c2e489e4aff53458c6c5b06ef2a8715f49529494cbd469c1471

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.24.8.100:16443 --token qcxeyv.q37rluk3qql8p9bf \
    --discovery-token-ca-cert-hash sha256:d8cf7c0384fff8779227f1a913d981d02b9f8f79a70365ba76a909e7160899a9
```

Note: The token above is valid for 24 hours by default. Existing tokens can be listed with `kubeadm token list`. If the token has expired, create a new one with the first command below. The second command recomputes the CA certificate hash used for `--discovery-token-ca-cert-hash`:

```bash
kubeadm token create
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
```
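The hash pipeline above can be wrapped so that it prints the value exactly as `kubeadm join` expects it, with the `sha256:` prefix. This is a sketch. `ca_cert_hash` is a hypothetical helper, and it assumes an RSA CA key, as kubeadm generates by default. Alternatively, `kubeadm token create --print-join-command` prints a complete worker join command with a fresh token.

```bash
# Print the --discovery-token-ca-cert-hash value for a CA certificate (RSA key assumed).
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  local hex
  # SHA-256 over the DER-encoded public key; sed strips the "(stdin)= " style prefix.
  hex=$(openssl x509 -pubkey -noout -in "$ca" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:${hex}"
}
```

On k8smaster01, `ca_cert_hash` with no argument reads /etc/kubernetes/pki/ca.crt.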

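```bash
[root@k8smaster01 ~]# mkdir -p $HOME/.kube
[root@k8smaster01 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config    # kubectl reads ~/.kube/config by default
[root@k8smaster01 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Tip: Images are pulled from k8s.gcr.io by default. Users in China can switch to the Aliyun mirror with the following commands: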

1 [root@k8sm