ETL項目2:大數據清洗,處理:使用MapReduce進行離線數據分析並報表顯示完整項目 思路同我之前的博客的思路 https://www.cnblogs.com/symkmk123/p/10197467.html 但是數據是從web訪問的數據 avro第一次過濾 觀察數據的格式,我們主要分析第四個 ...

ETL項目2:大數據清洗,處理:使用MapReduce進行離線數據分析並報表顯示完整項目

思路同我之前的博客的思路 https://www.cnblogs.com/symkmk123/p/10197467.html

但是數據是從web訪問的數據

avro第一次過濾

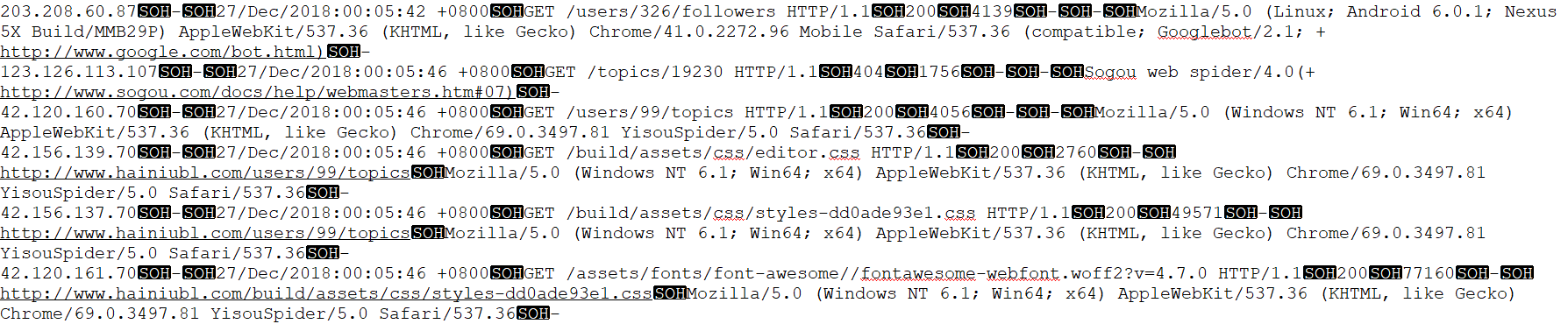

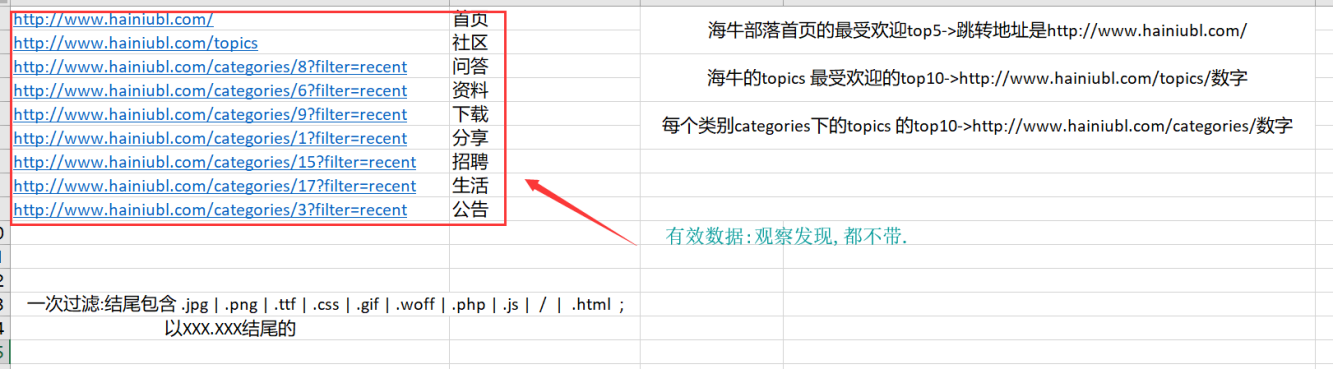

觀察數據的格式,我們主要分析第四個欄位的數據.發現有.css , .jpg .png等等等無效的數據.

通過觀察數據發現有效數據都不帶 . , 所以第一次過濾寫入avro總表裡的數據一次過濾後的有效數據,不包含 .css , .jpg , .png 這樣的數據

同時count持久化到mysql

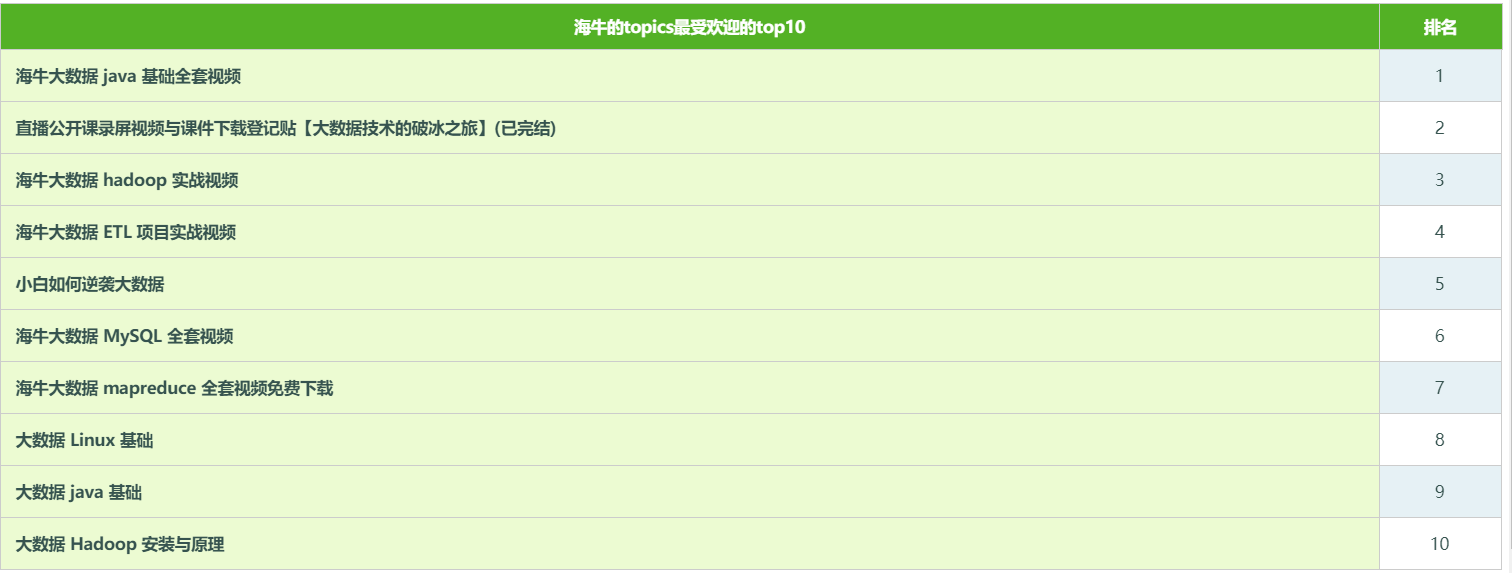

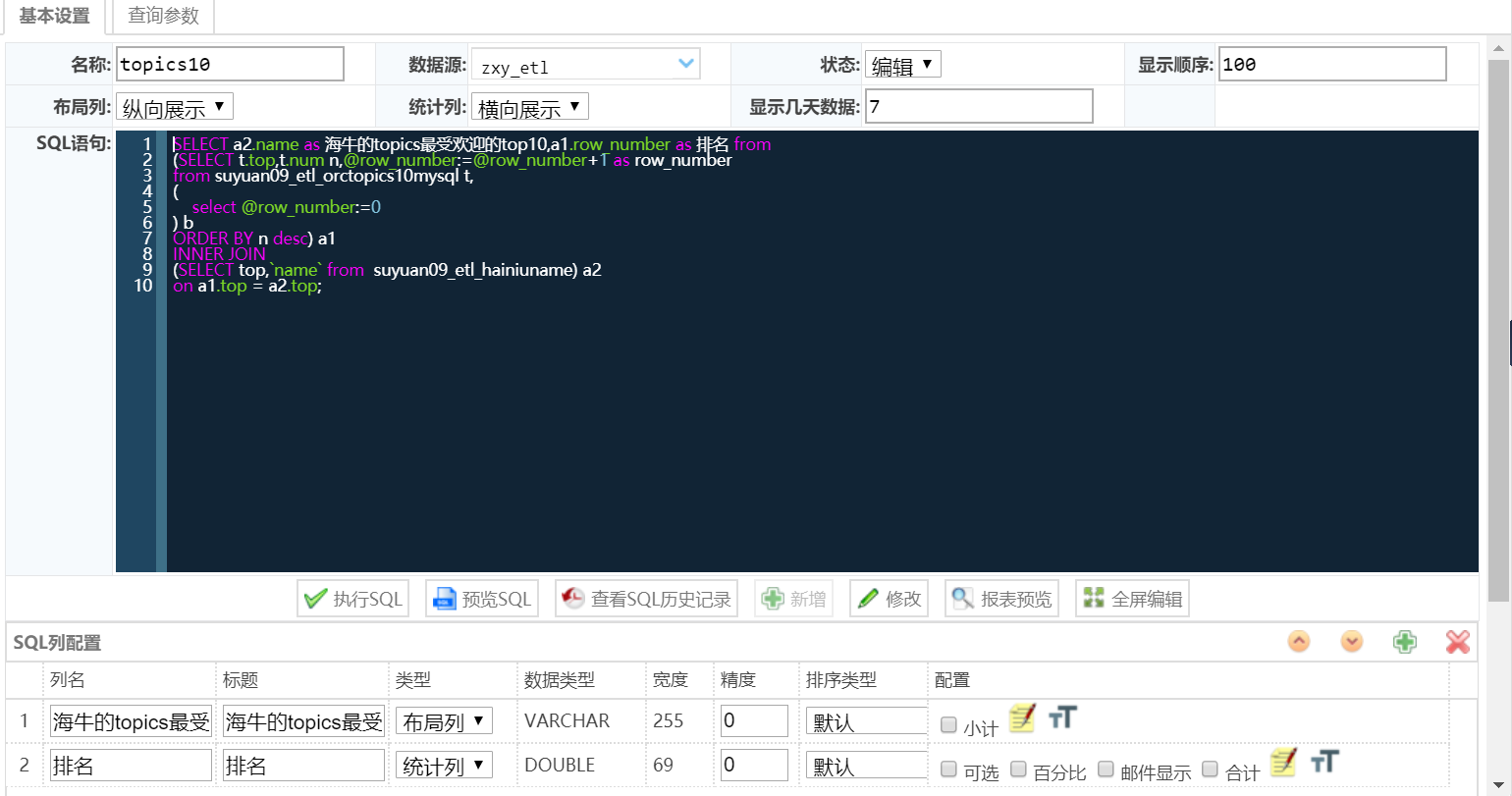

orc1:海牛的topics 最受歡迎的top10

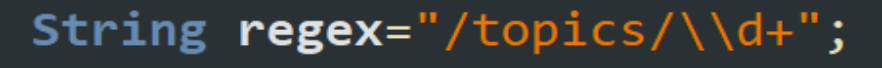

通過觀察發現這個需求的有效url是 /topics/數字的 所以在第一次過濾的數據的基礎上的正則就是

這種保留下來的也只是/topics/數字這種格式,方便用 hql統計結果

上代碼

//Text2Avro package mrrun.hainiuetl; import java.io.IOException; import java.text.ParseException; import java.text.SimpleDateFormat; import java.util.Date; import java.util.Locale; import org.apache.avro.Schema; import org.apache.avro.generic.GenericData; import org.apache.avro.generic.GenericRecord; import org.apache.avro.mapred.AvroKey; import org.apache.avro.mapreduce.AvroJob; import org.apache.avro.mapreduce.AvroKeyOutputFormat; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.io.LongWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Counter; import org.apache.hadoop.mapreduce.CounterGroup; import org.apache.hadoop.mapreduce.Counters; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import mrrun.base.BaseMR; public class Text2Avro extends BaseMR { public static Schema schema = null; public static Schema.Parser parse = new Schema.Parser(); public static class Text2AvroMapper extends Mapper<LongWritable, Text, AvroKey<GenericRecord>, NullWritable> { @Override protected void setup(Mapper<LongWritable, Text, AvroKey<GenericRecord>, NullWritable>.Context context) throws IOException, InterruptedException { //根據user_install_status.avro文件內的格式,生成指定格式的schema對象 schema = parse.parse(Text2Avro.class.getResourceAsStream("/hainiu.avro")); } @Override protected void map(LongWritable key, Text value,Context context) throws IOException, InterruptedException { String line = value.toString(); String[] splits = line.split("\001"); if(splits == null || splits.length != 10){ System.out.println("=============="); System.out.println(value.toString()); context.getCounter("etl_err", "bad line num").increment(1L); return; } // System.out.println(util.getIpArea("202.8.77.12")); String uip1 = splits[0]; String uip =IPUtil.getip(uip1); String datetime = splits[2]; StringBuilder sb=new StringBuilder(datetime); SimpleDateFormat sdf=new SimpleDateFormat("dd/MMM/yyyy:HH:mm:ss",Locale.ENGLISH); String sy=sb.toString(); Date myDate = null; try { myDate = sdf.parse(sy); } catch (ParseException e) { // TODO Auto-generated catch block e.printStackTrace(); } SimpleDateFormat sdf2=new SimpleDateFormat("yyyyMMddHHmmss"); //System.out.println(myDate); String format = sdf2.format(myDate); //GET /categories/8?filter=recent&page=12 HTTP/1.1 String url1 = splits[3]; StringBuilder sb2=new StringBuilder(url1); String url = sb2.toString(); String method=""; String top=""; String top1=""; String http=""; if(url!=null) { String[] s = url.split(" "); if(s.length==3) { method=s[0]; http=s[2]; top1=s[1]; if(top1.contains(".")) { context.getCounter("etl_err", "no line num").increment(1L); return; } else { top=top1; } } } String status1 = splits[4]; String status2 = splits[5]; String post = splits[6]; String from = splits[7]; String usagent1 = splits[8]; StringBuilder sb3=new StringBuilder(usagent1); String usagent = sb3.toString(); //根據創建的Schema對象,創建一行的對象 GenericRecord record = new GenericData.Record(Text2Avro.schema); record.put("uip", uip); record.put("datetime", format); record.put("method", method); record.put("http", http); record.put("top", top); record.put("from", from); record.put("status1", status1); record.put("status2", status2); record.put("post", post); record.put("usagent", usagent); context.getCounter("etl_good", "good line num").increment(1L); System.out.println(uip+" "+format+" "+top+" "+from+" "+post+" "+usagent+" "+status1+" "+status2+" "+http); context.write(new AvroKey<GenericRecord>(record), NullWritable.get()); } } @Override public Job getJob(Configuration conf) throws IOException { // // 開啟reduce輸出壓縮 // conf.set(FileOutputFormat.COMPRESS, "true"); // // 設置reduce輸出壓縮格式 // conf.set(FileOutputFormat.COMPRESS_CODEC, SnappyCodec.class.getName()); Job job = Job.getInstance(conf, getJobNameWithTaskId()); job.setJarByClass(Text2Avro.class); job.setMapperClass(Text2AvroMapper.class); job.setMapOutputKeyClass(AvroKey.class); job.setMapOutputValueClass(NullWritable.class); // 無reduce job.setNumReduceTasks(0); //設置輸出的format job.setOutputFormatClass(AvroKeyOutputFormat.class); //根據user_install_status.avro文件內的格式,生成指定格式的schema對象 schema = parse.parse(Text2Avro.class.getResourceAsStream("/hainiu.avro")); //設置avro文件的輸出 AvroJob.setOutputKeySchema(job, schema); FileInputFormat.addInputPath(job, getFirstJobInputPath()); FileOutputFormat.setOutputPath(job, getJobOutputPath(getJobNameWithTaskId())); return job; } @Override public String getJobName() { return "etltext2avro"; } }

//Avro2Orc_topic10 package mrrun.hainiuetl; import java.io.IOException; import java.util.regex.Matcher; import java.util.regex.Pattern; import org.apache.avro.Schema; import org.apache.avro.generic.GenericRecord; import org.apache.avro.mapred.AvroKey; import org.apache.avro.mapreduce.AvroJob; import org.apache.avro.mapreduce.AvroKeyInputFormat; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.hive.ql.io.orc.CompressionKind; import org.apache.hadoop.hive.ql.io.orc.OrcNewOutputFormat; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Writable; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; import mrrun.base.BaseMR; import mrrun.util.OrcFormat; import mrrun.util.OrcUtil; public class Avro2Orc_topic10 extends BaseMR { public static Schema schema = null; public static Schema.Parser parse = new Schema.Parser(); public static class Avro2OrcMapper extends Mapper<AvroKey<GenericRecord>, NullWritable, NullWritable, Writable>{ OrcUtil orcUtil = new OrcUtil(); @Override protected void setup(Context context) throws IOException, InterruptedException { orcUtil.setWriteOrcInspector(OrcFormat.etlorcSchema_topic10); } @Override protected void map(AvroKey<GenericRecord> key, NullWritable value,Context context) throws IOException, InterruptedException { //得到一行的對象 GenericRecord datum = key.datum(); String uip = (String) datum.get("uip"); String datetime = (String) datum.get("datetime"); //String method = (String) datum.get("method"); //String http = (String) datum.get("http"); String top1 = (String) datum.get("top"); String top=""; String regex="/topics/\\d+"; Pattern pattern=Pattern.compile(regex); Matcher matcher=pattern.matcher(top1); if(matcher.find()) { top=matcher.group(); } else { context.getCounter("etl_err", "notopics line num").increment(1L); return; } //orcUtil.addAttr(uip,datetime,method,http,uid,country,status1,status2,usagent); orcUtil.addAttr(uip,datetime,top); Writable w = orcUtil.serialize(); context.getCounter("etl_good", "good line num").increment(1L); System.out.println(uip+" "+top); context.write(NullWritable.get(), w); } } @Override public Job getJob(Configuration conf) throws IOException { //關閉map的推測執行,使得一個map處理 一個region的數據 conf.set("mapreduce.map.spedulative", "false"); //設置orc文件snappy壓縮 conf.set("orc.compress", CompressionKind.SNAPPY.name()); //設置orc文件 有索引 conf.set("orc.create.index", "true"); Job job = Job.getInstance(conf, getJobNameWithTaskId()); job.setJarByClass(Avro2Orc_topic10.class); job.setMapperClass(Avro2OrcMapper.class); job.setMapOutputKeyClass(NullWritable.class); job.setMapOutputValueClass(Writable.class); // 無reduce job.setNumReduceTasks(0); job.setInputFormatClass(AvroKeyInputFormat.class); //根據user_install_status.avro文件內的格式,生成指定格式的schema對象 schema = parse.parse(Avro2Orc_topic10.class.getResourceAsStream("/hainiu.avro")); AvroJob.setInputKeySchema(job, schema); job.setOutputFormatClass(OrcNewOutputFormat.class); FileInputFormat.addInputPath(job, getFirstJobInputPath()); FileOutputFormat.setOutputPath(job, getJobOutputPath(getJobNameWithTaskId())); return job; } @Override public String getJobName() { return "etlAvro2Orc_topic10"; } }

//Text2AvroJob package mrrun.hainiuetl; import java.text.SimpleDateFormat; import java.util.Date; import org.apache.hadoop.conf.Configuration; import org.apache.hadoop.conf.Configured; import org.apache.hadoop.mapreduce.Counter; import org.apache.hadoop.mapreduce.CounterGroup; import org.apache.hadoop.mapreduce.Counters; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.lib.jobcontrol.ControlledJob; import org.apache.hadoop.mapreduce.lib.jobcontrol.JobControl; import org.apache.hadoop.util.Tool; import org.apache.hadoop.util.ToolRunner; import mrrun.util.JobRunResult; import mrrun.util.JobRunUtil; public class Text2AvroJob extends Configured implements Tool{ @Override public int run(String[] args) throws Exception { //獲取Configuration對象 Configuration conf = getConf(); //創建任務鏈對象 JobControl jobc = new JobControl("etltext2avro"); Text2Avro avro = new Text2Avro(); //只需要賦值一次就行 avro.setConf(conf); ControlledJob orcCJob = avro.getControlledJob(); Job job = orcCJob.getJob(); job.waitForCompletion(true); JobRunResult result = JobRunUtil.run(jobc); result.setCounters("etl1", orcCJob.getJob().getCounters()); result.print(true); Counters counterMap = result.getCounterMap("etl1"); CounterGroup group1 = counterMap.getGroup("etl_good"); CounterGroup group2 = counterMap.getGroup("etl_err"); Counter good = group1.findCounter("good line num"); Counter bad = group2.findCounter("bad line num"); System.out.println("\t\t"+good.getDisplayName()+" = "+good.getValue()); System.out.println("\t\t"+bad.getDisplayName()+" = "+bad.getValue()); System.out.println("=======+++++++++===="); Date date=new Date(); SimpleDateFormat sdf3=new SimpleDateFormat("yyyyMMdd"); String format2 = sdf3.format(date); Results results=new Results(); long bad_num = bad.getValue(); long good_num = good.getValue(); long total_num=bad_num+good_num; results.setBad_num(bad_num); results.setGood_num(good_num); results.setTotal_num(total_num); results.setDay(format2); double d=bad_num*1.0/total_num*1.0; results.setBad_rate(d); System.out.println((double)((double)bad_num/(double)total_num)); DAO dao=new DAO(); if(dao.getday(format2)!=null) { Results getday = dao.getday(format2); Long bad_num2 = getday.getBad_num(); Long good_num2 = getday.getGood_num(); Long total_num2 = getday.getTotal_num(); getday.setDay(format2); getday.setBad_num(bad_num2+bad_num); getday.setGood_num(good_num2+good_num); getday.setTotal_num(total_num2+total_num); double badrate=(bad_num2+bad_num)*1.0/(total_num2+total_num)*1.0; getday.setBad_rate(badrate); dao.update(getday); } else { dao.insert(results); } jobc.addJob(orcCJob); return 0; } public static void main(String[] args) throws Exception { // -Dtask.id=1226 -Dtask.input.dir=/tmp/avro/input_hainiuetl -Dtask.base.dir=/tmp/avro System.exit(ToolRunner.run(new Text2AvroJob(), args)); } }

放一個

自動化腳本思路同第一個ETL項目

直接放代碼

yitiaolong.sh #!/bin/bash source /etc/profile mmdd=`date -d 1' days ago' +%m%d` yymm=`date -d 1' days ago' +%Y%m` dd=`date -d 1' days ago' +%d` /usr/local/hive/bin/hive -e "use suyuan09;alter table etlavrosy add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" /usr/local/hive/bin/hive -e "use suyuan09;alter table hainiuetltopics10_orc add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" /usr/local/hive/bin/hive -e "use suyuan09;alter table hainiuetlcategories10_orc add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" /usr/local/hive/bin/hive -e "use suyuan09;alter table hainiuetlspider_orc add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" /usr/local/hive/bin/hive -e "use suyuan09;alter table hainiuetlip_orc add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" /usr/local/hive/bin/hive -e "use suyuan09;alter table hainiuetlindex5_orc add IF NOT EXISTS partition(month='${yymm}',day='${dd}');" #3-4運行mr hdfs_path1=/user/hainiu/data/hainiuetl/input/${yymm}/${dd} avro_path1=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etltext2avro -Dtask.id=${mmdd} -Dtask.input.dir=${hdfs_path1} -Dtask.base.dir=${avro_path1}` #orctopics10mr.sh avro_path2=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd}/etltext2avro_${mmdd}/part-*.avro orc_path2=/user/suyuan09/hainiuetl/orctopics10/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etlavro2orc_topic10 -Dtask.id=${mmdd} -Dtask.input.dir=${avro_path2} -Dtask.base.dir=${orc_path2}` #orccategories10mr.sh avro_path3=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd}/etltext2avro_${mmdd}/part-*.avro orc_path3=/user/suyuan09/hainiuetl/orccategories10/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etlavro2orc_categories10 -Dtask.id=${mmdd} -Dtask.input.dir=${avro_path3} -Dtask.base.dir=${orc_path3}` #orcspidermr.sh avro_path4=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd}/etltext2avro_${mmdd}/part-*.avro orc_path4=/user/suyuan09/hainiuetl/orcspider/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etlavro2orc_spider -Dtask.id=${mmdd} -Dtask.input.dir=${avro_path4} -Dtask.base.dir=${orc_path4}` #orcipmr.sh avro_path5=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd}/etltext2avro_${mmdd}/part-*.avro orc_path5=/user/suyuan09/hainiuetl/orcip/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etlavro2orc_ip -Dtask.id=${mmdd} -Dtask.input.dir=${avro_path5} -Dtask.base.dir=${orc_path5}` #orcindex5mr.sh avro_path6=/user/suyuan09/hainiuetl/hainiuavro/${yymm}/${dd}/etltext2avro_${mmdd}/part-*.avro orc_path6=/user/suyuan09/hainiuetl/orcindex5/${yymm}/${dd} `/usr/local/hadoop/bin/hadoop jar /home/suyuan09/etl/hainiu/jar/181210_hbase-1.0.0-symkmk123.jar etlavro2orc_index5 -Dtask.id=${mmdd} -Dtask.input.dir=${avro_path6} -Dtask.base.dir=${orc_path6}` #把orc挪到分區目錄 #orc2etl.sh /usr/local/hadoop/bin/hadoop fs -cp hdfs://ns1/user/suyuan09/hainiuetl/orctopics10/${yymm}/${dd}/etlAvro2Orc_topic10_${mmdd}/part-* hdfs://ns1/user/suyuan09/etlorc/hainiuetltopics10_orc/month=${yymm}/day=${dd} /usr/local/hadoop/bin/hadoop fs -cp hdfs://ns1/user/suyuan09/hainiuetl/orccategories10/${yymm}/${dd}/etlAvro2Orc_categories10_${mmdd}/part-* hdfs://ns1/user/suyuan09/etlorc/hainiuetlcategories10_orc/month=${yymm}/day=${dd} /usr/local/hadoop/bin/hadoop fs -cp hdfs://ns1/user/suyuan09/hainiuetl/orcspider/${yymm}/${dd}/etlAvro2Orc_spider_${mmdd}/part-* hdfs://ns1/user/suyuan09/etlorc/hainiuetlspider_orc/month=${yymm}/day=${dd} /usr/local/hadoop/bin/hadoop fs -cp hdfs://ns1/user/suyuan09/hainiuetl/orcindex5/${yymm}/${dd}/etlAvro2Orc_index5_${mmdd}/part-* hdfs://ns1/user/suyuan09/etlorc/hainiuetlindex5_orc/month=${yymm}/day=${dd} /usr/local/hadoop/bin/hadoop fs -cp hdfs://ns1/user/suyuan09/hainiuetl/orcip/${yymm}/${dd}/etlAvro2Orc_ip_${mmdd}/part-* hdfs://ns1/user/suyuan09/etlorc/hainiuetlip_orc/month=${yymm}/day=${dd} #自動從hive到mysql腳本 #hive2data.sh /usr/local/hive/bin/hive -e "use suyuan09;select t.top,t.num from(select top,count(*) num from hainiuetlindex5_orc group by top) t sort by t.num desc limit 5;" > /home/suyuan09/etl/hainiu/orc2mysql/myindex5${yymmdd} /usr/local/hive/bin/hive -e "use suyuan09;select t.top,t.num from(select top,count(*) num from hainiuetltopics10_orc group by top) t sort by t.num desc limit 10;" > /home/suyuan09/etl/hainiu/orc2mysql/mytopics10${yymmdd} /usr/local/hive/bin/hive -e "use suyuan09;select t.top,t.num from(select top,count(*) num from hainiuetlcategories10_orc group by top) t sort by t.num desc limit 10;" > /home/suyuan09/etl/hainiu/orc2mysql/mycategories10${yymmdd} /usr/local/hive/bin/hive -e "use suyuan09;select t.uip,t.num from(select uip,count(*) num from hainiuetlip_orc group by uip) t sort by t.num desc;" > /home/suyuan09/etl/hainiu/orc2mysql/myip${yymmdd} /usr/local/hive/bin/hive -e "use suyuan09;select t.usagent,t.num from(select usagent,count(*) num from hainiuetlspider_orc group by usagent) t sort by t.num desc;" > /home/suyuan09/etl/hainiu/orc2mysql/myspider${yymmdd} #data->mysql腳本 #data2mysql.sh #mysql -h 172.33.101.123 -P 3306 -u tony -pYourPassword -D YourDbName <<EOF /bin/mysql -h192.168.88.195 -p3306 -uhainiu -p12345678 -Dhainiutest <<EOF LOAD DATA LOCAL INFILE "/home/suyuan09/etl/hainiu/orc2mysql/mytopics10${yymmdd}" INTO TABLE suyuan09_etl_orctopics10mysql FIELDS TERMINATED BY '\t'; LOAD DATA LOCAL INFILE "/home/suyuan09/etl/hainiu/orc2mysql/mycategories10${yymmdd}" INTO TABLE suyuan09_etl_orccategories10mysql FIELDS TERMINATED BY '\t'; LOAD DATA LOCAL INFILE "/home/suyuan09/etl/hainiu/orc2mysql/myindex5${yymmdd}" INTO TABLE suyuan09_etl_orcindex5mysql FIELDS TERMINATED BY '\t'; LOAD DATA LOCAL INFILE "/home/suyuan09/etl/hainiu/orc2mysql/myspider${yymmdd}" INTO TABLE suyuan09_etl_orcspidermysql FIELDS TERMINATED BY '\t'; LOAD DATA LOCAL INFILE "/home/suyuan09/etl/hainiu/orc2mysql/myip${yymmdd}" INTO TABLE suyuan09_etl_orcipmysql FIELDS TERMINATED BY '\t'; EOF

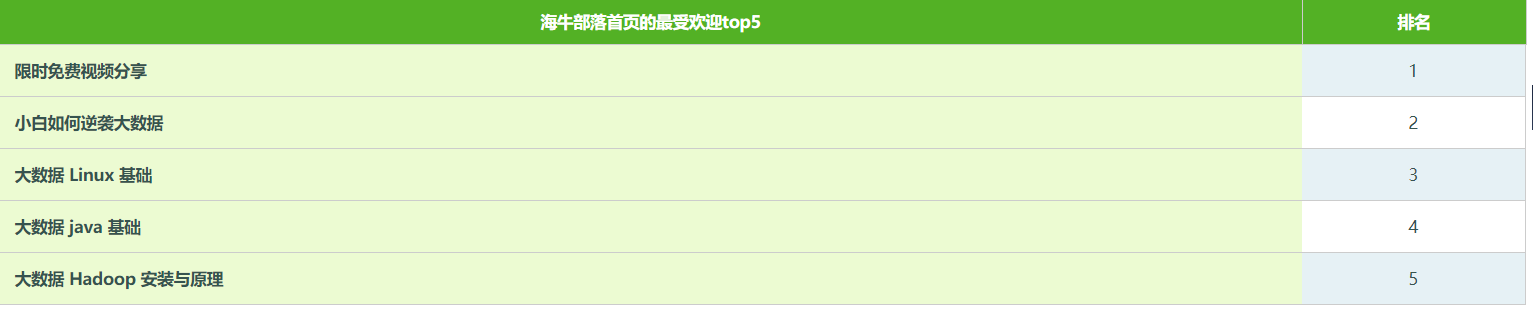

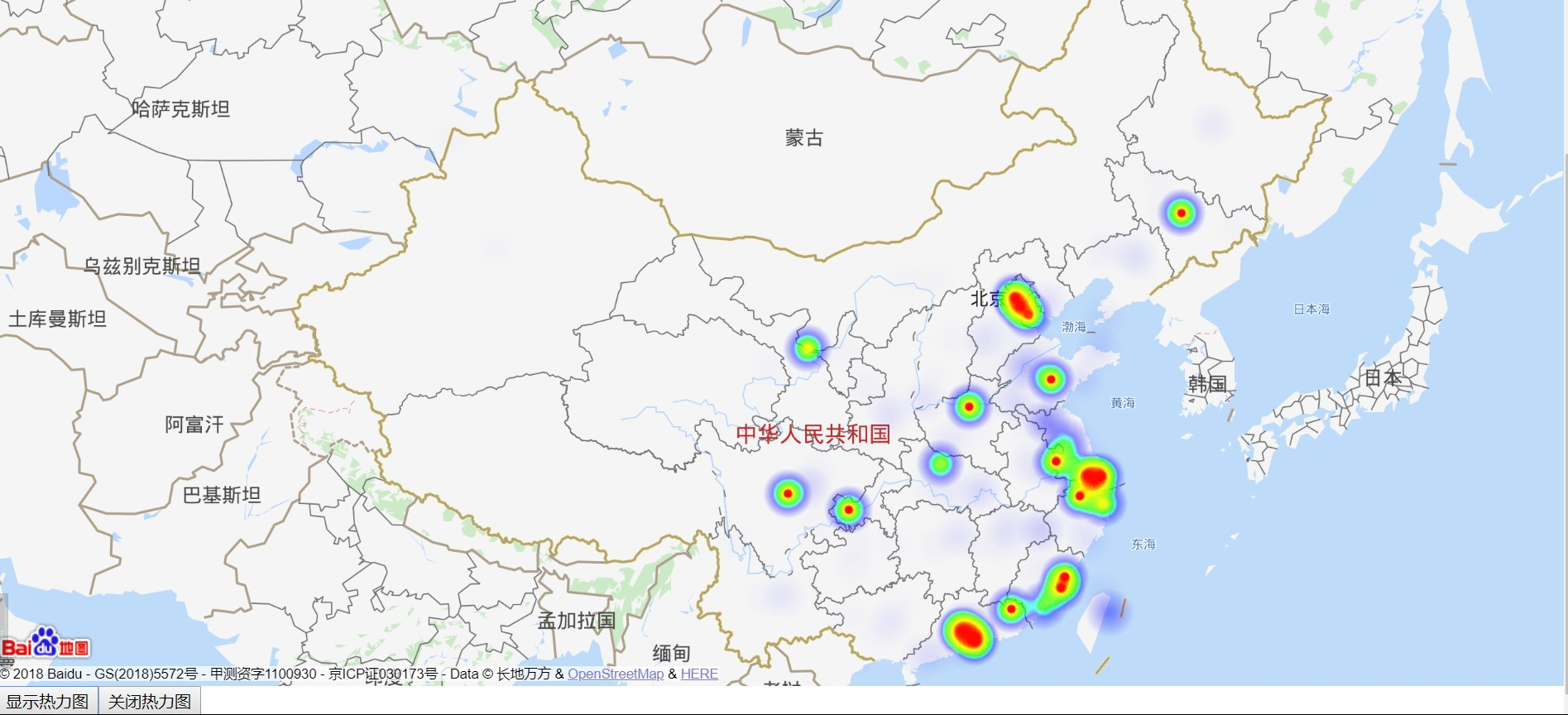

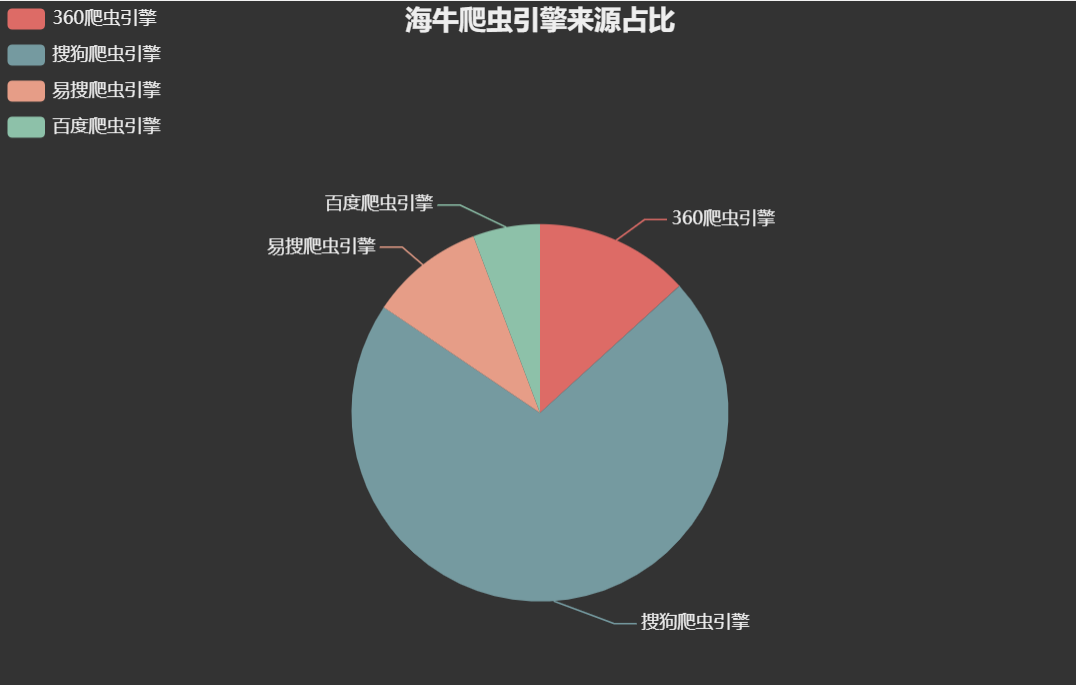

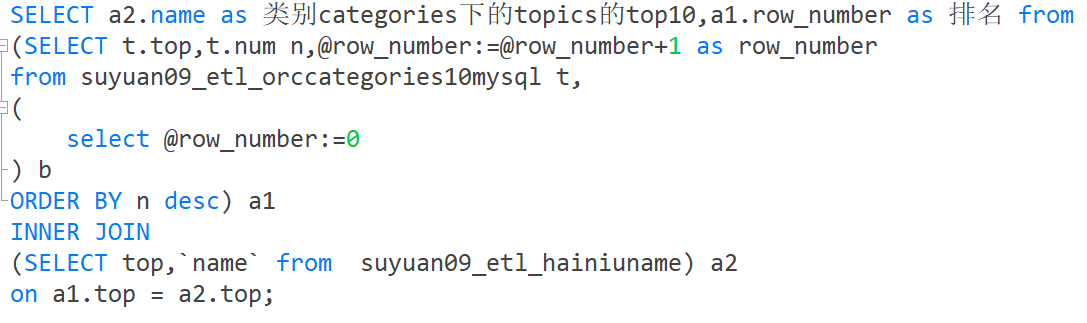

報表展示

其中 mysql沒有自帶排序函數,自己寫一個

熱力圖參考之前我之前的博客 https://www.cnblogs.com/symkmk123/p/9309322.html 其中之前是用 c# 寫的,這裡用java + spring 改寫一下

思路看之前的博客這裡放代碼

經緯度轉換類:LngAndLatUtil

package suyuan.web; import java.io.BufferedReader; import java.io.IOException; import java.io.InputStreamReader; import java.io.UnsupportedEncodingException; import java.net.MalformedURLException; import java.net.URL; import java.net.URLConnection; public class LngAndLatUtil { public Object[] getCoordinate(String addr) throws IOException { String lng = null;// 經度 String lat = null;// 緯度 String address = null; try { address = java.net.URLEncoder.encode(addr, "UTF-8"); } catch (UnsupportedEncodingException e1) { e1.printStackTrace(); } String key = "你的秘鑰"; String url = String.format("http://api.map.baidu.com/geocoder?address=%s&output=json&key=%s", address, key); URL myURL = null; URLConnection httpsConn = null; try { myURL = new URL(url); } catch (MalformedURLException e) { e.printStackTrace(); } InputStreamReader insr = null; BufferedReader br = null; try { httpsConn = (URLConnection) myURL.openConnection();// 不使用代理 if (httpsConn != null) { insr = new InputStreamReader(httpsConn.getInputStream(), "UTF-8"); br = new BufferedReader(insr); String data = null; int count = 1; while ((data = br.readLine()) != null) { if (count == 5) { try{ lng = (String) data.subSequence(data.indexOf(":") + 1, data.indexOf(","));// 經度 count++; } catch(StringIndexOutOfBoundsException e) { e.printStackTrace(); } } else if (count == 6) { lat = data.substring(data.indexOf(":") + 1);// 緯度 count++; } else { count++; } } } } catch (IOException e) { e.printStackTrace(); } finally { if (insr != null) { insr.close(); } if (br != null) { br.close(); } } return new Object[] { lng, lat }; } }

IPDTO:(資料庫映射類)

package suyuan.entity; public class IPDTO { public String top; public Integer num; public String getTop() { return top; } public void setTop(String top) { this.top = top; } public Integer getNum() { return num; } public void setNum(Integer num) { this.num = num; } }

IP:(熱力圖json類)

package suyuan.entity; public class IP { public String lng ; public String lat ; public int count ; public String getLng() { return lng; } public void setLng(String lng) { this.lng = lng; } public String getLat() { return lat; } public void setLat(String lat) { this.lat = lat; } public int getCount() { return count; } public void setCount(int count) { this.count = count; } }

DAO層轉換方法

public List<IP> getip() throws SQLException { List<IPDTO> ipdto = null; List<IP> ipList=new ArrayList<IP>(); // 編寫SQL語句 String sql = "SELECT top,num FROM `suyuan09_etl_orcipmysql`"; // 占位符賦值? // 執行 ipdto = qr.query(sql, new BeanListHandler<IPDTO>(IPDTO.class)); for(IPDTO ips: ipdto) { IP ip=new IP(); Integer num = ips.getNum(); String top = ips.getTop(); // 封裝 LngAndLatUtil getLatAndLngByBaidu = new LngAndLatUtil(); Object[] o = null; try { o = getLatAndLngByBaidu.getCoordinate(top); } catch (IOException e) { // TODO Auto-generated catch block e.printStackTrace(); } ip.setLng(String.valueOf(o[0])); ip.setLat(String.valueOf(o[1])); ip.setCount(num); ipList.add(ip); } // 返回 return ipList; }

控制器調用返回json:

@RequestMapping("/getip") public @ResponseBody List<IP> getip()throws Exception{ return studentService.getip(); }

jsp頁面顯示:

<%@ page language="java" import="java.util.*" pageEncoding="UTF-8"%> <%@ taglib uri="http://java.sun.com/jsp/jstl/core" prefix="c"%> <%@ taglib uri="http://java.sun.com/jsp/jstl/fmt" prefix="fmt"%> <!DOCTYPE HTML> <html> <head > <meta charset="UTF-8"> <script type="text/javascript" src="http://api.map.baidu.com/api?v=2.0&ak=1fReIR62vFbOc2vgrBnRAGyUtLkgoIIH"></script> <script type="text/javascript" src="http://api.map.baidu.com/library/Heatmap/2.0/src/Heatmap_min.js"></script> <script type="text/javascript" src="${pageContext.request.contextPath}/js/jquery-1.9.1.js"></script> <title></title> <style type="text/css"> ul,li{list-style: none;margin:0;padding:0;float:left;} html{height:100%} body{height:100%;margin:0px;padding:0px;font-family:"微軟雅黑";} #container{height:700px;width:100%;} #r-result{width:100%;} </style> </head> <body> <form id="form1" runat="server"> <div> <div id="container"></div> <div id="r-result"> <input type="button" onclick="openHeatmap();" value="顯示熱力圖"/><input type="button" onclick="closeHeatmap();" value="關閉熱力圖"/> </div> </div> </form> </body> </html> <script type="text/javascript"> var map = new BMap.Map("container"); // 創建地圖實例 var point = new BMap.Point(118.906886, 31.895532); map.centerAndZoom(point, 15); // 初始化地圖,設置中心點坐標和地圖級別 map.enableScrollWheelZoom(); // 允許滾輪縮放 if (!isSupportCanvas()) { alert('熱力圖目前只支持有canvas支持的瀏覽器,您所使用的瀏覽器不能使用熱力圖功能~') } heatmapOverlay = new BMapLib.HeatmapOverlay({ "radius": 20 }); map.addOverlay(heatmapOverlay); heatmapOverlay.setDataSet({ data: function () { var serie = []; $.ajax({ url: "${pageContext.request.contextPath}/getip", dataType: "json", async: false, success: function (dataJson) { for (var i = 0; i < dataJson.length; i++) { var item = { //