
Experiment overview:

Lab environment:

- Host OS: Fedora 28 Workstation

- Virtual machine manager: Virt-Manager 1.5.1

- Virtual machine configuration: ha1 CentOS 7.2 1511 (minimal) virbr0: 192.168.122.57

ha2 CentOS 7.2 1511 (minimal) virbr0: 192.168.122.58

ha3 CentOS 7.2 1511 (minimal) virbr0: 192.168.122.59

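Experiment steps:

On the host: this part mainly covers setting up the NTP server and configuring fencing.

- Make sure the host has network access. For network configuration, see this link.

- Install virt-manager

# dnf install virt-manager libvirt

- Create and configure the KVM virtual machines with virt-manager; see this link.

- Install the fence and ntpd packages

# dnf install -y ntp fence-virtd fence-virtd-multicast fence-virtd-libvirt 'fence-virt*'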

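- Configure ntpd

Set the time zone to Shanghai

# timedatectl list-timezones | grep Shanghai
# timedatectl set-timezone Asia/Shanghai

Edit the ntp configuration file

# Remove the existing server and fudge entries, including commented-out ones
# sed -e '/^server/d' -e '/^#server/d' -e '/^fudge/d' -e '/^#fudge/d' -i /etc/ntp.conf
# Append the local-clock server configuration at the end of the file
# (the stratum value 10 is an assumed, commonly used choice for a local clock)
# sed -e '$a server 127.127.1.0' -e '$a fudge 127.127.1.0 stratum 10' -i /etc/ntp.conf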
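Because the two sed commands rewrite /etc/ntp.conf in place, it can help to rehearse them on a scratch copy first. The following is a minimal sketch under stated assumptions: the sample file contents are invented for illustration, GNU sed is assumed (for the one-line `$a` form and `-i`), and the stratum value 10 is an assumption rather than something the article specifies.

```shell
#!/bin/sh
# Sketch: rehearse the ntp.conf edits on a scratch copy, not on /etc/ntp.conf.
# The sample contents below are hypothetical.
conf=$(mktemp)
cat > "$conf" <<'EOF'
driftfile /var/lib/ntp/drift
server 0.fedora.pool.ntp.org iburst
#server 127.127.1.0
#fudge 127.127.1.0 stratum 10
EOF

# Drop every active or commented-out server/fudge line.
sed -e '/^server/d' -e '/^#server/d' -e '/^fudge/d' -e '/^#fudge/d' -i "$conf"
# Append the local clock driver as the only time source (stratum 10 is assumed).
sed -e '$a server 127.127.1.0' -e '$a fudge 127.127.1.0 stratum 10' -i "$conf"

cat "$conf"
```

If the printed file looks right, the same two sed commands can be pointed at /etc/ntp.conf.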

Enable the service at boot, start it, and check its status

# systemctl enable ntpd.service && systemctl start ntpd.service && systemctl status ntpd.service

# ntpq -c peers
# ntpq -c assoc
# timedatectl

- Configure fence-virtd

Create the /etc/cluster directory

# mkdir -p /etc/cluster

Generate the fence_xvm.key file

# echo fecb9e62cbcf4e54dcfb > /etc/cluster/fence_xvm.key
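The key above is a fixed, hand-typed string. A common alternative is to fill the key file with random bytes. The sketch below is one way to do that and is not the article's method: the `KEY_DIR` variable and the 512-byte size are assumptions, and the script writes to a scratch directory unless `KEY_DIR` is set.

```shell
#!/bin/sh
# Sketch: generate a random fence_xvm key instead of a hand-typed string.
# KEY_DIR is a hypothetical variable; on a real host it would be /etc/cluster.
KEY_DIR=${KEY_DIR:-$(mktemp -d)}
KEY_FILE="$KEY_DIR/fence_xvm.key"

mkdir -p "$KEY_DIR"
# 512 random bytes; the size is an assumption, not taken from the article.
dd if=/dev/urandom of="$KEY_FILE" bs=512 count=1 2>/dev/null
# The key is a shared secret, so restrict it to its owner.
chmod 600 "$KEY_FILE"

ls -l "$KEY_FILE"
```

A random key is binary, so it cannot be recreated with `echo` on the virtual machines; the same file would have to be copied to /etc/cluster/fence_xvm.key on every node.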

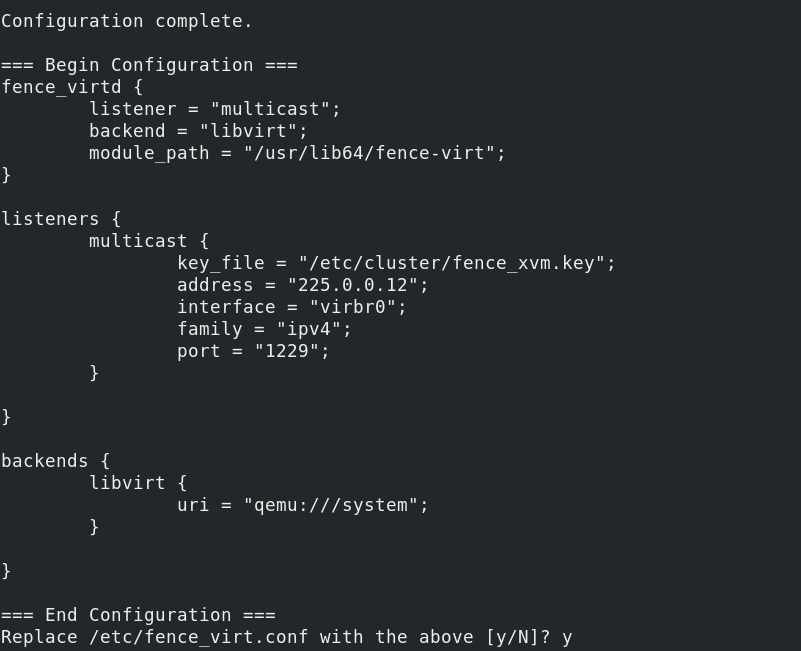

初始化fence_virtd

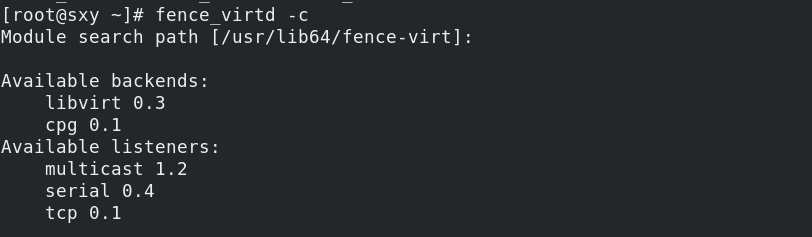

# fence_virtd -c

確認模塊搜索路徑

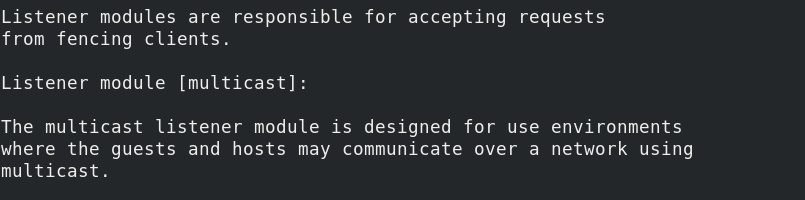

確認監聽方式

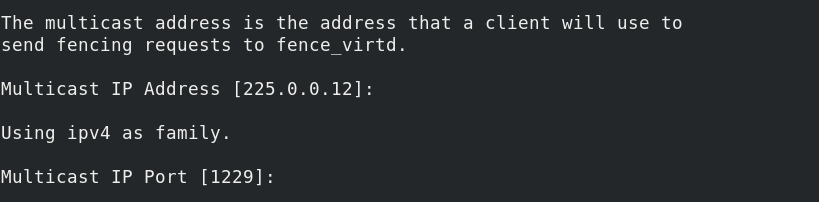

確認監聽IP地址以及埠

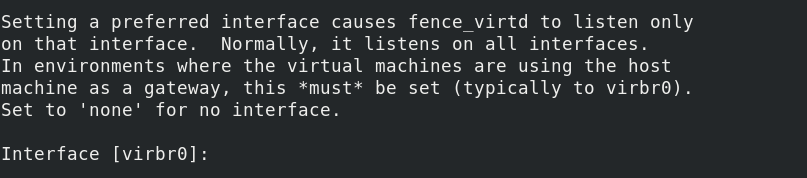

確認監聽網卡介面

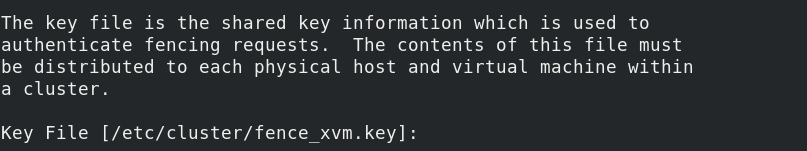

確認密鑰路徑

確認後端虛擬化模塊

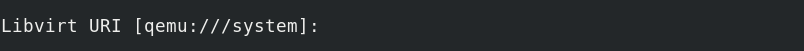

確認Libvirt URL

是否替換文件

設置開機自啟、開啟服務並查看狀態# systemctl enable fence_virtd && systemctl start fence_virtd && systemctl status fence_virtd
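The answers given to `fence_virtd -c` are written to /etc/fence_virt.conf. As a rough sketch of what that file might contain for this lab, the script below writes an example to a scratch file and prints it. Every value is an assumption, since the screenshots are not reproduced as text: a multicast listener on virbr0, the multicast address 225.0.0.12 (matching the address used for the STONITH resources later), port 1229, the libvirt backend with `qemu:///system`, and the module path /usr/lib64/fence-virt.

```shell
#!/bin/sh
# Sketch: an example fence_virt.conf written to a scratch file for inspection.
# All values are assumptions for this lab; compare with your own /etc/fence_virt.conf.
example=$(mktemp)
cat > "$example" <<'EOF'
fence_virtd {
	listener = "multicast";
	backend = "libvirt";
	module_path = "/usr/lib64/fence-virt";
}

listeners {
	multicast {
		key_file = "/etc/cluster/fence_xvm.key";
		address = "225.0.0.12";
		interface = "virbr0";
		family = "ipv4";
		port = "1229";
	}
}

backends {
	libvirt {
		uri = "qemu:///system";
	}
}
EOF

cat "$example"
```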

- Verify fence_virtd

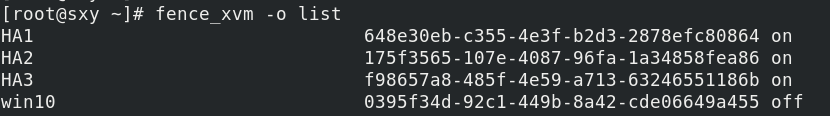

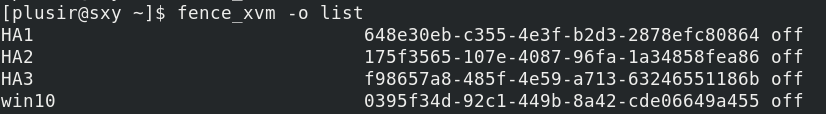

List the virtual machines in every state

# fence_xvm -o list

The query result is shown in the figure:

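- Fencing operations

Power off all running virtual machines

# Supported operations: power on (on), power off (off), reboot (reboot), query status (status)

# fence_xvm -o off -H HA1
# fence_xvm -o off -H HA2
# fence_xvm -o off -H HA3

Query again; the result is shown in the figure: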
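The three power-off commands differ only in the domain name, so they can be folded into a loop. This is a minimal sketch: `fence_all` and `DRY_RUN` are hypothetical names introduced here, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: apply one fence_xvm action to several libvirt domains.
# DRY_RUN=1 (the default here) prints the commands; DRY_RUN=0 executes them.
DRY_RUN=${DRY_RUN:-1}

fence_all() {
    action=$1
    shift
    for domain in "$@"; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "fence_xvm -o $action -H $domain"
        else
            fence_xvm -o "$action" -H "$domain"
        fi
    done
}

fence_all off HA1 HA2 HA3
```

The same function can be called with `status`, `on`, or `reboot` as the first argument.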

On the KVM virtual machines: this part mainly covers NTP synchronization and building the Pacemaker cluster.

- Configure the KVM virtual machines
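The later `pcs` commands address the nodes by name (ha1, ha2, ha3), so each node needs to resolve those names. The article does not show how this was done; one possible approach, sketched below, is to add entries to /etc/hosts using the virbr0 addresses from the lab environment. `HOSTS_FILE` and `add_host` are hypothetical names, and the script writes to a scratch file unless `HOSTS_FILE` is set.

```shell
#!/bin/sh
# Sketch: make sure ha1..ha3 resolve on every node.
# HOSTS_FILE defaults to a scratch file; on a real node it would be /etc/hosts.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}

add_host() {
    ip=$1
    name=$2
    # Append only if the name is not present yet, so the script can be re-run.
    grep -qw "$name" "$HOSTS_FILE" || echo "$ip $name" >> "$HOSTS_FILE"
}

add_host 192.168.122.57 ha1
add_host 192.168.122.58 ha2
add_host 192.168.122.59 ha3
cat "$HOSTS_FILE"
```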

- Install ntpd, pcs, and related packages

# yum install -y ntp pcs pacemaker corosync fence-agents-all resource-agents

- Configure ntpd

The steps mirror those on the host, so they are not explained in detail here.

# timedatectl set-timezone Asia/Shanghai

# sed -i 's/^server.*//' /etc/ntp.conf
# echo "server 192.168.43.177 iburst" >> /etc/ntp.conf
# echo "SYNC_HWCLOCK=yes" >> /etc/sysconfig/ntpdate
# systemctl enable ntpd.service && systemctl start ntpd.service && systemctl status ntpd.service
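The sed and echo pair above blanks the old server lines and appends a new one, so running it twice leaves empty lines and a duplicate entry. A re-runnable variant might look like the sketch below. `set_ntp_server` is a hypothetical helper, the sample file contents are invented, and the edit is applied to a scratch copy rather than /etc/ntp.conf.

```shell
#!/bin/sh
# Sketch: point an ntp.conf at a single upstream server, safely re-runnable.
set_ntp_server() {
    file=$1
    server=$2
    # Delete existing server lines outright instead of leaving blank lines behind.
    sed -i '/^server/d' "$file"
    echo "server $server iburst" >> "$file"
}

conf=$(mktemp)   # scratch copy; the real file would be /etc/ntp.conf
printf 'driftfile /var/lib/ntp/drift\nserver 0.centos.pool.ntp.org iburst\n' > "$conf"
set_ntp_server "$conf" 192.168.43.177
set_ntp_server "$conf" 192.168.43.177   # a second run must not duplicate the line
cat "$conf"
```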

Check the current time settings

# timedatectl

- Configure the Pacemaker cluster

Check that the Pacemaker packages are installed

Create the fencing device key

# mkdir -p /etc/cluster
# echo fecb9e62cbcf4e54dcfb > /etc/cluster/fence_xvm.key

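Set the password for the hacluster user

# echo 000000|passwd --stdin hacluster

Create the Pacemaker cluster

Creating the cluster requires authenticating the nodes first, which takes the host names of the nodes to be added to the cluster

# pcs cluster auth ha1 ha2 ha3 -u hacluster -p 000000 --force

Setting up the cluster requires the cluster name and the host names of the nodes to add

# pcs cluster setup --force --name openstack-ha ha1 ha2 ha3

Enable corosync and pacemaker at boot on all cluster nodes

# pcs cluster enable --all

Start the cluster on all nodes

# pcs cluster start --all

Check the cluster status

# pcs status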

To enable fencing of the virtual machines, configure STONITH resources for the Pacemaker cluster

# pcs stonith create fence1 fence_xvm multicast_address=225.0.0.12
# pcs stonith create fence2 fence_xvm multicast_address=225.0.0.12
# pcs stonith create fence3 fence_xvm multicast_address=225.0.0.12
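The three STONITH resources above are identical apart from their names. A common refinement, not used in the article, is to bind each device to one node with the fence_xvm `port` option (the libvirt domain name) and Pacemaker's `pcmk_host_list`. The sketch below only prints the commands. `stonith_cmd` is a hypothetical helper, and it assumes the libvirt domain names are the upper-cased host names (HA1 for ha1), as in the fence_xvm commands run on the host.

```shell
#!/bin/sh
# Sketch: print one "pcs stonith create" command per node, each bound to one VM.
# Assumption: the libvirt domain name is the upper-cased host name (ha1 -> HA1).
stonith_cmd() {
    node=$1
    domain=$(echo "$node" | tr '[:lower:]' '[:upper:]')
    idx=${node#ha}   # ha1 -> 1, used for the resource name fence1
    echo "pcs stonith create fence$idx fence_xvm multicast_address=225.0.0.12 port=$domain pcmk_host_list=$node"
}

for n in ha1 ha2 ha3; do
    stonith_cmd "$n"
done
```

The printed lines can be reviewed and then run on one cluster node in place of the three identical commands.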

Check the cluster status

[root@ha1 ~]# pcs status
Cluster name: openstack-ha
Stack: corosync
Current DC: ha1 (version 1.1.18-11.el7_5.3-2b07d5c5a9) - partition with quorum
Last updated: Thu Aug 16 15:30:59 2018
Last change: Thu Aug 16 12:44:03 2018 by root via cibadmin on ha1

3 nodes configured
3 resources configured

Online: [ ha1 ha2 ha3 ]

Full list of resources:

 fence1 (stonith:fence_xvm): Started ha1
 fence2 (stonith:fence_xvm): Started ha2
 fence3 (stonith:fence_xvm): Started ha3

Daemon Status:
  corosync: active/enabled
  pacemaker: active/enabled
  pcsd: active/enabled
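For scripted checks, the `Online:` line of the status output above can be parsed to count the nodes that have joined. This is a minimal sketch that assumes the output format shown for this Pacemaker version; `online_count` is a hypothetical helper that reads `pcs status` text on standard input.

```shell
#!/bin/sh
# Sketch: count the nodes listed on the "Online:" line of `pcs status` output.
online_count() {
    # Strip the square brackets, then count the fields after the "Online:" label.
    awk '/^ *Online:/ { gsub(/\[|\]/, ""); print NF - 1; found = 1 }
         END { if (!found) print 0 }'
}

# Sample line taken from the status output; on a node: pcs status | online_count
echo 'Online: [ ha1 ha2 ha3 ]' | online_count   # prints 3
```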

List the STONITH resources currently in the Pacemaker cluster

[root@ha1 ~]# pcs stonith show
 fence1 (stonith:fence_xvm): Started ha1
 fence2 (stonith:fence_xvm): Started ha2
 fence3 (stonith:fence_xvm): Started ha3