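Slony is one of the most widely used replication solutions in the PostgreSQL world. It is one of the oldest replication implementations, and it also has the broadest support from external tools such as pgAdmin3. For many years, Slony was the only viable way to replicate data in PostgreSQL. Slony uses logical replication, and Slony-I generally requires each replicated table to have a primary key or a unique key. Slony does not work from the PostgreSQL transaction log; it is trigger-based, and it provides high availability on top of logical replication. Besides Slony, PostgreSQL also has Londiste, BDR and others, which later articles will cover.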

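1. Installing Slony

Download: http://www.slony.info. Installation steps:

    # tar -jxvf slony1-2.2.5.tar.bz2
    # cd slony1-2.2.5
    # ./configure --with-pgconfigdir=/opt/pgsql96/bin
    # make
    # make install

Installation complete!

When ./configure runs, it looks for the pg_config command; the --with-pgconfigdir option tells it which directory to search. In this example pg_config is in /opt/pgsql96/bin.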
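Before running ./configure, it can help to confirm that pg_config really is where you think it is. The following is a minimal sketch, not part of the Slony build: the helper name has_pg_config and the PGCONFIGDIR variable are made up for illustration, and the default path is the one used in this article.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if directory $1 contains an executable pg_config.
has_pg_config() {
    [ -x "$1/pg_config" ]
}

# Default taken from this article; override PGCONFIGDIR for your installation.
PGCONFIGDIR=${PGCONFIGDIR:-/opt/pgsql96/bin}

if has_pg_config "$PGCONFIGDIR"; then
    echo "pg_config found in $PGCONFIGDIR"
else
    echo "pg_config not found in $PGCONFIGDIR" >&2
fi
```

If the check fails, point --with-pgconfigdir at the bin directory of the PostgreSQL installation you want Slony built against.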

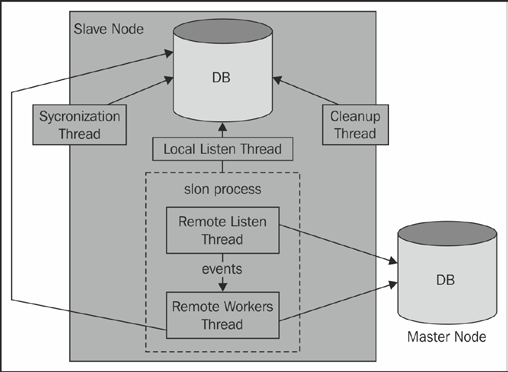

2. Slony architecture diagram

3. Replicating a table

Test environment:

| Hostname | IP | Role |
| --- | --- | --- |
| PostgreSQL201 | 192.168.1.201 | master |
| PostgreSQL202 | 192.168.1.202 | slave |

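3.1 Create a superuser named slony in both databases, dedicated to Slony

    create user slony superuser password 'li0924';

3.2 Both hosts in this experiment have a lottu database, and a table in that database serves as the test object. Create the table synctab identically in both databases, because table structure is not replicated automatically.

    create table synctab(id int primary key,name text);

3.3 On every node, allow the Slony-I user to log in remotely by adding this line to pg_hba.conf

    host all slony 192.168.1.0/24 trust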
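Since the same rule has to be added on every node, a small idempotent helper avoids duplicate entries when the step is repeated. This is only a sketch: the function name add_hba_rule is made up, and the PGDATA location in the usage comment is an assumption, because the article does not say where the data directory lives.

```shell
#!/bin/sh
# Hypothetical helper: append rule $2 to the pg_hba.conf file $1 unless an
# identical line is already present.
add_hba_rule() {
    file=$1
    rule=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex interpretation)
    if ! grep -qxF -- "$rule" "$file" 2>/dev/null; then
        printf '%s\n' "$rule" >> "$file"
    fi
}

# Usage (PGDATA is an assumption; adjust to your data directory):
#   add_hba_rule "$PGDATA/pg_hba.conf" "host all slony 192.168.1.0/24 trust"
#   pg_ctl reload -D "$PGDATA"    # make PostgreSQL re-read pg_hba.conf
```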

3.4 Configure Slony (run on the master host)

Write a slonik script that registers the nodes. The script looks like this:

    [postgres@Postgres201 ~]$ cat slony_setup.sh
    #!/bin/sh
    MASTERDB=lottu
    SLAVEDB=lottu
    HOST1=192.168.1.201
    HOST2=192.168.1.202
    DBUSER=slony
    slonik<<_EOF_
    cluster name = first_cluster;
    # define nodes (this is needed by pretty much
    # all slonik scripts)
    node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
    # init cluster
    init cluster ( id=1, comment = 'Master Node');
    # group tables into sets
    create set (id=1, origin=1, comment='Our tables');
    set add table (set id=1, origin=1, id=1, fully qualified name = 'lottu.synctab', comment='sample table');
    store node (id=2, comment = 'Slave node', event node=1);
    store path (server = 1, client = 2, conninfo='dbname=$MASTERDB host=$HOST1 user=$DBUSER');
    store path (server = 2, client = 1, conninfo='dbname=$SLAVEDB host=$HOST2 user=$DBUSER');
    _EOF_
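Every slonik script in this article starts with the same cluster name and admin conninfo lines. One possible way to avoid repeating them is to generate that preamble from a shell function. This is a sketch only: the function name slonik_preamble and the CLUSTER variable are made up for illustration, while the hosts, database and user come from the script above.

```shell
#!/bin/sh
MASTERDB=lottu
SLAVEDB=lottu
HOST1=192.168.1.201
HOST2=192.168.1.202
DBUSER=slony
CLUSTER=first_cluster

# Hypothetical helper: print the preamble that every slonik script needs.
slonik_preamble() {
    cat <<_EOF_
cluster name = $CLUSTER;
node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
_EOF_
}

# Usage: print the preamble followed by the statements to run, then pipe to slonik:
#   { slonik_preamble; echo "set drop table (id=2, origin=1);"; } | slonik
```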

Now that the table is under Slony's control, we can subscribe to it. The subscription script looks like this:

    [postgres@Postgres201 ~]$ cat slony_subscribe.sh
    #!/bin/sh
    MASTERDB=lottu
    SLAVEDB=lottu
    HOST1=192.168.1.201
    HOST2=192.168.1.202
    DBUSER=slony
    slonik<<_EOF_
    cluster name = first_cluster;
    node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
    subscribe set ( id = 1, provider = 1, receiver = 2, forward = no);
    _EOF_

Run the scripts on the master host:

    [postgres@Postgres201 ~]$ ./slony_setup.sh
    [postgres@Postgres201 ~]$ ./slony_subscribe.sh &
    [1] 1225

Having defined what we want to replicate, we can start the slon daemon on each host:

    slon first_cluster 'host=192.168.1.201 dbname=lottu user=slony' &
    slon first_cluster 'host=192.168.1.202 dbname=lottu user=slony' &

3.5 Verify that Slony-I is configured correctly

Run a DML operation on the master host:

    [postgres@Postgres201 ~]$ psql lottu lottu
    psql (9.6.0)
    Type "help" for help.

    lottu=# \d synctab
        Table "lottu.synctab"
     Column |  Type   | Modifiers
    --------+---------+-----------
     id     | integer | not null
     name   | text    |
    Indexes:
        "synctab_pkey" PRIMARY KEY, btree (id)
    Triggers:
        _first_cluster_logtrigger AFTER INSERT OR DELETE OR UPDATE ON synctab FOR EACH ROW EXECUTE PROCEDURE _first_cluster.logtrigger('_first_cluster', '1', 'k')
        _first_cluster_truncatetrigger BEFORE TRUNCATE ON synctab FOR EACH STATEMENT EXECUTE PROCEDURE _first_cluster.log_truncate('1')
    Disabled user triggers:
        _first_cluster_denyaccess BEFORE INSERT OR DELETE OR UPDATE ON synctab FOR EACH ROW EXECUTE PROCEDURE _first_cluster.denyaccess('_first_cluster')
        _first_cluster_truncatedeny BEFORE TRUNCATE ON synctab FOR EACH STATEMENT EXECUTE PROCEDURE _first_cluster.deny_truncate()

    lottu=# insert into synctab values (1001,'lottu');
    INSERT 0 1

Check on the slave host that the change has arrived:

    [postgres@Postgres202 ~]$ psql
    psql (9.6.0)
    Type "help" for help.

    postgres=# \c lottu lottu
    You are now connected to database "lottu" as user "lottu".
    lottu=> select * from synctab ;
      id  | name
    ------+-------
     1001 | lottu
    (1 row)
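Replication is asynchronous, so the row may take a moment to appear on the slave. When the check is scripted, polling with a bounded retry is more reliable than a single query. The sketch below is an illustration, not something Slony provides: retry and row_on_slave are made-up names, and the psql connection options are assumptions based on the hosts and user in this article.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to $1 times, sleeping $2 seconds
# between attempts; return 0 as soon as the command succeeds, 1 otherwise.
retry() {
    tries=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$tries" ]; do
        if "$@"; then
            return 0
        fi
        i=$((i + 1))
        # Sleep only if another attempt is coming.
        if [ "$i" -le "$tries" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Hypothetical check: succeeds once row 1001 is visible on the slave.
# -A unaligned, -t tuples only, -c run a single command.
row_on_slave() {
    psql -h 192.168.1.202 -U slony -Atc \
        "select 1 from lottu.synctab where id = 1001" lottu | grep -qx 1
}

# Usage: retry 10 1 row_on_slave && echo "row replicated"
```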

4. Inspecting Slony-I tables and views

4.1 After a successful setup, a schema is created in the replicated database

    [postgres@Postgres201 ~]$ psql lottu lottu
    psql (9.6.0)
    Type "help" for help.

    lottu=# \dn
         List of schemas
          Name      |  Owner
    ----------------+----------
     _first_cluster | slony
     lottu          | lottu
     public         | postgres
    (3 rows)

4.2 View the nodes in the cluster

    lottu=# select * from _first_cluster.sl_node;
     no_id | no_active | no_comment  | no_failed
    -------+-----------+-------------+-----------
         1 | t         | Master Node | f
         2 | t         | Slave node  | f
    (2 rows)

4.3 View the sets in the cluster

    lottu=# select * from _first_cluster.sl_set;
     set_id | set_origin | set_locked | set_comment
    --------+------------+------------+-------------
          1 |          1 |            | Our tables
    (1 row)

4.4 View the tables in the cluster

    lottu=# select * from _first_cluster.sl_table;
    -[ RECORD 1 ]-------------
    tab_id      | 1
    tab_reloid  | 57420
    tab_relname | synctab
    tab_nspname | lottu
    tab_set     | 1
    tab_idxname | synctab_pkey
    tab_altered | f
    tab_comment | sample table
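The same schema also contains the sl_status view, which reports how far each subscriber lags behind the origin. The sketch below only builds the query; the helper name lag_query and the CLUSTER variable are made up for illustration, and the psql connection options in the usage comment are assumptions based on this article's setup.

```shell
#!/bin/sh
CLUSTER=first_cluster

# Hypothetical helper: print a query against the sl_status view of the
# cluster schema (the schema name is the cluster name prefixed with "_").
lag_query() {
    printf 'select st_origin, st_received, st_lag_num_events, st_lag_time from _%s.sl_status;\n' "$CLUSTER"
}

# Usage (run against the origin node):
#   lag_query | psql -h 192.168.1.201 -U slony lottu
```

A lag that stays small in both st_lag_num_events and st_lag_time suggests the slon daemons are running and events are being confirmed.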

5. Routine maintenance

5.1 Adding a replicated table to an existing Slony-I cluster

Using table synctab2 as an example:

    create table synctab2(id int primary key,name text,reg_time timestamp);

We need to create a new table set. The script looks like this:

    [postgres@Postgres201 ~]$ cat slony_add_table_set.sh
    #!/bin/sh
    MASTERDB=lottu
    SLAVEDB=lottu
    HOST1=192.168.1.201
    HOST2=192.168.1.202
    DBUSER=slony
    slonik<<_EOF_
    cluster name = first_cluster;
    node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
    create set (id=2, origin=1, comment='a second replication set');
    set add table (set id=2, origin=1, id=2, fully qualified name ='lottu.synctab2', comment='second table');
    subscribe set(id=1, provider=1,receiver=2);
    subscribe set(id=2, provider=1,receiver=2);
    merge set(id=1, add id=2,origin=1);
    _EOF_

Run the slony_add_table_set.sh script:

    [postgres@Postgres201 ~]$ ./slony_add_table_set.sh
    <stdin>:8 subscription in progress before mergeSet. waiting
    <stdin>:8 subscription in progress before mergeSet. waiting
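The "waiting" messages show slonik holding back the merge until the new subscription is complete. If you prefer to make that ordering explicit, slonik has sync and wait for event statements that can be placed between the subscribe and the merge. The sketch below only prints such statements; the function name merge_after_subscribe is made up, and fixing the origin to node 1 and the target to set 1 is an assumption that matches this article's setup.

```shell
#!/bin/sh
# Hypothetical helper: print slonik statements that subscribe node $2 to set $1,
# wait until node $2 has confirmed the events from origin node 1, and then
# merge set $1 into set 1.
merge_after_subscribe() {
    new_set=$1
    receiver=$2
    cat <<_EOF_
subscribe set (id = $new_set, provider = 1, receiver = $receiver);
sync (id = 1);
wait for event (origin = 1, confirmed = $receiver, wait on = 1);
merge set (id = 1, add id = $new_set, origin = 1);
_EOF_
}

# Usage: prepend the cluster name and admin conninfo lines, then pipe to slonik.
#   merge_after_subscribe 2 2
```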

Check that the table was added:

    lottu=# select * from _first_cluster.sl_table;
    -[ RECORD 1 ]--------------
    tab_id      | 1
    tab_reloid  | 57420
    tab_relname | synctab
    tab_nspname | lottu
    tab_set     | 1
    tab_idxname | synctab_pkey
    tab_altered | f
    tab_comment | sample table
    -[ RECORD 2 ]--------------
    tab_id      | 2
    tab_reloid  | 57840
    tab_relname | synctab2
    tab_nspname | lottu
    tab_set     | 1
    tab_idxname | synctab2_pkey
    tab_altered | f
    tab_comment | second table

5.2 Removing a replicated table from an existing Slony-I cluster

    [postgres@Postgres201 ~]$ cat slony_drop_table.sh
    #!/bin/sh
    MASTERDB=lottu
    SLAVEDB=lottu
    HOST1=192.168.1.201
    HOST2=192.168.1.202
    DBUSER=slony
    slonik<<_EOF_
    cluster name = first_cluster;
    node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
    set drop table (id=2, origin=1);
    _EOF_

Run the slony_drop_table.sh script:

    [postgres@Postgres201 ~]$ ./slony_drop_table.sh

Check that the table was removed:

    lottu=# select * from _first_cluster.sl_table;
     tab_id | tab_reloid | tab_relname | tab_nspname | tab_set | tab_idxname  | tab_altered | tab_comment
    --------+------------+-------------+-------------+---------+--------------+-------------+--------------
          1 |      57420 | synctab     | lottu       |       1 | synctab_pkey | f           | sample table
    (1 row)

5.3 Removing Slony

    [postgres@Postgres201 ~]$ cat slony_drop_node.sh
    #!/bin/sh
    MASTERDB=lottu
    SLAVEDB=lottu
    HOST1=192.168.1.201
    HOST2=192.168.1.202
    DBUSER=slony
    slonik<<_EOF_
    cluster name = first_cluster;
    node 1 admin conninfo = 'dbname=$MASTERDB host=$HOST1 user=$DBUSER';
    node 2 admin conninfo = 'dbname=$SLAVEDB host=$HOST2 user=$DBUSER';
    uninstall node (id = 1);
    uninstall node (id = 2);
    _EOF_

Run the script:

    [postgres@Postgres201 ~]$ ./slony_drop_node.sh
    <stdin>:4: NOTICE: Slony-I: Please drop schema "_first_cluster"
    <stdin>:4: NOTICE: drop cascades to 175 other objects
    DETAIL: drop cascades to table _first_cluster.sl_node
    drop cascades to table _first_cluster.sl_nodelock
    drop cascades to table _first_cluster.sl_set
    drop cascades to table _first_cluster.sl_setsync
    drop cascades to table _first_cluster.sl_table
    drop cascades to table _first_cluster.sl_sequence
    drop cascades to table _first_cluster.sl_path
    drop cascades to table _first_cluster.sl_listen
    drop cascades to table _first_cluster.sl_subscribe
    drop cascades to table _first_cluster.sl_event
    drop cascades to table _first_cluster.sl_confirm
    drop cascades to table _first_cluster.sl_seqlog
    drop cascades to function _first_cluster.sequencelastvalue(text)
    drop cascades to table _first_cluster.sl_log_1
    drop cascades to table _first_cluster.sl_log_2
    drop cascades to table _first_cluster.sl_log_script
    drop cascades to table _first_cluster.sl_registry
    drop cascades to table _first_cluster.sl_apply_stats
    drop cascades to view _first_cluster.sl_seqlastvalue
    drop cascades to view _first_cluster.sl_failover_targets
    drop cascades to sequence _first_cluster.sl_local_node_id
    drop cascades to sequence _first_cluster.sl_event_seq
    drop cascades to sequence _first_cluster.sl_action_seq
    drop cascades to sequence _first_cluster.sl_log_status
    drop cascades to table _first_cluster.sl_config_lock
    drop cascades to table _first_cluster.sl_event_lock
    drop cascades to table _first_cluster.sl_archive_counter
    drop cascades to table _first_cluster.sl_components
    drop cascades to type _first_cluster.vactables
    drop cascades to function _first_cluster.createevent(name,text)
    drop cascades to function _first_cluster.createevent(name,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text,text,text,text,text)
    drop cascades to function _first_cluster.createevent(name,text,text,text,text,text,text,text,text,text)
    drop cascades to function _first_cluster.denyaccess()
    drop cascades to trigger _first_cluster_denyaccess on table lottu.synctab
    drop cascades to function _first_cluster.lockedset()
    drop cascades to function _first_cluster.getlocalnodeid(name)
    drop cascades to function _first_cluster.getmoduleversion()
    drop cascades to function _first_cluster.resetsession()
    drop cascades to function _first_cluster.logapply()
    drop cascades to function _first_cluster.logapplysetcachesize(integer)
    drop cascades to function _first_cluster.logapplysavestats(name,integer,interval)
    drop cascades to function _first_cluster.checkmoduleversion()
    drop cascades to function _first_cluster.decode_tgargs(bytea)
    drop cascades to function _first_cluster.logtrigger()
    drop cascades to trigger _first_cluster_logtrigger on table lottu.synctab
    drop cascades to function _first_cluster.terminatenodeconnections(integer)
    drop cascades to function _first_cluster.killbackend(integer,text)
    drop cascades to function _first_cluster.seqtrack(integer,bigint)
    drop cascades to function _first_cluster.slon_quote_brute(text)
    drop cascades to function _first_cluster.slon_quote_input(text)
    drop cascades to function _first_cluster.slonyversionmajor()
    drop cascades to function _first_cluster.slonyversionminor()
    drop cascades to function _first_cluster.slonyversionpatchlevel()
    drop cascades to function _first_cluster.slonyversion()
    drop cascades to function _first_cluster.registry_set_int4(text,integer)
    drop cascades to function _first_cluster.registry_get_int4(text,integer)
    drop cascades to function _first_cluster.registry_set_text(text,text)
    drop cascades to function _first_cluster.registry_get_text(text,text)
    drop cascades to function _first_cluster.registry_set_timestamp(text,timestamp with time zone)
    drop cascades to function _first_cluster.registry_get_timestamp(text,timestamp with time zone)
    drop cascades to function _first_cluster.cleanupnodelock()
    drop cascades to function _first_cluster.registernodeconnection(integer)
    drop cascades to function _first_cluster.initializelocalnode(integer,text)
    drop cascades to function _first_cluster.storenode(integer,text)
    drop cascades to function _first_cluster.storenode_int(integer,text)
    drop cascades to function _first_cluster.enablenode(integer)
    drop cascades to function _first_cluster.enablenode_int(integer)
    drop cascades to function _first_cluster.disablenode(integer)
    drop cascades to function _first_cluster.disablenode_int(integer)
    drop cascades to function _first_cluster.dropnode(integer[])
    drop cascades to function _first_cluster.dropnode_int(integer)
    drop cascades to function _first_cluster.prefailover(integer,boolean)
    drop cascades to function _first_cluster.failednode(integer,integer,integer[])
    drop cascades to function _first_cluster.failednode2(integer,integer,bigint,integer[])
    drop cascades to function _first_cluster.failednode3(integer,integer,bigint)
    drop cascades to function _first_cluster.failoverset_int(integer,integer,bigint)
    drop cascades to function _first_cluster.uninstallnode()
    drop cascades to function _first_cluster.clonenodeprepare(integer,integer,text)
    drop cascades to function _first_cluster.clonenodeprepare_int(integer,integer,text)
    drop cascades to function _first_cluster.clonenodefinish(integer,integer)
    drop cascades to function _first_cluster.storepath(integer,integer,text,integer)
    drop cascades to function _first_cluster.storepath_int(integer,integer,text,integer)
    drop cascades to function _first_cluster.droppath(integer,integer)
    drop cascades to function _first_cluster.droppath_int(integer,integer)
    drop cascades to function _first_cluster.storelisten(integer,integer,integer)
    drop cascades to function _first_cluster.storelisten_int(integer,integer,integer)
    drop cascades to function _first_cluster.droplisten(integer,integer,integer)
    drop cascades to function _first_cluster.droplisten_int(integer,integer,integer)
    drop cascades to function _first_cluster.storeset(integer,text)
    drop cascades to function _first_cluster.storeset_int(integer,integer,text)
    drop cascades to function _first_cluster.lockset(integer)
    drop cascades to function _first_cluster.unlockset(integer)
    drop cascades to function _first_cluster.moveset(integer,integer)
    drop cascades to function _first_cluster.moveset_int(integer,integer,integer,bigint)
    and 75 other objects (see server log for list)
    <stdin>:5: NOTICE: Slony-I: Please drop schema "_first_cluster"
    <stdin>:5: NOTICE: drop cascades to 175 other objects

The second uninstall node statement (<stdin>:5) prints the same DETAIL list of dropped objects as the first, so it is not repeated here.

Perfect; everything is back to a clean slate!
