Preface

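The previous post, "Redis Sentinel Installation and Deployment: Achieving Redis High Availability", made Redis highly available, mainly by covering the case where the master goes down. In that setup every node holds the same data, so once the data set grows too large, Redis becomes less efficient. Redis Cluster, officially released with Redis 3.0, addresses the need for a distributed Redis. When a single machine hits a memory, concurrency, or traffic bottleneck, the Cluster architecture spreads the load across nodes.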

This post walks through setting up a Redis Cluster and performing some simple client operations against it.

GitHub repository: https://github.com/youzhibing/redis

Environment

Redis version: redis-3.0.0

Linux: CentOS 6.7

IP: 192.168.11.202, with each Redis instance running on a different port

Client: Jedis, on Spring Boot

Setting up the Redis Cluster

Preparing the nodes

192.168.11.202:6382, 192.168.11.202:6383, 192.168.11.202:6384, 192.168.11.202:6385, 192.168.11.202:6386 and 192.168.11.202:6387 form the initial cluster.

192.168.11.202:6388 and 192.168.11.202:6389 are used later when scaling out.

redis-6382.conf

```
port 6382
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6382.log"
dbfilename "dump-6382.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6382.conf"
```

redis-6383.conf

```
port 6383
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6383.log"
dbfilename "dump-6383.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6383.conf"
```

redis-6384.conf

```
port 6384
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6384.log"
dbfilename "dump-6384.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6384.conf"
```

redis-6385.conf

```
port 6385
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6385.log"
dbfilename "dump-6385.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6385.conf"
```

redis-6386.conf

```
port 6386
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6386.log"
dbfilename "dump-6386.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6386.conf"
```

redis-6387.conf

```
port 6387
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6387.log"
dbfilename "dump-6387.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6387.conf"
```
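The six files above differ only in the port number and the file names derived from it. If you would rather generate them than copy and edit by hand, here is a minimal sketch. `ClusterConfGenerator` and its methods are hypothetical names. The setting values are copied from the files above, and the sketch only prints the contents; it does not write any files.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: rebuilds the redis-<port>.conf contents shown above.
// Only the port and the port-derived file names change between nodes.
final class ClusterConfGenerator {

    static String confFor(int port) {
        String[] lines = {
            "port " + port,
            "bind 192.168.11.202",
            "requirepass \"myredis\"",
            "daemonize yes",
            "logfile \"" + port + ".log\"",
            "dbfilename \"dump-" + port + ".rdb\"",
            "dir \"/opt/soft/redis/cluster_data\"",
            "masterauth \"myredis\"",          // password a replica uses to authenticate to its master
            "cluster-enabled yes",
            "cluster-node-timeout 15000",
            "cluster-config-file \"nodes-" + port + ".conf\""
        };
        return String.join("\n", lines) + "\n";
    }

    // Contents for every port in the inclusive range [firstPort, lastPort].
    static List<String> confsFor(int firstPort, int lastPort) {
        List<String> confs = new ArrayList<>();
        for (int port = firstPort; port <= lastPort; port++) {
            confs.add(confFor(port));
        }
        return confs;
    }

    public static void main(String[] args) {
        // Print the six initial nodes' files, each preceded by its file name.
        for (int port = 6382; port <= 6387; port++) {
            System.out.println("# redis-" + port + ".conf");
            System.out.print(confFor(port));
        }
    }
}
```

Calling `confsFor(6388, 6389)` produces the two extra files used later for scaling out.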

Start all the nodes

```
[root@slave1 redis_cluster]# cd /opt/redis-3.0.0/redis_cluster/
[root@slave1 redis_cluster]# ./../src/redis-server redis-6382.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6383.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6384.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6385.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6386.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6387.conf
```

Creating the cluster

Once all the nodes are running, each one only knows about itself; the nodes are not yet aware of each other.

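We use redis-trib.rb to build the cluster quickly. redis-trib.rb is a cluster management tool written in Ruby. Internally it issues the Cluster commands for us, simplifying common operations such as cluster creation, checking, slot migration, and rebalancing.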

If you are interested, try building a Redis Cluster step by step by hand with the CLUSTER commands. Doing so makes it clear how redis-trib.rb builds one so quickly.

The creation command is as follows; --replicas 1 means each master gets one replica:

```
[root@slave1 src]# cd /opt/redis-3.0.0/src/
[root@slave1 src]# ./redis-trib.rb create --replicas 1 192.168.11.202:6382 192.168.11.202:6383 192.168.11.202:6384 192.168.11.202:6385 192.168.11.202:6386 192.168.11.202:6387
```

During creation, the tool prints its plan for assigning the master and replica roles:

```
>>> Creating cluster
Connecting to node 192.168.11.202:6382: OK
Connecting to node 192.168.11.202:6383: OK
Connecting to node 192.168.11.202:6384: OK
Connecting to node 192.168.11.202:6385: OK
Connecting to node 192.168.11.202:6386: OK
Connecting to node 192.168.11.202:6387: OK
>>> Performing hash slots allocation on 6 nodes...
Using 3 masters:
192.168.11.202:6382
192.168.11.202:6383
192.168.11.202:6384
Adding replica 192.168.11.202:6385 to 192.168.11.202:6382
Adding replica 192.168.11.202:6386 to 192.168.11.202:6383
Adding replica 192.168.11.202:6387 to 192.168.11.202:6384
M: 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382
   slots:0-5460 (5461 slots) master
M: 3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383
   slots:5461-10922 (5462 slots) master
M: 10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384
   slots:10923-16383 (5461 slots) master
S: 7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385
   replicates 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe
S: 4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386
   replicates 3771e67edab547deff6bd290e1a07b23646906ee
S: a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387
   replicates 10b3789bb30889b5e6f67175620feddcd496d19e
Can I set the above configuration? (type 'yes' to accept):
```
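With --replicas 1 and six addresses, the plan above makes the first three addresses masters and attaches the other three to them in order. The sketch below approximates that for the single-host case only. It is a simplification, not redis-trib.rb's code: the real tool also interleaves nodes by IP so that a master and its replica land on different machines (see note 1), and this sketch does not model that step. `RolePlan` is a hypothetical name. The three-master minimum mirrors redis-trib.rb's refusal to create a cluster with fewer masters.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified sketch (single host, no anti-affinity): split the node list into
// masters and replicas the way the plan above does for --replicas 1.
final class RolePlan {

    // Returns master address -> replica addresses, in input order.
    static Map<String, List<String>> plan(List<String> nodes, int replicas) {
        int masterCount = nodes.size() / (replicas + 1);
        if (masterCount < 3) {
            throw new IllegalArgumentException("Redis Cluster requires at least 3 master nodes");
        }
        List<String> masters = nodes.subList(0, masterCount);
        Map<String, List<String>> plan = new LinkedHashMap<>();
        for (String master : masters) {
            plan.put(master, new ArrayList<>());
        }
        // Remaining nodes are handed out round-robin: 4th -> 1st master, 5th -> 2nd, ...
        for (int i = masterCount; i < nodes.size(); i++) {
            String master = masters.get((i - masterCount) % masterCount);
            plan.get(master).add(nodes.get(i));
        }
        return plan;
    }

    public static void main(String[] args) {
        List<String> nodes = new ArrayList<>();
        for (int port = 6382; port <= 6387; port++) {
            nodes.add("192.168.11.202:" + port);
        }
        // First line printed: "M 192.168.11.202:6382 <- S [192.168.11.202:6385]"
        plan(nodes, 1).forEach((m, s) -> System.out.println("M " + m + " <- S " + s));
    }
}
```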

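For why 192.168.11.202:6382, 192.168.11.202:6383 and 192.168.11.202:6384 become the masters, see note 1 in the Notes section. Once we accept the plan by typing yes, redis-trib.rb performs the node handshake and slot assignment and prints: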

```
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join...
>>> Performing Cluster Check (using node 192.168.11.202:6382)
M: 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382
   slots:0-5460 (5461 slots) master
M: 3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383
   slots:5461-10922 (5462 slots) master
M: 10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384
   slots:10923-16383 (5461 slots) master
M: 7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385
   slots: (0 slots) master
   replicates 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe
M: 4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386
   slots: (0 slots) master
   replicates 3771e67edab547deff6bd290e1a07b23646906ee
M: a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387
   slots: (0 slots) master
   replicates 10b3789bb30889b5e6f67175620feddcd496d19e
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

All 16384 slots have been assigned, and the cluster has been created successfully.
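The three ranges in the plan (0-5460, 5461-10922 and 10923-16383) come from spreading the 16384 slots as evenly as possible across three masters. The sketch below reproduces those ranges. It assumes a fractional cursor rounded to the nearest slot, which is my understanding of how redis-trib.rb does it; treat the exact rounding as an assumption rather than the tool's source. `SlotAllocator` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: split the 16384 hash slots into contiguous, near-equal ranges.
final class SlotAllocator {
    static final int CLUSTER_SLOTS = 16384;

    // Returns one inclusive {first, last} pair per master.
    static List<int[]> allocate(int masters) {
        List<int[]> ranges = new ArrayList<>();
        double slotsPerNode = (double) CLUSTER_SLOTS / masters; // 5461.33... for 3 masters
        double cursor = 0.0;
        int first = 0;
        for (int i = 0; i < masters; i++) {
            int last = (int) Math.round(cursor + slotsPerNode - 1);
            if (last > CLUSTER_SLOTS - 1 || i == masters - 1) {
                last = CLUSTER_SLOTS - 1; // the last master takes whatever is left
            }
            ranges.add(new int[] {first, last});
            first = last + 1;
            cursor += slotsPerNode;
        }
        return ranges;
    }

    public static void main(String[] args) {
        // Prints 0-5460, 5461-10922, 10923-16383
        for (int[] r : allocate(3)) {
            System.out.println(r[0] + "-" + r[1]);
        }
    }
}
```

The middle master ends up with one extra slot (5462) because of the rounding, which matches the "(5462 slots)" line in the output above.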

Checking cluster integrity

The redis-trib.rb check command performs this check. It only needs the address of any one node to check the whole cluster. Running `redis-trib.rb check 192.168.11.202:6382` gives the following output:

```
Connecting to node 192.168.11.202:6382: OK
Connecting to node 192.168.11.202:6385: OK
Connecting to node 192.168.11.202:6383: OK
Connecting to node 192.168.11.202:6384: OK
Connecting to node 192.168.11.202:6387: OK
Connecting to node 192.168.11.202:6386: OK
>>> Performing Cluster Check (using node 192.168.11.202:6382)
M: 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382
   slots:0-5460 (5461 slots) master
   1 additional replica(s)
S: 7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385
   slots: (0 slots) slave
   replicates 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe
M: 3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383
   slots:5461-10922 (5462 slots) master
   1 additional replica(s)
M: 10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384
   slots:10923-16383 (5461 slots) master
   1 additional replica(s)
S: a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387
   slots: (0 slots) slave
   replicates 10b3789bb30889b5e6f67175620feddcd496d19e
S: 4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386
   slots: (0 slots) slave
   replicates 3771e67edab547deff6bd290e1a07b23646906ee
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
```

"[OK] All 16384 slots covered." means every slot in the cluster has been assigned to a node.
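At its core, the coverage step marks every slot owned by some master and confirms that none is left over. The sketch below models only that step; it ignores the "all nodes agree" and open-slot checks the tool also performs. `SlotCoverage` is a hypothetical name. The second call uses the ranges the three original masters still hold in the failover section later in this post, to show what a gap looks like when 6388's slots are not counted.

```java
import java.util.BitSet;
import java.util.List;

// Sketch of the "All 16384 slots covered" idea: count slots no master owns.
final class SlotCoverage {
    static final int CLUSTER_SLOTS = 16384;

    // ranges: inclusive {first, last} pairs owned by masters.
    // Returns how many of the 16384 slots no master owns (0 = fully covered).
    static int uncoveredSlots(List<int[]> ranges) {
        BitSet covered = new BitSet(CLUSTER_SLOTS);
        for (int[] r : ranges) {
            covered.set(r[0], r[1] + 1); // set(from, to) excludes 'to'
        }
        return CLUSTER_SLOTS - covered.get(0, CLUSTER_SLOTS).cardinality();
    }

    public static void main(String[] args) {
        // Ranges from the creation output above: prints 0
        System.out.println(uncoveredSlots(List.of(
            new int[] {0, 5460}, new int[] {5461, 10922}, new int[] {10923, 16383})));
        // 6382/6383/6384 ranges from the failover section, without 6388's slots: prints 4434
        System.out.println(uncoveredSlots(List.of(
            new int[] {1394, 5460}, new int[] {7106, 10922}, new int[] {12318, 16383})));
    }
}
```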

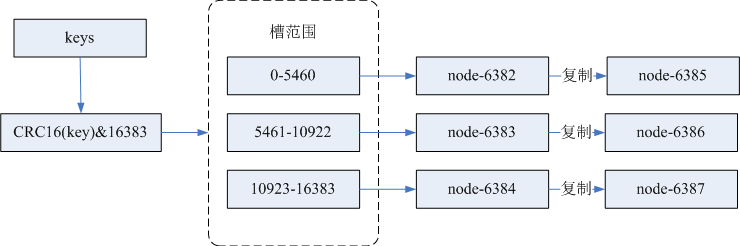

引入了槽之後,整個數據流向如下圖所示:

至於為什麼引入槽,請看註意點中第3點
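The Redis Cluster specification defines the key-to-slot step in that flow as CRC16(key) mod 16384, where CRC16 is the XMODEM variant (polynomial 0x1021, initial value 0). If the key contains a non-empty {...} hash tag, only the text inside the tag is hashed, which lets related keys share a slot. Here is a self-contained sketch; `KeySlot` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;

// Sketch of HASH_SLOT = CRC16(key) mod 16384 with hash-tag handling.
final class KeySlot {
    static final int CLUSTER_SLOTS = 16384;

    // CRC16-XMODEM: polynomial 0x1021, initial value 0, no reflection, no final XOR.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int bit = 0; bit < 8; bit++) {
                crc = (crc & 0x8000) != 0 ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF;
            }
        }
        return crc;
    }

    // Hash only the tag when the key has a '{' followed later by a '}' with
    // at least one character between the first '{' and the first '}' after it.
    static int slot(String key) {
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) {
                key = key.substring(open + 1, close);
            }
        }
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % CLUSTER_SLOTS;
    }

    public static void main(String[] args) {
        System.out.println(slot("name"));                                              // 5798
        System.out.println(slot("{user1000}.following") == slot("{user1000}.followers")); // true
    }
}
```

As a cross-check, `slot("name")` evaluates to 5798, the slot shown in this post's `-> Redirected to slot [5798]` lines. `CLUSTER KEYSLOT name` on a live node should return the same value.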

Simple Redis Cluster operations

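You can connect to the cluster through any node. The -c option enables cluster mode, in which redis-cli follows redirections automatically: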

```
[root@slave1 redis_cluster]# ./../src/redis-cli -h 192.168.11.202 -p 6382 -a myredis -c
```

Once connected, you can run ordinary Redis commands:

```
192.168.11.202:6382> get name
-> Redirected to slot [5798] located at 192.168.11.202:6388
"youzhibing"
192.168.11.202:6388> set weight 112
-> Redirected to slot [16280] located at 192.168.11.202:6384
OK
192.168.11.202:6384> get weight
"112"
192.168.11.202:6384>
```
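With -c, redis-cli follows the redirection itself. Without -c, the node replies with an error of the form MOVED <slot> <ip>:<port>. The Redis Cluster specification also defines an ASK error with the same shape, used while a slot is being migrated. Below is a minimal parser sketch; `Redirect` is a hypothetical type, and the sample values come from the session above.

```java
// Hypothetical parser for "MOVED <slot> <ip>:<port>" and "ASK <slot> <ip>:<port>" replies.
final class Redirect {
    final boolean ask;   // true for ASK (one-off, during migration), false for MOVED
    final int slot;
    final String host;
    final int port;

    private Redirect(boolean ask, int slot, String host, int port) {
        this.ask = ask;
        this.slot = slot;
        this.host = host;
        this.port = port;
    }

    static Redirect parse(String error) {
        String[] parts = error.trim().split("\\s+");
        if (parts.length != 3 || !(parts[0].equals("MOVED") || parts[0].equals("ASK"))) {
            throw new IllegalArgumentException("not a redirection: " + error);
        }
        int colon = parts[2].lastIndexOf(':');
        if (colon <= 0) {
            throw new IllegalArgumentException("bad address: " + parts[2]);
        }
        return new Redirect(
            parts[0].equals("ASK"),
            Integer.parseInt(parts[1]),
            parts[2].substring(0, colon),
            Integer.parseInt(parts[2].substring(colon + 1)));
    }

    public static void main(String[] args) {
        Redirect r = parse("MOVED 5798 192.168.11.202:6388");
        System.out.println(r.slot + " -> " + r.host + ":" + r.port); // 5798 -> 192.168.11.202:6388
    }
}
```

The two kinds differ in what a client should remember. MOVED means the slot now lives on the named node, so a client should update its slot map. ASK applies only to the next request, which is sent to the named node preceded by ASKING.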

Client (Jedis) connection and operations

redis-cluster.properties

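```properties
#cluster
redis.cluster.host=192.168.11.202
redis.cluster.port=6382,6383,6384,6385,6386,6387
# Redis read/write (socket) timeout, in milliseconds
redis.cluster.socketTimeout=1000
# Redis connection timeout, in milliseconds
redis.cluster.connectionTimeOut=3000
# Maximum number of attempts per command
redis.cluster.maxAttempts=10
# Maximum number of redirections
redis.cluster.maxRedirects=5
# Connection password (also the masters' password)
redis.password=myredis
# Connection pool
# Maximum number of connections in the pool (a negative value means no limit)
redis.pool.maxActive=150
# Maximum number of idle connections in the pool
redis.pool.maxIdle=10
# Minimum number of idle connections in the pool
redis.pool.minIdle=1
# Maximum wait when borrowing a connection, in milliseconds; a negative value blocks indefinitely; default -1
redis.pool.maxWaitMillis=3000
# Number of idle connections examined per eviction run
redis.pool.numTestsPerEvictionRun=50
# Interval between eviction runs, in milliseconds
redis.pool.timeBetweenEvictionRunsMillis=3000
# Minimum idle time before a connection may be evicted, in milliseconds
redis.pool.minEvictableIdleTimeMillis=1800000
# Evict a connection once its idle time exceeds this value, provided more than minIdle idle connections remain (milliseconds)
redis.pool.softMinEvictableIdleTimeMillis=10000
# Validate connections when borrowing them; default false
redis.pool.testOnBorrow=true
# Validate connections while they are idle
redis.pool.testWhileIdle=true
# Validate connections before returning them to the pool
redis.pool.testOnReturn=true
# Whether to block when the pool is exhausted: false throws an exception, true blocks until the timeout; default true
redis.pool.blockWhenExhausted=true
```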

RedisClusterConfig.java

```java
package com.lee.redis.config.cluster;

import java.util.HashSet;
import java.util.Set;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.PropertySource;
import org.springframework.util.StringUtils;

import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.JedisCluster;
import redis.clients.jedis.JedisPoolConfig;

import com.alibaba.fastjson.JSON;
import com.lee.redis.exception.LocalException;

@Configuration
@PropertySource("redis/redis-cluster.properties")
public class RedisClusterConfig {

    private static final Logger LOGGER = LoggerFactory.getLogger(RedisClusterConfig.class);

    // pool
    @Value("${redis.pool.maxActive}")
    private int maxTotal;
    @Value("${redis.pool.maxIdle}")
    private int maxIdle;
    @Value("${redis.pool.minIdle}")
    private int minIdle;
    @Value("${redis.pool.maxWaitMillis}")
    private long maxWaitMillis;
    @Value("${redis.pool.numTestsPerEvictionRun}")
    private int numTestsPerEvictionRun;
    @Value("${redis.pool.timeBetweenEvictionRunsMillis}")
    private long timeBetweenEvictionRunsMillis;
    @Value("${redis.pool.minEvictableIdleTimeMillis}")
    private long minEvictableIdleTimeMillis;
    @Value("${redis.pool.softMinEvictableIdleTimeMillis}")
    private long softMinEvictableIdleTimeMillis;
    @Value("${redis.pool.testOnBorrow}")
    private boolean testOnBorrow;
    @Value("${redis.pool.testWhileIdle}")
    private boolean testWhileIdle;
    @Value("${redis.pool.testOnReturn}")
    private boolean testOnReturn;
    @Value("${redis.pool.blockWhenExhausted}")
    private boolean blockWhenExhausted;

    // cluster
    @Value("${redis.cluster.host}")
    private String host;         // comma-separated hosts
    @Value("${redis.cluster.port}")
    private String port;         // one port group per host: groups separated by ';', ports by ','
    @Value("${redis.cluster.socketTimeout}")
    private int socketTimeout;
    @Value("${redis.cluster.connectionTimeOut}")
    private int connectionTimeOut;
    @Value("${redis.cluster.maxAttempts}")
    private int maxAttempts;
    @Value("${redis.cluster.maxRedirects}")
    private int maxRedirects;    // loaded but not passed to JedisCluster below
    @Value("${redis.password}")
    private String password;

    @Bean
    public JedisPoolConfig jedisPoolConfig() {
        JedisPoolConfig jedisPoolConfig = new JedisPoolConfig();
        jedisPoolConfig.setMaxTotal(maxTotal);
        jedisPoolConfig.setMaxIdle(maxIdle);
        jedisPoolConfig.setMinIdle(minIdle);
        jedisPoolConfig.setMaxWaitMillis(maxWaitMillis);
        jedisPoolConfig.setNumTestsPerEvictionRun(numTestsPerEvictionRun);
        jedisPoolConfig.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);
        jedisPoolConfig.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);
        jedisPoolConfig.setSoftMinEvictableIdleTimeMillis(softMinEvictableIdleTimeMillis);
        jedisPoolConfig.setTestOnBorrow(testOnBorrow);
        jedisPoolConfig.setTestWhileIdle(testWhileIdle);
        jedisPoolConfig.setTestOnReturn(testOnReturn);
        jedisPoolConfig.setBlockWhenExhausted(blockWhenExhausted);
        return jedisPoolConfig;
    }

    @Bean
    public JedisCluster jedisCluster(JedisPoolConfig jedisPoolConfig) {
        if (StringUtils.isEmpty(host)) {
            LOGGER.error("Redis cluster host is not configured");
            throw new LocalException("Redis cluster host is not configured");
        }
        if (StringUtils.isEmpty(port)) {
            LOGGER.error("Redis cluster port is not configured");
            throw new LocalException("Redis cluster port is not configured");
        }
        String[] hosts = host.split(",");
        String[] portGroups = port.split(";");
        if (hosts.length != portGroups.length) {
            LOGGER.error("Number of Redis cluster hosts does not match number of port groups");
            throw new LocalException("Number of Redis cluster hosts does not match number of port groups");
        }
        Set<HostAndPort> redisNodes = new HashSet<HostAndPort>();
        for (int i = 0; i < hosts.length; i++) {
            // Ports within one host's group are comma-separated
            for (String p : portGroups[i].split(",")) {
                redisNodes.add(new HostAndPort(hosts[i].trim(), Integer.parseInt(p.trim())));
            }
        }
        LOGGER.info("Set<RedisNode> : {}", JSON.toJSONString(redisNodes));
        // maxAttempts limits how many times one command is tried, redirections included
        return new JedisCluster(redisNodes, connectionTimeOut, socketTimeout,
                maxAttempts, password, jedisPoolConfig);
    }
}
```
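The host/port parsing in jedisCluster() is easy to get wrong, so here is the same logic pulled out into a dependency-free sketch. Hosts are comma-separated, each host's port list is separated from the next by ';', and ports inside one list are comma-separated. Plain "host:port" strings stand in for Jedis's HostAndPort so that the sketch runs without Jedis. `NodeListParser` is a hypothetical name.

```java
import java.util.LinkedHashSet;
import java.util.Set;

// Dependency-free sketch of the node-list parsing done in jedisCluster() above.
final class NodeListParser {

    static Set<String> parse(String hostProperty, String portProperty) {
        String[] hosts = hostProperty.split(",");
        String[] portGroups = portProperty.split(";");
        if (hosts.length != portGroups.length) {
            throw new IllegalArgumentException("host count does not match port-group count");
        }
        Set<String> nodes = new LinkedHashSet<>();
        for (int i = 0; i < hosts.length; i++) {
            for (String p : portGroups[i].split(",")) {
                // Integer.parseInt rejects a malformed port early, as the original does
                nodes.add(hosts[i].trim() + ":" + Integer.parseInt(p.trim()));
            }
        }
        return nodes;
    }

    public static void main(String[] args) {
        // Values from redis-cluster.properties: one host, one group of six ports
        System.out.println(parse("192.168.11.202", "6382,6383,6384,6385,6386,6387"));
    }
}
```

With the single host in redis-cluster.properties, the whole comma-separated port list forms one group. For two hosts, you would write something like `redis.cluster.host=10.0.0.1,10.0.0.2` and `redis.cluster.port=6382,6383;6384` (hypothetical addresses).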

ApplicationCluster.java

```java
package com.lee.redis;

import org.springframework.boot.Banner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.EnableAutoConfiguration;
import org.springframework.context.annotation.ComponentScan;
import org.springframework.context.annotation.Configuration;

@Configuration
@EnableAutoConfiguration
@ComponentScan(basePackages = {"com.lee.redis.config.cluster"})
public class ApplicationCluster {

    public static void main(String[] args) {
        SpringApplication app = new SpringApplication(ApplicationCluster.class);
        app.setBannerMode(Banner.Mode.OFF);     // do not print the banner
        // app.setApplicationContextClass();    // specify the Spring application context class
        app.setWebEnvironment(false);           // no embedded web server (Spring Boot 1.x API)
        app.run(args);
    }
}
```

RedisClusterTest.java

```java
package com.lee.redis;

import java.util.List;

import org.junit.Test;
import org.junit.runner.RunWith;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.test.context.junit4.SpringRunner;

import com.alibaba.fastjson.JSON;

import redis.clients.jedis.JedisCluster;

@RunWith(SpringRunner.class)
@SpringBootTest(classes = ApplicationCluster.class)
public class RedisClusterTest {

    private static final Logger LOGGER = LoggerFactory.getLogger(RedisClusterTest.class);

    @Autowired
    private JedisCluster jedisCluster;

    @Test
    public void initTest() {
        String name = jedisCluster.get("name");
        LOGGER.info("name is {}", name);

        // list operations
        long count = jedisCluster.lpush("list:names", "陳芸"); // lpush returns the list length after the push
        LOGGER.info("count = {}", count);
        long nameLen = jedisCluster.llen("list:names");
        LOGGER.info("list:names lens is {}", nameLen);
        // LRANGE's end index is inclusive, so the last element is at nameLen - 1
        List<String> nameList = jedisCluster.lrange("list:names", 0, nameLen - 1);
        LOGGER.info("names : {}", JSON.toJSONString(nameList));
    }
}
```

Running the initTest method in RedisClusterTest.java produces:

```
......
2018-03-06 09:56:05|INFO|com.lee.redis.RedisClusterTest|name is youzhibing
2018-03-06 09:56:05|INFO|com.lee.redis.RedisClusterTest|count = 3
2018-03-06 09:56:05|INFO|com.lee.redis.RedisClusterTest|list:names lens is 3
2018-03-06 09:56:05|INFO|com.lee.redis.RedisClusterTest|names : ["陳芸","沈復","沈復"]
......
```

Scaling and failover

Scaling out the cluster

New nodes: 192.168.11.202:6388 and 192.168.11.202:6389. Their configuration files are essentially the same as the earlier ones:

redis-6388.conf

```
port 6388
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6388.log"
dbfilename "dump-6388.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6388.conf"
```

redis-6389.conf

```
port 6389
bind 192.168.11.202
requirepass "myredis"
daemonize yes
logfile "6389.log"
dbfilename "dump-6389.rdb"
dir "/opt/soft/redis/cluster_data"
masterauth "myredis"
cluster-enabled yes
cluster-node-timeout 15000
cluster-config-file "nodes-6389.conf"
```

Start nodes 6388 and 6389

```
[root@slave1 redis_cluster]# cd /opt/redis-3.0.0/redis_cluster/
[root@slave1 redis_cluster]# ./../src/redis-server redis-6388.conf
[root@slave1 redis_cluster]# ./../src/redis-server redis-6389.conf
```

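Add them to the cluster. In each add-node command, the first address is the new node and the second is a node already in the cluster. e073db09e7aaed3c20d133726a26c8994932262c is the node ID of 6388.

```
[root@slave1 redis_cluster]# ./../src/redis-trib.rb add-node 192.168.11.202:6388 192.168.11.202:6382
# Add 6389 as a replica of 6388
[root@slave1 redis_cluster]# ./../src/redis-trib.rb add-node --slave --master-id e073db09e7aaed3c20d133726a26c8994932262c 192.168.11.202:6389 192.168.11.202:6382
```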

Migrating slots and data

Use the redis-trib.rb reshard command to reshard the slots:

```
[root@slave1 redis_cluster]# ./../src/redis-trib.rb reshard 192.168.11.202:6382
```

At the prompt "How many slots do you want to move (from 1 to 16384)?", which asks how many slots to move, we enter 4096.

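At "What is the receiving node ID?", which asks which master receives the moved slots, we enter 6388's node ID, e073db09e7aaed3c20d133726a26c8994932262c. Only one target node can be given; the node ID can simply be copied and pasted.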

Next, enter the source node IDs and finish with done. Here I entered all, which moves 4096 slots to 6388 from all the existing masters.

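Before migrating any data, the tool prints the plan for moving each slot from its source node to the target node. Check it, then enter yes to start the migration.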

If no errors occur, the migration completes successfully.

Cluster failover

All keys on 6388:

```
192.168.11.202:6388> keys *
1) "list:names"
2) "name"
192.168.11.202:6388>
```

Kill the 6388 process:

```
[root@slave1 redis_cluster]# ps -ef | grep redis-server | grep 6388
root 8280 1 0 Mar05 ? 00:05:07 ./../src/redis-server 192.168.11.202:6388 [cluster]
[root@slave1 redis_cluster]# kill -9 8280
# Check the cluster nodes
[root@slave1 redis_cluster]# ./../src/redis-cli -h 192.168.11.202 -p 6382 -a myredis -c
192.168.11.202:6382> cluster nodes
4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386 slave 3771e67edab547deff6bd290e1a07b23646906ee 0 1520304517911 5 connected
0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382 myself,master - 0 0 1 connected 1394-5460
a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387 slave 10b3789bb30889b5e6f67175620feddcd496d19e 0 1520304514886 6 connected
10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384 master - 0 1520304516904 3 connected 12318-16383
3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383 master - 0 1520304513879 2 connected 7106-10922
e073db09e7aaed3c20d133726a26c8994932262c 192.168.11.202:6388 master,fail - 1520304485678 1520304484473 10 disconnected 0-1393 5461-7105 10923-12317
37de0d2dc1c267760156d4230502fa96a6bba64d 192.168.11.202:6389 slave e073db09e7aaed3c20d133726a26c8994932262c 0 1520304515895 10 connected
7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385 slave 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 0 1520304518923 4 connected
192.168.11.202:6382>
# Look up the key name
192.168.11.202:6382> get name
-> Redirected to slot [5798] located at 192.168.11.202:6389
"youzhibing"
192.168.11.202:6389> keys *
1) "list:names"
2) "name"
192.168.11.202:6389>
```

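6389 has been promoted to master and has taken over 6388's former role. The cluster state is still ok. Once the failover has completed, clients are served as before; note that failure detection alone takes at least cluster-node-timeout (15000 ms here).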

Restart 6388:

```
[root@slave1 redis_cluster]# ./../src/redis-server redis-6388.conf
# Check the cluster nodes
192.168.11.202:6389> cluster nodes
0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382 master - 0 1520304789567 1 connected 1394-5460
37de0d2dc1c267760156d4230502fa96a6bba64d 192.168.11.202:6389 myself,master - 0 0 12 connected 0-1393 5461-7105 10923-12317
7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385 slave 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 0 1520304788061 1 connected
a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387 slave 10b3789bb30889b5e6f67175620feddcd496d19e 0 1520304787556 3 connected
3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383 master - 0 1520304786550 2 connected 7106-10922
e073db09e7aaed3c20d133726a26c8994932262c 192.168.11.202:6388 slave 37de0d2dc1c267760156d4230502fa96a6bba64d 0 1520304786047 12 connected
10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384 master - 0 1520304785542 3 connected 12318-16383
4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386 slave 3771e67edab547deff6bd290e1a07b23646906ee 0 1520304788561 2 connected
192.168.11.202:6389>
```

After restarting, 6388 is still part of the cluster, but now as a replica of 6389.
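Reading cluster nodes output by eye gets tedious. The sketch below answers the question "which master currently serves this slot?" from that output. It assumes the documented field order (node ID, address, flags, master ID, ping-sent, pong-recv, config epoch, link state, then slot entries) and skips masters flagged fail. `SlotOwnerLookup` is a hypothetical name. Fed the listing taken after the restart, it reports 6389 as the owner of slot 5798, which holds the key name.

```java
import java.util.Arrays;
import java.util.List;

// Sketch: find the serving master for a slot in `cluster nodes` output.
final class SlotOwnerLookup {

    // Returns the address of the master (not flagged "fail") serving 'slot', or null.
    static String ownerOf(String clusterNodes, int slot) {
        for (String line : clusterNodes.split("\n")) {
            String[] f = line.trim().split("\\s+");
            if (f.length < 8) {
                continue; // not a node line (e.g. a prompt)
            }
            List<String> flags = Arrays.asList(f[2].split(","));
            if (!flags.contains("master") || flags.contains("fail")) {
                continue;
            }
            for (int i = 8; i < f.length; i++) {
                if (f[i].startsWith("[")) {
                    continue; // migrating/importing marker, not an owned slot
                }
                String[] range = f[i].split("-");
                int first = Integer.parseInt(range[0]);
                int last = range.length > 1 ? Integer.parseInt(range[1]) : first;
                if (slot >= first && slot <= last) {
                    return f[1].split("@")[0]; // newer versions append "@cport"
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        String afterRestart =
            "37de0d2dc1c267760156d4230502fa96a6bba64d 192.168.11.202:6389 myself,master - 0 0 12 connected 0-1393 5461-7105 10923-12317\n"
          + "e073db09e7aaed3c20d133726a26c8994932262c 192.168.11.202:6388 slave 37de0d2dc1c267760156d4230502fa96a6bba64d 0 1520304786047 12 connected\n"
          + "3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383 master - 0 1520304786550 2 connected 7106-10922";
        System.out.println(ownerOf(afterRestart, 5798)); // 192.168.11.202:6389
    }
}
```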

Scaling in the cluster

1. Take nodes 6389 and 6388 offline

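The cluster node information shows that 6389 owns slots 0-1393, 5461-7105 and 10923-12317. We now move 0-1393 to 6382, 5461-7105 to 6383 and 10923-12317 to 6384. Each move is a separate redis-trib.rb reshard run with 6389's node ID as the only source: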

```
How many slots do you want to move (from 1 to 16384)?1394
What is the receiving node ID? 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:37de0d2dc1c267760156d4230502fa96a6bba64d
Source node #2:done
......
Do you want to proceed with the proposed reshard plan (yes/no)? yes

How many slots do you want to move (from 1 to 16384)? 1645
What is the receiving node ID? 3771e67edab547deff6bd290e1a07b23646906ee
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:37de0d2dc1c267760156d4230502fa96a6bba64d
Source node #2:done
......
Do you want to proceed with the proposed reshard plan (yes/no)? yes

How many slots do you want to move (from 1 to 16384)?1395
What is the receiving node ID? 10b3789bb30889b5e6f67175620feddcd496d19e
Please enter all the source node IDs.
  Type 'all' to use all the nodes as source nodes for the hash slots.
  Type 'done' once you entered all the source nodes IDs.
Source node #1:37de0d2dc1c267760156d4230502fa96a6bba64d
Source node #2:done
......
Do you want to proceed with the proposed reshard plan (yes/no)? yes
```
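The numbers entered at the three "How many slots do you want to move" prompts (1394, 1645 and 1395) are just the sizes of 6389's inclusive slot ranges. The sketch below computes them from the slot field of a cluster nodes line. `SlotRangeCounter` is a hypothetical name, and the sketch only does the arithmetic; it does not drive redis-trib.rb.

```java
import java.util.Arrays;

// Sketch: sizes of the inclusive slot ranges in a `cluster nodes` slot field.
final class SlotRangeCounter {

    // "0-1393 5461-7105 10923-12317" -> {1394, 1645, 1395}; a lone "N" counts as 1.
    static int[] counts(String slotField) {
        String[] entries = slotField.trim().split("\\s+");
        int[] counts = new int[entries.length];
        for (int i = 0; i < entries.length; i++) {
            String[] bounds = entries[i].split("-");
            int first = Integer.parseInt(bounds[0]);
            int last = bounds.length > 1 ? Integer.parseInt(bounds[1]) : first;
            counts[i] = last - first + 1;
        }
        return counts;
    }

    public static void main(String[] args) {
        // 6389's slots from the cluster nodes listing after the restart
        System.out.println(Arrays.toString(counts("0-1393 5461-7105 10923-12317"))); // [1394, 1645, 1395]
    }
}
```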

After the slots have been migrated, the cluster node information shows that 6388 no longer has any slots assigned:

```
192.168.11.202:6382> cluster nodes
3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383 master - 0 1520333368013 16 connected 5461-10922
0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382 myself,master - 0 0 13 connected 0-5460
10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384 master - 0 1520333372037 17 connected 10923-16383
4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386 slave 3771e67edab547deff6bd290e1a07b23646906ee 0 1520333370024 16 connected
a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387 slave 10b3789bb30889b5e6f67175620feddcd496d19e 0 1520333370525 17 connected
37de0d2dc1c267760156d4230502fa96a6bba64d 192.168.11.202:6389 slave e073db09e7aaed3c20d133726a26c8994932262c 0 1520333369017 15 connected
7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385 slave 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 0 1520333367008 13 connected
e073db09e7aaed3c20d133726a26c8994932262c 192.168.11.202:6388 master - 0 1520333371031 15 connected
```

2. Forget the nodes

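The nodes in a cluster constantly exchange node information through Gossip messages. A robust mechanism is therefore needed to make every node forget the nodes being taken offline, so that the other nodes stop exchanging Gossip messages with them.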

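Use redis-trib.rb del-node to take the nodes offline. Remove the replica first and the master second, which avoids unnecessary full resynchronization. The commands are: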

```
[root@slave1 redis_cluster]# ./../src/redis-trib.rb del-node 192.168.11.202:6389 37de0d2dc1c267760156d4230502fa96a6bba64d
[root@slave1 redis_cluster]# ./../src/redis-trib.rb del-node 192.168.11.202:6388 e073db09e7aaed3c20d133726a26c8994932262c
```

The cluster node information is now:

```
192.168.11.202:6382> cluster nodes
3771e67edab547deff6bd290e1a07b23646906ee 192.168.11.202:6383 master - 0 1520333828887 16 connected 5461-10922
0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 192.168.11.202:6382 myself,master - 0 0 13 connected 0-5460
10b3789bb30889b5e6f67175620feddcd496d19e 192.168.11.202:6384 master - 0 1520333827377 17 connected 10923-16383
4f36b08d8067a003af45dbe96a5363f348643509 192.168.11.202:6386 slave 3771e67edab547deff6bd290e1a07b23646906ee 0 1520333826880 16 connected
a583def1e6a059e4fdb3592557fd6ab691fd61ec 192.168.11.202:6387 slave 10b3789bb30889b5e6f67175620feddcd496d19e 0 1520333829892 17 connected
7649466ec006e0902a7f1578417247a6d5540c47 192.168.11.202:6385 slave 0ec055f9daa5b4f570e6a4c4d46e5285d16e0afe 0 1520333827879 13 connected
```

All 16384 slots are assigned, the cluster state is ok, and nodes 6389 and 6388 have been taken offline successfully.
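The replica-before-master rule can be captured in a small helper that emits the del-node commands in a safe order. This sketch assumes the same redis-trib.rb location and the argument style used above (the node's own address, then its node ID). `DelNodePlan` and its `Node` type are hypothetical. A master must already have zero slots, because redis-trib.rb refuses to delete a node that still holds slots.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch: order del-node commands so replicas are removed before masters.
final class DelNodePlan {

    // Hypothetical description of a node to remove.
    static final class Node {
        final String address;
        final String id;
        final boolean replica;

        Node(String address, String id, boolean replica) {
            this.address = address;
            this.id = id;
            this.replica = replica;
        }
    }

    // Replicas first, then masters (which must already hold 0 slots).
    static List<String> commands(List<Node> nodes) {
        List<String> cmds = new ArrayList<>();
        for (Node n : nodes) {
            if (n.replica) {
                cmds.add(command(n));
            }
        }
        for (Node n : nodes) {
            if (!n.replica) {
                cmds.add(command(n));
            }
        }
        return cmds;
    }

    private static String command(Node n) {
        return "./../src/redis-trib.rb del-node " + n.address + " " + n.id;
    }

    public static void main(String[] args) {
        List<Node> nodes = List.of(
            new Node("192.168.11.202:6388", "e073db09e7aaed3c20d133726a26c8994932262c", false),
            new Node("192.168.11.202:6389", "37de0d2dc1c267760156d4230502fa96a6bba64d", true));
        commands(nodes).forEach(System.out::println); // 6389's command is printed first
    }
}
```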

Notes

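1. When creating a cluster, redis-trib.rb tries to avoid placing a master and its replicas on the same machine, so it reorders the node list. The order of the list then determines the roles: masters first, followed by replicas.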

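2. When redis-trib.rb creates a cluster, every node address must refer to a node that holds no slots and no data; otherwise the tool refuses to create the cluster.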

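3. Virtual slots were adopted mainly to overcome a weakness of consistent-hash partitioning, which works poorly with a small number of nodes. The virtual slot range (0 to 16383 in Redis Cluster) is normally much larger than the number of nodes, and each node is responsible for some of the slots. This extra layer of slot mapping between data and nodes avoids the small-node-count problem.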

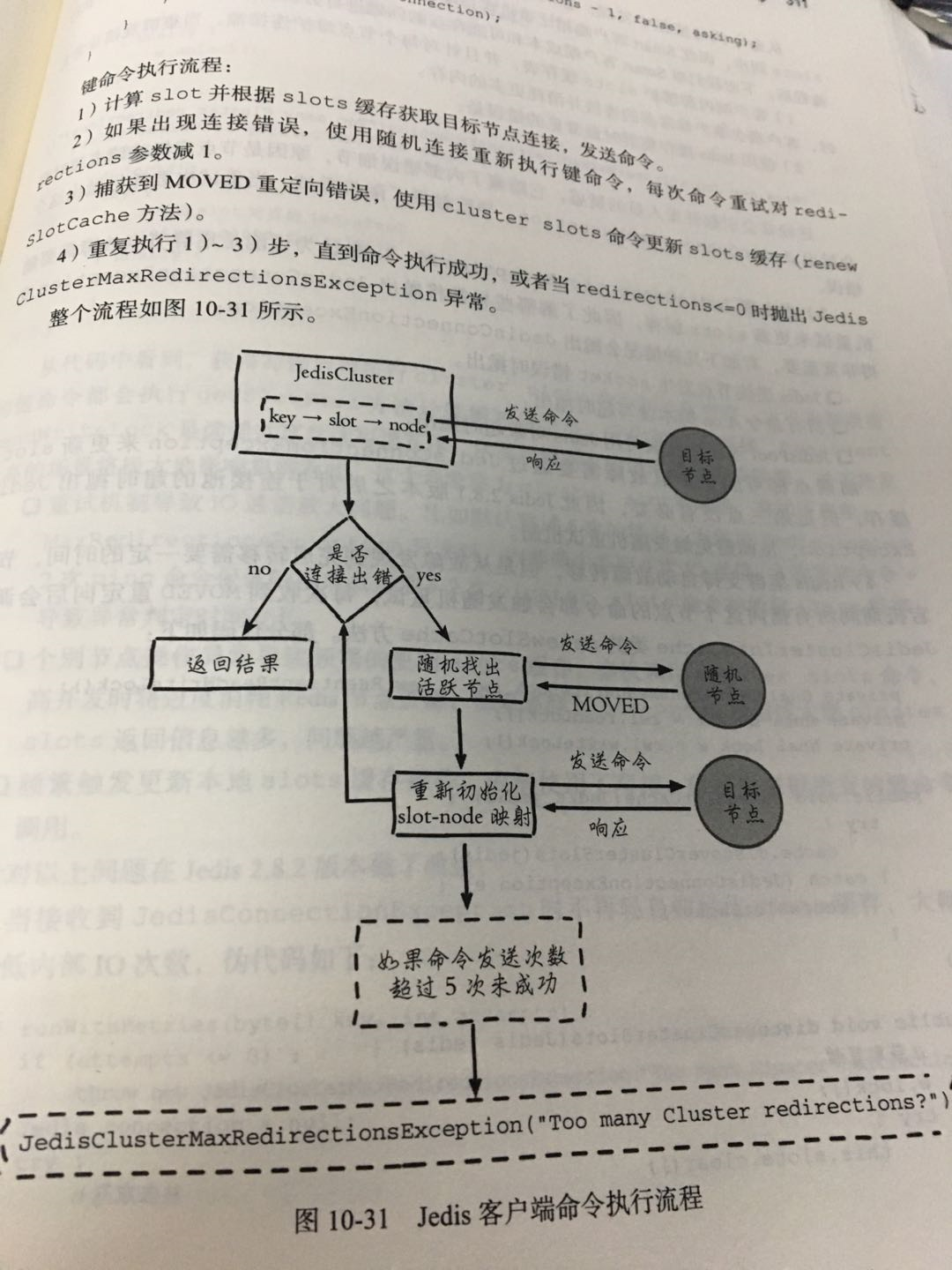

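4. When Jedis connects to a Redis Cluster, the configured node list needs only one reachable node, not all of them, because every node has information about the whole cluster. The execution flow of a Jedis key command is shown in the figure; interested readers can consult the Jedis source. Configuring all nodes is still the more robust choice.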

5. Scaling and failover are transparent to clients: as long as the cluster state is ok, client requests are answered normally.

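6. The cluster state is ok, and the cluster can serve requests, only when all 16384 slots are assigned to nodes. Whether scaling out or scaling in, make sure all 16384 slots remain correctly assigned.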
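The client-side flow from note 4 can be illustrated without a network: compute the slot, send the command to the node the client believes owns it, and on MOVED remember the new owner and retry. This is a simplified sketch under stated assumptions, not Jedis's implementation. The `send` function stands in for a real connection. The slot is passed in rather than computed with CRC16, to keep the sketch short. Real clients such as Jedis may refresh their whole slot map on MOVED rather than patching a single entry. `SlotCacheClient` is a hypothetical name.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiFunction;

// Network-free illustration of slot caching plus MOVED handling; not Jedis code.
final class SlotCacheClient {
    private final Map<Integer, String> slotToNode = new HashMap<>();
    private final String seedNode;                          // any reachable node (note 4)
    private final int maxAttempts;                          // cf. redis.cluster.maxAttempts
    private final BiFunction<String, String, String> send;  // (node, key) -> reply

    SlotCacheClient(String seedNode, int maxAttempts, BiFunction<String, String, String> send) {
        this.seedNode = seedNode;
        this.maxAttempts = maxAttempts;
        this.send = send;
    }

    String get(String key, int slot) {
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            String node = slotToNode.getOrDefault(slot, seedNode);
            String reply = send.apply(node, key);
            if (reply != null && reply.startsWith("MOVED ")) {
                // "MOVED <slot> <host:port>": remember the new owner and retry there
                String[] parts = reply.split(" ");
                slotToNode.put(Integer.parseInt(parts[1]), parts[2]);
                continue;
            }
            return reply;
        }
        throw new IllegalStateException("Too many redirections for key " + key);
    }

    String cachedNode(int slot) {
        return slotToNode.get(slot);
    }

    public static void main(String[] args) {
        // Fake cluster: only 6388 owns slot 5798; every other node replies MOVED.
        SlotCacheClient client = new SlotCacheClient("192.168.11.202:6382", 5,
            (node, key) -> node.equals("192.168.11.202:6388")
                ? "youzhibing" : "MOVED 5798 192.168.11.202:6388");
        System.out.println(client.get("name", 5798));  // youzhibing, after one redirect
        System.out.println(client.cachedNode(5798));   // 192.168.11.202:6388
    }
}
```

Because the owner is cached after the first redirect, later requests for the same slot go straight to 6388. This is why configuring a single seed node is enough.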

References

《Redis開發與運維》 (Redis Development and Operations)

http://www.redis.cn/topics/sentinel.html