Hadoop集群部署,就是以Cluster mode方式進行部署。本文是基於JDK1.7.0_79,hadoop2.7.5。 1.Hadoop的節點構成如下: HDFS daemon: NameNode, SecondaryNameNode, DataNode YARN damones: Resou ...

Hadoop集群部署,就是以Cluster mode方式進行部署。本文是基於JDK1.7.0_79,hadoop2.7.5。

1.Hadoop的節點構成如下:

HDFS daemon: NameNode, SecondaryNameNode, DataNode

YARN damones: ResourceManager, NodeManager, WebAppProxy

MapReduce Job History Server

本次測試的分散式環境為:Master 1台 (test166),Slave 1台(test167)

2.1 安裝JDK及下載解壓hadoop

JDK安裝可參考:https://www.cnblogs.com/Dylansuns/p/6974272.html 或者簡單安裝:https://www.cnblogs.com/shihaiming/p/5809553.html

從官網下載Hadoop最新版2.7.5

[hadoop@hadoop-master ~]$ su - hadoop

[hadoop@hadoop-master ~]$ cd /usr/hadoop/ [hadoop@hadoop-master ~]$ wget http://mirrors.shu.edu.cn/apache/hadoop/common/hadoop-2.7.5/hadoop-2.7.5.tar.gz

將hadoop解壓到/usr/hadoop/下

[hadoop@hadoop-master ~]$ tar zxvf /root/hadoop-2.7.5.tar.gz

結果:

[hadoop@hadoop-master ~]$ ll total 211852 drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Desktop drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Documents drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Downloads drwxr-xr-x. 10 hadoop hadoop 4096 Feb 22 01:36 hadoop-2.7.5 -rw-rw-r--. 1 hadoop hadoop 216929574 Dec 16 12:03 hadoop-2.7.5.tar.gz drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Music drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Pictures drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Public drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Templates drwxr-xr-x. 2 hadoop hadoop 6 Jan 31 23:41 Videos [hadoop@hadoop-master ~]$

2.2 在各節點上設置主機名及創建hadoop組和用戶

所有節點(master,slave)

1 [root@hadoop-master ~]# su - root 2 [root@hadoop-master ~]# vi /etc/hosts 3 10.86.255.166 hadoop-master 4 10.86.255.167 slave1 5 註意:修改hosts中,是立即生效的,無需source或者. 。

先使用

建立hadoop用戶組

新建用戶,useradd -d /usr/hadoop -g hadoop -m hadoop (新建用戶hadoop指定用戶主目錄/usr/hadoop 及所屬組hadoop)

passwd hadoop 設置hadoop密碼(這裡設置密碼為hadoop)

[root@hadoop-master ~]# groupadd hadoop

[root@hadoop-master ~]# useradd -d /usr/hadoop -g hadoop -m hadoop

[root@hadoop-master ~]# passwd hadoop

2.3 在各節點上設置SSH無密碼登錄

最終達到目的:即在master:節點執行 ssh hadoop@salve1不需要密碼,此處只需配置master訪問slave1免密。

su - hadoop

進入~/.ssh目錄

執行:ssh-keygen -t rsa,一路回車

生成兩個文件,一個私鑰,一個公鑰,在master1中執行:cp id_rsa.pub authorized_keys

[hadoop@hadoop-master ~]$ su - hadoop

[hadoop@hadoop-master ~]$ pwd /usr/hadoop [hadoop@hadoop-master ~]$ cd .ssh [hadoop@hadoop-master .ssh]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/usr/hadoop/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /usr/hadoop/.ssh/id_rsa. Your public key has been saved in /usr/hadoop/.ssh/id_rsa.pub. The key fingerprint is: 11:b2:23:8c:e7:32:1d:4c:2f:00:32:1a:15:43:bb:de hadoop@hadoop-master The key's randomart image is: +--[ RSA 2048]----+ |=+*.. . . | |oo O . o . | |. o B + . | | = + . . | | + o S | | . + | | . E | | | | | +-----------------+ [hadoop@hadoop-master .ssh]$

[hadoop@hadoop-master .ssh]$ cp id_rsa.pub authorized_keys

[hadoop@hadoop-master .ssh]$ ll

total 16

-rwx------. 1 hadoop hadoop 1230 Jan 31 23:27 authorized_keys

-rwx------. 1 hadoop hadoop 1675 Feb 23 19:07 id_rsa

-rwx------. 1 hadoop hadoop 402 Feb 23 19:07 id_rsa.pub

-rwx------. 1 hadoop hadoop 874 Feb 13 19:40 known_hosts

[hadoop@hadoop-master .ssh]$

2.3.1:本機無密鑰登錄

[hadoop@hadoop-master ~]$ pwd

/usr/hadoop

[hadoop@hadoop-master ~]$ chmod -R 700 .ssh

[hadoop@hadoop-master ~]$ cd .ssh

[hadoop@hadoop-master .ssh]$ chmod 600 authorized_keys

[hadoop@hadoop-master .ssh]$ ll

total 16

-rwx------. 1 hadoop hadoop 1230 Jan 31 23:27 authorized_keys

-rwx------. 1 hadoop hadoop 1679 Jan 31 23:26 id_rsa

-rwx------. 1 hadoop hadoop 410 Jan 31 23:26 id_rsa.pub

-rwx------. 1 hadoop hadoop 874 Feb 13 19:40 known_hosts

驗證:

沒有提示輸入密碼則表示本機無密鑰登錄成功,如果此步不成功,後續啟動hdfs腳本會要求輸入密碼

[hadoop@hadoop-master ~]$ ssh hadoop@hadoop-master Last login: Fri Feb 23 18:54:59 2018 from hadoop-master [hadoop@hadoop-master ~]$

2.3.2:master與其他節點無密鑰登錄

( 若已有authorized_keys,則執行ssh-copy-id ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@slave1 上面命令的功能ssh-copy-id將pub值寫入遠程機器的~/.ssh/authorized_key中

)

從master中把authorized_keys分發到各個結點上(會提示輸入密碼,輸入slave1相應的密碼即可):

scp /usr/hadoop/.ssh/authorized_keys hadoop@slave1:/home/master/.ssh

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

hadoop@slave1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'hadoop@slave1'" and check to make sure that only the key(s) you wanted were added.

[hadoop@hadoop-master .ssh]$

然後在各個節點對authorized_keys執行(一定要執行該步,否則會報錯):chmod 600 authorized_keys

保證.ssh 700,.ssh/authorized_keys 600許可權

測試如下(第一次ssh時會提示輸入yes/no,輸入yes即可):

[hadoop@hadoop-master ~]$ ssh hadoop@slave1 Last login: Fri Feb 23 18:40:10 2018 [hadoop@slave1 ~]$

[hadoop@slave1 ~]$ exit

logout

Connection to slave1 closed.

[hadoop@hadoop-master ~]$

2.4 設置Hadoop的環境變數

Master及slave1都需操作

[root@hadoop-master ~]# su - root [root@hadoop-master ~]# vi /etc/profile 末尾添加,保證任何路徑下可執行hadoop命令 JAVA_HOME=/usr/java/jdk1.7.0_79 CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar PATH=/usr/hadoop/hadoop-2.7.5/bin:$JAVA_HOME/bin:$PATH

讓設置生效

[root@hadoop-master ~]# source /etc/profile 或者 [root@hadoop-master ~]# . /etc/profile

Master設置hadoop環境

su - hadoop

1 # vi etc/hadoop/hadoop-env.sh 新增以下內容 2 export JAVA_HOME=/usr/java/jdk1.7.0_79 3 export HADOOP_HOME=/usr/hadoop/hadoop-2.7.5

此時hadoop安裝已完成,可執行hadoop命令,後續步驟為集群部署

[hadoop@hadoop-master ~]$ hadoop Usage: hadoop [--config confdir] [COMMAND | CLASSNAME] CLASSNAME run the class named CLASSNAME or where COMMAND is one of: fs run a generic filesystem user client version print the version jar <jar> run a jar file note: please use "yarn jar" to launch YARN applications, not this command. checknative [-a|-h] check native hadoop and compression libraries availability distcp <srcurl> <desturl> copy file or directories recursively archive -archiveName NAME -p <parent path> <src>* <dest> create a hadoop archive classpath prints the class path needed to get the credential interact with credential providers Hadoop jar and the required libraries daemonlog get/set the log level for each daemon trace view and modify Hadoop tracing settings Most commands print help when invoked w/o parameters. [hadoop@hadoop-master ~]$

2.5 Hadoop設定

2.5.0 開放埠50070

註:centos7版本對防火牆進行 加強,不再使用原來的iptables,啟用firewall

Master節點:

su - root firewall-cmd --state 查看狀態(若關閉,則先開啟systemctl start firewalld) firewall-cmd --list-ports 查看已開放的埠 開啟8000埠:firewall-cmd --zone=public(作用域) --add-port=8000/tcp(埠和訪問類型) --permanent(永久生效) firewall-cmd --zone=public --add-port=1521/tcp --permanent firewall-cmd --zone=public --add-port=3306/tcp --permanent firewall-cmd --zone=public --add-port=50070/tcp --permanent firewall-cmd --zone=public --add-port=8088/tcp --permanent firewall-cmd --zone=public --add-port=19888/tcp --permanent firewall-cmd --zone=public --add-port=9000/tcp --permanent firewall-cmd --zone=public --add-port=9001/tcp --permanent firewall-cmd --reload -重啟防火牆 firewall-cmd --list-ports 查看已開放的埠 systemctl stop firewalld.service停止防火牆 systemctl disable firewalld.service禁止防火牆開機啟動 關閉埠:firewall-cmd --zone= public --remove-port=8000/tcp --permanent

Slave1節點:

su - root

systemctl stop firewalld.service停止防火牆

systemctl disable firewalld.service禁止防火牆開機啟動

2.5.1 在Master節點的設定文件中指定Slave節點

[hadoop@hadoop-master hadoop]$ pwd /usr/hadoop/hadoop-2.7.5/etc/hadoop [hadoop@hadoop-master hadoop]$ vi slaves slave1

2.5.2 在各節點指定HDFS文件存儲的位置(預設是/tmp)

Master節點: namenode

創建目錄並賦予許可權

Su - root # mkdir -p /usr/local/hadoop-2.7.5/tmp/dfs/name # chmod -R 777 /usr/local/hadoop-2.7.5/tmp # chown -R hadoop:hadoop /usr/local/hadoop-2.7.5

Slave節點:datanode

創建目錄並賦予許可權,改變所有者

Su - root # mkdir -p /usr/local/hadoop-2.7.5/tmp/dfs/data # chmod -R 777 /usr/local/hadoop-2.7.5/tmp # chown -R hadoop:hadoop /usr/local/hadoop-2.7.5

2.5.3 在Master中設置配置文件(包括yarn)

su - hadoop

1 # vi etc/hadoop/core-site.xml 2 3 <configuration> 4 5 <property> 6 7 <name>fs.default.name</name> 8 9 <value>hdfs://hadoop-master:9000</value> 10 11 </property> 12 13 <property> 14 15 <name>hadoop.tmp.dir</name> 16 17 <value>/usr/local/hadoop-2.7.5/tmp</value> 18 19 </property> 20 21 </configuration>

1 # vi etc/hadoop/hdfs-site.xml 2 3 <configuration> 4 5 <property> 6 7 <name>dfs.replication</name> 8 9 <value>3</value> 10 11 </property> 12 13 <property> 14 15 <name>dfs.name.dir</name> 16 17 <value>/usr/local/hadoop-2.7.5/tmp/dfs/name</value> 18 19 </property> 20 21 <property> 22 23 <name>dfs.data.dir</name> 24 25 <value>/usr/local/hadoop-2.7.5/tmp/dfs/data</value> 26 27 </property> 28 29 30 31 </configuration>

1 #cp mapred-site.xml.template mapred-site.xml 2 3 # vi etc/hadoop/mapred-site.xml 4 5 <configuration> 6 7 <property> 8 9 <name>mapreduce.framework.name</name> 10 11 <value>yarn</value> 12 13 </property> 14 15 </configuration>

YARN設定

yarn的組成(Master節點: resourcemanager ,Slave節點: nodemanager)

以下僅在master操作,後面步驟會統一分發至salve1。

1 # vi etc/hadoop/yarn-site.xml 2 3 <configuration> 4 5 <property> 6 7 <name>yarn.resourcemanager.hostname</name> 8 9 <value>hadoop-master</value> 10 11 </property> 12 13 <property> 14 15 <name>yarn.nodemanager.aux-services</name> 16 17 <value>mapreduce_shuffle</value> 18 19 </property> 20 21 </configuration>

2.5.4將Master的文件分發至slave1節點。

cd /usr/hadoop scp -r hadoop-2.7.5 hadoop@hadoop-master:/usr/hadoop

2.5.5 Master上啟動job history server,Slave節點上指定

此步2.5.5可跳過

Mater:

啟動jobhistory daemon

# sbin/mr-jobhistory-daemon.sh start historyserver

確認

# jps

訪問Job History Server的web頁面

http://localhost:19888/

Slave節點:

1 # vi etc/hadoop/mapred-site.xml 2 3 <property> 4 5 <name>mapreduce.jobhistory.address</name> 6 7 <value>hadoop-master:10020</value> 8 9 </property>

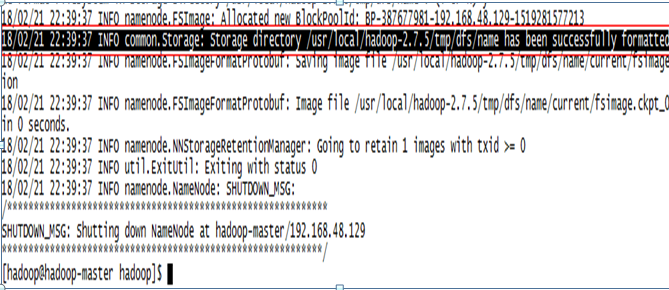

2.5.6 格式化HDFS(Master)

# hadoop namenode -format

Master結果:

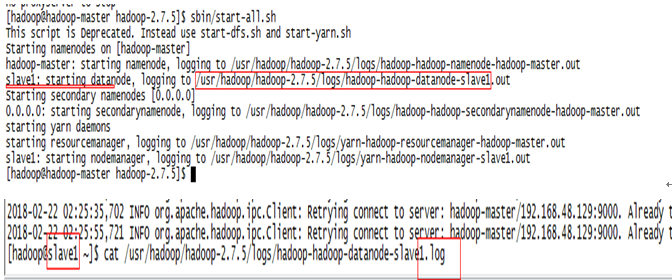

2.5.7 在Master上啟動daemon,Slave上的服務會一起啟動

啟動:

[hadoop@hadoop-master hadoop-2.7.5]$ pwd /usr/hadoop/hadoop-2.7.5[hadoop@hadoop-master hadoop-2.7.5]$ sbin/start-all.sh This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh Starting namenodes on [hadoop-master] hadoop-master: starting namenode, logging to /usr/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-namenode-hadoop-master.out slave1: starting datanode, logging to /usr/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-datanode-slave1.out Starting secondary namenodes [0.0.0.0] 0.0.0.0: starting secondarynamenode, logging to /usr/hadoop/hadoop-2.7.5/logs/hadoop-hadoop-secondarynamenode-hadoop-master.out starting yarn daemons starting resourcemanager, logging to /usr/hadoop/hadoop-2.7.5/logs/yarn-hadoop-resourcemanager-hadoop-master.out slave1: starting nodemanager, logging to /usr/hadoop/hadoop-2.7.5/logs/yarn-hadoop-nodemanager-slave1.out [hadoop@hadoop-master hadoop-2.7.5]$

確認

Master節點:

[hadoop@hadoop-master hadoop-2.7.5]$ jps 81209 NameNode 81516 SecondaryNameNode 82052 Jps 81744 ResourceManager

Slave節點:

[hadoop@slave1 ~]$ jps 58913 NodeManager 59358 Jps 58707 DataNode

停止(需要的時候再停止,後續步驟需running狀態):

[hadoop@hadoop-master hadoop-2.7.5]$ sbin/stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

Stopping namenodes on [hadoop-master]

hadoop-master: stopping namenode

slave1: stopping datanode

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

stopping yarn daemons

stopping resourcemanager

slave1: stopping nodemanager

no proxyserver to stop

2.5.8 創建HDFS

# hdfs dfs -mkdir /user # hdfs dfs -mkdir /user/test22

2.5.9 拷貝input文件到HDFS目錄下

# hdfs dfs -put etc/hadoop/*.sh /user/test22/input

查看

# hdfs dfs -ls /user/test22/input

2.5.10 執行hadoop job

統計單詞的例子,此時的output是hdfs中的目錄,hdfs dfs -ls可查看

# hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.5.jar wordcount /user/test22/input output

確認執行結果

# hdfs dfs -cat output/*

2.5.11 查看錯誤日誌

註:日誌在salve1的*.log中而不是在master或*.out中

2.6 Q&A

1. hdfs dfs -put 報錯如下,解決關閉master&salve防火牆

hdfs.DFSClient: Exception in createBlockOutputStream

java.net.NoRouteToHostException: No route to host