
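In the previous article we gained a basic understanding of the JIT in CoreCLR.

In this article we analyze the JIT implementation in more detail.

The JIT's source code lives mainly under https://github.com/dotnet/coreclr/tree/master/src/jit.

The best way to analyze in detail how a single function is JIT-compiled is to read its JitDump.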

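Viewing a JitDump requires building a Debug version of CoreCLR yourself (build instructions exist for both Windows and Linux).

After building, set the environment variable COMPlus_JitDump=Main, where Main can be replaced with the name of any other function, then run the program with that Debug build of CoreCLR.

A sample JitDump is available that contains the output for both Debug mode and Release mode.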

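Next, we walk through each stage of the JIT step by step, alongside the code.

The code below is based on CoreCLR 1.1.0 on x86/x64; newer versions may differ.

(Why 1.1.0? I spent half a year reading the JIT, and 2.0 had not been released when I started.)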

How the JIT is triggered

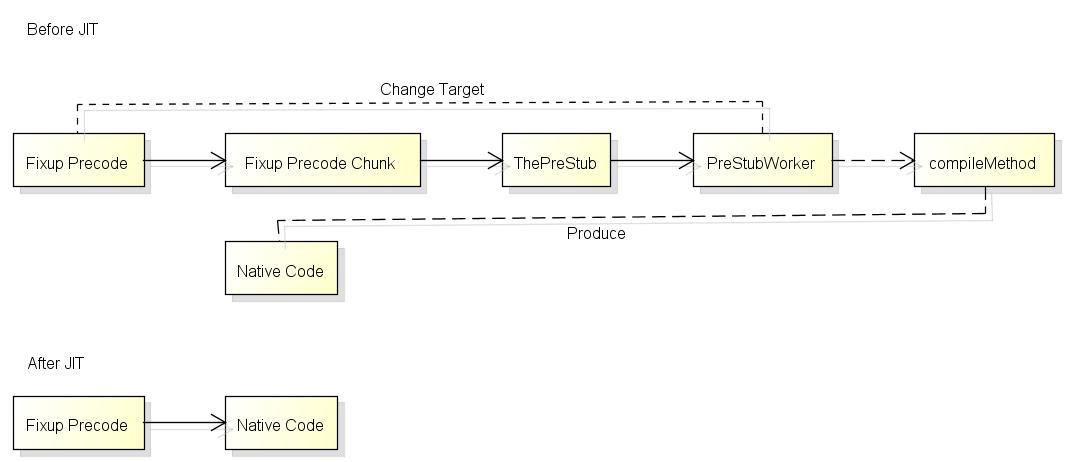

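As mentioned in the previous article, JIT compilation is triggered the first time a function is called, through a stub:

This is what the JIT stub actually looks like. Here is the state of the Fixup Precode before the function is called for the first time: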

Fixup Precode:

(lldb) di --frame --bytes

-> 0x7fff7c21f5a8: e8 2b 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5ad: 5e popq %rsi

0x7fff7c21f5ae: 19 05 e8 23 6c fe sbbl %eax, -0x193dc18(%rip)

0x7fff7c21f5b4: ff 5e a8 lcalll *-0x58(%rsi)

0x7fff7c21f5b7: 04 e8 addb $-0x18, %al

0x7fff7c21f5b9: 1b 6c fe ff sbbl -0x1(%rsi,%rdi,8), %ebp

0x7fff7c21f5bd: 5e popq %rsi

0x7fff7c21f5be: 00 03 addb %al, (%rbx)

0x7fff7c21f5c0: e8 13 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5c5: 5e popq %rsi

0x7fff7c21f5c6: b0 02 movb $0x2, %al

(lldb) di --frame --bytes

-> 0x7fff7c2061d8: e9 13 3f 9d 79 jmp 0x7ffff5bda0f0 ; PrecodeFixupThunk

0x7fff7c2061dd: cc int3

0x7fff7c2061de: cc int3

0x7fff7c2061df: cc int3

0x7fff7c2061e0: 49 ba 00 da d0 7b ff 7f 00 00 movabsq $0x7fff7bd0da00, %r10

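0x7fff7c2061ea: 40 e9 e0 ff ff ff jmp 0x7fff7c2061d0

In both listings only the first instruction is relevant. Note the bytes 5e 19 05 that follow callq: they are not assembly instructions but information about the function, which is explained further on.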
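To see why the callq in the first listing lands on 0x7fff7c2061d8, it helps to decode the instruction bytes by hand. The following is only an illustrative sketch (DecodeRel32Target is a hypothetical helper name, not CoreCLR code): a 5-byte call or jmp stores a signed 32-bit little-endian displacement that is relative to the address of the next instruction.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, not part of CoreCLR.
// Decodes the target of a 5-byte x86-64 near call (e8) or near jmp (e9).
// `addr` is the address of the opcode byte, `bytes` points at the 5 encoded bytes.
inline std::uint64_t DecodeRel32Target(std::uint64_t addr, const std::uint8_t* bytes)
{
    assert(bytes[0] == 0xe8 || bytes[0] == 0xe9);

    // Assemble the little-endian 32-bit displacement byte by byte.
    const std::uint32_t raw = static_cast<std::uint32_t>(bytes[1])
                            | (static_cast<std::uint32_t>(bytes[2]) << 8)
                            | (static_cast<std::uint32_t>(bytes[3]) << 16)
                            | (static_cast<std::uint32_t>(bytes[4]) << 24);
    const std::int32_t rel32 = static_cast<std::int32_t>(raw);

    // The displacement is relative to the end of the instruction (addr + 5).
    return addr + 5 + static_cast<std::int64_t>(rel32);
}
```

Applied to the bytes e8 2b 6c fe ff at 0x7fff7c21f5a8, the displacement is -0x193d5, which gives 0x7fff7c2061d8, the address shown by lldb.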

Next, execution jumps to the Fixup Precode Chunk. The code from this point on is shared by all functions:

Fixup Precode Chunk:

(lldb) di --frame --bytes

-> 0x7ffff5bda0f0 <PrecodeFixupThunk>: 58 popq %rax ; rax = 0x7fff7c21f5ad

0x7ffff5bda0f1 <PrecodeFixupThunk+1>: 4c 0f b6 50 02 movzbq 0x2(%rax), %r10 ; r10 = 0x05 (precode chunk index)

0x7ffff5bda0f6 <PrecodeFixupThunk+6>: 4c 0f b6 58 01 movzbq 0x1(%rax), %r11 ; r11 = 0x19 (methoddesc chunk index)

0x7ffff5bda0fb <PrecodeFixupThunk+11>: 4a 8b 44 d0 03 movq 0x3(%rax,%r10,8), %rax ; rax = 0x7fff7bdd5040 (methoddesc chunk)

0x7ffff5bda100 <PrecodeFixupThunk+16>: 4e 8d 14 d8 leaq (%rax,%r11,8), %r10 ; r10 = 0x7fff7bdd5108 (methoddesc)

0x7ffff5bda104 <PrecodeFixupThunk+20>: e9 37 ff ff ff jmp 0x7ffff5bda040 ; ThePreStub

The source for this code is in vm\amd64\unixasmhelpers.S:

LEAF_ENTRY PrecodeFixupThunk, _TEXT

pop rax // Pop the return address. It points right after the call instruction in the precode.

// Inline computation done by FixupPrecode::GetMethodDesc()

movzx r10,byte ptr [rax+2] // m_PrecodeChunkIndex

movzx r11,byte ptr [rax+1] // m_MethodDescChunkIndex

mov rax,qword ptr [rax+r10*8+3]

lea METHODDESC_REGISTER,[rax+r11*8]

// Tail call to prestub

jmp C_FUNC(ThePreStub)

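LEAF_END PrecodeFixupThunk, _TEXT

After popq %rax, rax points to the address right after the earlier callq. Using the index values stored there, the thunk obtains the MethodDesc of the function to compile, and then jumps to ThePreStub.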
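The address arithmetic in PrecodeFixupThunk can be replayed over a plain byte buffer. This is a sketch under assumptions: LookupMethodDesc and StoreLE64 are hypothetical names, and the layout (8-byte precodes followed by an 8-byte MethodDesc chunk base pointer) is inferred from the offsets in the assembly above rather than taken from the runtime headers.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: store a 64-bit value little-endian at `offset`.
inline void StoreLE64(std::vector<std::uint8_t>& mem, std::size_t offset, std::uint64_t value)
{
    for (std::size_t i = 0; i < 8; ++i)
        mem.at(offset + i) = static_cast<std::uint8_t>(value >> (8 * i));
}

// Hypothetical re-creation of the thunk's arithmetic.
// `retAddrOffset` plays the role of rax after `pop rax`: the offset of the
// byte right after the 5-byte call, which is the precode type byte.
inline std::uint64_t LookupMethodDesc(const std::vector<std::uint8_t>& mem, std::size_t retAddrOffset)
{
    const std::uint8_t methodDescChunkIndex = mem.at(retAddrOffset + 1); // [rax+1]
    const std::uint8_t precodeChunkIndex = mem.at(retAddrOffset + 2);    // [rax+2]

    // mov rax, qword ptr [rax + r10*8 + 3]: skip the remaining precodes in the
    // chunk and read the MethodDesc chunk base pointer stored after them.
    const std::size_t baseOffset = retAddrOffset + 3 + static_cast<std::size_t>(precodeChunkIndex) * 8;
    std::uint64_t chunkBase = 0;
    for (int i = 7; i >= 0; --i)
        chunkBase = (chunkBase << 8) | mem.at(baseOffset + static_cast<std::size_t>(i));

    // lea METHODDESC_REGISTER, [rax + r11*8]
    return chunkBase + static_cast<std::uint64_t>(methodDescChunkIndex) * 8;
}
```

With the values from the dump (index bytes 19 and 05, chunk base 0x7fff7bdd5040), the result is 0x7fff7bdd5040 + 0x19 * 8 = 0x7fff7bdd5108, which matches the r10 value annotated in the listing.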

ThePreStub:

(lldb) di --frame --bytes

-> 0x7ffff5bda040 <ThePreStub>: 55 pushq %rbp

0x7ffff5bda041 <ThePreStub+1>: 48 89 e5 movq %rsp, %rbp

0x7ffff5bda044 <ThePreStub+4>: 53 pushq %rbx

0x7ffff5bda045 <ThePreStub+5>: 41 57 pushq %r15

0x7ffff5bda047 <ThePreStub+7>: 41 56 pushq %r14

0x7ffff5bda049 <ThePreStub+9>: 41 55 pushq %r13

0x7ffff5bda04b <ThePreStub+11>: 41 54 pushq %r12

0x7ffff5bda04d <ThePreStub+13>: 41 51 pushq %r9

0x7ffff5bda04f <ThePreStub+15>: 41 50 pushq %r8

0x7ffff5bda051 <ThePreStub+17>: 51 pushq %rcx

0x7ffff5bda052 <ThePreStub+18>: 52 pushq %rdx

0x7ffff5bda053 <ThePreStub+19>: 56 pushq %rsi

0x7ffff5bda054 <ThePreStub+20>: 57 pushq %rdi

0x7ffff5bda055 <ThePreStub+21>: 48 8d a4 24 78 ff ff ff leaq -0x88(%rsp), %rsp ; allocate transition block

0x7ffff5bda05d <ThePreStub+29>: 66 0f 7f 04 24 movdqa %xmm0, (%rsp) ; fill transition block

0x7ffff5bda062 <ThePreStub+34>: 66 0f 7f 4c 24 10 movdqa %xmm1, 0x10(%rsp) ; fill transition block

0x7ffff5bda068 <ThePreStub+40>: 66 0f 7f 54 24 20 movdqa %xmm2, 0x20(%rsp) ; fill transition block

0x7ffff5bda06e <ThePreStub+46>: 66 0f 7f 5c 24 30 movdqa %xmm3, 0x30(%rsp) ; fill transition block

0x7ffff5bda074 <ThePreStub+52>: 66 0f 7f 64 24 40 movdqa %xmm4, 0x40(%rsp) ; fill transition block

0x7ffff5bda07a <ThePreStub+58>: 66 0f 7f 6c 24 50 movdqa %xmm5, 0x50(%rsp) ; fill transition block

0x7ffff5bda080 <ThePreStub+64>: 66 0f 7f 74 24 60 movdqa %xmm6, 0x60(%rsp) ; fill transition block

0x7ffff5bda086 <ThePreStub+70>: 66 0f 7f 7c 24 70 movdqa %xmm7, 0x70(%rsp) ; fill transition block

0x7ffff5bda08c <ThePreStub+76>: 48 8d bc 24 88 00 00 00 leaq 0x88(%rsp), %rdi ; arg 1 = transition block*

0x7ffff5bda094 <ThePreStub+84>: 4c 89 d6 movq %r10, %rsi ; arg 2 = methoddesc

0x7ffff5bda097 <ThePreStub+87>: e8 44 7e 11 00 callq 0x7ffff5cf1ee0 ; PreStubWorker at prestub.cpp:958

0x7ffff5bda09c <ThePreStub+92>: 66 0f 6f 04 24 movdqa (%rsp), %xmm0

0x7ffff5bda0a1 <ThePreStub+97>: 66 0f 6f 4c 24 10 movdqa 0x10(%rsp), %xmm1

0x7ffff5bda0a7 <ThePreStub+103>: 66 0f 6f 54 24 20 movdqa 0x20(%rsp), %xmm2

0x7ffff5bda0ad <ThePreStub+109>: 66 0f 6f 5c 24 30 movdqa 0x30(%rsp), %xmm3

0x7ffff5bda0b3 <ThePreStub+115>: 66 0f 6f 64 24 40 movdqa 0x40(%rsp), %xmm4

0x7ffff5bda0b9 <ThePreStub+121>: 66 0f 6f 6c 24 50 movdqa 0x50(%rsp), %xmm5

0x7ffff5bda0bf <ThePreStub+127>: 66 0f 6f 74 24 60 movdqa 0x60(%rsp), %xmm6

0x7ffff5bda0c5 <ThePreStub+133>: 66 0f 6f 7c 24 70 movdqa 0x70(%rsp), %xmm7

0x7ffff5bda0cb <ThePreStub+139>: 48 8d a4 24 88 00 00 00 leaq 0x88(%rsp), %rsp

0x7ffff5bda0d3 <ThePreStub+147>: 5f popq %rdi

0x7ffff5bda0d4 <ThePreStub+148>: 5e popq %rsi

0x7ffff5bda0d5 <ThePreStub+149>: 5a popq %rdx

0x7ffff5bda0d6 <ThePreStub+150>: 59 popq %rcx

0x7ffff5bda0d7 <ThePreStub+151>: 41 58 popq %r8

0x7ffff5bda0d9 <ThePreStub+153>: 41 59 popq %r9

0x7ffff5bda0db <ThePreStub+155>: 41 5c popq %r12

0x7ffff5bda0dd <ThePreStub+157>: 41 5d popq %r13

0x7ffff5bda0df <ThePreStub+159>: 41 5e popq %r14

0x7ffff5bda0e1 <ThePreStub+161>: 41 5f popq %r15

0x7ffff5bda0e3 <ThePreStub+163>: 5b popq %rbx

0x7ffff5bda0e4 <ThePreStub+164>: 5d popq %rbp

0x7ffff5bda0e5 <ThePreStub+165>: 48 ff e0 jmpq *%rax

%rax should be patched fixup precode = 0x7fff7c21f5a8

(%rsp) should be the return address before calling "Fixup Precode"

It looks long, but what it does is simple. Its source is in vm\amd64\theprestubamd64.S:

NESTED_ENTRY ThePreStub, _TEXT, NoHandler

PROLOG_WITH_TRANSITION_BLOCK 0, 0, 0, 0, 0

//

// call PreStubWorker

//

lea rdi, [rsp + __PWTB_TransitionBlock] // pTransitionBlock*

mov rsi, METHODDESC_REGISTER

call C_FUNC(PreStubWorker)

EPILOG_WITH_TRANSITION_BLOCK_TAILCALL

TAILJMP_RAX

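NESTED_END ThePreStub, _TEXT

It saves the registers to the stack, calls the function PreStubWorker, and restores the registers from the stack once the call returns.

It then jumps to the value returned by PreStubWorker, which is the address of the patched Fixup Precode (0x7fff7c21f5a8).

PreStubWorker is a function written in C++ (exported with C linkage). It calls the JIT's compile function and then patches the Fixup Precode.

Patching reads the 5e byte seen earlier; 5e indicates that the precode type is PRECODE_FIXUP, and the function that applies the patch is FixupPrecode::SetTargetInterlocked.

After patching, the Fixup Precode looks like this: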

Fixup Precode:

(lldb) di --bytes -s 0x7fff7c21f5a8

0x7fff7c21f5a8: e9 a3 87 3a 00 jmp 0x7fff7c5c7d50

0x7fff7c21f5ad: 5f popq %rdi

0x7fff7c21f5ae: 19 05 e8 23 6c fe sbbl %eax, -0x193dc18(%rip)

0x7fff7c21f5b4: ff 5e a8 lcalll *-0x58(%rsi)

0x7fff7c21f5b7: 04 e8 addb $-0x18, %al

0x7fff7c21f5b9: 1b 6c fe ff sbbl -0x1(%rsi,%rdi,8), %ebp

0x7fff7c21f5bd: 5e popq %rsi

0x7fff7c21f5be: 00 03 addb %al, (%rbx)

0x7fff7c21f5c0: e8 13 6c fe ff callq 0x7fff7c2061d8

0x7fff7c21f5c5: 5e popq %rsi

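0x7fff7c21f5c6: b0 02 movb $0x2, %al

The next time the function is called, execution jumps straight to the compiled code.

The JIT stub design lets the runtime compile only the functions that actually run, which greatly reduces program startup time, and the cost of every later call (a single jmp) is very small.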
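Comparing the two dumps, the patch changes the opcode from e8 (call) to e9 (jmp), rewrites the 32-bit displacement, and changes the type byte from 5e to 5f, while the two index bytes stay the same. The sketch below reproduces that encoding. It is not the body of FixupPrecode::SetTargetInterlocked, which this article does not show; EncodePatchedPrecode and PatchPrecode are hypothetical names, and using a single 64-bit compare-and-swap is an assumption made for illustration.

```cpp
#include <atomic>
#include <cstdint>

// Hypothetical helper: build the patched form of an 8-byte fixup precode.
// `oldBytes` is the unpatched precode packed little-endian (byte 0 = opcode),
// `addr` is the precode's address, `target` is the compiled code's address.
inline std::uint64_t EncodePatchedPrecode(std::uint64_t oldBytes, std::uint64_t addr, std::uint64_t target)
{
    // jmp rel32 is relative to the end of the 5-byte instruction.
    const std::uint32_t rel32 = static_cast<std::uint32_t>(target - (addr + 5));

    std::uint64_t patched = oldBytes & 0xffff000000000000ULL; // keep bytes 6 and 7 (indexes)
    patched |= 0xe9ULL;                                       // byte 0: jmp opcode
    patched |= static_cast<std::uint64_t>(rel32) << 8;        // bytes 1-4: displacement
    patched |= 0x5fULL << 40;                                 // byte 5: type byte after patching
    return patched;
}

// Hypothetical helper: apply the patch atomically so that a racing thread
// sees either the old call or the complete new jmp, never a mix of both.
inline bool PatchPrecode(std::atomic<std::uint64_t>& precode, std::uint64_t addr, std::uint64_t target)
{
    std::uint64_t expected = precode.load();
    if ((expected & 0xff) != 0xe8)
        return false; // no longer a call: someone else already patched it
    return precode.compare_exchange_strong(expected, EncodePatchedPrecode(expected, addr, target));
}
```

For the precode at 0x7fff7c21f5a8 and the target 0x7fff7c5c7d50, the displacement is 0x3a87a3, so the eight bytes become e9 a3 87 3a 00 5f 19 05, as in the second dump.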

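Note that calling a virtual method follows a slightly different flow. The address of a virtual method is stored in the method table.

Patching updates the method table rather than the Precode, so on the next call the method table slot already points to the compiled code. If you are interested, try analyzing this yourself.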
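The idea of patching a table slot instead of a precode can be shown with a toy model. Everything here (ToyMethodTable, Prestub, CompiledBody, CallVirtual) is a hypothetical name invented for the illustration and has no counterpart with that name in CoreCLR: the slot starts out pointing at a stub, the first call "compiles" the method and overwrites the slot, and later calls go directly to the compiled body.

```cpp
#include <atomic>

struct ToyMethodTable;
using EntryPoint = int (*)(ToyMethodTable&, int);

inline int Prestub(ToyMethodTable& mt, int x);

// A toy method table with one virtual slot.
struct ToyMethodTable
{
    std::atomic<EntryPoint> slot{&Prestub}; // initially points at the stub
    int compileCount = 0;                   // how many times we "compiled"
};

// Stands in for the JIT-compiled method body.
inline int CompiledBody(ToyMethodTable&, int x)
{
    return x * 2;
}

// Stands in for the prestub path: compile once, backpatch the slot, then run.
inline int Prestub(ToyMethodTable& mt, int x)
{
    ++mt.compileCount;            // pretend to JIT-compile the method
    mt.slot.store(&CompiledBody); // backpatch the table slot, not a precode
    return CompiledBody(mt, x);   // continue into the freshly compiled code
}

// A virtual call is an indirect call through the slot.
inline int CallVirtual(ToyMethodTable& mt, int x)
{
    return mt.slot.load()(mt, x);
}
```

Calling CallVirtual twice on the same table goes through Prestub only the first time; the second call reads the updated slot and never reaches the stub.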

Next, let's look at what PreStubWorker does internally.

The JIT entry point

The source code of PreStubWorker is as follows:

extern "C" PCODE STDCALL PreStubWorker(TransitionBlock * pTransitionBlock, MethodDesc * pMD)

{

PCODE pbRetVal = NULL;

BEGIN_PRESERVE_LAST_ERROR;

STATIC_CONTRACT_THROWS;

STATIC_CONTRACT_GC_TRIGGERS;

STATIC_CONTRACT_MODE_COOPERATIVE;

STATIC_CONTRACT_ENTRY_POINT;

MAKE_CURRENT_THREAD_AVAILABLE();

#ifdef _DEBUG

Thread::ObjectRefFlush(CURRENT_THREAD);

#endif

FrameWithCookie<PrestubMethodFrame> frame(pTransitionBlock, pMD);

PrestubMethodFrame * pPFrame = &frame;

pPFrame->Push(CURRENT_THREAD);

INSTALL_MANAGED_EXCEPTION_DISPATCHER;

INSTALL_UNWIND_AND_CONTINUE_HANDLER;

ETWOnStartup (PrestubWorker_V1,PrestubWorkerEnd_V1);

_ASSERTE(!NingenEnabled() && "You cannot invoke managed code inside the ngen compilation process.");

// Running the PreStubWorker on a method causes us to access its MethodTable

g_IBCLogger.LogMethodDescAccess(pMD);

// Make sure the method table is restored, and method instantiation if present

pMD->CheckRestore();

CONSISTENCY_CHECK(GetAppDomain()->CheckCanExecuteManagedCode(pMD));

// Note this is redundant with the above check but we do it anyway for safety

//

// This has been disabled so we have a better chance of catching these. Note that this check is

// NOT sufficient for domain neutral and ngen cases.

//

// pMD->EnsureActive();

MethodTable *pDispatchingMT = NULL;

if (pMD->IsVtableMethod())

{

OBJECTREF curobj = pPFrame->GetThis();

if (curobj != NULL) // Check for virtual function called non-virtually on a NULL object

{

pDispatchingMT = curobj->GetTrueMethodTable();

#ifdef FEATURE_ICASTABLE

if (pDispatchingMT->IsICastable())

{

MethodTable *pMDMT = pMD->GetMethodTable();

TypeHandle objectType(pDispatchingMT);

TypeHandle methodType(pMDMT);

GCStress<cfg_any>::MaybeTrigger();

INDEBUG(curobj = NULL); // curobj is unprotected and CanCastTo() can trigger GC

if (!objectType.CanCastTo(methodType))

{

// Apperantly ICastable magic was involved when we chose this method to be called

// that's why we better stick to the MethodTable it belongs to, otherwise

// DoPrestub() will fail not being able to find implementation for pMD in pDispatchingMT.

pDispatchingMT = pMDMT;

}

}

#endif // FEATURE_ICASTABLE

// For value types, the only virtual methods are interface implementations.

// Thus pDispatching == pMT because there

// is no inheritance in value types. Note the BoxedEntryPointStubs are shared

// between all sharable generic instantiations, so the == test is on

// canonical method tables.

#ifdef _DEBUG

MethodTable *pMDMT = pMD->GetMethodTable(); // put this here to see what the MT is in debug mode

_ASSERTE(!pMD->GetMethodTable()->IsValueType() ||

(pMD->IsUnboxingStub() && (pDispatchingMT->GetCanonicalMethodTable() == pMDMT->GetCanonicalMethodTable())));

#endif // _DEBUG

}

}

GCX_PREEMP_THREAD_EXISTS(CURRENT_THREAD);

pbRetVal = pMD->DoPrestub(pDispatchingMT);

UNINSTALL_UNWIND_AND_CONTINUE_HANDLER;

UNINSTALL_MANAGED_EXCEPTION_DISPATCHER;

{

HardwareExceptionHolder

// Give debugger opportunity to stop here

ThePreStubPatch();

}

pPFrame->Pop(CURRENT_THREAD);

POSTCONDITION(pbRetVal != NULL);

END_PRESERVE_LAST_ERROR;

return pbRetVal;

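}

This function takes two parameters.

The first is a TransitionBlock, which is really just a pointer into the stack where the saved registers are stored.

The second is a MethodDesc, which describes the function being compiled; in lldb, dumpmd pMD shows its details.

It then calls MethodDesc::DoPrestub; if the function is a virtual method, it passes in the MethodTable of the this object's type.

The source code of MethodDesc::DoPrestub is as follows: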

PCODE MethodDesc::DoPrestub(MethodTable *pDispatchingMT)

{

CONTRACT(PCODE)

{

STANDARD_VM_CHECK;

POSTCONDITION(RETVAL != NULL);

}

CONTRACT_END;

Stub *pStub = NULL;

PCODE pCode = NULL;

Thread *pThread = GetThread();

MethodTable *pMT = GetMethodTable();

// Running a prestub on a method causes us to access its MethodTable

g_IBCLogger.LogMethodDescAccess(this);

// A secondary layer of defense against executing code in inspection-only assembly.

// This should already have been taken care of by not allowing inspection assemblies

// to be activated. However, this is a very inexpensive piece of insurance in the name

// of security.

if (IsIntrospectionOnly())

{

_ASSERTE(!"A ReflectionOnly assembly reached the prestub. This should not have happened.");

COMPlusThrow(kInvalidOperationException, IDS_EE_CODEEXECUTION_IN_INTROSPECTIVE_ASSEMBLY);

}

if (ContainsGenericVariables())

{

COMPlusThrow(kInvalidOperationException, IDS_EE_CODEEXECUTION_CONTAINSGENERICVAR);

}

/************************** DEBUG CHECKS *************************/

/*-----------------------------------------------------------------

// Halt if needed, GC stress, check the sharing count etc.

*/

#ifdef _DEBUG

static unsigned ctr = 0;

ctr++;

if (g_pConfig->ShouldPrestubHalt(this))

{

_ASSERTE(!"PreStubHalt");

}

LOG((LF_CLASSLOADER, LL_INFO10000, "In PreStubWorker for %s::%s\n",

m_pszDebugClassName, m_pszDebugMethodName));

// This is a nice place to test out having some fatal EE errors. We do this only in a checked build, and only

// under the InjectFatalError key.

if (g_pConfig->InjectFatalError() == 1)

{

EEPOLICY_HANDLE_FATAL_ERROR(COR_E_EXECUTIONENGINE);

}

else if (g_pConfig->InjectFatalError() == 2)

{

EEPOLICY_HANDLE_FATAL_ERROR(COR_E_STACKOVERFLOW);

}

else if (g_pConfig->InjectFatalError() == 3)

{

TestSEHGuardPageRestore();

}

// Useful to test GC with the prestub on the call stack

if (g_pConfig->ShouldPrestubGC(this))

{

GCX_COOP();

GCHeap::GetGCHeap()->GarbageCollect(-1);

}

#endif // _DEBUG

STRESS_LOG1(LF_CLASSLOADER, LL_INFO10000, "Prestubworker: method %pM\n", this);

GCStress<cfg_any, EeconfigFastGcSPolicy, CoopGcModePolicy>::MaybeTrigger();

// Are we in the prestub because of a rejit request? If so, let the ReJitManager

// take it from here.

pCode = ReJitManager::DoReJitIfNecessary(this);

if (pCode != NULL)

{

// A ReJIT was performed, so nothing left for DoPrestub() to do. Return now.

//

// The stable entrypoint will either be a pointer to the original JITted code

// (with a jmp at the top to jump to the newly-rejitted code) OR a pointer to any

// stub code that must be executed first (e.g., a remoting stub), which in turn

// will call the original JITted code (which then jmps to the newly-rejitted

// code).

RETURN GetStableEntryPoint();

}

#ifdef FEATURE_PREJIT

// If this method is the root of a CER call graph and we've recorded this fact in the ngen image then we're in the prestub in

// order to trip any runtime level preparation needed for this graph (P/Invoke stub generation/library binding, generic

// dictionary prepopulation etc.).

GetModule()->RestoreCer(this);

#endif // FEATURE_PREJIT

#ifdef FEATURE_COMINTEROP

/************************** INTEROP *************************/

/*-----------------------------------------------------------------

// Some method descriptors are COMPLUS-to-COM call descriptors

// they are not your every day method descriptors, for example

// they don't have an IL or code.

*/

if (IsComPlusCall() || IsGenericComPlusCall())

{

pCode = GetStubForInteropMethod(this);

GetPrecode()->SetTargetInterlocked(pCode);

RETURN GetStableEntryPoint();

}

#endif // FEATURE_COMINTEROP

// workaround: This is to handle a punted work item dealing with a skipped module constructor

// due to appdomain unload. Basically shared code was JITted in domain A, and then

// this caused a link to another shared module with a module CCTOR, which was skipped

// or aborted in another appdomain we were trying to propagate the activation to.

//

// Note that this is not a fix, but that it just minimizes the window in which the

// issue can occur.

if (pThread->IsAbortRequested())

{

pThread->HandleThreadAbort();

}

/************************** CLASS CONSTRUCTOR ********************/

// Make sure .cctor has been run

if (IsClassConstructorTriggeredViaPrestub())

{

pMT->CheckRunClassInitThrowing();

}

/************************** BACKPATCHING *************************/

// See if the addr of code has changed from the pre-stub

#ifdef FEATURE_INTERPRETER

if (!IsReallyPointingToPrestub())

#else

if (!IsPointingToPrestub())

#endif

{

LOG((LF_CLASSLOADER, LL_INFO10000,

" In PreStubWorker, method already jitted, backpatching call point\n"));

RETURN DoBackpatch(pMT, pDispatchingMT, TRUE);

}

// record if remoting needs to intercept this call

BOOL fRemotingIntercepted = IsRemotingInterceptedViaPrestub();

BOOL fReportCompilationFinished = FALSE;

/************************** CODE CREATION *************************/

if (IsUnboxingStub())

{

pStub = MakeUnboxingStubWorker(this);

}

#ifdef FEATURE_REMOTING

else if (pMT->IsInterface() && !IsStatic() && !IsFCall())

{

pCode = CRemotingServices::GetDispatchInterfaceHelper(this);

GetOrCreatePrecode();

}

#endif // FEATURE_REMOTING

#if defined(FEATURE_SHARE_GENERIC_CODE)

else if (IsInstantiatingStub())

{

pStub = MakeInstantiatingStubWorker(this);

}

#endif // defined(FEATURE_SHARE_GENERIC_CODE)

else if (IsIL() || IsNoMetadata())

{

// remember if we need to backpatch the MethodTable slot

BOOL fBackpatch = !fRemotingIntercepted

&& !IsEnCMethod();

#ifdef FEATURE_PREJIT

//

// See if we have any prejitted code to use.

//

pCode = GetPreImplementedCode();

#ifdef PROFILING_SUPPORTED

if (pCode != NULL)

{

BOOL fShouldSearchCache = TRUE;

{

BEGIN_PIN_PROFILER(CORProfilerTrackCacheSearches());

g_profControlBlock.pProfInterface->

JITCachedFunctionSearchStarted((FunctionID) this,

&fShouldSearchCache);

END_PIN_PROFILER();

}

if (!fShouldSearchCache)

{

#ifdef FEATURE_INTERPRETER

SetNativeCodeInterlocked(NULL, pCode, FALSE);

#else

SetNativeCodeInterlocked(NULL, pCode);

#endif

_ASSERTE(!IsPreImplemented());

pCode = NULL;

}

}

#endif // PROFILING_SUPPORTED

if (pCode != NULL)

{

LOG((LF_ZAP, LL_INFO10000,

"ZAP: Using code" FMT_ADDR "for %s.%s sig=\"%s\" (token %x).\n",

DBG_ADDR(pCode),

m_pszDebugClassName,

m_pszDebugMethodName,

m_pszDebugMethodSignature,

GetMemberDef()));

TADDR pFixupList = GetFixupList();

if (pFixupList != NULL)

{

Module *pZapModule = GetZapModule();

_ASSERTE(pZapModule != NULL);

if (!pZapModule->FixupDelayList(pFixupList))

{

_ASSERTE(!"FixupDelayList failed");

ThrowHR(COR_E_BADIMAGEFORMAT);

}

}

#ifdef HAVE_GCCOVER

if (GCStress<cfg_instr_ngen>::IsEnabled())

SetupGcCoverage(this, (BYTE*) pCode);

#endif // HAVE_GCCOVER

#ifdef PROFILING_SUPPORTED

/*

* This notifies the profiler that a search to find a

* cached jitted function has been made.

*/

{

BEGIN_PIN_PROFILER(CORProfilerTrackCacheSearches());

g_profControlBlock.pProfInterface->

JITCachedFunctionSearchFinished((FunctionID) this, COR_PRF_CACHED_FUNCTION_FOUND);

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

}

//

// If not, try to jit it

//

#endif // FEATURE_PREJIT

#ifdef FEATURE_READYTORUN

if (pCode == NULL)

{

Module * pModule = GetModule();

if (pModule->IsReadyToRun())

{

pCode = pModule->GetReadyToRunInfo()->GetEntryPoint(this);

if (pCode != NULL)

fReportCompilationFinished = TRUE;

}

}

#endif // FEATURE_READYTORUN

if (pCode == NULL)

{

NewHolder<COR_ILMETHOD_DECODER> pHeader(NULL);

// Get the information on the method

if (!IsNoMetadata())

{

COR_ILMETHOD* ilHeader = GetILHeader(TRUE);

if(ilHeader == NULL)

{

#ifdef FEATURE_COMINTEROP

// Abstract methods can be called through WinRT derivation if the deriving type

// is not implemented in managed code, and calls through the CCW to the abstract

// method. Throw a sensible exception in that case.

if (pMT->IsExportedToWinRT() && IsAbstract())

{

COMPlusThrowHR(E_NOTIMPL);

}

#endif // FEATURE_COMINTEROP

COMPlusThrowHR(COR_E_BADIMAGEFORMAT, BFA_BAD_IL);

}

COR_ILMETHOD_DECODER::DecoderStatus status = COR_ILMETHOD_DECODER::FORMAT_ERROR;

{

// Decoder ctor can AV on a malformed method header

AVInRuntimeImplOkayHolder AVOkay;

pHeader = new COR_ILMETHOD_DECODER(ilHeader, GetMDImport(), &status);

if(pHeader == NULL)

status = COR_ILMETHOD_DECODER::FORMAT_ERROR;

}

if (status == COR_ILMETHOD_DECODER::VERIFICATION_ERROR &&

Security::CanSkipVerification(GetModule()->GetDomainAssembly()))

{

status = COR_ILMETHOD_DECODER::SUCCESS;

}

if (status != COR_ILMETHOD_DECODER::SUCCESS)

{

if (status == COR_ILMETHOD_DECODER::VERIFICATION_ERROR)

{

// Throw a verification HR

COMPlusThrowHR(COR_E_VERIFICATION);

}

else

{

COMPlusThrowHR(COR_E_BADIMAGEFORMAT, BFA_BAD_IL);

}

}

#ifdef _VER_EE_VERIFICATION_ENABLED

static ConfigDWORD peVerify;

if (peVerify.val(CLRConfig::EXTERNAL_PEVerify))

Verify(pHeader, TRUE, FALSE); // Throws a VerifierException if verification fails

#endif // _VER_EE_VERIFICATION_ENABLED

} // end if (!IsNoMetadata())

// JIT it

LOG((LF_CLASSLOADER, LL_INFO1000000,

" In PreStubWorker, calling MakeJitWorker\n"));

// Create the precode eagerly if it is going to be needed later.

if (!fBackpatch)

{

GetOrCreatePrecode();

}

// Mark the code as hot in case the method ends up in the native image

g_IBCLogger.LogMethodCodeAccess(this);

pCode = MakeJitWorker(pHeader, 0, 0);

#ifdef FEATURE_INTERPRETER

if ((pCode != NULL) && !HasStableEntryPoint())

{

// We don't yet have a stable entry point, so don't do backpatching yet.

// But we do have to handle some extra cases that occur in backpatching.

// (Perhaps I *should* get to the backpatching code, but in a mode where we know

// we're not dealing with the stable entry point...)

if (HasNativeCodeSlot())

{

// We called "SetNativeCodeInterlocked" in MakeJitWorker, which updated the native

// code slot, but I think we also want to update the regular slot...

PCODE tmpEntry = GetTemporaryEntryPoint();

PCODE pFound = FastInterlockCompareExchangePointer(GetAddrOfSlot(), pCode, tmpEntry);

// Doesn't matter if we failed -- if we did, it's because somebody else made progress.

if (pFound != tmpEntry) pCode = pFound;

}

// Now we handle the case of a FuncPtrPrecode.

FuncPtrStubs * pFuncPtrStubs = GetLoaderAllocator()->GetFuncPtrStubsNoCreate();

if (pFuncPtrStubs != NULL)

{

Precode* pFuncPtrPrecode = pFuncPtrStubs->Lookup(this);

if (pFuncPtrPrecode != NULL)

{

// If there is a funcptr precode to patch, attempt to patch it. If we lose, that's OK,

// somebody else made progress.

pFuncPtrPrecode->SetTargetInterlocked(pCode);

}

}

}

#endif // FEATURE_INTERPRETER

} // end if (pCode == NULL)

} // end else if (IsIL() || IsNoMetadata())

else if (IsNDirect())

{

if (!GetModule()->GetSecurityDescriptor()->CanCallUnmanagedCode())

Security::ThrowSecurityException(g_SecurityPermissionClassName, SPFLAGSUNMANAGEDCODE);

pCode = GetStubForInteropMethod(this);

GetOrCreatePrecode();

}

else if (IsFCall())

{

// Get the fcall implementation

BOOL fSharedOrDynamicFCallImpl;

pCode = ECall::GetFCallImpl(this, &fSharedOrDynamicFCallImpl);

if (fSharedOrDynamicFCallImpl)

{

// Fake ctors share one implementation that has to be wrapped by prestub

GetOrCreatePrecode();

}

}

else if (IsArray())

{

pStub = GenerateArrayOpStub((ArrayMethodDesc*)this);

}

else if (IsEEImpl())

{

_ASSERTE(GetMethodTable()->IsDelegate());

pCode = COMDelegate::GetInvokeMethodStub((EEImplMethodDesc*)this);

GetOrCreatePrecode();

}

else

{

// This is a method type we don't handle yet

_ASSERTE(!"Unknown Method Type");

}

/************************** POSTJIT *************************/

#ifndef FEATURE_INTERPRETER

_ASSERTE(pCode == NULL || GetNativeCode() == NULL || pCode == GetNativeCode());

#else // FEATURE_INTERPRETER

// Interpreter adds a new possiblity == someone else beat us to installing an intepreter stub.

_ASSERTE(pCode == NULL || GetNativeCode() == NULL || pCode == GetNativeCode()

|| Interpreter::InterpretationStubToMethodInfo(pCode) == this);

#endif // FEATURE_INTERPRETER

// At this point we must have either a pointer to managed code or to a stub. All of the above code

// should have thrown an exception if it couldn't make a stub.

_ASSERTE((pStub != NULL) ^ (pCode != NULL));

/************************** SECURITY *************************/

// Lets check to see if we need declarative security on this stub, If we have

// security checks on this method or class then we need to add an intermediate

// stub that performs declarative checks prior to calling the real stub.

// record if security needs to intercept this call (also depends on whether we plan to use stubs for declarative security)

#if !defined( HAS_REMOTING_PRECODE) && defined (FEATURE_REMOTING)

/************************** REMOTING *************************/

// check for MarshalByRef scenarios ... we need to intercept

// Non-virtual calls on MarshalByRef types

if (fRemotingIntercepted)

{

// let us setup a remoting stub to intercept all the calls

Stub *pRemotingStub = CRemotingServices::GetStubForNonVirtualMethod(this,

(pStub != NULL) ? (LPVOID)pStub->GetEntryPoint() : (LPVOID)pCode, pStub);

if (pRemotingStub != NULL)

{

pStub = pRemotingStub;

pCode = NULL;

}

}

#endif // HAS_REMOTING_PRECODE

_ASSERTE((pStub != NULL) ^ (pCode != NULL));

#if defined(_TARGET_X86_) || defined(_TARGET_AMD64_)

//

// We are seeing memory reordering race around fixups (see DDB 193514 and related bugs). We get into

// situation where the patched precode is visible by other threads, but the resolved fixups

// are not. IT SHOULD NEVER HAPPEN according to our current understanding of x86/x64 memory model.

// (see email thread attached to the bug for details).

//

// We suspect that there may be bug in the hardware or that hardware may have shortcuts that may be

// causing grief. We will try to avoid the race by executing an extra memory barrier.

//

MemoryBarrier();

#endif

if (pCode != NULL)

{

if (HasPrecode())

GetPrecode()->SetTargetInterlocked(pCode);

else

if (!HasStableEntryPoint())

{

// Is the result an interpreter stub?

#ifdef FEATURE_INTERPRETER

if (Interpreter::InterpretationStubToMethodInfo(pCode) == this)

{

SetEntryPointInterlocked(pCode);

}

else

#endif // FEATURE_INTERPRETER

{

SetStableEntryPointInterlocked(pCode);

}

}

}

else

{

if (!GetOrCreatePrecode()->SetTargetInterlocked(pStub->GetEntryPoint()))

{

pStub->DecRef();

}

else

if (pStub->HasExternalEntryPoint())

{

// If the Stub wraps code that is outside of the Stub allocation, then we

// need to free the Stub allocation now.

pStub->DecRef();

}

}

#ifdef FEATURE_INTERPRETER

_ASSERTE(!IsReallyPointingToPrestub());

#else // FEATURE_INTERPRETER

_ASSERTE(!IsPointingToPrestub());

_ASSERTE(HasStableEntryPoint());

#endif // FEATURE_INTERPRETER

if (fReportCompilationFinished)

DACNotifyCompilationFinished(this);

RETURN DoBackpatch(pMT, pDispatchingMT, FALSE);

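}

This function is fairly long, but we only need to pay attention to two places:

pCode = MakeJitWorker(pHeader, 0, 0);

MakeJitWorker invokes the JIT to compile the function; pCode is the address of the compiled machine code.

if (HasPrecode())

GetPrecode()->SetTargetInterlocked(pCode);

SetTargetInterlocked patches the Precode, so that the second call to the function jumps directly to the compiled code.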
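For IL methods, the listing above also shows that MakeJitWorker is a last resort: the function first looks for prejitted code (under FEATURE_PREJIT), then for a ReadyToRun entry point (under FEATURE_READYTORUN), and only calls the JIT when both come back empty. A heavily simplified sketch of that fallback order follows. CodeSources and ResolveCode are hypothetical names, the three callbacks stand in for GetPreImplementedCode, GetReadyToRunInfo()->GetEntryPoint and MakeJitWorker, and all profiler, fixup, and interpreter handling is omitted.

```cpp
#include <cstdint>
#include <functional>

using PCODE = std::uintptr_t; // an address of machine code; 0 means "none"

// Hypothetical bundle of code sources, in the order DoPrestub consults them.
struct CodeSources
{
    std::function<PCODE()> prejitted;  // may be empty if the feature is off
    std::function<PCODE()> readyToRun; // may be empty if the feature is off
    std::function<PCODE()> jit;        // always present
};

// Return the first available code address, falling back to the JIT.
inline PCODE ResolveCode(const CodeSources& sources)
{
    PCODE pCode = 0;
    if (sources.prejitted)
        pCode = sources.prejitted();
    if (pCode == 0 && sources.readyToRun)
        pCode = sources.readyToRun();
    if (pCode == 0)
        pCode = sources.jit(); // nothing precompiled: compile now
    return pCode;
}
```

The returned address is what then gets written into the Precode (or the stable entry point), which is why the second call no longer reaches the prestub.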

The source code of MakeJitWorker is as follows:

PCODE MethodDesc::MakeJitWorker(COR_ILMETHOD_DECODER* ILHeader, DWORD flags, DWORD flags2)

{

STANDARD_VM_CONTRACT;

BOOL fIsILStub = IsILStub(); // @TODO: understand the need for this special case

LOG((LF_JIT, LL_INFO1000000,

"MakeJitWorker(" FMT_ADDR ", %s) for %s:%s\n",

DBG_ADDR(this),

fIsILStub ? " TRUE" : "FALSE",

GetMethodTable()->GetDebugClassName(),

m_pszDebugMethodName));

PCODE pCode = NULL;

ULONG sizeOfCode = 0;

#ifdef FEATURE_INTERPRETER

PCODE pPreviousInterpStub = NULL;

BOOL fInterpreted = FALSE;

BOOL fStable = TRUE; // True iff the new code address (to be stored in pCode), is a stable entry point.

#endif

#ifdef FEATURE_MULTICOREJIT

MulticoreJitManager & mcJitManager = GetAppDomain()->GetMulticoreJitManager();

bool fBackgroundThread = (flags & CORJIT_FLG_MCJIT_BACKGROUND) != 0;

#endif

{

// Enter the global lock which protects the list of all functions being JITd

ListLockHolder pJitLock (GetDomain()->GetJitLock());

// It is possible that another thread stepped in before we entered the global lock for the first time.

pCode = GetNativeCode();

if (pCode != NULL)

{

#ifdef FEATURE_INTERPRETER

if (Interpreter::InterpretationStubToMethodInfo(pCode) == this)

{

pPreviousInterpStub = pCode;

}

else

#endif // FEATURE_INTERPRETER

goto Done;

}

const char *description = "jit lock";

INDEBUG(description = m_pszDebugMethodName;)

ListLockEntryHolder pEntry(ListLockEntry::Find(pJitLock, this, description));

// We have an entry now, we can release the global lock

pJitLock.Release();

// Take the entry lock

{

ListLockEntryLockHolder pEntryLock(pEntry, FALSE);

if (pEntryLock.DeadlockAwareAcquire())

{

if (pEntry->m_hrResultCode == S_FALSE)

{

// Nobody has jitted the method yet

}

else

{

// We came in to jit but someone beat us so return the

// jitted method!

// We can just fall through because we will notice below that

// the method has code.

// @todo: Note that we may have a failed HRESULT here -

// we might want to return an early error rather than

// repeatedly failing the jit.

}

}

else

{

// Taking this lock would cause a deadlock (presumably because we

// are involved in a class constructor circular dependency.) For

// instance, another thread may be waiting to run the class constructor

// that we are jitting, but is currently jitting this function.

//

// To remedy this, we want to go ahead and do the jitting anyway.

// The other threads contending for the lock will then notice that

// the jit finished while they were running class constructors, and abort their

// current jit effort.

//

// We don't have to do anything special right here since we

// can check HasNativeCode() to detect this case later.

//

// Note that at this point we don't have the lock, but that's OK because the

// thread which does have the lock is blocked waiting for us.

}

// It is possible that another thread stepped in before we entered the lock.

pCode = GetNativeCode();

#ifdef FEATURE_INTERPRETER

if (pCode != NULL && (pCode != pPreviousInterpStub))

#else

if (pCode != NULL)

#endif // FEATURE_INTERPRETER

{

goto Done;

}

SString namespaceOrClassName, methodName, methodSignature;

PCODE pOtherCode = NULL; // Need to move here due to 'goto GotNewCode'

#ifdef FEATURE_MULTICOREJIT

bool fCompiledInBackground = false;

// If not called from multi-core JIT thread,

if (! fBackgroundThread)

{

// Quick check before calling expensive out of line function on this method's domain has code JITted by background thread

if (mcJitManager.GetMulticoreJitCodeStorage().GetRemainingMethodCount() > 0)

{

if (MulticoreJitManager::IsMethodSupported(this))

{

pCode = mcJitManager.RequestMethodCode(this); // Query multi-core JIT manager for compiled code

// Multicore JIT manager starts background thread to pre-compile methods, but it does not back-patch it/notify profiler/notify DAC,

// Jumtp to GotNewCode to do so

if (pCode != NULL)

{

fCompiledInBackground = true;

#ifdef DEBUGGING_SUPPORTED

// Notify the debugger of the jitted function

if (g_pDebugInterface != NULL)

{

g_pDebugInterface->JITComplete(this, pCode);

}

#endif

goto GotNewCode;

}

}

}

}

#endif

if (fIsILStub)

{

// we race with other threads to JIT the code for an IL stub and the

// IL header is released once one of the threads completes. As a result

// we must be inside the lock to reliably get the IL header for the

// stub.

ILStubResolver* pResolver = AsDynamicMethodDesc()->GetILStubResolver();

ILHeader = pResolver->GetILHeader();

}

#ifdef MDA_SUPPORTED

MdaJitCompilationStart* pProbe = MDA_GET_ASSISTANT(JitCompilationStart);

if (pProbe)

pProbe->NowCompiling(this);

#endif // MDA_SUPPORTED

#ifdef PROFILING_SUPPORTED

// If profiling, need to give a chance for a tool to examine and modify

// the IL before it gets to the JIT. This allows one to add probe calls for

// things like code coverage, performance, or whatever.

{

BEGIN_PIN_PROFILER(CORProfilerTrackJITInfo());

// Multicore JIT should be disabled when CORProfilerTrackJITInfo is on

// But there could be corner case in which profiler is attached when multicore background thread is calling MakeJitWorker

// Disable this block when calling from multicore JIT background thread

if (!IsNoMetadata()

#ifdef FEATURE_MULTICOREJIT

&& (! fBackgroundThread)

#endif

)

{

g_profControlBlock.pProfInterface->JITCompilationStarted((FunctionID) this, TRUE);

// The profiler may have changed the code on the callback. Need to

// pick up the new code. Note that you have to be fully trusted in

// this mode and the code will not be verified.

COR_ILMETHOD *pilHeader = GetILHeader(TRUE);

new (ILHeader) COR_ILMETHOD_DECODER(pilHeader, GetMDImport(), NULL);

}

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

#ifdef FEATURE_INTERPRETER

// We move the ETW event for start of JITting inward, after we make the decision

// to JIT rather than interpret.

#else // FEATURE_INTERPRETER

// Fire an ETW event to mark the beginning of JIT'ing

ETW::MethodLog::MethodJitting(this, &namespaceOrClassName, &methodName, &methodSignature);

#endif // FEATURE_INTERPRETER

#ifdef FEATURE_STACK_SAMPLING

#ifdef FEATURE_MULTICOREJIT

if (!fBackgroundThread)

#endif // FEATURE_MULTICOREJIT

{

StackSampler::RecordJittingInfo(this, flags, flags2);

}

#endif // FEATURE_STACK_SAMPLING

EX_TRY

{

pCode = UnsafeJitFunction(this, ILHeader, flags, flags2, &sizeOfCode);

}

EX_CATCH

{

// If the current thread threw an exception, but a competing thread

// somehow succeeded at JITting the same function (e.g., out of memory

// encountered on current thread but not competing thread), then go ahead

// and swallow this current thread's exception, since we somehow managed

// to successfully JIT the code on the other thread.

//

// Note that if a deadlock cycle is broken, that does not result in an

// exception--the thread would just pass through the lock and JIT the

// function in competition with the other thread (with the winner of the

// race decided later on when we do SetNativeCodeInterlocked). This

// try/catch is purely to deal with the (unusual) case where a competing

// thread succeeded where we aborted.

pOtherCode = GetNativeCode();

if (pOtherCode == NULL)

{

pEntry->m_hrResultCode = E_FAIL;

EX_RETHROW;

}

}

EX_END_CATCH(RethrowTerminalExceptions)

if (pOtherCode != NULL)

{

// Somebody finished jitting recursively while we were jitting the method.

// Just use their method & leak the one we finished. (Normally we hope

// not to finish our JIT in this case, as we will abort early if we notice

// a reentrant jit has occurred. But we may not catch every place so we

// do a definitive final check here.)

pCode = pOtherCode;

goto Done;

}

_ASSERTE(pCode != NULL);

#ifdef HAVE_GCCOVER

if (GCStress<cfg_instr_jit>::IsEnabled())

{

SetupGcCoverage(this, (BYTE*) pCode);

}

#endif // HAVE_GCCOVER

#ifdef FEATURE_INTERPRETER

// Determine whether the new code address is "stable", i.e. is not an interpreter stub.

fInterpreted = (Interpreter::InterpretationStubToMethodInfo(pCode) == this);

fStable = !fInterpreted;

#endif // FEATURE_INTERPRETER

#ifdef FEATURE_MULTICOREJIT

// If called from the multicore JIT background thread, store the code under a lock and delay patching until the code is queried from application threads

if (fBackgroundThread)

{

// Fire an ETW event to mark the end of JIT'ing

ETW::MethodLog::MethodJitted(this, &namespaceOrClassName, &methodName, &methodSignature, pCode, 0 /* ReJITID */);

#ifdef FEATURE_PERFMAP

// Save the JIT'd method information so that perf can resolve JIT'd call frames.

PerfMap::LogJITCompiledMethod(this, pCode, sizeOfCode);

#endif

mcJitManager.GetMulticoreJitCodeStorage().StoreMethodCode(this, pCode);

goto Done;

}

GotNewCode:

#endif

// If this function had already been requested for rejit (before its original

// code was jitted), then give the rejit manager a chance to jump-stamp the

// code we just compiled so the first thread entering the function will jump

// to the prestub and trigger the rejit. Note that the PublishMethodHolder takes

// a lock to avoid a particular kind of rejit race. See

// code:ReJitManager::PublishMethodHolder::PublishMethodHolder#PublishCode for

// details on the rejit race.

//

// Aside from rejit, performing a SetNativeCodeInterlocked at this point

// generally ensures that there is only one winning version of the native

// code. This also avoids races with the profiler overriding ngened code (see

// matching SetNativeCodeInterlocked done after

// JITCachedFunctionSearchStarted)

#ifdef FEATURE_INTERPRETER

PCODE pExpected = pPreviousInterpStub;

if (pExpected == NULL) pExpected = GetTemporaryEntryPoint();

#endif

{

ReJitPublishMethodHolder publishWorker(this, pCode);

if (!SetNativeCodeInterlocked(pCode

#ifdef FEATURE_INTERPRETER

, pExpected, fStable

#endif

))

{

// Another thread beat us to publishing its copy of the JITted code.

pCode = GetNativeCode();

goto Done;

}

}

#ifdef FEATURE_INTERPRETER

// State for dynamic methods cannot be freed if the method was ever interpreted,

// since there is no way to ensure that it is not in use at the moment.

if (IsDynamicMethod() && !fInterpreted && (pPreviousInterpStub == NULL))

{

AsDynamicMethodDesc()->GetResolver()->FreeCompileTimeState();

}

#endif // FEATURE_INTERPRETER

// We succeeded in jitting the code, and our jitted code is the one that's going to run now.

pEntry->m_hrResultCode = S_OK;

#ifdef PROFILING_SUPPORTED

// Notify the profiler that JIT completed.

// Must do this after the address has been set.

// @ToDo: Why must we set the address before notifying the profiler ??

// Note that if IsInterceptedForDeclSecurity is set no one should access the jitted code address anyway.

{

BEGIN_PIN_PROFILER(CORProfilerTrackJITInfo());

if (!IsNoMetadata())

{

g_profControlBlock.pProfInterface->

JITCompilationFinished((FunctionID) this,

pEntry->m_hrResultCode,

TRUE);

}

END_PIN_PROFILER();

}

#endif // PROFILING_SUPPORTED

#ifdef FEATURE_MULTICOREJIT

if (! fCompiledInBackground)

#endif

#ifdef FEATURE_INTERPRETER

// If we didn't JIT, but rather, created an interpreter stub (i.e., fStable is false), don't tell ETW that we did.