接著上一篇極簡入門 ,打算嘗試一下 spark2.2.0 ,結果搞了一下午。 1. 版本問題: sbt 沒有換 還是之前的版本,其他的版本 , spark官網上這麼說的: 2. build.sbt 文件: 3. 本地intellij idea里直接run spark 程式 ,不使用spark-sub ...

接著上一篇極簡入門 ,打算嘗試一下 spark2.2.0 ,結果搞了一下午。

1. 版本問題:

sbt 沒有換 還是之前的版本,其他的版本 , spark官網上這麼說的:

Spark runs on Java 8+, Python 2.7+/3.4+ and R 3.1+.

For the Scala API, Spark 2.2.0 uses Scala 2.11.

You will need to use a compatible Scala version (2.11.x).

這裡scala必須是2.11,我試過用版本2.12都不行。

2. build.sbt 文件:

name := "sparkDemo" version := "0.1" scalaVersion := "2.11.8" libraryDependencies += "org.apache.spark" %% "spark-core_2.11" % "2.2.0" libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.2.0" libraryDependencies += "org.scalatest" %% "scalatest" % "2.2.4" % Test

3. 本地intellij idea里直接run spark 程式 ,不使用spark-submit 方式。

直接右鍵 run要執行的class名字就可以了,註意 sparkConf().setMaster("local")

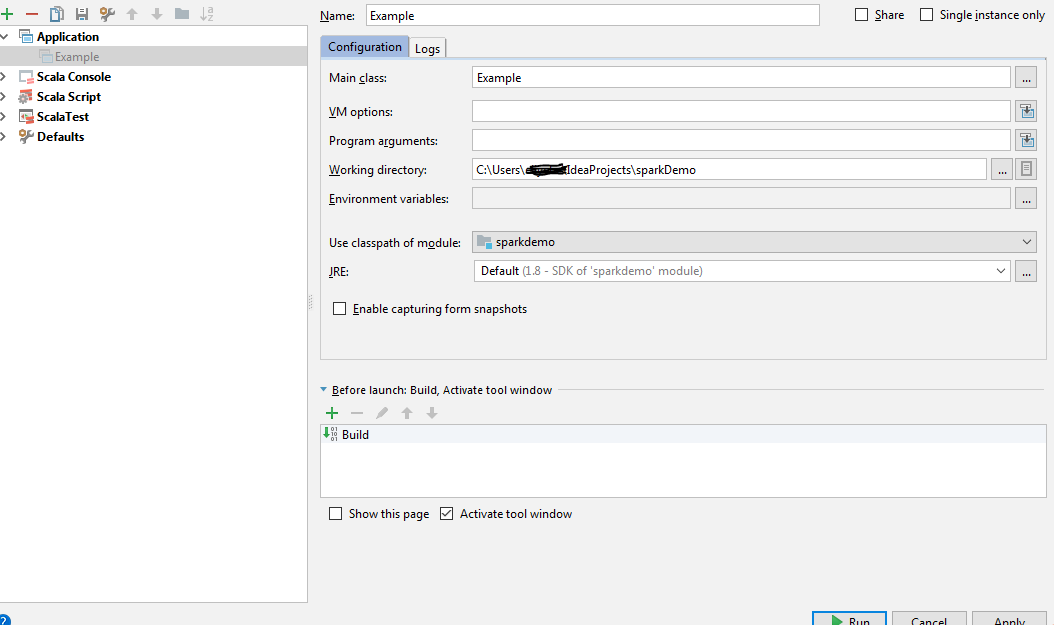

如果右鍵沒有可以run 的選項的話,就config一下,點擊工具欄的Run --> Run(綠色按鈕)—>Edit Configurations-->添加一個application-->在main class中輸入要運行的類的名字-->右下角apply。

4.再去右鍵 應該就有run 這個選項了。然後出現了這樣的log:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties 17/08/27 13:08:08 INFO SparkContext: Running Spark version 2.2.0 17/08/27 13:08:09 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

網上搜這個問題很多,直接貼上一條答案:

我是在這裡:

https://github.com/SweetInk/hadoop-common-2.7.1-bin

下載winutils.exe還有libwinutils.lib 到我的%HADOOP_HOME%/bin文件夾下,下載了hdfs.dll到C:\windows\System32里。

然後在程式中加上:

System.setProperty("hadoop.home.dir", "C:\\Program Files\\hadoop")

5. 然後 。。又出現了新的exception:

Exception in thread "main" java.lang.ExceptionInInitializerError during calling of...

Caused by: java.lang.SecurityException: class "javax.servlet.FilterRegistration"'s signer

information does not match signer information of other classes in the same package

搜索問題原因,由於是類的衝突,就把 "javax.servlet" exclude掉,build.sbt中加入下麵一行,執行sbt compile。

libraryDependencies += "org.apache.hadoop" % "hadoop-common" % "2.5.0-cdh5.2.0" % "provided" excludeAll ExclusionRule(organization = "javax.servlet")

貼上原答案:

6.運行成功。

代碼來自spark example:

object Test {

def main(args:Array[String]): Unit ={

val spark = SparkSession.builder().appName("groupbytest")

.master("local").getOrCreate()

val numMappers = if(args.length>0) args(0)toInt else 2

val numKVPairs = if (args.length>1) args(1).toInt else 1000

val valSize = if (args.length>2) args(2).toInt else 1000

val numReducers = if (args.length>3) args(3).toInt else numMappers

val pairs1 = spark.sparkContext.parallelize(0 until numMappers,numMappers).flatMap{

p=>

val ranGen = new Random

val arr1 = new Array[(Int,Array[Byte])](numKVPairs)

for (i<- 0 until numKVPairs){

val byteArr = new Array[Byte](valSize)

ranGen.nextBytes(byteArr)

arr1(i)=(ranGen.nextInt(Int.MaxValue),byteArr)

}

arr1

}.cache()

pairs1.count()

println(pairs1.groupByKey(numReducers).count())

spark.stop()

}

}

部分log,以及列印出的結果:

17/08/28 16:49:37 INFO TaskSetManager: Finished task 1.0 in stage 2.0 (TID 5) in 75 ms on localhost (executor driver) (2/2) 17/08/28 16:49:37 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool 17/08/28 16:49:37 INFO DAGScheduler: ResultStage 2 (count at Test.scala:29) finished in 0.230 s 17/08/28 16:49:37 INFO DAGScheduler: Job 1 finished: count at Test.scala:29, took 0.819872 s 2000 17/08/28 16:49:37 INFO SparkUI: Stopped Spark web UI at http://192.168.56.1:4040 17/08/28 16:49:37 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!