R + Hive = RHive 支持原創:http://blog.fens.me/nosql-r-hive/ R利劍NoSQL系列文章 之 Hive Hive介紹 Hive安裝 RHive安裝 RHive函數庫 RHive基本使用操作 1. Hive介紹 Hive是建立在Hadoop上的數據倉庫基 ...

R + Hive = RHive

支持原創:http://blog.fens.me/nosql-r-hive/

R利劍NoSQL系列文章 之 Hive

- Hive介紹

- Hive安裝

- RHive安裝

- RHive函數庫

- RHive基本使用操作

1. Hive介紹

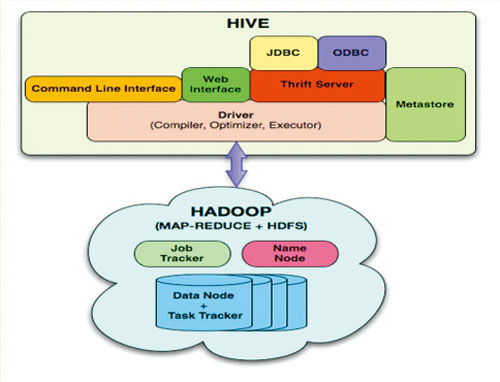

Hive是建立在Hadoop上的數據倉庫基礎構架。它提供了一系列的工具,可以用來進行數據提取轉化載入(ETL),這是一種可以存儲、查詢和分析存儲在 Hadoop 中的大規模數據的機制。Hive 定義了簡單的類 SQL 查詢語言,稱為 HQL,它允許熟悉 SQL 的用戶查詢數據。同時,這個語言也允許熟悉 MapReduce 開發者的開發自定義的 mapper 和 reducer 來處理內建的 mapper 和 reducer 無法完成的複雜的分析工作。

Hive 沒有專門的數據格式。 Hive 可以很好的工作在 Thrift 之上,控制分隔符,也允許用戶指定數據格式

上面內容摘自 百度百科(http://baike.baidu.com/view/699292.htm)

hive與關係資料庫的區別:

- 數據存儲不同:hive基於hadoop的HDFS,關係資料庫則基於本地文件系統

- 計算模型不同:hive基於hadoop的mapreduce,關係資料庫則基於索引的記憶體計算模型

- 應用場景不同:hive是OLAP數據倉庫系統提供海量數據查詢的,實時性很差;關係資料庫是OLTP事務系統,為實時查詢業務服務

- 擴展性不同:hive基於hadoop很容易通過分散式增加存儲能力和計算能力,關係資料庫水平擴展很難,要不斷增加單機的性能

2. Hive安裝

Hive是基於Hadoop開發的數據倉庫產品,所以首先我們要先有Hadoop的環境。

Hadoop安裝,請參考:Hadoop環境搭建, 創建Hadoop母體虛擬機

Hive的安裝,請參考:Hive安裝及使用攻略

Hadoop-1.0.3的下載地址

http://archive.apache.org/dist/hadoop/core/hadoop-1.0.3/

Hive-0.9.0的下載地址

http://archive.apache.org/dist/hive/hive-0.9.0/

Hive安裝好後

啟動hiveserver的服務

~ nohup hive --service hiveserver &

Starting Hive Thrift Server

打開hive shell

~ hive shell

Logging initialized using configuration in file:/home/conan/hadoop/hive-0.9.0/conf/hive-log4j.proper ties

Hive history file=/tmp/conan/hive_job_log_conan_201306261459_153868095.txt

#查看hive的表

hive> show tables;

hive_algo_t_account

o_account

r_t_account

Time taken: 2.12 seconds

#查看o_account表的數據

hive> select * from o_account;

1 [email protected] 2013-04-22 12:21:39

2 [email protected] 2013-04-22 12:21:39

3 [email protected] 2013-04-22 12:21:39

4 [email protected] 2013-04-22 12:21:39

5 [email protected] 2013-04-22 12:21:39

6 [email protected] 2013-04-22 12:21:39

7 [email protected] 2013-04-23 09:21:24

8 [email protected] 2013-04-23 09:21:24

9 [email protected] 2013-04-23 09:21:24

10 [email protected] 2013-04-23 09:21:24

11 [email protected] 2013-04-23 09:21:24

Time taken: 0.469 seconds

3. RHive安裝

請提前配置好JAVA的環境:

~ java -version

java version "1.6.0_29"

Java(TM) SE Runtime Environment (build 1.6.0_29-b11)

Java HotSpot(TM) 64-Bit Server VM (build 20.4-b02, mixed mode)

安裝R:Ubuntu 12.04,請更新源再下載R2.15.3版本

~ sudo sh -c "echo deb http://mirror.bjtu.edu.cn/cran/bin/linux/ubuntu precise/ >>/etc/apt/sources.list"

~ sudo apt-get update

~ sudo apt-get install r-base-core=2.15.3-1precise0precise1

安裝R依賴庫:rjava

#配置rJava

~ sudo R CMD javareconf

#啟動R程式

~ sudo R

install.packages("rJava")

安裝RHiveinstall.packages("RHive")

library(RHive)

Loading required package: rJava

Loading required package: Rserve

This is RHive 0.0-7. For overview type ‘?RHive’.

HIVE_HOME=/home/conan/hadoop/hive-0.9.0

call rhive.init() because HIVE_HOME is set.

由於RHive已經從CRAN上移除,需要動手下載安裝,下載地址:https://cran.r-project.org/src/contrib/Archive/RHive/。我們需要動手下載RHive_0.0-7.tar.gz包,然後通過命令進行安裝。

# 安裝RHive

~ R CMD INSTALL RHive_0.0-7.tar.gz

4. RHive函數庫

rhive.aggregate rhive.connect rhive.hdfs.exists rhive.mapapply

rhive.assign rhive.desc.table rhive.hdfs.get rhive.mrapply

rhive.basic.by rhive.drop.table rhive.hdfs.info rhive.napply

rhive.basic.cut rhive.env rhive.hdfs.ls rhive.query

rhive.basic.cut2 rhive.exist.table rhive.hdfs.mkdirs rhive.reduceapply

rhive.basic.merge rhive.export rhive.hdfs.put rhive.rm

rhive.basic.mode rhive.exportAll rhive.hdfs.rename rhive.sapply

rhive.basic.range rhive.hdfs.cat rhive.hdfs.rm rhive.save

rhive.basic.scale rhive.hdfs.chgrp rhive.hdfs.tail rhive.script.export

rhive.basic.t.test rhive.hdfs.chmod rhive.init rhive.script.unexport

rhive.basic.xtabs rhive.hdfs.chown rhive.list.tables

rhive.size.table

rhive.big.query rhive.hdfs.close rhive.load rhive.write.table

rhive.block.sample rhive.hdfs.connect rhive.load.table

rhive.close rhive.hdfs.du rhive.load.table2

Hive和RHive的基本操作對比:

#連接到hive

Hive: hive shell

RHive: rhive.connect("192.168.1.210")

#列出所有hive的表

Hive: show tables;

RHive: rhive.list.tables()

#查看表結構

Hive: desc o_account;

RHive: rhive.desc.table('o_account'), rhive.desc.table('o_account',TRUE)

#執行HQL查詢

Hive: select * from o_account;

RHive: rhive.query('select * from o_account')

#查看hdfs目錄

Hive: dfs -ls /;

RHive: rhive.hdfs.ls()

#查看hdfs文件內容

Hive: dfs -cat /user/hive/warehouse/o_account/part-m-00000;

RHive: rhive.hdfs.cat('/user/hive/warehouse/o_account/part-m-00000')

#斷開連接

Hive: quit;

RHive: rhive.close()

5. RHive基本使用操作

#初始化

rhive.init()

#連接hive

rhive.connect("192.168.1.210")

#查看所有表

rhive.list.tables()

tab_name

1 hive_algo_t_account

2 o_account

3 r_t_account

#查看表結構

rhive.desc.table('o_account');

col_name data_type comment

1 id int

2 email string

3 create_date string

#執行HQL查詢

rhive.query("select * from o_account");

id email create_date

1 1 [email protected] 2013-04-22 12:21:39

2 2 [email protected] 2013-04-22 12:21:39

3 3 [email protected] 2013-04-22 12:21:39

4 4 [email protected] 2013-04-22 12:21:39

5 5 [email protected] 2013-04-22 12:21:39

6 6 [email protected] 2013-04-22 12:21:39

7 7 [email protected] 2013-04-23 09:21:24

8 8 [email protected] 2013-04-23 09:21:24

9 9 [email protected] 2013-04-23 09:21:24

10 10 [email protected] 2013-04-23 09:21:24

11 11 [email protected] 2013-04-23 09:21:24

#關閉連接

rhive.close()

[1] TRUE

創建臨時表

rhive.block.sample('o_account', subset="id<5")

[1] "rhive_sblk_1372238856"

rhive.query("select * from rhive_sblk_1372238856");

id email create_date

1 1 [email protected] 2013-04-22 12:21:39

2 2 [email protected] 2013-04-22 12:21:39

3 3 [email protected] 2013-04-22 12:21:39

4 4 [email protected] 2013-04-22 12:21:39

#查看hdfs的文件

rhive.hdfs.ls('/user/hive/warehouse/rhive_sblk_1372238856/')

permission owner group length modify-time

1 rw-r--r-- conan supergroup 141 2013-06-26 17:28

file

1 /user/hive/warehouse/rhive_sblk_1372238856/000000_0

rhive.hdfs.cat('/user/hive/warehouse/rhive_sblk_1372238856/000000_0')

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39

按範圍分割欄位數據

rhive.basic.cut('o_account','id',breaks='0:100:3')

[1] "rhive_result_20130626173626"

attr(,"result:size")

[1] 443

rhive.query("select * from rhive_result_20130626173626");

email create_date id

1 [email protected] 2013-04-22 12:21:39 (0,3]

2 [email protected] 2013-04-22 12:21:39 (0,3]

3 [email protected] 2013-04-22 12:21:39 (0,3]

4 [email protected] 2013-04-22 12:21:39 (3,6]

5 [email protected] 2013-04-22 12:21:39 (3,6]

6 [email protected] 2013-04-22 12:21:39 (3,6]

7 [email protected] 2013-04-23 09:21:24 (6,9]

8 [email protected] 2013-04-23 09:21:24 (6,9]

9 [email protected] 2013-04-23 09:21:24 (6,9]

10 [email protected] 2013-04-23 09:21:24 (9,12]

11 [email protected] 2013-04-23 09:21:24 (9,12]

Hive操作HDFS

#查看hdfs文件目錄

rhive.hdfs.ls()

permission owner group length modify-time file

1 rwxr-xr-x conan supergroup 0 2013-04-24 01:52 /hbase

2 rwxr-xr-x conan supergroup 0 2013-06-23 10:59 /home

3 rwxr-xr-x conan supergroup 0 2013-06-26 11:18 /rhive

4 rwxr-xr-x conan supergroup 0 2013-06-23 13:27 /tmp

5 rwxr-xr-x conan supergroup 0 2013-04-24 19:28 /user

#查看hdfs文件內容

rhive.hdfs.cat('/user/hive/warehouse/o_account/part-m-00000')

[email protected] 12:21:39

[email protected] 12:21:39

[email protected] 12:21:39