
Welcome to my personal blog. If you repost this article, please credit the source: http://www.wensibo.top/2017/02/17/一口一口吃掉Volley(四)/

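Thank you for sticking with this series all the way to the fourth article, which is also the last one in this Volley tutorial series. After the previous three parts, you should already know how to use the Requests that Volley provides and how to write your own custom Request. In this part I will walk you through Volley's workflow from the point of view of its source code.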

Starting with newRequestQueue()

As we all know, the first thing to do when using Volley is to obtain a RequestQueue object with newRequestQueue(). Let's take a close look at this method:

- newRequestQueue()

```java
public static RequestQueue newRequestQueue(Context context, HttpStack stack, int maxDiskCacheBytes) {
    File cacheDir = new File(context.getCacheDir(), DEFAULT_CACHE_DIR);

    String userAgent = "volley/0";
    try {
        String packageName = context.getPackageName();
        PackageInfo info = context.getPackageManager().getPackageInfo(packageName, 0);
        userAgent = packageName + "/" + info.versionCode;
    } catch (NameNotFoundException e) {
        // Keep the default "volley/0" user agent.
    }

    if (stack == null) {
        if (Build.VERSION.SDK_INT >= 9) {
            stack = new HurlStack();
        } else {
            // Prior to Gingerbread, HttpUrlConnection was unreliable.
            // See: http://android-developers.blogspot.com/2011/09/androids-http-clients.html
            stack = new HttpClientStack(AndroidHttpClient.newInstance(userAgent));
        }
    }

    Network network = new BasicNetwork(stack);

    RequestQueue queue;
    if (maxDiskCacheBytes <= -1) {
        // No maximum size specified
        queue = new RequestQueue(new DiskBasedCache(cacheDir), network);
    } else {
        // Disk cache size specified
        queue = new RequestQueue(new DiskBasedCache(cacheDir, maxDiskCacheBytes), network);
    }

    queue.start();

    return queue;
}
```

Inside the method we can see that when no stack is passed in and the API level is 9 (Gingerbread) or higher, a HurlStack instance does the actual network work, which makes it clear that on these versions Volley is built on HttpURLConnection. Below API 9 an HttpClientStack is created instead, i.e. HttpClient is used for network communication on those older versions. Either way, the stack is finally wrapped in a BasicNetwork object.

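Next, a RequestQueue is constructed from this BasicNetwork and a DiskBasedCache (the disk cache), and its start() method is called. RequestQueue is not itself a thread; start() is what launches its worker threads.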
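To make the branching in newRequestQueue() easy to try outside Android, here is a minimal plain-Java sketch of the three decisions it makes. It is an illustrative model, not Volley code: the class StackChooser and its methods are hypothetical, and plain Strings stand in for the real HurlStack/HttpClientStack objects, Build.VERSION.SDK_INT and PackageInfo.

```java
// Hypothetical plain-Java model of the choices made in Volley's newRequestQueue().
// Strings stand in for the real HurlStack / HttpClientStack objects.
final class StackChooser {
    static final int GINGERBREAD = 9; // the API level checked via Build.VERSION.SDK_INT >= 9

    // Mirrors: userAgent = packageName + "/" + info.versionCode, else "volley/0".
    static String userAgent(String packageName, Integer versionCode) {
        if (packageName == null || versionCode == null) {
            return "volley/0"; // models the NameNotFoundException path keeping the default
        }
        return packageName + "/" + versionCode;
    }

    // Mirrors the stack == null branch: HttpURLConnection on API 9+, HttpClient below.
    static String chooseStack(int sdkInt) {
        return sdkInt >= GINGERBREAD ? "HurlStack" : "HttpClientStack";
    }

    // Mirrors the maxDiskCacheBytes <= -1 check: no explicit limit means
    // DiskBasedCache is built with its own default size.
    static boolean usesDefaultCacheSize(int maxDiskCacheBytes) {
        return maxDiskCacheBytes <= -1;
    }

    public static void main(String[] args) {
        System.out.println(chooseStack(8) + ", " + chooseStack(21));
        System.out.println(userAgent("com.example.app", 3));
    }
}
```

Modeling the decisions as pure functions keeps them testable; in the real method they are interleaved with object construction.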

Next, let's look at start():

- start()

```java
public void start() {
    stop();  // Make sure any currently running dispatchers are stopped.
    // Create the cache dispatcher and start it.
    mCacheDispatcher = new CacheDispatcher(mCacheQueue, mNetworkQueue, mCache, mDelivery);
    mCacheDispatcher.start();

    // Create network dispatchers (and corresponding threads) up to the pool size.
    for (int i = 0; i < mDispatchers.length; i++) {
        NetworkDispatcher networkDispatcher = new NetworkDispatcher(mNetworkQueue, mNetwork,
                mCache, mDelivery);
        mDispatchers[i] = networkDispatcher;
        networkDispatcher.start();
    }
}
```

start() first calls stop() so that any dispatchers that are already running are shut down, then creates a CacheDispatcher and starts it. The for loop then iterates over mDispatchers, an array of NetworkDispatcher threads that effectively acts as a thread pool; its size defaults to 4. Each of these threads is started by calling its start() method. Why use several threads? Simply because a request is not necessarily finished right away, and handling several requests at the same time improves throughput. So in the end there are 5 threads: 4 network threads (NetworkDispatcher) and 1 cache thread (CacheDispatcher).
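The layout of one cache thread plus N network threads can be sketched with java.util.concurrent. This is a simplified, hypothetical model (MiniDispatcherQueue is not a Volley class): each dispatcher is a plain Thread blocking on a shared BlockingQueue, and stop() interrupts them, loosely mirroring how Volley's dispatchers quit.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical, stripped-down model of RequestQueue.start()/stop():
// one cache thread plus N network threads, all blocking on shared queues.
final class MiniDispatcherQueue {
    static final int DEFAULT_POOL_SIZE = 4; // Volley's default number of network threads

    private final BlockingQueue<String> cacheQueue = new LinkedBlockingQueue<>();
    private final BlockingQueue<String> networkQueue = new LinkedBlockingQueue<>();
    private final Thread[] networkThreads;
    private Thread cacheThread;

    MiniDispatcherQueue(int poolSize) {
        networkThreads = new Thread[poolSize];
    }

    // Each dispatcher loops on take() until it is interrupted.
    private static Thread dispatcher(String name, BlockingQueue<String> queue) {
        Thread t = new Thread(() -> {
            while (true) {
                try {
                    String request = queue.take(); // blocks while the queue is empty
                    System.out.println(Thread.currentThread().getName() + " handled " + request);
                } catch (InterruptedException e) {
                    return; // stop() interrupted us: time to quit
                }
            }
        }, name);
        t.setDaemon(true);
        return t;
    }

    synchronized void start() {
        stop(); // make sure previously started dispatchers are shut down first
        cacheThread = dispatcher("cache-dispatcher", cacheQueue);
        cacheThread.start();
        for (int i = 0; i < networkThreads.length; i++) {
            networkThreads[i] = dispatcher("network-dispatcher-" + i, networkQueue);
            networkThreads[i].start();
        }
    }

    synchronized void stop() {
        if (cacheThread != null) cacheThread.interrupt();
        for (Thread t : networkThreads) {
            if (t != null) t.interrupt();
        }
    }

    void addToNetworkQueue(String request) {
        networkQueue.add(request);
    }

    // Counts the current dispatcher threads that are alive.
    synchronized int aliveThreads() {
        int n = (cacheThread != null && cacheThread.isAlive()) ? 1 : 0;
        for (Thread t : networkThreads) {
            if (t != null && t.isAlive()) n++;
        }
        return n;
    }
}
```

With the default pool size of 4, start() leaves 5 dispatcher threads running, matching the count described above.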

Once we have the RequestQueue, all we need to do is build the appropriate Request and pass it to RequestQueue's add() method to perform a network request, so let's look at add() first.

The add() method

- add()

```java
public <T> Request<T> add(Request<T> request) {
    // Tag the request as belonging to this queue and add it to the set of current requests.
    request.setRequestQueue(this);
    synchronized (mCurrentRequests) {
        mCurrentRequests.add(request);
    }

    // Process requests in the order they are added.
    request.setSequence(getSequenceNumber());
    request.addMarker("add-to-queue");

    // If the request is uncacheable, skip the cache queue and go straight to the network.
    if (!request.shouldCache()) {
        mNetworkQueue.add(request);
        return request;
    }

    // Insert request into stage if there's already a request with the same cache key in flight.
    synchronized (mWaitingRequests) {
        String cacheKey = request.getCacheKey();
        if (mWaitingRequests.containsKey(cacheKey)) {
            // There is already a request in flight. Queue up.
            Queue<Request<?>> stagedRequests = mWaitingRequests.get(cacheKey);
            if (stagedRequests == null) {
                stagedRequests = new LinkedList<Request<?>>();
            }
            stagedRequests.add(request);
            mWaitingRequests.put(cacheKey, stagedRequests);
            if (VolleyLog.DEBUG) {
                VolleyLog.v("Request for cacheKey=%s is in flight, putting on hold.", cacheKey);
            }
        } else {
            // Insert 'null' queue for this cacheKey, indicating there is now a request in
            // flight.
            mWaitingRequests.put(cacheKey, null);
            mCacheQueue.add(request);
        }
        return request;
    }
}
```

As you can see, the `if (!request.shouldCache())` check decides whether the current request may be cached. If it may not, `mNetworkQueue.add(request)` puts it straight onto the network queue. If it may, the method checks mWaitingRequests: when a request with the same cache key is already in flight, the new request is staged there to wait; otherwise `mCacheQueue.add(request)` puts it onto the cache queue. By default every request is cacheable, but we can change this by calling the Request's setShouldCache(false) method.
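The routing and de-duplication logic of add() can be isolated in a small sketch. It assumes a hypothetical Req type holding only a cache key and a shouldCache flag, and plain ArrayDeque queues in place of Volley's blocking queues; releasing the staged requests when the in-flight one finishes is left out.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.Map;
import java.util.Queue;

// Hypothetical model of the routing in RequestQueue.add(): uncacheable requests go
// straight to the network queue; cacheable ones go to the cache queue unless a request
// with the same cache key is already in flight, in which case they are staged.
final class RoutingSketch {
    static final class Req {
        final String cacheKey;
        final boolean shouldCache;
        Req(String cacheKey, boolean shouldCache) {
            this.cacheKey = cacheKey;
            this.shouldCache = shouldCache;
        }
    }

    final Queue<Req> cacheQueue = new ArrayDeque<>();
    final Queue<Req> networkQueue = new ArrayDeque<>();
    // A key mapped to null means "one request in flight, nobody waiting yet".
    final Map<String, Queue<Req>> waitingRequests = new HashMap<>();

    synchronized void add(Req request) {
        if (!request.shouldCache) {
            networkQueue.add(request);
            return;
        }
        if (waitingRequests.containsKey(request.cacheKey)) {
            Queue<Req> staged = waitingRequests.get(request.cacheKey);
            if (staged == null) {
                staged = new LinkedList<>();
            }
            staged.add(request); // same key already in flight: wait instead of re-fetching
            waitingRequests.put(request.cacheKey, staged);
        } else {
            waitingRequests.put(request.cacheKey, null); // mark this key as in flight
            cacheQueue.add(request);
        }
    }
}
```

Three identical cacheable requests thus cost one trip through the cache queue, while the other two wait in waitingRequests.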

Requests on the network queue are handled by NetworkDispatcher, so let's look at NetworkDispatcher's run() first.

- run()

```java
@Override
public void run() {
    Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);
    Request<?> request;
    while (true) {
        long startTimeMs = SystemClock.elapsedRealtime();
        // release previous request object to avoid leaking request object when mQueue is drained.
        request = null;
        try {
            // Take a request from the queue.
            request = mQueue.take();
        } catch (InterruptedException e) {
            // We may have been interrupted because it was time to quit.
            if (mQuit) {
                return;
            }
            continue;
        }

        try {
            request.addMarker("network-queue-take");

            // If the request was cancelled already, do not perform the
            // network request.
            if (request.isCanceled()) {
                request.finish("network-discard-cancelled");
                continue;
            }

            addTrafficStatsTag(request);

            // Perform the network request.
            NetworkResponse networkResponse = mNetwork.performRequest(request);
            request.addMarker("network-http-complete");

            // If the server returned 304 AND we delivered a response already,
            // we're done -- don't deliver a second identical response.
            if (networkResponse.notModified && request.hasHadResponseDelivered()) {
                request.finish("not-modified");
                continue;
            }

            // Parse the response here on the worker thread.
            Response<?> response = request.parseNetworkResponse(networkResponse);
            request.addMarker("network-parse-complete");

            // Write to cache if applicable.
            // TODO: Only update cache metadata instead of entire record for 304s.
            if (request.shouldCache() && response.cacheEntry != null) {
                mCache.put(request.getCacheKey(), response.cacheEntry);
                request.addMarker("network-cache-written");
            }

            // Post the response back.
            request.markDelivered();
            mDelivery.postResponse(request, response);
        } catch (VolleyError volleyError) {
            volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);
            parseAndDeliverNetworkError(request, volleyError);
        } catch (Exception e) {
            VolleyLog.e(e, "Unhandled exception %s", e.toString());
            VolleyError volleyError = new VolleyError(e);
            volleyError.setNetworkTimeMs(SystemClock.elapsedRealtime() - startTimeMs);
            mDelivery.postError(request, volleyError);
        }
    }
}
```

The call `Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND)` at the top gives these threads a low, background priority, so that they interfere as little as possible with the UI thread and keep the app smooth.

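Next, `mQueue.take()` takes the request at the head of the queue for processing, blocking while the queue is empty. Here mQueue is the network queue (mNetworkQueue in RequestQueue), that is, the queue our request was put on by add() above.

Skipping ahead to `mNetwork.performRequest(request)`: if the request has not been cancelled, which is the normal case, mNetwork's performRequest method carries out the request. Do you remember this mNetwork? It is the BasicNetwork created in newRequestQueue(), which wraps the HttpStack (and therefore HttpURLConnection or HttpClient) layer by layer.

Once the request has produced a result, `mDelivery.postResponse(request, response)` passes that result back.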
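The skeleton of NetworkDispatcher.run() can be modeled without Android. In this hypothetical sketch a Function<String, String> stands in for Network.performRequest(), trimming the body stands in for parseNetworkResponse(), and setting the request's delivered field stands in for mDelivery.postResponse(); processOne() is split out of the loop only so it can be tested directly.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Function;

// Hypothetical model of one NetworkDispatcher: take, skip if cancelled,
// perform, parse, write to cache, deliver.
final class NetworkLoopSketch {
    static final class Req {
        final String url;
        volatile boolean canceled;
        volatile String delivered; // last value handed back to the caller
        Req(String url) { this.url = url; }
    }

    final BlockingQueue<Req> queue = new LinkedBlockingQueue<>();
    final Map<String, String> cache = new ConcurrentHashMap<>();
    private final Function<String, String> network;
    private volatile boolean quit;

    NetworkLoopSketch(Function<String, String> network) { this.network = network; }

    // Same shape as NetworkDispatcher.run(): block on take(), exit only when quitting.
    void runLoop() {
        while (true) {
            Req request;
            try {
                request = queue.take();
            } catch (InterruptedException e) {
                if (quit) return;
                continue; // spurious interrupt: keep serving
            }
            processOne(request);
        }
    }

    void processOne(Req request) {
        if (request.canceled) {
            return; // like "network-discard-cancelled": never touches the network
        }
        String body = network.apply(request.url); // perform the network request
        String parsed = body.trim();              // "parse" on the worker thread
        cache.put(request.url, parsed);           // write to cache if applicable
        request.delivered = parsed;               // post the response back
    }

    void quit(Thread worker) {
        quit = true;
        worker.interrupt();
    }
}
```

Because parsing happens in processOne() on the worker thread, only the finished result ever needs to be handed to the delivery mechanism.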

Let's start with performRequest().

Network is an interface, and the implementation used here is BasicNetwork, so its overridden performRequest() looks like this:

- performRequest()

```java
@Override
public NetworkResponse performRequest(Request<?> request) throws VolleyError {
    long requestStart = SystemClock.elapsedRealtime();
    while (true) {
        HttpResponse httpResponse = null;
        byte[] responseContents = null;
        Map<String, String> responseHeaders = Collections.emptyMap();
        try {
            // Gather headers.
            Map<String, String> headers = new HashMap<String, String>();
            addCacheHeaders(headers, request.getCacheEntry());
            httpResponse = mHttpStack.performRequest(request, headers);
            StatusLine statusLine = httpResponse.getStatusLine();
            int statusCode = statusLine.getStatusCode();

            responseHeaders = convertHeaders(httpResponse.getAllHeaders());
            // Handle cache validation.
            if (statusCode == HttpStatus.SC_NOT_MODIFIED) {
                Entry entry = request.getCacheEntry();
                if (entry == null) {
                    return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, null,
                            responseHeaders, true,
                            SystemClock.elapsedRealtime() - requestStart);
                }

                // A HTTP 304 response does not have all header fields. We
                // have to use the header fields from the cache entry plus
                // the new ones from the response.
                // http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html#sec10.3.5
                entry.responseHeaders.putAll(responseHeaders);
                return new NetworkResponse(HttpStatus.SC_NOT_MODIFIED, entry.data,
                        entry.responseHeaders, true,
                        SystemClock.elapsedRealtime() - requestStart);
            }

            // Handle moved resources
            if (statusCode == HttpStatus.SC_MOVED_PERMANENTLY || statusCode == HttpStatus.SC_MOVED_TEMPORARILY) {
                String newUrl = responseHeaders.get("Location");
                request.setRedirectUrl(newUrl);
            }

            // Some responses such as 204s do not have content. We must check.
            if (httpResponse.getEntity() != null) {
                responseContents = entityToBytes(httpResponse.getEntity());
            } else {
                // Add 0 byte response as a way of honestly representing a
                // no-content request.
                responseContents = new byte[0];
            }

            // if the request is slow, log it.
            long requestLifetime = SystemClock.elapsedRealtime() - requestStart;
            logSlowRequests(requestLifetime, request, responseContents, statusLine);

            if (statusCode < 200 || statusCode > 299) {
                throw new IOException();
            }
            return new NetworkResponse(statusCode, responseContents, responseHeaders, false,
                    SystemClock.elapsedRealtime() - requestStart);
        } catch (Exception e) {
            // ···
        }
    }
}
```

In this code, `addCacheHeaders(headers, request.getCacheEntry())` first adds cache-related headers derived from the request's cache entry (conditional-request headers such as If-None-Match and If-Modified-Since). Then `mHttpStack.performRequest(request, headers)` sends the request together with these headers and returns an HttpResponse object.

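Next, the status code is read from the HttpResponse. If it equals HttpStatus.SC_NOT_MODIFIED (that is, 304), the resource has not changed on the server, so the cached content is reused: the method returns a NetworkResponse built from the cache entry's data, with the headers of the 304 response merged into the cached headers.

Otherwise the body is read with `entityToBytes(httpResponse.getEntity())` (or replaced by an empty byte array for responses such as 204 that have no content). If the status code lies between 200 and 299, the request succeeded, and the content is returned to NetworkDispatcher as part of a NetworkResponse object; any other status code throws an IOException, which is handled in the elided catch block.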
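The status-code handling just described can be expressed as one small function. This is a hypothetical sketch, not BasicNetwork itself: CacheEntry and Result are simplified stand-ins for Cache.Entry and NetworkResponse, and the HTTP call is assumed to have already produced the status code, headers and body.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of the status-code handling in BasicNetwork.performRequest().
final class StatusHandlingSketch {
    static final class CacheEntry {
        final byte[] data;
        final Map<String, String> responseHeaders;
        CacheEntry(byte[] data, Map<String, String> headers) {
            this.data = data;
            this.responseHeaders = new HashMap<>(headers);
        }
    }

    static final class Result {
        final int statusCode;
        final byte[] data;
        final Map<String, String> headers;
        final boolean notModified;
        Result(int statusCode, byte[] data, Map<String, String> headers, boolean notModified) {
            this.statusCode = statusCode;
            this.data = data;
            this.headers = headers;
            this.notModified = notModified;
        }
    }

    static Result handle(int statusCode, Map<String, String> headers, byte[] body,
                         CacheEntry cached) throws IOException {
        if (statusCode == 304) {
            if (cached == null) {
                return new Result(304, null, headers, true);
            }
            // A 304 carries only some headers: overlay them on the cached ones
            // and reuse the cached body.
            cached.responseHeaders.putAll(headers);
            return new Result(304, cached.data, cached.responseHeaders, true);
        }
        // Responses such as 204 have no body; use an empty array instead of null.
        byte[] contents = body != null ? body : new byte[0];
        if (statusCode < 200 || statusCode > 299) {
            throw new IOException("Unexpected status " + statusCode);
        }
        return new Result(statusCode, contents, headers, false);
    }
}
```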

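When NetworkDispatcher receives this NetworkResponse, it calls the Request's parseNetworkResponse() method to parse the data in it, and then, if the request is cacheable and the parsed Response carries a cacheEntry, writes that entry to the cache. Parsing is left to Request subclasses, because different kinds of Request naturally parse data differently. This is why we must override parseNetworkResponse() when we write a custom Request.

After the data in the NetworkResponse has been parsed, ExecutorDelivery's postResponse() method is called to deliver the parsed result.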

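Next comes postResponse() (the two-argument version called by NetworkDispatcher simply delegates to this one with a null Runnable):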

- postResponse()

```java
@Override
public void postResponse(Request<?> request, Response<?> response, Runnable runnable) {
    request.markDelivered();
    request.addMarker("post-response");
    mResponsePoster.execute(new ResponseDeliveryRunnable(request, response, runnable));
}
```

Here `mResponsePoster.execute(...)` is called with a ResponseDeliveryRunnable object. Now look at how mResponsePoster is defined:

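```java
public ExecutorDelivery(final Handler handler) {
    // Make an Executor that just wraps the handler.
    mResponsePoster = new Executor() {
        @Override
        public void execute(Runnable command) {
            handler.post(command);
        }
    };
}
```

In other words, the ResponseDeliveryRunnable is sent out through the Handler's post() method. Why does it have to go to the main Looper? RequestQueue does its work on background dispatcher threads, so without this step the callbacks would also run on a background thread, and touching the UI from there would throw an exception. The Handler passed in here is created by RequestQueue with Looper.getMainLooper(), so whatever is posted to it runs on the main thread. And why post()? Because the callback should only happen once the message has been delivered: post() accepts a Runnable, and when the main Looper processes the message it calls that Runnable's run() method. That run() method checks the result and invokes the callbacks: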
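The Executor-around-Handler idea can be reproduced in plain Java by letting a single-thread executor play the main Looper. DeliverySketch and the "fake-main" thread are hypothetical; on Android the Executor would call handler.post(command) on a Handler created with Looper.getMainLooper().

```java
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Consumer;

// Hypothetical plain-Java model of ExecutorDelivery: a worker thread hands results to
// an Executor, and that Executor decides which thread runs the callback.
final class DeliverySketch {
    private final Executor responsePoster;

    DeliverySketch(ExecutorService fakeMainLooper) {
        // Same shape as ExecutorDelivery: an Executor whose execute() just forwards
        // the command, where Android would call handler.post(command).
        this.responsePoster = command -> fakeMainLooper.execute(command);
    }

    // Mirrors postResponse(): wrap the delivery in a Runnable and hand it over.
    <T> void postResponse(T result, Consumer<T> listener) {
        responsePoster.execute(() -> listener.accept(result));
    }

    // A single named thread standing in for Android's main thread.
    static ExecutorService fakeMainThread() {
        return Executors.newSingleThreadExecutor(r -> new Thread(r, "fake-main"));
    }
}
```

The network worker never runs the listener itself; it only hands a Runnable to the executor, just as ExecutorDelivery hands one to the Handler.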

- run()

```java
@Override
public void run() {
    // If this request has canceled, finish it and don't deliver.
    if (mRequest.isCanceled()) {
        mRequest.finish("canceled-at-delivery");
        return;
    }

    // Deliver a normal response or error, depending.
    if (mResponse.isSuccess()) {
        mRequest.deliverResponse(mResponse.result);
    } else {
        mRequest.deliverError(mResponse.error);
    }

    // If this is an intermediate response, add a marker, otherwise we're done
    // and the request can be finished.
    if (mResponse.intermediate) {
        mRequest.addMarker("intermediate-response");
    } else {
        mRequest.finish("done");
    }

    // If we have been provided a post-delivery runnable, run it.
    if (mRunnable != null) {
        mRunnable.run();
    }
}
```

As you can see, a cancelled request is finished without any delivery; otherwise `mRequest.deliverResponse(mResponse.result)` hands a successful result back to the Request (and a failure goes to deliverError()). As an example, here is how StringRequest implements this method:

- deliverResponse()

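```java
@Override
protected void deliverResponse(String response) {
    if (mListener != null) {
        mListener.onResponse(response);
    }
}
```

It simply calls onResponse() on the Listener we passed in when constructing the StringRequest, which hands the result back to our Activity. deliverError() works the same way, through the error listener.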
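Putting ResponseDeliveryRunnable.run() and StringRequest.deliverResponse() together, the delivery rules look roughly like this. It is a simplified, hypothetical model: Req is not Volley's Request, java.util.function.Consumer stands in for Response.Listener and Response.ErrorListener, and a null error means success, as with Response.isSuccess().

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of ResponseDeliveryRunnable.run() plus StringRequest.deliverResponse().
final class DeliveryRunnableSketch {
    static final class Req {
        boolean canceled;
        boolean finished;
        final List<String> markers = new ArrayList<>();
        final Consumer<String> listener;      // plays the role of Response.Listener<String>
        final Consumer<String> errorListener; // plays the role of Response.ErrorListener
        Req(Consumer<String> listener, Consumer<String> errorListener) {
            this.listener = listener;
            this.errorListener = errorListener;
        }
    }

    static void deliver(Req request, String result, String error,
                        boolean intermediate, Runnable afterDelivery) {
        if (request.canceled) {
            request.finished = true; // "canceled-at-delivery": no callback at all
            return;
        }
        if (error == null) { // success, like Response.isSuccess()
            if (request.listener != null) request.listener.accept(result);
        } else {
            if (request.errorListener != null) request.errorListener.accept(error);
        }
        if (intermediate) {
            request.markers.add("intermediate-response"); // a fresher result may follow
        } else {
            request.finished = true;
        }
        if (afterDelivery != null) {
            afterDelivery.run(); // e.g. CacheDispatcher's "now send it to the network"
        }
    }
}
```

An intermediate delivery leaves the request unfinished, so the listener can be called a second time when the network result arrives.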

Having covered the network thread NetworkDispatcher, let's finally look at how the cache thread CacheDispatcher works, starting with its run() method:

- run()

```java
@Override
public void run() {
    if (DEBUG) VolleyLog.v("start new dispatcher");
    Process.setThreadPriority(Process.THREAD_PRIORITY_BACKGROUND);

    // Make a blocking call to initialize the cache.
    mCache.initialize();

    Request<?> request;
    while (true) {
        // release previous request object to avoid leaking request object when mQueue is drained.
        request = null;
        try {
            // Take a request from the queue.
            request = mCacheQueue.take();
        } catch (InterruptedException e) {
            // We may have been interrupted because it was time to quit.
            if (mQuit) {
                return;
            }
            continue;
        }
        try {
            request.addMarker("cache-queue-take");

            // If the request has been canceled, don't bother dispatching it.
            if (request.isCanceled()) {
                request.finish("cache-discard-canceled");
                continue;
            }

            // Attempt to retrieve this item from cache.
            Cache.Entry entry = mCache.get(request.getCacheKey());
            if (entry == null) {
                request.addMarker("cache-miss");
                // Cache miss; send off to the network dispatcher.
                mNetworkQueue.put(request);
                continue;
            }

            // If it is completely expired, just send it to the network.
            if (entry.isExpired()) {
                request.addMarker("cache-hit-expired");
                request.setCacheEntry(entry);
                mNetworkQueue.put(request);
                continue;
            }

            // We have a cache hit; parse its data for delivery back to the request.
            request.addMarker("cache-hit");
            Response<?> response = request.parseNetworkResponse(
                    new NetworkResponse(entry.data, entry.responseHeaders));
            request.addMarker("cache-hit-parsed");

            if (!entry.refreshNeeded()) {
                // Completely unexpired cache hit. Just deliver the response.
                mDelivery.postResponse(request, response);
            } else {
                // Soft-expired cache hit. We can deliver the cached response,
                // but we need to also send the request to the network for
                // refreshing.
                request.addMarker("cache-hit-refresh-needed");
                request.setCacheEntry(entry);

                // Mark the response as intermediate.
                response.intermediate = true;

                // Post the intermediate response back to the user and have
                // the delivery then forward the request along to the network.
                final Request<?> finalRequest = request;
                mDelivery.postResponse(request, response, new Runnable() {
                    @Override
                    public void run() {
                        try {
                            mNetworkQueue.put(finalRequest);
                        } catch (InterruptedException e) {
                            // Not much we can do about this.
                        }
                    }
                });
            }
        } catch (Exception e) {
            VolleyLog.e(e, "Unhandled exception %s", e.toString());
        }
    }
}
```

First, after the blocking `mCache.initialize()` call, there is a `while (true)` loop, which shows that the cache thread keeps running until it is told to quit.

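Then `mCache.get(request.getCacheKey())` tries to fetch the cached response. If nothing is found, the request is added to the network queue. If an entry is found, the thread checks whether it has expired; if it has, the request is likewise added to the network queue. Otherwise there is no need to resend the network request, and the cached data can be used directly.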

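After that, the Request's parseNetworkResponse() method parses the cached data, and the parsed result is delivered in exactly the same way as described above. One detail: if the entry is only soft-expired (`entry.refreshNeeded()` returns true), the cached response is delivered as an intermediate response and the request is also forwarded to the network queue to fetch fresh data.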
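The four outcomes CacheDispatcher chooses between can be summarized in a small decision function. This hypothetical sketch assumes, as in Volley's Cache.Entry, that ttl and softTtl are absolute timestamps in milliseconds, with isExpired() comparing against ttl and refreshNeeded() against softTtl; the current time is passed in so the logic can be tested.

```java
// Hypothetical model of the decision CacheDispatcher makes for each request.
// ttl/softTtl are absolute expiry times in milliseconds.
final class CacheDecisionSketch {
    enum Action { NETWORK_MISS, NETWORK_EXPIRED, DELIVER_CACHED, DELIVER_THEN_REFRESH }

    static final class Entry {
        final long ttl;     // hard expiry: after this, the entry is not used at all
        final long softTtl; // soft expiry: after this, deliver it but also refresh
        Entry(long ttl, long softTtl) {
            this.ttl = ttl;
            this.softTtl = softTtl;
        }
        boolean isExpired(long now) { return ttl < now; }
        boolean refreshNeeded(long now) { return softTtl < now; }
    }

    // `now` is passed in instead of calling System.currentTimeMillis() so it is testable.
    static Action decide(Entry entry, long now) {
        if (entry == null) return Action.NETWORK_MISS;              // "cache-miss"
        if (entry.isExpired(now)) return Action.NETWORK_EXPIRED;    // "cache-hit-expired"
        if (!entry.refreshNeeded(now)) return Action.DELIVER_CACHED; // plain cache hit
        return Action.DELIVER_THEN_REFRESH;                         // "cache-hit-refresh-needed"
    }
}
```

Keeping a softTtl earlier than the ttl is what lets the UI show slightly stale data immediately while the network refresh runs in the background.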

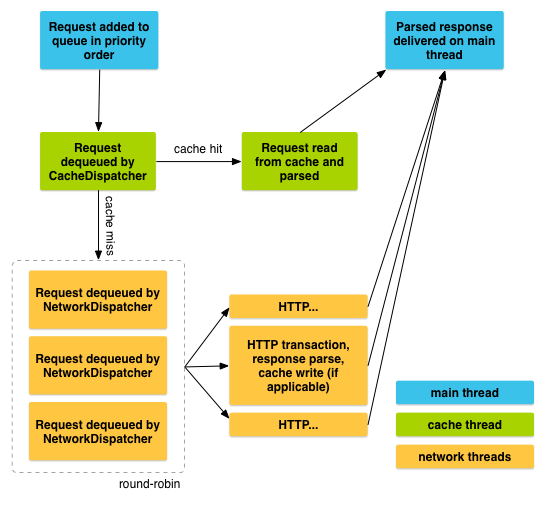

At this point, we can review the whole process with the official Volley flowchart shown above.

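In it, blue represents the main thread, green the cache thread, and orange the network threads. On the main thread we call RequestQueue's add() method to add a network request. The request is first put on the cache queue (if it is cacheable, which is the default). If a matching cached result is found, it is read from the cache, parsed, and delivered back to the main thread. If no result is found in the cache, the request is added to the network queue, which then sends the HTTP request, parses the response, writes it to the cache, and delivers the result back to the main thread.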

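I hope this series has helped you understand Volley clearly and master it, even though it is no longer very popular. Next, I will keep explaining other good open-source frameworks. Thanks!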