
I. Create the user

# useradd spark

# passwd spark
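If you rerun these steps on a machine that may already have the account, a guard like the one below avoids the "user already exists" error. This is only a sketch: `user_exists` and `ensure_user` are hypothetical helper names of my own, and the functions must be called as root for `useradd` to work.

```shell
# user_exists NAME: succeed if the account NAME is known to the system.
user_exists() {
    id -u "$1" >/dev/null 2>&1
}

# ensure_user NAME: create the account only when it is missing (hypothetical helper).
ensure_user() {
    if user_exists "$1"; then
        echo "user $1 already exists"
    else
        useradd "$1" && echo "user $1 created; set a password with: passwd $1"
    fi
}

# Usage (as root):
#   ensure_user spark
```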

II. Download the software

JDK, Scala, SBT, and Maven

The versions used are:

JDK jdk-7u79-linux-x64.gz

Scala scala-2.10.5.tgz

SBT sbt-0.13.7.zip

Maven apache-maven-3.2.5-bin.tar.gz

Note: if you only want to install a Spark environment, JDK and Scala are enough; SBT and Maven are needed only for compiling the source code later.

III. Extract the archives and configure environment variables

# cd /usr/local/

# tar xvf /root/jdk-7u79-linux-x64.gz

# tar xvf /root/scala-2.10.5.tgz

# tar xvf /root/apache-maven-3.2.5-bin.tar.gz

# unzip /root/sbt-0.13.7.zip
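The four extraction commands above differ only in the tool they call, so they can be folded into one helper that picks the tool from the file suffix. A minimal sketch, assuming every `.gz`/`.tgz` file is a gzip-compressed tarball (true for the JDK archive used here, despite its plain `.gz` name); `extract_into` is a hypothetical name.

```shell
# extract_into DEST ARCHIVE: unpack ARCHIVE under DEST, choosing the tool by suffix.
extract_into() {
    dest=$1
    archive=$2
    mkdir -p "$dest" || return 1
    case "$archive" in
        # Assumption: anything ending in .gz/.tgz is a gzip-compressed tar archive.
        *.tar.gz|*.tgz|*.gz) tar -xzf "$archive" -C "$dest" ;;
        *.zip)               unzip -q "$archive" -d "$dest" ;;
        *) echo "unsupported archive: $archive" >&2; return 1 ;;
    esac
}

# Usage (as root), mirroring the commands above:
#   for f in jdk-7u79-linux-x64.gz scala-2.10.5.tgz \
#            apache-maven-3.2.5-bin.tar.gz sbt-0.13.7.zip; do
#       extract_into /usr/local "/root/$f"
#   done
```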

Edit the system-wide environment configuration file

# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.7.0_79
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export SCALA_HOME=/usr/local/scala-2.10.5
export MAVEN_HOME=/usr/local/apache-maven-3.2.5
export SBT_HOME=/usr/local/sbt
export PATH=$PATH:$JAVA_HOME/bin:$SCALA_HOME/bin:$MAVEN_HOME/bin:$SBT_HOME/bin
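A typo in any of these paths only shows up later as a "command not found", so it can help to confirm that each `*_HOME` variable points at a real directory once the profile has been sourced. The following is a rough sketch; `check_home` is a hypothetical helper, not part of any of the tools installed here.

```shell
# check_home NAME: report whether the directory stored in variable NAME exists.
check_home() {
    eval "dir=\${$1:-}"          # indirect lookup of the variable called NAME
    if [ -n "$dir" ] && [ -d "$dir" ]; then
        echo "$1 ok: $dir"
    else
        echo "$1 missing: ${dir:-<unset>}"
        return 1
    fi
}

# Check the four variables set in /etc/profile; keep going after a failure.
for name in JAVA_HOME SCALA_HOME MAVEN_HOME SBT_HOME; do
    check_home "$name" || true
done
```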

Apply the configuration

# source /etc/profile

Check that the environment variables took effect

# java -version

java version "1.7.0_79"
Java(TM) SE Runtime Environment (build 1.7.0_79-b15)
Java HotSpot(TM) 64-Bit Server VM (build 24.79-b02, mixed mode)

# scala -version

Scala code runner version 2.10.5 -- Copyright 2002-2013, LAMP/EPFL

# mvn -version

Apache Maven 3.2.5 (12a6b3acb947671f09b81f49094c53f426d8cea1; 2014-12-15T01:29:23+08:00)
Maven home: /usr/local/apache-maven-3.2.5
Java version: 1.7.0_79, vendor: Oracle Corporation
Java home: /usr/local/jdk1.7.0_79/jre
Default locale: en_US, platform encoding: UTF-8
OS name: "linux", version: "3.10.0-229.el7.x86_64", arch: "amd64", family: "unix"

# sbt --version

sbt launcher version 0.13.7
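Before reading through four separate version banners, a single pass that only asks whether each command resolves on `PATH` gives a quick yes/no answer. A small sketch using the POSIX `command -v` builtin; `on_path` is a hypothetical helper name.

```shell
# on_path CMD: succeed if CMD resolves on the current PATH.
on_path() {
    command -v "$1" >/dev/null 2>&1
}

# Report where each tool was found, or that it is missing.
for tool in java scala mvn sbt; do
    if on_path "$tool"; then
        echo "$tool -> $(command -v "$tool")"
    else
        echo "$tool not found on PATH"
    fi
done
```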

IV. Bind the hostname

[root@spark01 ~]# vim /etc/hosts

192.168.244.147 spark01
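Editing `/etc/hosts` by hand is fine for one machine; for repeated runs, an append that first checks for an existing entry keeps the file free of duplicates. This is a sketch under my own naming (`add_host_entry` is hypothetical), and it only checks the hostname, not whether the existing IP address is the right one.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE unless NAME already has an entry.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Match NAME as a whole field: preceded by whitespace, followed by whitespace or end of line.
    if grep -Eq "[[:space:]]$name([[:space:]]|\$)" "$file" 2>/dev/null; then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage (as root):
#   add_host_entry /etc/hosts 192.168.244.147 spark01
```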

V. Configure Spark

Switch to the spark user.

Download Hadoop and Spark; wget works well for this.

spark-1.4.0 http://d3kbcqa49mib13.cloudfront.net/spark-1.4.0-bin-hadoop2.6.tgz

Hadoop http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz
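Both archives are large, so it is worth skipping the download when the file is already present and resuming when a previous attempt was cut off. A minimal sketch, assuming `wget` is installed; `fetch_once` is a hypothetical helper, and the existence check does not verify that an existing file is complete.

```shell
# fetch_once URL DIR: download URL into DIR unless a non-empty file of that name is already there.
fetch_once() {
    url=$1 dir=$2
    target=$dir/${url##*/}       # file name = last path component of the URL
    if [ -s "$target" ]; then
        echo "skip: $target already exists"
    else
        wget -c -P "$dir" "$url" # -c resumes a partial download, -P sets the target directory
    fi
}

# Usage (as the spark user), with the two URLs listed above:
#   fetch_once http://d3kbcqa49mib13.cloudfront.net/spark-1.4.0-bin-hadoop2.6.tgz "$HOME"
#   fetch_once http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz "$HOME"
```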

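Extract both archives in the spark user's home directory and configure the environment variables

Edit the spark user's environment configuration file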

[spark@spark01 ~]$ vim .bash_profile

export SPARK_HOME=$HOME/spark-1.4.0-bin-hadoop2.6
export HADOOP_HOME=$HOME/hadoop-2.6.0
export HADOOP_CONF_DIR=$HOME/hadoop-2.6.0/etc/hadoop
export PATH=$PATH:$SPARK_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Apply the configuration

[spark@spark01 ~]$ source .bash_profile

Edit the Spark configuration file

[spark@spark01 ~]$ cd spark-1.4.0-bin-hadoop2.6/conf/

[spark@spark01 conf]$ cp spark-env.sh.template spark-env.sh

[spark@spark01 conf]$ vim spark-env.sh

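Append the following lines at the end of the file:

export SCALA_HOME=/usr/local/scala-2.10.5
export SPARK_MASTER_IP=spark01
export SPARK_WORKER_MEMORY=1500m
export JAVA_HOME=/usr/local/jdk1.7.0_79

If your machine has memory to spare, set SPARK_WORKER_MEMORY higher; my virtual machine has only 2 GB of RAM, so I allotted just 1500m.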
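One way to pick the value is to subtract a fixed reserve for the operating system and the Spark daemons from the machine's total memory. The sketch below does that arithmetic; the 512 MB default reserve is my own illustrative figure rather than an official Spark recommendation, and `suggest_worker_memory` is a hypothetical helper.

```shell
# suggest_worker_memory TOTAL_MB [RESERVE_MB]
# Prints a SPARK_WORKER_MEMORY value that leaves RESERVE_MB (default 512,
# an arbitrary illustrative figure) for the OS and the Spark daemons.
suggest_worker_memory() {
    total=$1
    reserve=${2:-512}
    if [ "$total" -le "$reserve" ]; then
        echo "not enough memory: ${total} MB total" >&2
        return 1
    fi
    echo "$((total - reserve))m"
}

# On Linux, total physical memory (in kB) is listed in /proc/meminfo.
if [ -r /proc/meminfo ]; then
    total_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
    echo "export SPARK_WORKER_MEMORY=$(suggest_worker_memory "$total_mb")"
fi
```

With a nominal 2048 MB this prints `1536m`, close to the 1500m chosen above.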

Configure slaves

[spark@spark01 conf]$ cp slaves.template slaves

[spark@spark01 conf]$ vim slaves

Change localhost to spark01

Start the master

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/spark/spark-1.4.0-bin-hadoop2.6/sbin/../logs/spark-spark-org.apache.spark.deploy.master.Master-1-spark01.out

Inspect the log file named in that output

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ cd logs/

[spark@spark01 logs]$ cat spark-spark-org.apache.spark.deploy.master.Master-1-spark01.out

Spark Command: /usr/local/jdk1.7.0_79/bin/java -cp /home/spark/spark-1.4.0-bin-hadoop2.6/sbin/../conf/:/home/spark/spark-1.4.0-bin-hadoop2.6/lib/spark-assembly-1.4.0-hadoop2.6.0.jar:/home/spark/spark-1.4.0-bin-hadoop2.6/lib/datanucleus-core-3.2.10.jar:/home/spark/spark-1.4.0-bin-hadoop2.6/lib/datanucleus-api-jdo-3.2.6.jar:/home/spark/spark-1.4.0-bin-hadoop2.6/lib/datanucleus-rdbms-3.2.9.jar:/home/spark/hadoop-2.6.0/etc/hadoop/ -Xms512m -Xmx512m -XX:MaxPermSize=128m org.apache.spark.deploy.master.Master --ip spark01 --port 7077 --webui-port 8080

========================================

16/01/16 15:12:30 INFO master.Master: Registered signal handlers for [TERM, HUP, INT]
16/01/16 15:12:31 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/01/16 15:12:32 INFO spark.SecurityManager: Changing view acls to: spark
16/01/16 15:12:32 INFO spark.SecurityManager: Changing modify acls to: spark
16/01/16 15:12:32 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)
16/01/16 15:12:33 INFO slf4j.Slf4jLogger: Slf4jLogger started
16/01/16 15:12:33 INFO Remoting: Starting remoting
16/01/16 15:12:33 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkMaster@spark01:7077]
16/01/16 15:12:33 INFO util.Utils: Successfully started service 'sparkMaster' on port 7077.
16/01/16 15:12:34 INFO server.Server: jetty-8.y.z-SNAPSHOT
16/01/16 15:12:34 INFO server.AbstractConnector: Started SelectChannelConnector@spark01:6066
16/01/16 15:12:34 INFO util.Utils: Successfully started service on port 6066.
16/01/16 15:12:34 INFO rest.StandaloneRestServer: Started REST server for submitting applications on port 6066
16/01/16 15:12:34 INFO master.Master: Starting Spark master at spark://spark01:7077
16/01/16 15:12:34 INFO master.Master: Running Spark version 1.4.0
16/01/16 15:12:34 INFO server.Server: jetty-8.y.z-SNAPSHOT
16/01/16 15:12:34 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:8080
16/01/16 15:12:34 INFO util.Utils: Successfully started service 'MasterUI' on port 8080.
16/01/16 15:12:34 INFO ui.MasterWebUI: Started MasterWebUI at http://192.168.244.147:8080
16/01/16 15:12:34 INFO master.Master: I have been elected leader! New state: ALIVE

The log confirms that the master started normally.
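Rather than reading the whole file, you can look for the two lines that matter: the one announcing the `spark://` URL and the one reporting `New state: ALIVE`. A small sketch based on the log text shown in this article; `master_is_alive` and `master_url` are hypothetical helper names, and other Spark versions may word these messages differently.

```shell
# master_is_alive LOGFILE: succeed if the log records the ALIVE transition.
master_is_alive() {
    grep -q 'New state: ALIVE' "$1"
}

# master_url LOGFILE: print the spark:// URL the master announced, if any.
master_url() {
    sed -n 's|.*Starting Spark master at \(spark://[^ ]*\).*|\1|p' "$1" | head -n 1
}

# Usage, from the Spark installation directory:
#   log=logs/spark-spark-org.apache.spark.deploy.master.Master-1-spark01.out
#   master_is_alive "$log" && echo "master is up at $(master_url "$log")"
```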

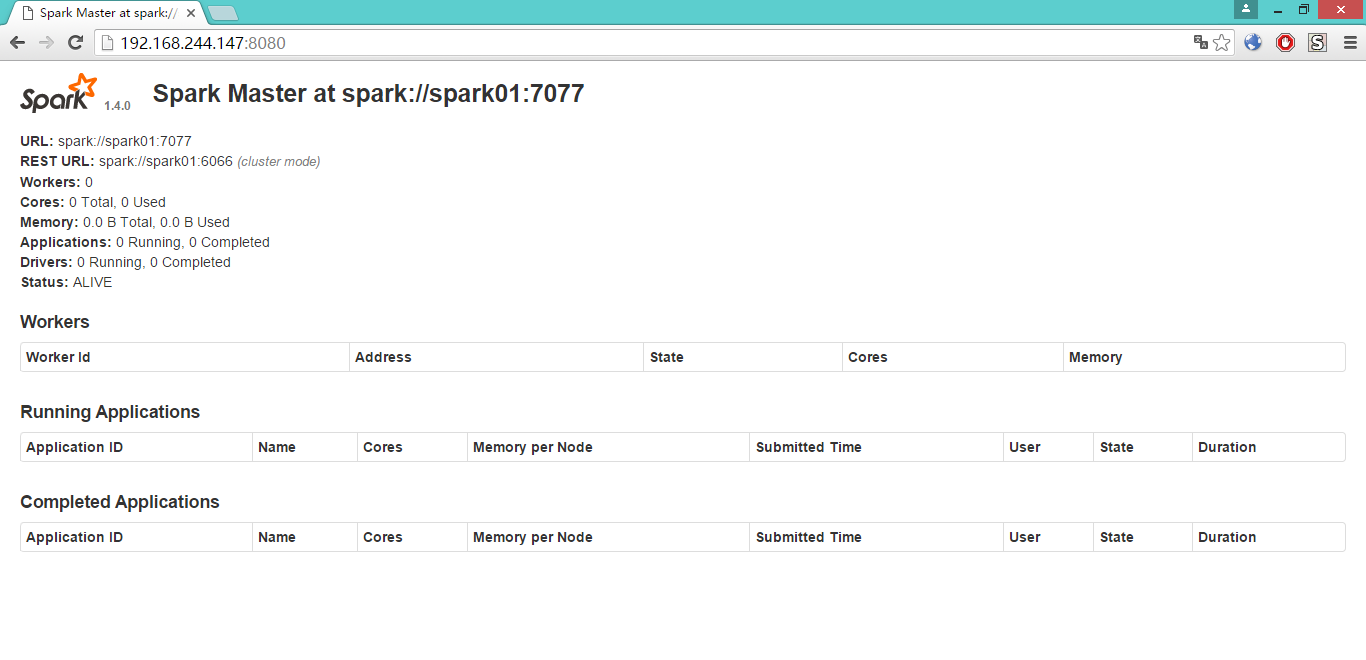

下麵來看看master的 web管理界面,預設在8080埠

Start the worker

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ sbin/start-slaves.sh spark://spark01:7077

spark01: Warning: Permanently added 'spark01,192.168.244.147' (ECDSA) to the list of known hosts.
spark@spark01's password:
spark01: starting org.apache.spark.deploy.worker.Worker, logging to /home/spark/spark-1.4.0-bin-hadoop2.6/sbin/../logs/spark-spark-org.apache.spark.deploy.worker.Worker-1-spark01.out

Enter the password of the spark user on spark01 when prompted.

The worker's log shows whether it started properly; the output is too long to reproduce here.

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ cd logs/

[spark@spark01 logs]$ cat spark-spark-org.apache.spark.deploy.worker.Worker-1-spark01.out
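Since the worker log is long, filtering it down to the WARN and ERROR entries is usually enough to spot a failed start. The sketch below assumes the worker log uses the same `date time LEVEL class: message` layout as the master log shown earlier; `log_problems` is a hypothetical helper name.

```shell
# log_problems LOGFILE: print only the WARN and ERROR entries.
# Assumes each entry starts with "yy/mm/dd hh:mm:ss LEVEL ".
log_problems() {
    grep -E '^[0-9/]+ [0-9:]+ (WARN|ERROR) ' "$1"
}

# Usage, from the logs directory:
#   log_problems spark-spark-org.apache.spark.deploy.worker.Worker-1-spark01.out \
#       || echo "no warnings or errors"
```

A WARN about the native-hadoop library, like the one in the master log, is harmless; ERROR entries are the ones to investigate.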

Start the Spark shell

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ bin/spark-shell --master spark://spark01:7077

16/01/16 15:33:17 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/01/16 15:33:18 INFO spark.SecurityManager: Changing view acls to: spark
16/01/16 15:33:18 INFO spark.SecurityManager: Changing modify acls to: spark
16/01/16 15:33:18 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)
16/01/16 15:33:18 INFO spark.HttpServer: Starting HTTP Server
16/01/16 15:33:18 INFO server.Server: jetty-8.y.z-SNAPSHOT
16/01/16 15:33:18 INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:42300
16/01/16 15:33:18 INFO util.Utils: Successfully started service 'HTTP class server' on port 42300.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.4.0
      /_/

Using Scala version 2.10.4 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_79)
Type in expressions to have them evaluated.
Type :help for more information.
16/01/16 15:33:30 INFO spark.SparkContext: Running Spark version 1.4.0
16/01/16 15:33:30 INFO spark.SecurityManager: Changing view acls to: spark
16/01/16 15:33:30 INFO spark.SecurityManager: Changing modify acls to: spark
16/01/16 15:33:30 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)
16/01/16 15:33:31 INFO slf4j.Slf4jLogger: Slf4jLogger started
16/01/16 15:33:31 INFO Remoting: Starting remoting
16/01/16 15:33:31 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://[email protected]:43850]
16/01/16 15:33:31 INFO util.Utils: Successfully started service 'sparkDriver' on port 43850.
16/01/16 15:33:31 INFO spark.SparkEnv: Registering MapOutputTracker
16/01/16 15:33:31 INFO spark.SparkEnv: Registering BlockManagerMaster
16/01/16 15:33:31 INFO storage.DiskBlockManager: Created local directory at /tmp/spark-7b7bd4bd-ff20-4e3d-a354-61a4ca7c4b2f/blockmgr-0e855210-3609-4204-b5e3-151e0c096c15
16/01/16 15:33:31 INFO storage.MemoryStore: MemoryStore started with capacity 265.4 MB
16/01/16 15:33:31 INFO spark.HttpFileServer: HTTP File server directory is /tmp/spark-7b7bd4bd-ff20-4e3d-a354-61a4ca7c4b2f/httpd-56ac16d2-dd82-41cb-99d7-4d11ef36b42e
16/01/16 15:33:31 INFO spark.HttpServer: Starting HTTP Server
16/01/16 15:33:31 INFO server.Server: jetty-8.y.z-SNAPSHOT
16/01/16 15:33:31 INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:47633
16/01/16 15:33:31 INFO util.Utils: Successfully started service 'HTTP file server' on port 47633.
16/01/16 15:33:31 INFO spark.SparkEnv: Registering OutputCommitCoordinator
16/01/16 15:33:31 INFO server.Server: jetty-8.y.z-SNAPSHOT
16/01/16 15:33:31 INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:4040
16/01/16 15:33:31 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.
16/01/16 15:33:31 INFO ui.SparkUI: Started SparkUI at http://192.168.244.147:4040
16/01/16 15:33:32 INFO client.AppClient$ClientActor: Connecting to master akka.tcp://sparkMaster@spark01:7077/user/Master...
16/01/16 15:33:33 INFO cluster.SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20160116153332-0000
16/01/16 15:33:33 INFO client.AppClient$ClientActor: Executor added: app-20160116153332-0000/0 on worker-20160116152314-192.168.244.147-58914 (192.168.244.147:58914) with 2 cores
16/01/16 15:33:33 INFO cluster.SparkDeploySchedulerBackend: Granted executor ID app-20160116153332-0000/0 on hostPort 192.168.244.147:58914 with 2 cores, 512.0 MB RAM
16/01/16 15:33:33 INFO client.AppClient$ClientActor: Executor updated: app-20160116153332-0000/0 is now LOADING
16/01/16 15:33:33 INFO client.AppClient$ClientActor: Executor updated: app-20160116153332-0000/0 is now RUNNING
16/01/16 15:33:34 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 33146.
16/01/16 15:33:34 INFO netty.NettyBlockTransferService: Server created on 33146
16/01/16 15:33:34 INFO storage.BlockManagerMaster: Trying to register BlockManager
16/01/16 15:33:34 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.244.147:33146 with 265.4 MB RAM, BlockManagerId(driver, 192.168.244.147, 33146)
16/01/16 15:33:34 INFO storage.BlockManagerMaster: Registered BlockManager
16/01/16 15:33:34 INFO cluster.SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0
16/01/16 15:33:34 INFO repl.SparkILoop: Created spark context..
Spark context available as sc.
16/01/16 15:33:38 INFO hive.HiveContext: Initializing execution hive, version 0.13.1
16/01/16 15:33:43 INFO metastore.HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore
16/01/16 15:33:43 INFO metastore.ObjectStore: ObjectStore, initialize called
16/01/16 15:33:44 INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored
16/01/16 15:33:44 INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored
16/01/16 15:33:44 INFO cluster.SparkDeploySchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://[email protected]:46741/user/Executor#-2043358626]) with ID 0
16/01/16 15:33:44 WARN DataNucleus.Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
16/01/16 15:33:45 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.244.147:33017 with 265.4 MB RAM, BlockManagerId(0, 192.168.244.147, 33017)
16/01/16 15:33:46 WARN DataNucleus.Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
16/01/16 15:33:48 INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"
16/01/16 15:33:48 INFO metastore.MetaStoreDirectSql: MySQL check failed, assuming we are not on mysql: Lexical error at line 1, column 5. Encountered: "@" (64), after : "".
16/01/16 15:33:52 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
16/01/16 15:33:52 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
16/01/16 15:33:54 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
16/01/16 15:33:54 INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
16/01/16 15:33:54 INFO metastore.ObjectStore: Initialized ObjectStore
16/01/16 15:33:54 WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 0.13.1aa
16/01/16 15:33:55 INFO metastore.HiveMetaStore: Added admin role in metastore
16/01/16 15:33:55 INFO metastore.HiveMetaStore: Added public role in metastore
16/01/16 15:33:56 INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty
16/01/16 15:33:56 INFO session.SessionState: No Tez session required at this point. hive.execution.engine=mr.
16/01/16 15:33:56 INFO repl.SparkILoop: Created sql context (with Hive support)..
SQL context available as sqlContext.

scala>

Once the Spark shell is open, you can write a simple program and say hello to the world

scala> println("helloworld")
helloworld

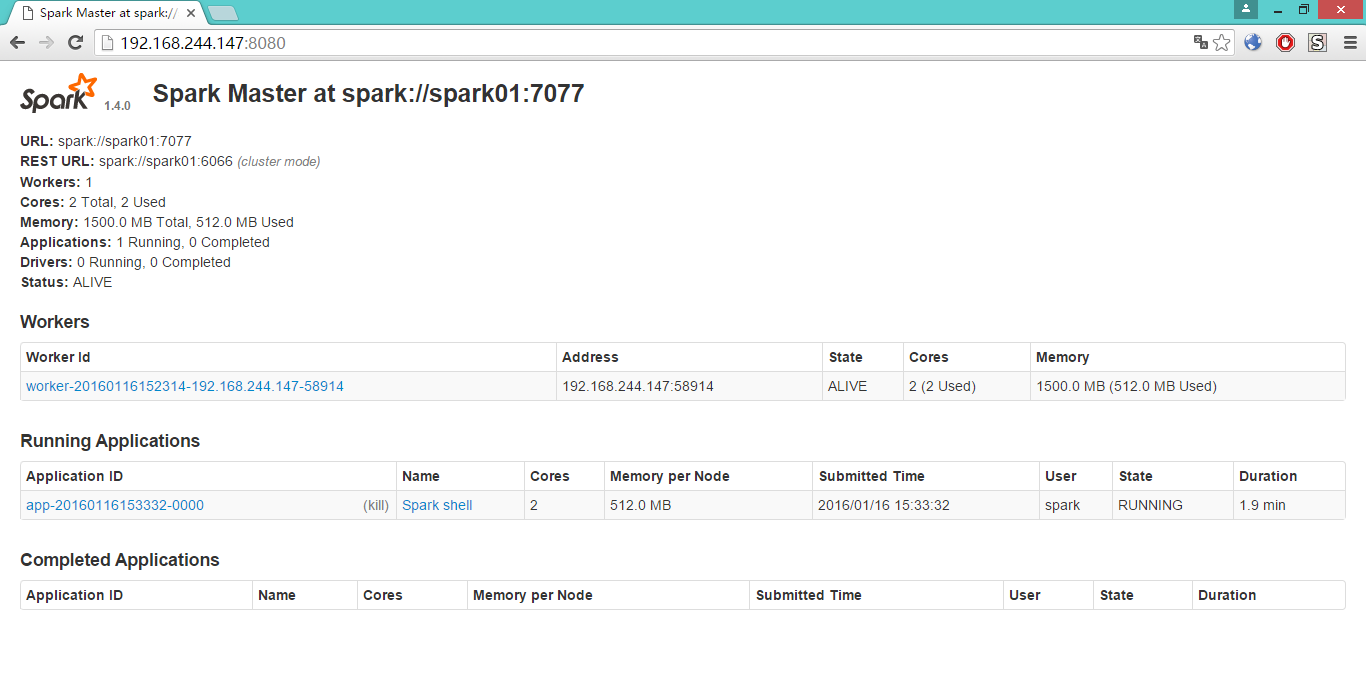

再來看看spark的web管理界面,可以看出,多了一個Workders和Running Applications的信息

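That completes the Spark pseudo-distributed setup.

A few points to note:

1. Maven and SBT are optional here; they are needed only for compiling the source code later. If all you want is a working Spark environment, you can skip downloading them.

2. This pseudo-distributed environment is in fact the foundation of a cluster: only a few changes are needed before copying it to the slave nodes. To keep this article short, that is left for a later post.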