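Hive was created to ease the difficulty of writing MapReduce jobs on Hadoop, and it is aimed at people who already know SQL. With a working knowledge of SQL you can use Hive to produce MapReduce programs without having to learn the Hadoop API.

Before deploying, make sure the JDK and Hadoop are installed.

If you still need to install the JDK and Hadoop, see my earlier posts:

Installing the JDK on Linux

Installing pseudo-distributed Hadoop on Linux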

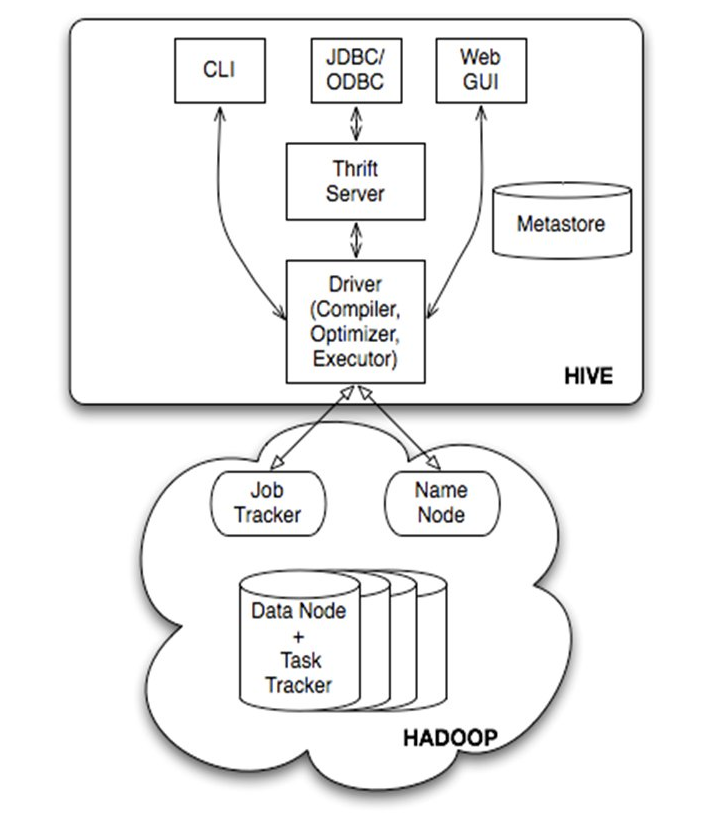

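Before installing, take a look at what Hive consists of.

Download and extract

Choose a mirror from the Apache download page:

http://www.apache.org/dyn/closer.cgi/hive/

On the mirror, pick the version to download. I used 2.1.0, the latest release at the time of writing.

https://mirrors.tuna.tsinghua.edu.cn/apache/hive/hive-2.1.0/

After downloading, extract the archive.

# Extract the archive

tar -zxvf apache-hive-2.1.0-bin.tar.gz

# Move it to the target directory

mv apache-hive-2.1.0-bin /usr

Set the environment variables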

# Edit /etc/profile

vi /etc/profile

# set hive environment

export HIVE_HOME=/usr/apache-hive-2.1.0-bin

export PATH=$PATH:$HIVE_HOME/bin
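The PATH line above appends unconditionally, so every time /etc/profile is sourced the Hive bin directory is added again (the PATH echoed in the logs later in this post lists /usr/apache-hive-2.1.0-bin/bin three times). This is harmless, but a guard keeps PATH tidy. The following is a minimal sketch; `path_append` is a hypothetical helper name, not something Hive or Hadoop provides.

```shell
# Sketch: add a directory to PATH only when it is not already present.
# path_append is a hypothetical helper name chosen for this example.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already on PATH, do nothing
    *) PATH="$PATH:$1" ;;     # append exactly once
  esac
}

export HIVE_HOME=/usr/apache-hive-2.1.0-bin
path_append "$HIVE_HOME/bin"
path_append "$HIVE_HOME/bin"  # a second call is a no-op
export PATH
```

With this in place of the plain `export PATH=...` line, sourcing the profile repeatedly leaves a single Hive entry on PATH.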

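# Apply the changes

source /etc/profile

Create and edit the configuration file

# Enter the conf directory

cd $HIVE_HOME/conf

# Create hive-site.xml from the template

cp hive-default.xml.template hive-site.xml

# Edit the parameters

Replace every occurrence of ${system:java.io.tmpdir} with a directory of your choice (I used /usr/hive/tmp).

Replace every occurrence of ${system:user.name} with your own user name (I simply used xingoo).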
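The two placeholders appear in hive-site.xml as ${system:java.io.tmpdir} and ${system:user.name}, which is the spelling shown in the error messages later in this post. Editing each occurrence by hand is tedious, so here is one way to script the substitution. It is a sketch under assumptions: `replace_placeholders` is a hypothetical helper, and the demo runs against a throwaway file rather than the real $HIVE_HOME/conf/hive-site.xml.

```shell
# Sketch: substitute the two placeholders in a hive-site.xml-style file.
# replace_placeholders is a hypothetical helper; its arguments are
#   $1 = file to edit, $2 = temp directory, $3 = user name.
replace_placeholders() {
  # '|' is the sed delimiter because the directory contains slashes.
  sed -e 's|\${system:java\.io\.tmpdir}|'"$2"'|g' \
      -e 's|\${system:user\.name}|'"$3"'|g' \
      "$1" > "$1.new" && mv "$1.new" "$1"
}

# Demo on a throwaway file with one stand-in line.
demo=$(mktemp)
printf '%s\n' '<value>${system:java.io.tmpdir}/${system:user.name}</value>' > "$demo"
replace_placeholders "$demo" /usr/hive/tmp xingoo
cat "$demo"
```

To apply it for real, pass the path of hive-site.xml as the first argument, ideally after making a backup copy. The chosen directory probably also has to exist, for example via `mkdir -p /usr/hive/tmp`.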

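Initialize the schema

Hive stores all of its metadata in a relational database, so the database tables have to be initialized first. I used the default Derby database here, so the database settings can stay at their defaults.

# Enter the bin directory

cd $HIVE_HOME/bin

# Initialize the schema; if you use MySQL, pass mysql instead of derby

./schematool -initSchema -dbType derby

Note that this step may fail with an error: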

[root@localhost bin]# ./schematool -initSchema -dbType derby createDatabaseIfNotExist=true

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.derby.sql

Error: FUNCTION 'NUCLEUS_ASCII' already exists. (state=X0Y68,code=30000)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

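*** schemaTool failed ***

When this happens, edit the Derby initialization script.

# Enter the script directory

cd $HIVE_HOME/scripts/metastore/upgrade/derby

# Edit hive-schema-2.1.0.derby.sql, the script named in the error output, and comment out the two CREATE FUNCTION statements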

-- ----------------------------------------------

-- DDL Statements for functions

-- ----------------------------------------------

-- CREATE FUNCTION "APP"."NUCLEUS_ASCII" (C CHAR(1)) RETURNS INTEGER LANGUAGE JAVA PARAMETER STYLE JAVA READS SQL DATA CALLED ON NULL INPUT EXTERNAL NAME 'org.datanucleus.store.rdbms.adapter.DerbySQLFunction.ascii' ;

-- CREATE FUNCTION "APP"."NUCLEUS_MATCHES" (TEXT VARCHAR(8000),PATTERN VARCHAR(8000)) RETURNS INTEGER LANGUAGE JAVA PARAMETER STYLE JAVA READS SQL DATA CALLED ON NULL INPUT EXTERNAL NAME 'org.datanucleus.store.rdbms.adapter.DerbySQLFunction.matches' ;
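Instead of opening the script in an editor, the same two statements can be commented out with sed. This is only a sketch: `comment_out_nucleus_functions` is a hypothetical helper, and the demo uses a throwaway file with shortened stand-in statements rather than the real hive-schema-2.1.0.derby.sql.

```shell
# Sketch: prefix the two CREATE FUNCTION statements with "-- ".
# comment_out_nucleus_functions is a hypothetical helper; $1 is the SQL file.
comment_out_nucleus_functions() {
  sed -e '/^CREATE FUNCTION "APP"\."NUCLEUS_ASCII"/s/^/-- /' \
      -e '/^CREATE FUNCTION "APP"\."NUCLEUS_MATCHES"/s/^/-- /' \
      "$1" > "$1.new" && mv "$1.new" "$1"
}

# Demo on a throwaway file; the statements are shortened stand-ins.
demo_sql=$(mktemp)
cat > "$demo_sql" <<'EOF'
CREATE FUNCTION "APP"."NUCLEUS_ASCII" (C CHAR(1)) RETURNS INTEGER ;
CREATE FUNCTION "APP"."NUCLEUS_MATCHES" (TEXT VARCHAR(8000)) RETURNS INTEGER ;
CREATE TABLE "APP"."DBS" ("DB_ID" BIGINT NOT NULL) ;
EOF
comment_out_nucleus_functions "$demo_sql"
cat "$demo_sql"
```

Because the patterns are anchored at the start of the line, running the helper a second time leaves already-commented lines alone. Keeping a copy of the original script before editing is a sensible precaution.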

Run the initialization again and it succeeds:

[root@localhost bin]# vim ../scripts/metastore/upgrade/derby/hive-schema-2.1.0.derby.sql

[root@localhost bin]# ./schematool -initSchema -dbType derby createDatabaseIfNotExist=true

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.derby.sql

Initialization script completed

schemaTool completed

Start the Hive CLI to test

[root@localhost bin]# hive

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/apache-hive-2.1.0-bin/lib/hive-common-2.1.0.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

default

Time taken: 1.931 seconds, Fetched: 1 row(s)

At this point, Hive is installed!

Pitfalls I ran into

Metastore database not initialized (schema not defined)

[root@localhost bin]# hive

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/apache-hive-2.1.0-bin/lib/hive-common-2.1.0.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql))

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:578)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql))

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:226)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:545)

... 9 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: MetaException(message:Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql))

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3593)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

... 14 more

Caused by: MetaException(message:Hive metastore database is not initialized. Please use schematool (e.g. ./schematool -initSchema -dbType ...) to create the schema. If needed, don't forget to include the option to auto-create the underlying database in your JDBC connection string (e.g. ?createDatabaseIfNotExist=true for mysql))

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3364)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3336)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3590)

... 16 more

Solution:

Run $HIVE_HOME/bin/schematool -initSchema -dbType derby
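One related detail: the connection URL in the logs, jdbc:derby:;databaseName=metastore_db;create=true, uses a relative database name, and embedded Derby resolves it against the working directory of the process by default. The "not initialized" error can therefore come back if hive is started from a different directory than the one where schematool was run. The check below is a small sketch of how to see whether the current directory holds a metastore; `has_metastore_db` is a hypothetical helper name.

```shell
# Sketch: report whether a Derby metastore directory exists in a directory.
# has_metastore_db is a hypothetical helper; $1 defaults to the current directory.
has_metastore_db() {
  [ -d "${1:-.}/metastore_db" ]
}

if has_metastore_db "$PWD"; then
  echo "metastore_db found in $PWD"
else
  echo "no metastore_db in $PWD; schematool may need to be run from here"
fi
```

In this post both schematool and hive were run from $HIVE_HOME/bin, so the two commands used the same metastore_db directory.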

Problem in the Derby schema script

[root@localhost bin]# ./schematool -initSchema -dbType derby createDatabaseIfNotExist=true

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true

Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver

Metastore connection User: APP

Starting metastore schema initialization to 2.1.0

Initialization script hive-schema-2.1.0.derby.sql

Error: FUNCTION 'NUCLEUS_ASCII' already exists. (state=X0Y68,code=30000)

org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

Underlying cause: java.io.IOException : Schema script failed, errorcode 2

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

Solution:

Comment out the two CREATE FUNCTION statements in the SQL script.

${system:java.io.tmpdir} in the configuration file is not resolved

[root@localhost bin]# hive

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/apache-hive-2.1.0-bin/lib/hive-common-2.1.0.jar!/hive-log4j2.properties Async: true

Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at org.apache.hadoop.fs.Path.initialize(Path.java:206)

at org.apache.hadoop.fs.Path.<init>(Path.java:172)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:631)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:550)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:518)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:483)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

at java.net.URI.checkPath(URI.java:1823)

at java.net.URI.<init>(URI.java:745)

at org.apache.hadoop.fs.Path.initialize(Path.java:203)

... 12 more

Solution:

Replace the ${system:java.io.tmpdir} placeholders in hive-site.xml.

${system:user.name} in hive-site.xml is not resolved

[root@localhost bin]# hive

which: no hbase in (/usr/lib64/qt-3.3/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0/bin:/root/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/apache-hive-2.1.0-bin/bin:/usr/java/jdk1.8.0/bin:/usr/hadoop/hadoop-2.6.4/bin:/usr/apache-hive-2.1.0-bin/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/apache-hive-2.1.0-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/hadoop/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/usr/apache-hive-2.1.0-bin/lib/hive-common-2.1.0.jar!/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive> show databases;

OK

Failed with exception java.io.IOException:java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:user.name%7D

Time taken: 1.781 seconds

Solution:

Replace the ${system:user.name} placeholders in hive-site.xml.
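Both of the last two errors come from placeholders left behind in hive-site.xml, so a quick check before starting hive is to count the lines that still contain one. This is a sketch: `count_placeholders` is a hypothetical helper, and the demo file stands in for the real configuration file.

```shell
# Sketch: count lines that still contain a ${system:...} placeholder.
# count_placeholders is a hypothetical helper; $1 is the file to check.
count_placeholders() {
  # -F treats the pattern as a fixed string; "|| true" keeps the exit
  # status at zero when grep finds no matching lines.
  grep -c -F '${system:' "$1" || true
}

# Demo on a throwaway file: one line unresolved, one already replaced.
demo_conf=$(mktemp)
printf '%s\n' \
  '<value>${system:java.io.tmpdir}/${system:user.name}</value>' \
  '<value>/usr/hive/tmp/xingoo</value>' > "$demo_conf"
count_placeholders "$demo_conf"
```

A result of 0 for $HIVE_HOME/conf/hive-site.xml suggests that every placeholder of this form has been replaced.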
