
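Once your application is dockerized, you run into a number of new problems. Logging to local files, for example, stops being convenient: you can mount the log directory from the host, but as soon as you use --scale the logs become unreliable, because the scaled replicas all write to the same mounted path. Centralized logging is the better approach. A second problem is troubleshooting. When a request used to fail inside a single process, you could find the call stack in that process's log. After dockerizing, what used to live in one process is split into several microservices, so you really want distributed call-chain tracing, similar to the SvcTraceViewer tool in WCF.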
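To make the idea of a distributed call chain concrete, here is a minimal Python sketch. It is not SkyWalking's actual protocol, only an illustration of the principle: each service reuses the trace ID it receives and reports a span, so spans from different services can be stitched together afterwards. The header name `X-Trace-Id`, the `order` and `stock` services, and the in-memory `collected_spans` list are all hypothetical.

```python
import uuid

# Hypothetical header name; real tracers (SkyWalking included) define their own formats.
TRACE_HEADER = "X-Trace-Id"

# Stand-in for a central collector: every service appends its spans here.
collected_spans = []


def handle_request(service_name, headers, downstream=None):
    """Record a span for this service and pass the trace ID to the next hop."""
    # Reuse the caller's trace ID if present, otherwise start a new trace.
    trace_id = headers.get(TRACE_HEADER) or uuid.uuid4().hex
    collected_spans.append({"trace_id": trace_id, "service": service_name})
    if downstream is not None:
        # Propagate the same trace ID to the next service in the chain.
        downstream({TRACE_HEADER: trace_id})
    return trace_id


def stock_service(headers):
    return handle_request("stock", headers)


def order_service(headers):
    return handle_request("order", headers, downstream=stock_service)


if __name__ == "__main__":
    trace_id = order_service({})
    # All spans that share the trace ID form one call chain.
    chain = [s["service"] for s in collected_spans if s["trace_id"] == trace_id]
    print(trace_id, chain)
```

Because every span carries the same trace ID, a collector can rebuild the whole request path even though the work happened in separate containers.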

I: Setting up SkyWalking

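The GitHub repository is https://github.com/apache/incubator-skywalking. Judging from the documentation, the system has roughly three parts: storage, the collector, and the probes. For storage I use the recommended Elasticsearch. The collector is deployed alongside ES, and the probes have a separate implementation for each language. In total there are three docker containers: es, kibana, and skywalking, which would be tedious to manage without a container orchestration tool.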

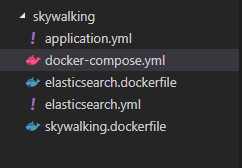

The directory structure for this setup is as follows:

1. elasticsearch.yml

The ES configuration file. There is one pitfall here: you must set network.publish_host: 0.0.0.0, otherwise SkyWalking cannot connect to port 9300.

    network.publish_host: 0.0.0.0
    transport.tcp.port: 9300
    network.host: 0.0.0.0
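To confirm that the transport port is actually reachable, a plain TCP connection test is enough. The following is a small sketch; the helper name `port_open` is my own, and the host name `elasticsearch` in the usage note assumes the link name used in the compose file later in this post.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False
```

Since the compose file does not publish 9300 to the host, the check has to run from a container on the same network, for example `port_open("elasticsearch", 9300)`. It only proves the port accepts connections, not that the cluster settings are correct.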

2. elasticsearch.dockerfile

When the image is built during up, this ES file is copied into the container's config folder.

    FROM elasticsearch:5.6.4

    EXPOSE 9200 9300

    COPY elasticsearch.yml /usr/share/elasticsearch/config/

3. application.yml

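The SkyWalking configuration file. There is a pitfall here as well: in the ES connection settings, clusterName must be changed to match the cluster name of ES, otherwise the connection fails. The containers are connected with link, so the ES address becomes elasticsearch, and the other IPs are changed to 0.0.0.0.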

    # Licensed to the Apache Software Foundation (ASF) under one
    # or more contributor license agreements.  See the NOTICE file
    # distributed with this work for additional information
    # regarding copyright ownership.  The ASF licenses this file
    # to you under the Apache License, Version 2.0 (the
    # "License"); you may not use this file except in compliance
    # with the License.  You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    #cluster:
    #  zookeeper:
    #    hostPort: localhost:2181
    #    sessionTimeout: 100000
    naming:
      jetty:
        host: 0.0.0.0
        port: 10800
        contextPath: /
    cache:
    #  guava:
      caffeine:
    remote:
      gRPC:
        host: 0.0.0.0
        port: 11800
    agent_gRPC:
      gRPC:
        host: 0.0.0.0
        port: 11800
        #Set these two setting to open ssl
        #sslCertChainFile: $path
        #sslPrivateKeyFile: $path

        #Set your own token to active auth
        #authentication: xxxxxx
    agent_jetty:
      jetty:
        host: 0.0.0.0
        port: 12800
        contextPath: /
    analysis_register:
      default:
    analysis_jvm:
      default:
    analysis_segment_parser:
      default:
        bufferFilePath: ../buffer/
        bufferOffsetMaxFileSize: 10M
        bufferSegmentMaxFileSize: 500M
        bufferFileCleanWhenRestart: true
    ui:
      jetty:
        host: 0.0.0.0
        port: 12800
        contextPath: /
    storage:
      elasticsearch:
        clusterName: elasticsearch
        clusterTransportSniffer: true
        clusterNodes: elasticsearch:9300
        indexShardsNumber: 2
        indexReplicasNumber: 0
        highPerformanceMode: true
        ttl: 7
    #storage:
    #  h2:
    #    url: jdbc:h2:~/memorydb
    #    userName: sa
    configuration:
      default:
    #     namespace: xxxxx
    # alarm threshold
        applicationApdexThreshold: 2000
        serviceErrorRateThreshold: 10.00
        serviceAverageResponseTimeThreshold: 2000
        instanceErrorRateThreshold: 10.00
        instanceAverageResponseTimeThreshold: 2000
        applicationErrorRateThreshold: 10.00
        applicationAverageResponseTimeThreshold: 2000
    # thermodynamic
        thermodynamicResponseTimeStep: 50
        thermodynamicCountOfResponseTimeSteps: 40
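Since a mismatched cluster name is easy to miss, it can help to compare the two values mechanically. The sketch below is one way to do that in Python, under a few assumptions: the function names are my own, the YAML is scanned with a simple regular expression instead of a real YAML parser, and the JSON is the body Elasticsearch serves at its root URL (which includes a `cluster_name` field).

```python
import json
import re


def yaml_cluster_name(application_yml_text):
    """Return the first uncommented clusterName value, or None if absent."""
    match = re.search(
        r"^[ \t]*clusterName:[ \t]*(\S+)", application_yml_text, re.MULTILINE
    )
    if match is None:
        return None
    # Drop optional quotes around the value.
    return match.group(1).strip("'\"")


def es_cluster_name(root_response_json):
    """Return cluster_name from the JSON body served at the ES root URL."""
    return json.loads(root_response_json).get("cluster_name")


def cluster_names_match(application_yml_text, root_response_json):
    """True only if application.yml names the same cluster that ES reports."""
    configured = yaml_cluster_name(application_yml_text)
    return configured is not None and configured == es_cluster_name(root_response_json)


if __name__ == "__main__":
    # Sample inputs; in practice read application.yml from disk and fetch
    # the JSON from port 9200 of the ES container.
    sample_yml = "storage:\n  elasticsearch:\n    clusterName: elasticsearch\n"
    sample_json = '{"name": "FC_bOh1", "cluster_name": "elasticsearch"}'
    print(cluster_names_match(sample_yml, sample_json))
```

A regular expression is used only to keep the example free of third-party dependencies; a proper YAML parser would be more robust.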

4. skywalking.dockerfile

Next comes downloading and installing SkyWalking, scripted as a Dockerfile.

    FROM centos:7

    LABEL username="[email protected]"

    WORKDIR /app

    RUN yum install -y wget && \
        yum install -y java-1.8.0-openjdk

    ADD http://mirrors.hust.edu.cn/apache/incubator/skywalking/5.0.0-RC2/apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz /app

    RUN tar -xf apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz && \
        mv apache-skywalking-apm-incubating skywalking

    RUN ls /app

    # Copy the configuration file
    COPY application.yml /app/skywalking/config/application.yml

    WORKDIR /app/skywalking/bin

    USER root

    # Append a blocking command so the container keeps running after startup.sh finishes
    RUN echo "tail -f /dev/null" >> /app/skywalking/bin/startup.sh

    CMD ["/bin/sh","-c","/app/skywalking/bin/startup.sh" ]

5. docker-compose.yml

Finally, the three containers are orchestrated together. Note that because the collector stores its data in ES, you must mount the ES data directory onto a large disk on the host, otherwise you will run out of space.

    version: '3.1'

    services:

      # Elasticsearch image
      elasticsearch:
        build:
          context: .
          dockerfile: elasticsearch.dockerfile
        # ports:
        #   - "9200:9200"
        #   - "9300:9300"
        volumes:
          - "/data/es2:/usr/share/elasticsearch/data"

      # Kibana for visual queries, exposes 5601
      kibana:
        image: kibana
        links:
          - elasticsearch
        ports:
          - 5601:5601
        depends_on:
          - "elasticsearch"

      # SkyWalking
      skywalking:
        build:
          context: .
          dockerfile: skywalking.dockerfile
        ports:
          - "10800:10800"
          - "11800:11800"
          - "12800:12800"
          - "8080:8080"
        links:
          - elasticsearch
        depends_on:
          - "elasticsearch"
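Keep in mind that depends_on only controls the start order; it does not wait until Elasticsearch is actually ready to accept connections. If you script anything against this stack, a small retry loop is a common workaround. The following Python sketch shows the idea; `wait_until` and `es_http_ready` are hypothetical helper names, and the URL assumes the check runs from a container on the compose network, because port 9200 is not published to the host here.

```python
import time
import urllib.request


def wait_until(check, attempts=30, delay=2.0, sleep=time.sleep):
    """Call check() until it returns True.

    Returns the number of the successful attempt, or None if every
    attempt failed.
    """
    for attempt in range(1, attempts + 1):
        if check():
            return attempt
        if attempt < attempts:
            sleep(delay)
    return None


def es_http_ready(url="http://elasticsearch:9200/", timeout=3.0):
    """Return True once the ES HTTP endpoint answers with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, refused connections and timeouts are all OSError subclasses.
        return False
```

Calling `wait_until(es_http_ready)` blocks for up to about a minute with the default settings. The `sleep` parameter exists only so the delay can be replaced when testing.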

II: One-step deployment

To deploy in docker you also need docker-ce and docker-compose installed; you can follow the official installation guides.

1. Installing docker-ce

    sudo yum remove docker \
                    docker-client \
                    docker-client-latest \
                    docker-common \
                    docker-latest \
                    docker-latest-logrotate \
                    docker-logrotate \
                    docker-selinux \
                    docker-engine-selinux \
                    docker-engine

    sudo yum install -y yum-utils \
      device-mapper-persistent-data \
      lvm2

    sudo yum-config-manager \
        --add-repo \
        https://download.docker.com/linux/centos/docker-ce.repo

    sudo yum install docker-ce

Then start the docker service; the version shown is 18.06.1.

    [root@localhost ~]# service docker start
    Redirecting to /bin/systemctl start docker.service
    [root@localhost ~]# docker -v
    Docker version 18.06.1-ce, build e68fc7a

2. Installing docker-compose

    sudo curl -L "https://github.com/docker/compose/releases/download/1.22.0/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    sudo chmod +x /usr/local/bin/docker-compose

3. Finally, run docker-compose up --build on CentOS. If you do not want it to occupy the terminal, add -d to run it in the background.

    [root@localhost docker]# docker-compose up --build
    Creating network "docker_default" with the default driver
    Building elasticsearch
    Step 1/3 : FROM elasticsearch:5.6.4
     ---> 7a047c21aa48
    Step 2/3 : EXPOSE 9200 9300
     ---> Using cache
     ---> 8d66bb57b09d
    Step 3/3 : COPY elasticsearch.yml /usr/share/elasticsearch/config/
     ---> Using cache
     ---> 02b516c03b95
    Successfully built 02b516c03b95
    Successfully tagged docker_elasticsearch:latest
    Building skywalking
    Step 1/12 : FROM centos:7
     ---> 5182e96772bf
    Step 2/12 : LABEL username="[email protected]"
     ---> Using cache
     ---> b95b96a92042
    Step 3/12 : WORKDIR /app
     ---> Using cache
     ---> afdf4efe3426
    Step 4/12 : RUN yum install -y wget && yum install -y java-1.8.0-openjdk
     ---> Using cache
     ---> 46be0ca0f7b5
    Step 5/12 : ADD http://mirrors.hust.edu.cn/apache/incubator/skywalking/5.0.0-RC2/apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz /app
     ---> Using cache
     ---> d5c30bcfd5ea
    Step 6/12 : RUN tar -xf apache-skywalking-apm-incubating-5.0.0-RC2.tar.gz && mv apache-skywalking-apm-incubating skywalking
     ---> Using cache
     ---> 1438d08d18fa
    Step 7/12 : RUN ls /app
     ---> Using cache
     ---> b594124672ea
    Step 8/12 : COPY application.yml /app/skywalking/config/application.yml
     ---> Using cache
     ---> 10eaf0805a65
    Step 9/12 : WORKDIR /app/skywalking/bin
     ---> Using cache
     ---> bc0f02291536
    Step 10/12 : USER root
     ---> Using cache
     ---> 4498afca5fe6
    Step 11/12 : RUN echo "tail -f /dev/null" >> /app/skywalking/bin/startup.sh
     ---> Using cache
     ---> 1c4be7c6b32a
    Step 12/12 : CMD ["/bin/sh","-c","/app/skywalking/bin/startup.sh" ]
     ---> Using cache
     ---> ecfc97e4c97d
    Successfully built ecfc97e4c97d
    Successfully tagged docker_skywalking:latest
    Creating docker_elasticsearch_1 ... done
    Creating docker_skywalking_1 ... done
    Creating docker_kibana_1 ... done
    Attaching to docker_elasticsearch_1, docker_kibana_1, docker_skywalking_1
    elasticsearch_1 | [2018-09-17T23:51:47,611][INFO ][o.e.n.Node ] [] initializing ...
    elasticsearch_1 | [2018-09-17T23:51:47,729][INFO ][o.e.e.NodeEnvironment ] [FC_bOh1] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/sda3)]], net usable_space [5gb], net total_space [22.1gb], spins? [possibly], types [xfs]
    elasticsearch_1 | [2018-09-17T23:51:47,730][INFO ][o.e.e.NodeEnvironment ] [FC_bOh1] heap size [1.9gb], compressed ordinary object pointers [true]
    elasticsearch_1 | [2018-09-17T23:51:47,731][INFO ][o.e.n.Node ] node name [FC_bOh1] derived from node ID [FC_bOh1nS_uW6JKy_46iBg]; set [node.name] to override
    elasticsearch_1 | [2018-09-17T23:51:47,732][INFO ][o.e.n.Node ] version[5.6.4], pid[1], build[8bbedf5/2017-10-31T18:55:38.105Z], OS[Linux/3.10.0-327.el7.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_151/25.151-b12]
    elasticsearch_1 | [2018-09-17T23:51:47,732][INFO ][o.e.n.Node ] JVM arguments [-Xms2g, -Xmx2g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -Djdk.io.permissionsUseCanonicalPath=true, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Dlog4j.skipJansi=true, -XX:+HeapDumpOnOutOfMemoryError, -Des.path.home=/usr/share/elasticsearch]
    skywalking_1 | SkyWalking Collector started successfully!
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [aggs-matrix-stats]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [ingest-common]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-expression]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-groovy]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-mustache]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [lang-painless]
    elasticsearch_1 | [2018-09-17T23:51:49,067][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [parent-join]
    elasticsearch_1 | [2018-09-17T23:51:49,068][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [percolator]
    elasticsearch_1 | [2018-09-17T23:51:49,068][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [reindex]
    elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [transport-netty3]
    elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] loaded module [transport-netty4]
    elasticsearch_1 | [2018-09-17T23:51:49,069][INFO ][o.e.p.PluginsService ] [FC_bOh1] no plugins loaded
    skywalking_1 | SkyWalking Web Application started successfully!
    elasticsearch_1 | [2018-09-17T23:51:51,950][INFO ][o.e.d.DiscoveryModule ] [FC_bOh1] using discovery type [zen]
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}
    elasticsearch_1 | [2018-09-17T23:51:53,456][INFO ][o.e.n.Node ] initialized
    elasticsearch_1 | [2018-09-17T23:51:53,457][INFO ][o.e.n.Node ] [FC_bOh1] starting ...
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","info"],"pid":12,"state":"yellow","message":"Status changed from uninitialized to yellow - Waiting for Elasticsearch","prevState":"uninitialized","prevMsg":"uninitialized"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["error","elasticsearch","admin"],"pid":12,"message":"Request error, retrying\nHEAD http://elasticsearch:9200/ => connect ECONNREFUSED 172.21.0.2:9200"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"Unable to revive connection: http://elasticsearch:9200/"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"No living connections"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","error"],"pid":12,"state":"red","message":"Status changed from yellow to red - Unable to connect to Elasticsearch at http://elasticsearch:9200.","prevState":"yellow","prevMsg":"Waiting for Elasticsearch"}
    elasticsearch_1 | [2018-09-17T23:51:53,829][INFO ][o.e.t.TransportService ] [FC_bOh1] publish_address {172.21.0.2:9300}, bound_addresses {0.0.0.0:9300}
    elasticsearch_1 | [2018-09-17T23:51:53,870][INFO ][o.e.b.BootstrapChecks ] [FC_bOh1] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","plugin:[email protected]","info"],"pid":12,"state":"green","message":"Status changed from uninitialized to green - Ready","prevState":"uninitialized","prevMsg":"uninitialized"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["listening","info"],"pid":12,"message":"Server running at http://0.0.0.0:5601"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:53Z","tags":["status","ui settings","error"],"pid":12,"state":"red","message":"Status changed from uninitialized to red - Elasticsearch plugin is red","prevState":"uninitialized","prevMsg":"uninitialized"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:56Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"Unable to revive connection: http://elasticsearch:9200/"}
    kibana_1 | {"type":"log","@timestamp":"2018-09-17T23:51:56Z","tags":["warning","elasticsearch","admin"],"pid":12,"message":"No living connections"}
    elasticsearch_1 | [2018-09-17T23:51:57,094][INFO ][o.e.c.s.ClusterService ] [FC_bOh1] new_master {FC_bOh1}{FC_bOh1nS_uW6JKy_46iBg}{tNMEW5HYQm6O4aiqpU0uWA}{172.21.0.2}{172.21.0.2:9300}, reason: zen-disco-elected-as-master ([0] nodes joined)
    elasticsearch_1 | [2018-09-17T23:51:57,129][INFO ][o.e.h.n.Netty4HttpServerTransport] [FC_bOh1] publish_address {172.21.0.2:9200}, bound_addresses {0.0.0.0:9200}
    elasticsearch_1 | [2018-09-17T23:51:57,129][INFO ][o.e.n.Node ] [FC_bOh1] started
    elasticsearch_1 | [2018-09-17T23:51:57,157][INFO ][o.e.g.GatewayService ] [FC_bOh1] recovered [0] indices into cluster_state
    elasticsearch_1 | [2018-09-17T23:51:57,368][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_alarm_list_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
    elasticsearch_1 | [2018-09-17T23:51:57,557][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_alarm_list_month][0]] ...]).
    elasticsearch_1 | [2018-09-17T23:51:57,685][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [memory_pool_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
    elasticsearch_1 | [2018-09-17T23:51:57,742][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[memory_pool_metric_month][0]] ...]).
    elasticsearch_1 | [2018-09-17T23:51:57,886][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [service_metric_day] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
    elasticsearch_1 | [2018-09-17T23:51:57,962][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[service_metric_day][0]] ...]).
    elasticsearch_1 | [2018-09-17T23:51:58,115][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_hour] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
    elasticsearch_1 | [2018-09-17T23:51:58,176][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_hour][1]] ...]).
    elasticsearch_1 | [2018-09-17T23:51:58,356][INFO ][o.e.c.m.MetaDataCreateIndexService] [FC_bOh1] [application_metric_month] creating index, cause [api], templates [], shards [2]/[0], mappings [type]
    elasticsearch_1 | [2018-09-17T23:51:58,437][INFO ][o.e.c.r.a.AllocationService] [FC_bOh1] Cluster health status changed from [YELLOW] to [GREEN] (reason: [shards started [[application_metric_month][0]] ...]).
    elasticsearch_1